A Survey of Defenses against AI-generated Visual Media: Detection, Disruption, and Authentication

Jul 15, 2024

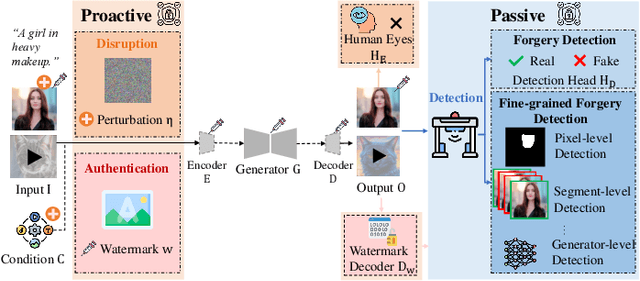

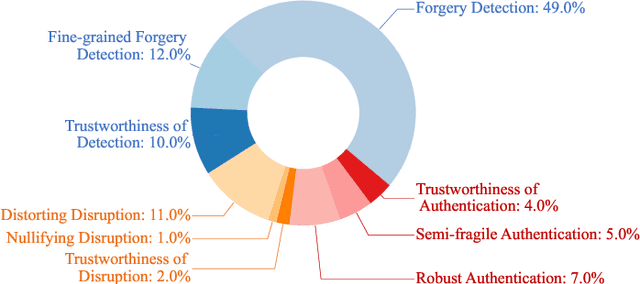

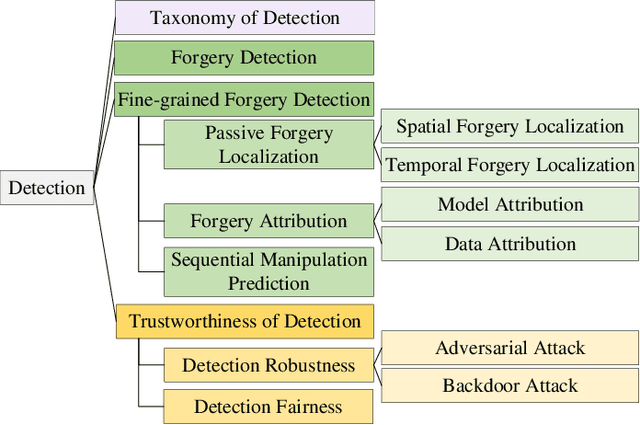

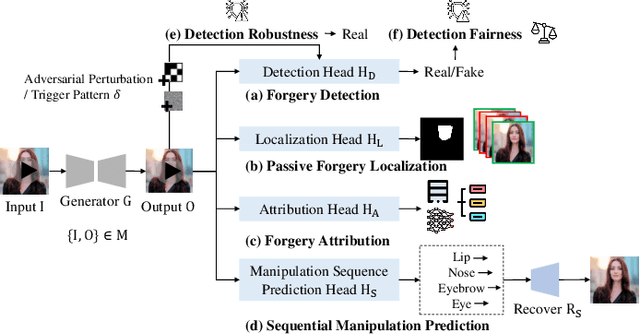

Deep generative models have demonstrated impressive performance in various computer vision applications, including image synthesis, video generation, and medical analysis. Despite their significant advancements, these models may be used for malicious purposes, such as misinformation, deception, and copyright violation. In this paper, we provide a systematic and timely review of research efforts on defenses against AI-generated visual media, covering detection, disruption, and authentication. We review existing methods and summarize the mainstream defense-related tasks within a unified passive and proactive framework. Moreover, we survey the derivative tasks concerning the trustworthiness of defenses, such as their robustness and fairness. For each task, we formulate its general pipeline and propose a taxonomy based on methodological strategies that are uniformly applicable to the primary subtasks. Additionally, we summarize the commonly used evaluation datasets, criteria, and metrics. Finally, by analyzing the reviewed studies, we provide insights into current research challenges and suggest possible directions for future research.
