A Deep Structured Learning Approach Towards Automating Connectome Reconstruction from 3D Electron Micrographs

Sep 24, 2017

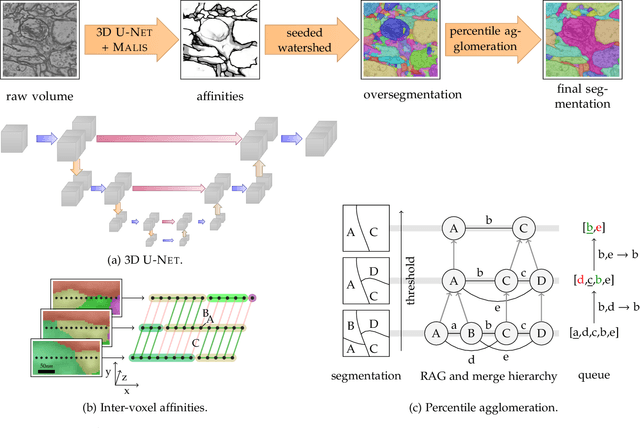

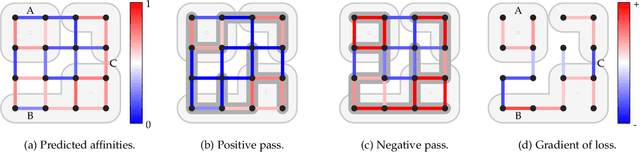

We present a deep structured learning method for neuron segmentation from 3D electron microscopy (EM) that significantly improves on the state of the art in both accuracy and scalability. Our method consists of a 3D U-Net classifier that predicts affinity graphs on voxels, followed by iterative region agglomeration. We train the U-Net using a new structured loss based on MALIS that encourages topological correctness. Our extension of MALIS has two parts. First, we introduce an $O(n\log(n))$ method to compute the loss gradient, improving over the originally proposed $O(n^2)$ algorithm. Second, we compute the gradient in two separate passes to avoid spurious contributions in early training stages. Our affinity predictions are accurate enough that simple agglomeration outperforms the more involved methods previously applied to inferior predictions. We present results on three datasets (CREMI, FIB, and SegEM) that cover different imaging techniques and animals, and achieve improvements over previous results of 27%, 15%, and 250%, respectively. Our findings suggest that a single 3D segmentation strategy can be applied to both isotropic and anisotropic EM data. The runtime of our method scales linearly, $O(n)$, with the size of the volume and achieves a throughput of about 2.6 seconds per megavoxel, allowing processing of very large datasets.
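
The idea behind an $O(n\log(n))$ MALIS-style computation can be illustrated with a small sketch. It is not the authors' implementation. It assumes the standard maximin-edge observation: if edges are processed in order of decreasing affinity, Kruskal-style with a union-find structure, then the edge that first joins two components is the maximin edge for every pair of nodes split across them. The function names `maximin_pair_counts` and `malis_style_loss`, the generic edge-list graph, and the squared-error form of the loss are all assumptions made for illustration. The two-pass gradient computation mentioned in the abstract is not shown.

```python
from collections import Counter


def maximin_pair_counts(num_nodes, edges, labels):
    """Count, for each maximum-spanning-tree edge, the node pairs it decides.

    edges  : list of (u, v, affinity) tuples (hypothetical graph format)
    labels : ground-truth segment id for each node
    Returns {edge_index: (n_pos, n_neg)}, where n_pos / n_neg are the numbers
    of same-label / different-label pairs whose maximin edge is that edge.
    """
    parent = list(range(num_nodes))
    size = [1] * num_nodes
    # Per-component histogram of ground-truth labels.
    hist = [Counter({labels[i]: 1}) for i in range(num_nodes)]

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    counts = {}
    # Sorting the edges is the O(n log n) step.
    order = sorted(range(len(edges)), key=lambda i: edges[i][2], reverse=True)
    for i in order:
        u, v, _ = edges[i]
        ru, rv = find(u), find(v)
        if ru == rv:
            continue  # edge closes a cycle, so it is not a maximin edge
        if len(hist[ru]) < len(hist[rv]):
            ru, rv = rv, ru  # iterate over the smaller histogram
        n_pos = sum(c * hist[ru][lab] for lab, c in hist[rv].items())
        n_neg = size[ru] * size[rv] - n_pos
        counts[i] = (n_pos, n_neg)
        # Merge component rv into ru.
        hist[ru].update(hist[rv])
        hist[rv] = None
        size[ru] += size[rv]
        parent[rv] = ru
    return counts


def malis_style_loss(edges, counts):
    """Pair-weighted squared error (an assumed loss form, for illustration)."""
    return sum(
        n_pos * (1.0 - edges[i][2]) ** 2 + n_neg * edges[i][2] ** 2
        for i, (n_pos, n_neg) in counts.items()
    )


# Toy example: a 4-node chain whose two halves belong to different segments.
toy_edges = [(0, 1, 0.9), (1, 2, 0.4), (2, 3, 0.8)]
toy_labels = [1, 1, 2, 2]
toy_counts = maximin_pair_counts(4, toy_edges, toy_labels)
# toy_counts == {0: (1, 0), 2: (1, 0), 1: (0, 4)}
toy_loss = malis_style_loss(toy_edges, toy_counts)
```

In the toy example, the weak edge between nodes 1 and 2 is the maximin edge for all four cross-segment pairs, so it carries most of the loss. This is how a structured loss of this kind concentrates the training signal on the edges that determine topology.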
