A Benchmark Comparison of Imitation Learning-based Control Policies for Autonomous Racing

Sep 29, 2022

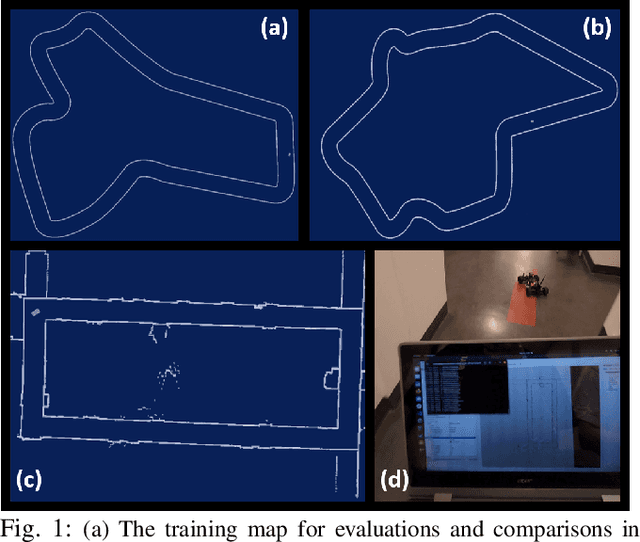

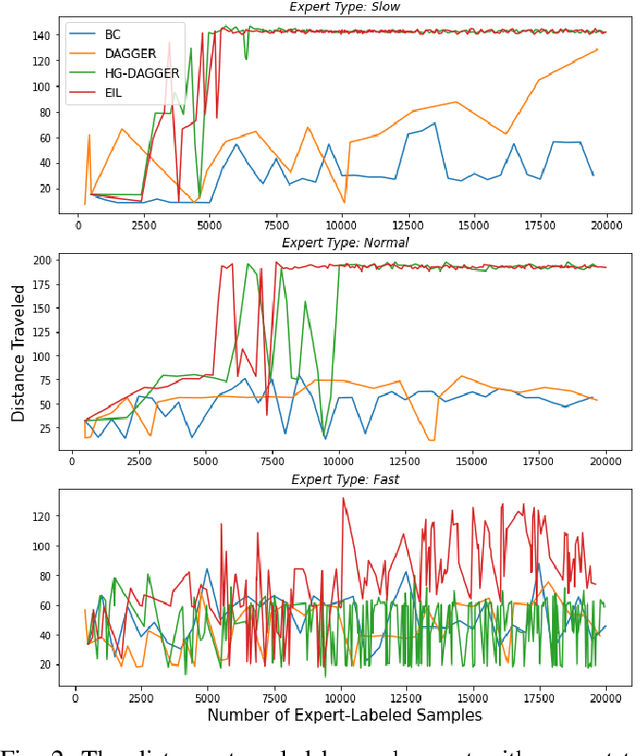

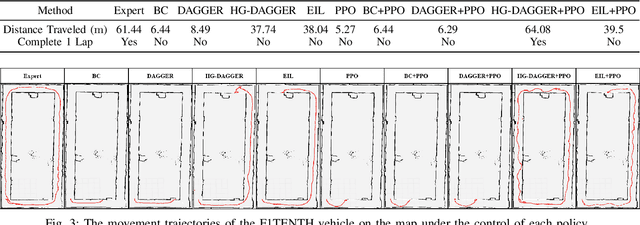

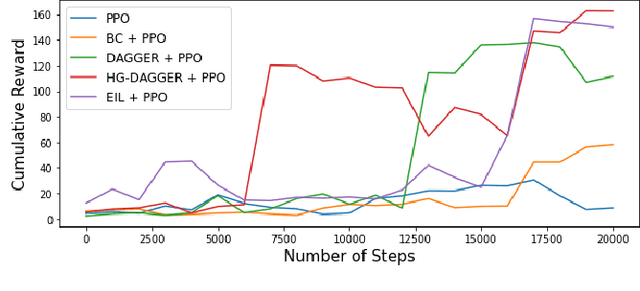

Autonomous racing with scaled race cars has gained increasing attention as an effective approach for developing perception, planning, and control algorithms for safe autonomous driving at the limits of the vehicle's handling. To train agile control policies for autonomous racing, learning-based approaches largely rely on reinforcement learning, albeit with mixed results. In this study, we benchmark a variety of imitation learning policies for racing vehicles, applied either directly or to bootstrap reinforcement learning, both in simulation and in scaled real-world environments. We show that interactive imitation learning techniques outperform traditional imitation learning methods and can greatly improve the performance of reinforcement learning policies through bootstrapping, owing to better sample efficiency. Our benchmarks provide a foundation for future research on autonomous racing using imitation learning and reinforcement learning.