2nd Place Solution for ICCV 2021 VIPriors Image Classification Challenge: An Attract-and-Repulse Learning Approach

Jun 13, 2022

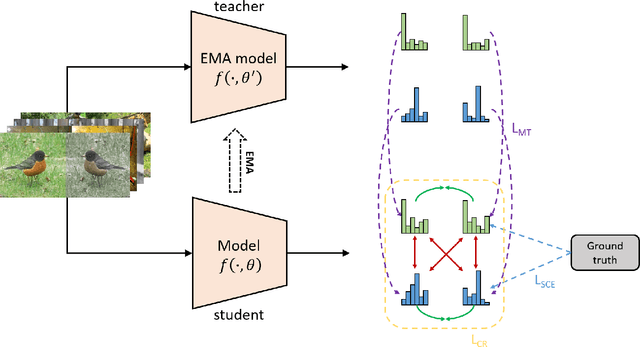

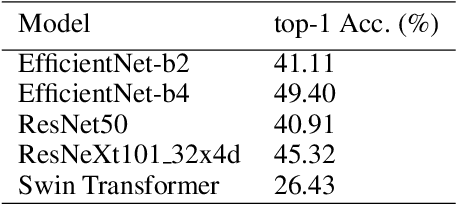

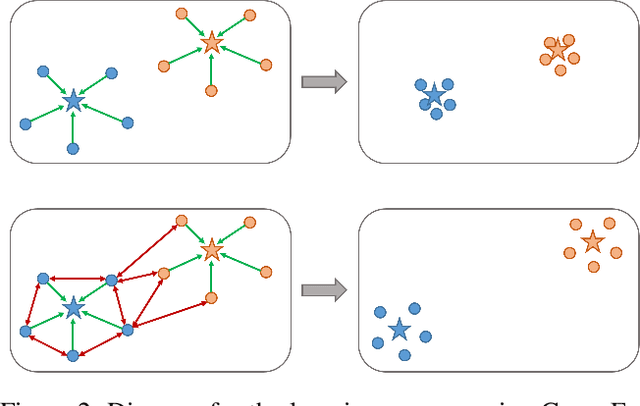

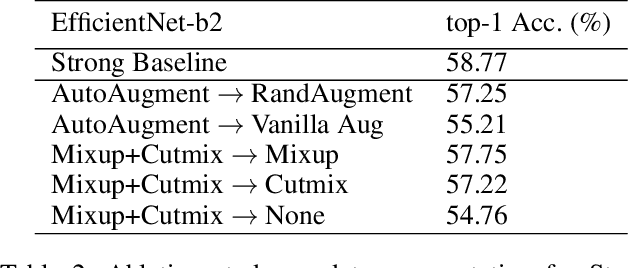

Convolutional neural networks (CNNs) have achieved significant success in image classification by leveraging large-scale datasets. However, learning from scratch on small-scale datasets efficiently and effectively remains a significant challenge. With limited training data, category concepts become ambiguous, since over-parameterized CNNs tend to simply memorize the dataset, leading to poor generalization. It is therefore crucial to study how to learn more discriminative representations while avoiding over-fitting. Because category concepts tend to be ambiguous, it is important to capture more instance-level information. We thus propose a new framework, termed Attract-and-Repulse, which consists of Contrastive Regularization (CR) to enrich the feature representations, Symmetric Cross Entropy (SCE) to balance the fitting across classes, and Mean Teacher to calibrate label information. Specifically, SCE and CR learn discriminative representations while alleviating over-fitting through an adaptive trade-off between class-level information (attract) and instance-level information (repulse). Mean Teacher is then used to further improve performance by producing more accurate soft pseudo labels. Extensive experiments validate the effectiveness of the Attract-and-Repulse framework. Together with other strategies, such as aggressive data augmentation, TenCrop inference, and model ensembling, we achieved second place in the ICCV 2021 VIPriors Image Classification Challenge.
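The abstract names its components without giving formulas, so the following is only a minimal sketch of two of them, assuming the commonly used formulation of symmetric cross entropy (standard cross entropy plus a reverse cross-entropy term in which log 0 is replaced by a negative constant) and the usual exponential-moving-average weight update of a Mean Teacher. The function names, the weights `alpha` and `beta`, the constant `log_zero`, and the `decay` value are illustrative assumptions, not values taken from the paper; Contrastive Regularization is not shown.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def symmetric_cross_entropy(logits, label, alpha=1.0, beta=1.0, log_zero=-4.0):
    """SCE for one sample with a hard label (hyperparameters are assumptions).

    loss = alpha * CE(label, probs) + beta * RCE(probs, label)
    """
    probs = softmax(logits)
    # Standard cross entropy: -log p(label), clamped to avoid log(0).
    ce = -math.log(max(probs[label], 1e-7))
    # Reverse cross entropy: -sum_k p_k * log(q_k) with q one-hot.
    # log(1) = 0 for the true class; log(0) is replaced by log_zero elsewhere.
    rce = -sum(p * log_zero for k, p in enumerate(probs) if k != label)
    return alpha * ce + beta * rce


def ema_update(teacher_weights, student_weights, decay=0.99):
    """Mean Teacher step: teacher = decay * teacher + (1 - decay) * student."""
    return [decay * t + (1.0 - decay) * s
            for t, s in zip(teacher_weights, student_weights)]


if __name__ == "__main__":
    print(symmetric_cross_entropy([2.0, 0.5, -1.0], label=0))
    print(ema_update([1.0, 1.0], [0.0, 2.0], decay=0.9))
```

In this sketch the teacher never receives gradients; it only tracks a smoothed copy of the student, which is what lets its predictions serve as soft pseudo labels.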
