Zongyong Deng

Hierarchical Generative Network for Face Morphing Attacks

Mar 17, 2024

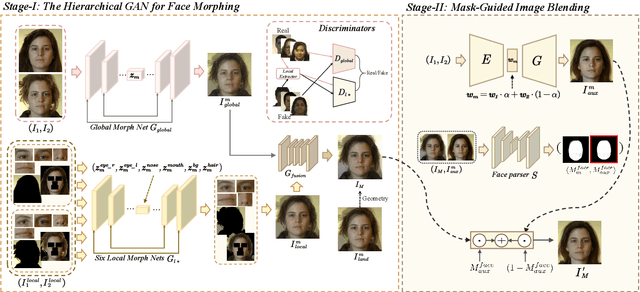

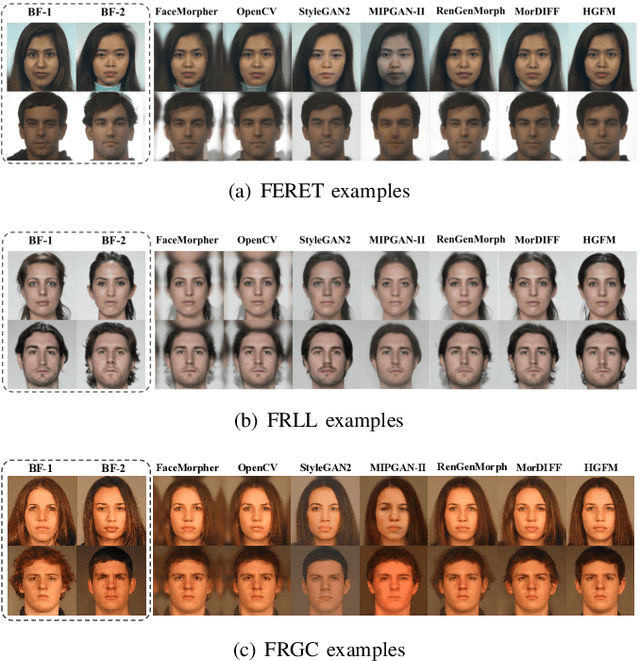

Abstract: Face morphing attacks circumvent face recognition systems (FRSs) by creating a morphed image that contains multiple identities. However, existing face morphing attack methods either sacrifice image quality or compromise the identity preservation capability. Consequently, these attacks fail to bypass FRS verification well while still managing to deceive human observers. These methods typically rely on global information from the contributing images and ignore the detailed information from effective facial regions. To address these issues, we propose a novel morphing attack method that improves the quality of morphed images and better preserves the contributing identities. Our proposed method leverages a hierarchical generative network to capture both local detail and global consistency information. Additionally, a mask-guided image blending module removes artifacts from areas outside the face to improve the image's visual quality. The proposed attack method is compared to state-of-the-art methods on three public datasets in terms of FRS vulnerability, attack detectability, and image quality. The results show our method's potential threat of deceiving FRSs while being capable of passing multiple morphing attack detection (MAD) scenarios.
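The abstract does not describe how the mask-guided blending module is built. As a rough illustration of the general idea only, the sketch below composites a morphed face onto a clean background with a per-pixel soft mask, so that pixels outside the face come from the background. The function name `blend_with_mask`, the grayscale nested-list image format, and the sample values are all assumptions made for this example, not details from the paper.

```python
# Minimal sketch of mask-guided compositing (an assumption, not the paper's module).
# Images are grayscale nested lists with values in [0, 1]; mask values are in [0, 1].

def blend_with_mask(morphed, background, mask):
    """Per-pixel composite: mask=1 keeps the morphed pixel, mask=0 keeps the background."""
    if not (len(morphed) == len(background) == len(mask)):
        raise ValueError("inputs must have the same height")
    out = []
    for m_row, b_row, k_row in zip(morphed, background, mask):
        if not (len(m_row) == len(b_row) == len(k_row)):
            raise ValueError("inputs must have the same width")
        # Convex combination of the two sources, weighted by the mask.
        out.append([k * m + (1.0 - k) * b for m, b, k in zip(m_row, b_row, k_row)])
    return out


# Hypothetical 2x2 example: the top-left pixel is fully "face", the top-right
# pixel lies on the soft mask boundary, and the bottom row is background.
morphed = [[0.8, 0.8], [0.8, 0.8]]
background = [[0.2, 0.2], [0.2, 0.2]]
mask = [[1.0, 0.5], [0.0, 0.0]]
blended = blend_with_mask(morphed, background, mask)
```

A soft (non-binary) mask edge is what avoids a visible seam between the face region and the surrounding area; a hard 0/1 mask would simply cut and paste.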

Optimal-Landmark-Guided Image Blending for Face Morphing Attacks

Jan 30, 2024

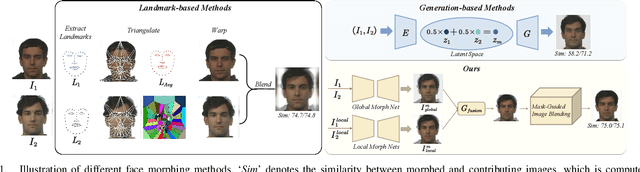

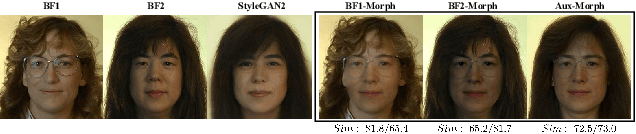

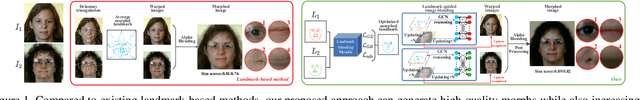

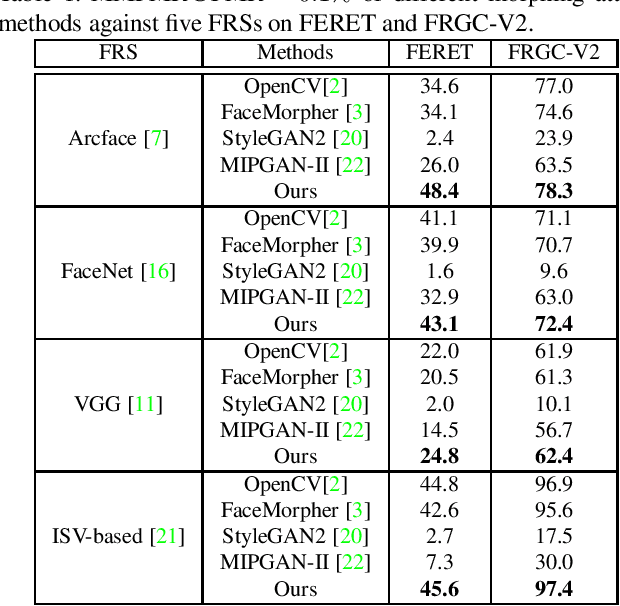

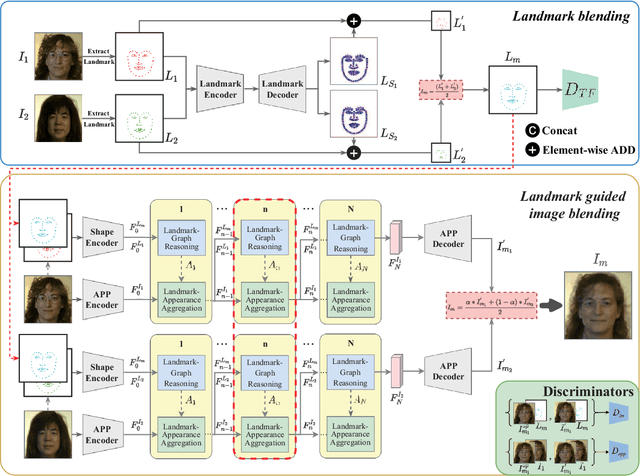

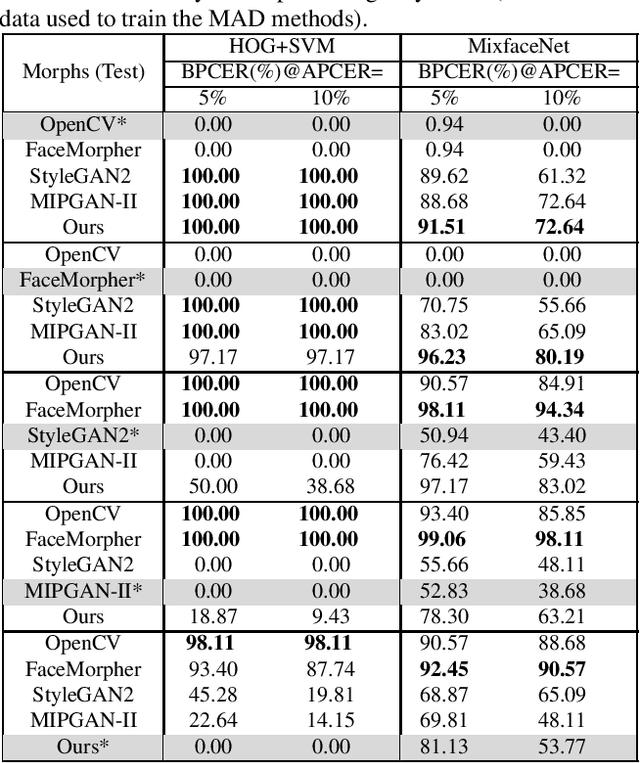

Abstract: In this paper, we propose a novel approach for conducting face morphing attacks, which utilizes optimal-landmark-guided image blending. Current face morphing attacks can be categorized into landmark-based and generation-based approaches. Landmark-based methods use geometric transformations to warp facial regions according to averaged landmarks but often produce morphed images with poor visual quality. Generation-based methods, which employ generation models to blend multiple face images, can achieve better visual quality but are often unsuccessful in generating morphed images that can effectively evade state-of-the-art face recognition systems (FRSs). Our proposed method overcomes the limitations of previous approaches by optimizing the morphing landmarks and using Graph Convolutional Networks (GCNs) to combine landmark and appearance features. We model facial landmarks as nodes in a fully connected bipartite graph and utilize GCNs to simulate their spatial and structural relationships. The aim is to capture variations in facial shape and enable accurate manipulation of facial appearance features during the warping process, resulting in morphed facial images that are highly realistic and visually faithful. Experiments on two public datasets show that our method inherits the advantages of previous landmark-based and generation-based methods and generates morphed images with higher quality, posing a more significant threat to state-of-the-art FRSs.
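For context, the "averaged landmarks" step of the classic landmark-based baseline mentioned in the abstract can be sketched as a simple linear interpolation of corresponding points. This is only the baseline that the paper contrasts itself with, not the proposed landmark optimization or the GCN. The function name `average_landmarks`, the `alpha` parameter, and the sample coordinates are assumptions for illustration.

```python
# Minimal sketch of the landmark-averaging step in a classic landmark-based morph.
# This is the baseline idea only; the paper's optimized landmarks are not reproduced here.

def average_landmarks(landmarks_a, landmarks_b, alpha=0.5):
    """Linearly interpolate corresponding (x, y) landmarks of two faces.

    alpha=0 returns face A's landmarks, alpha=1 returns face B's.
    """
    if len(landmarks_a) != len(landmarks_b):
        raise ValueError("both faces need the same number of landmarks")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [
        ((1.0 - alpha) * xa + alpha * xb, (1.0 - alpha) * ya + alpha * yb)
        for (xa, ya), (xb, yb) in zip(landmarks_a, landmarks_b)
    ]


# Hypothetical two-point example (real detectors typically return many more points).
face_a = [(0.0, 0.0), (10.0, 20.0)]
face_b = [(10.0, 10.0), (20.0, 40.0)]
target = average_landmarks(face_a, face_b, alpha=0.5)
```

In a full pipeline, both source images would then be warped toward `target` and blended; those steps are omitted here.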

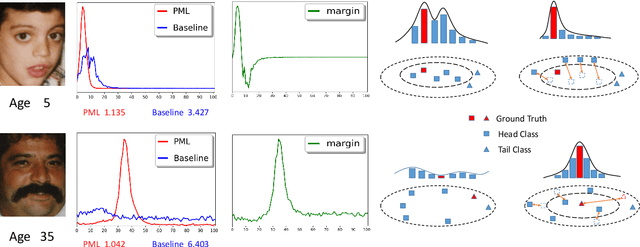

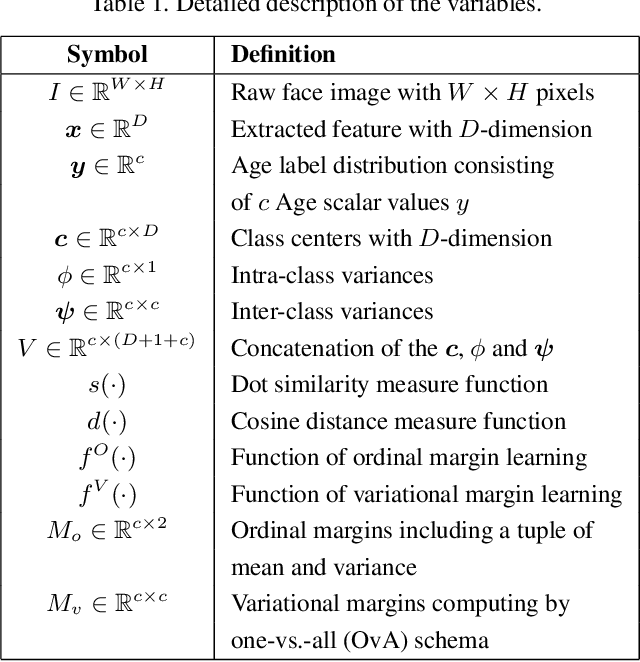

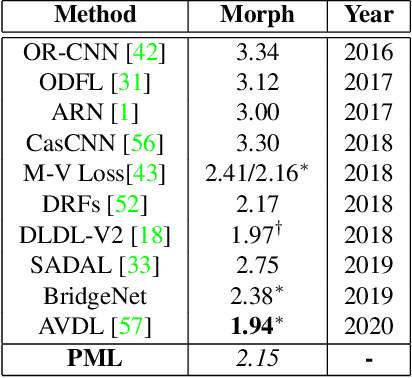

PML: Progressive Margin Loss for Long-tailed Age Classification

Mar 03, 2021

Abstract: In this paper, we propose a progressive margin loss (PML) approach for unconstrained facial age classification. Conventional methods make the strong assumption that each class has enough instances to outline its data distribution, which likely leads to biased predictions when training samples are sparse across age classes. Instead, our PML aims to adaptively refine the age label pattern by enforcing a pair of margins, which fully take into account the discrepancies among the intra-class variance, inter-class variance, and class center. Our PML incorporates an ordinal margin and a variational margin, both of which plug into the globally tuned deep neural network paradigm. More specifically, the ordinal margin learns to exploit the correlated relationship of real-world age labels. Accordingly, the variational margin is leveraged to minimize the influence of head classes that mislead the prediction of tail samples. Moreover, our optimization carefully seeks a series of indicator curricula to achieve robust and efficient model training. Extensive experimental results on three face aging datasets demonstrate that our PML achieves compelling performance compared to the state of the art. Code will be made publicly available.
