Zongmin Yang

Spirit Distillation: A Model Compression Method with Multi-domain Knowledge Transfer

Apr 29, 2021

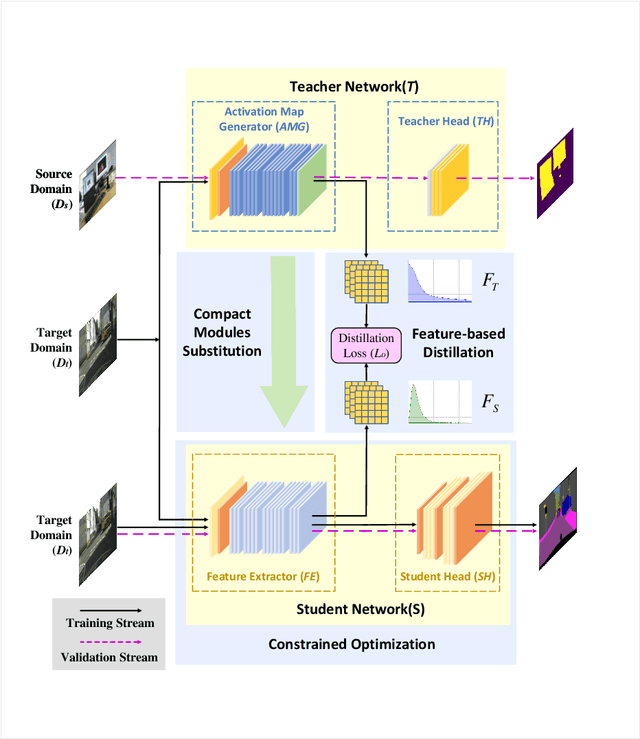

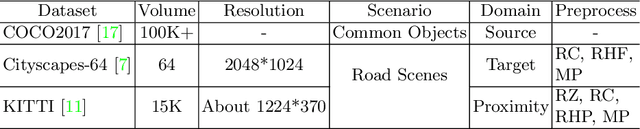

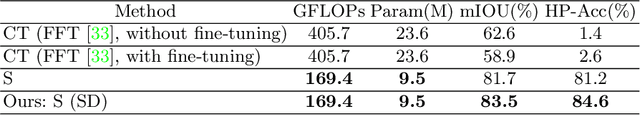

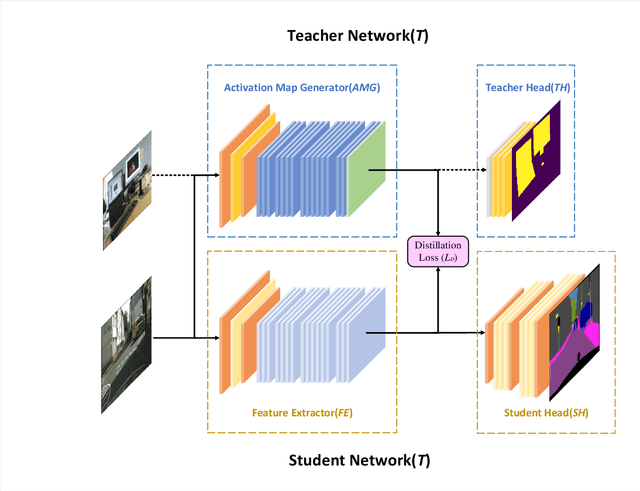

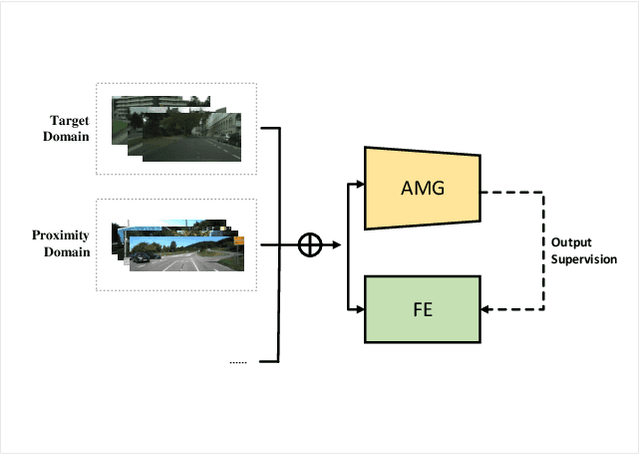

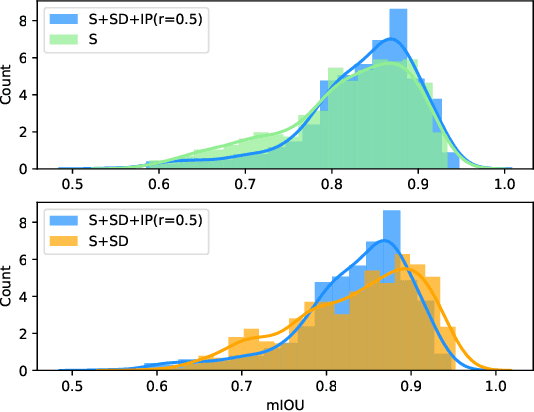

Abstract: Recent applications require machine learning models to support both cross-domain knowledge transfer and model compression, owing to insufficient training data and limited computational resources. In this paper, we propose a new knowledge distillation model, named Spirit Distillation (SD), which is a model compression method with multi-domain knowledge transfer. The compact student network learns to mimic a representation equivalent to the front part of the teacher network, through which general knowledge can be transferred from the source domain (teacher) to the target domain (student). To further improve the robustness of the student, we extend SD to Enhanced Spirit Distillation (ESD), which exploits more comprehensive knowledge by introducing a proximity domain, similar to the target domain, for feature extraction. Results demonstrate that our method can boost mIOU and high-precision accuracy by 1.4% and 8.2% respectively with 78.2% segmentation variance, and can yield a precise compact network with only 41.8% FLOPs.

Spirit Distillation: Precise Real-time Semantic Segmentation of Road Scenes with Insufficient Data

Apr 17, 2021

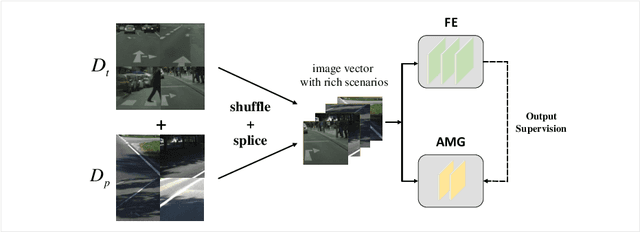

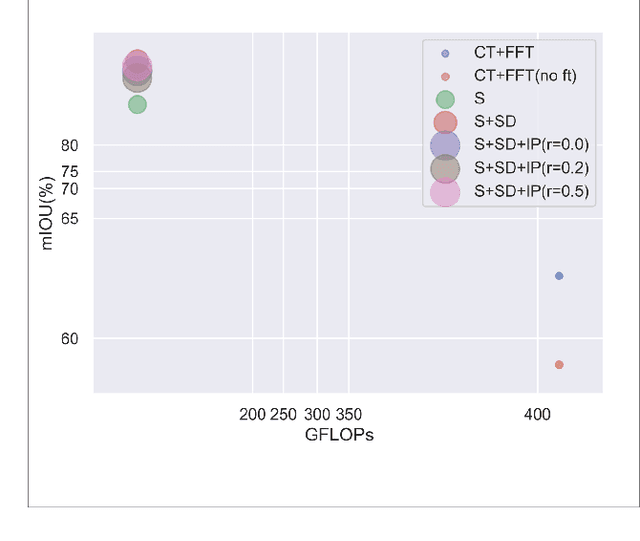

Abstract: Semantic segmentation of road scenes is one of the key technologies for realizing autonomous driving scene perception, and the effectiveness of deep Convolutional Neural Networks (CNNs) for this task has been demonstrated. State-of-the-art CNNs for semantic segmentation suffer from excessive computation as well as a requirement for large-scale training data. Inspired by the ideas of Fine-tuning-based Transfer Learning (FTT) and feature-based knowledge distillation, we propose a new knowledge distillation method for cross-domain knowledge transfer and efficient network training with insufficient data, named Spirit Distillation (SD), which allows the student network to mimic the teacher network in extracting general features, so that a compact and accurate student network can be trained for real-time semantic segmentation of road scenes. Then, in order to further alleviate the problem of insufficient data and improve the robustness of the student, an Enhanced Spirit Distillation (ESD) method is proposed, which aims to develop a more comprehensive general feature extraction capability by taking images from both the target and the proximity domains as input. To our knowledge, this paper is a pioneering work on the application of knowledge distillation to few-shot learning. Experiments conducted on Cityscapes semantic segmentation with prior knowledge transferred from COCO2017 and KITTI demonstrate that our methods can train a better student network (mIOU and high-precision accuracy boosted by 1.4% and 8.2% respectively, with 78.2% segmentation variance) with only 41.8% FLOPs (see Fig. 1).
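
As a rough illustration of the feature-mimicking idea in this abstract, the sketch below computes a mean-squared error between the features produced by a student front end and a teacher front end, first over target-domain images only (in the spirit of SD) and then over target plus proximity-domain images (in the spirit of ESD). The abstract does not specify the loss, so the MSE objective, the function names, and the toy "networks" are all assumptions made for illustration, not the authors' implementation.

```python
from typing import Callable, List, Sequence

Vector = List[float]
FeatureFn = Callable[[Sequence[float]], Vector]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean-squared error between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def feature_mimic_loss(
    student_front: FeatureFn,
    teacher_front: FeatureFn,
    images: Sequence[Sequence[float]],
) -> float:
    """Average feature MSE over a batch (assumed objective, not from the paper)."""
    losses = [mse(student_front(img), teacher_front(img)) for img in images]
    return sum(losses) / len(losses)


# Hypothetical stand-ins for the front part of each network.
def teacher_front(img: Sequence[float]) -> Vector:
    return [2.0 * p for p in img]


def student_front(img: Sequence[float]) -> Vector:
    return [1.5 * p for p in img]


# Toy "images" as flat pixel lists; real inputs would be image tensors.
target_images = [[1.0, 2.0], [0.0, 4.0]]   # e.g. the target domain
proximity_images = [[2.0, 2.0]]            # e.g. a similar, proximity domain

# SD-style: mimic the teacher on target-domain images only.
sd_loss = feature_mimic_loss(student_front, teacher_front, target_images)

# ESD-style: also include proximity-domain images as input.
esd_loss = feature_mimic_loss(
    student_front, teacher_front, target_images + proximity_images
)

print(sd_loss, esd_loss)
```

In a real setting the two front ends would be neural network stages and the loss would be minimized with respect to the student's parameters; the sketch only shows how the two input sets differ between the SD-style and ESD-style variants.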

Activation Map Adaptation for Effective Knowledge Distillation

Oct 26, 2020

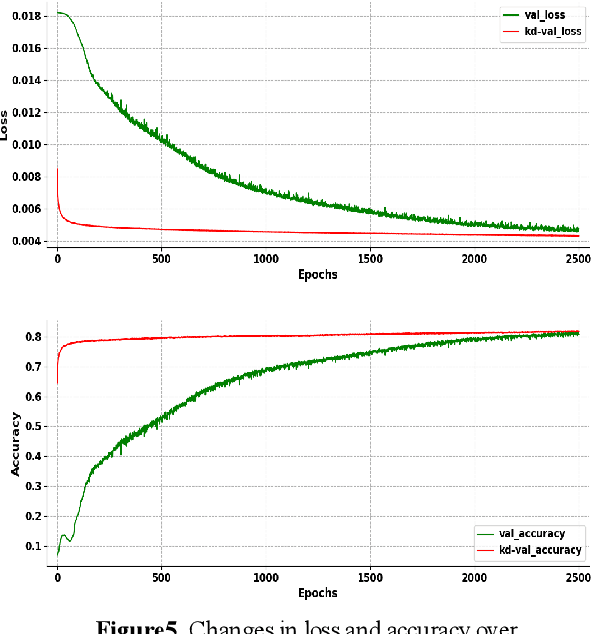

Abstract: Model compression has become a recent trend owing to the need to deploy neural networks on embedded and mobile devices, where both accuracy and efficiency are of critical importance. To explore a balance between them, a knowledge distillation strategy is proposed for general visual representation learning. It uses our activation map adaptive module to replace some blocks of the teacher network, adaptively exploring the most appropriate supervisory features during the training process. The teacher's hidden-layer output is used to guide the training of the student network so as to transfer effective semantic information. To verify the effectiveness of our strategy, we applied our method to the CIFAR-10 dataset. Results demonstrate that the method can boost the accuracy of the student network by 0.6% with 6.5% loss reduction, and significantly improve its training speed.
