Zixuan He

Center for Precision and Automated Agricultural Systems, Washington State University

A Multi-Granularity Supervised Contrastive Framework for Remaining Useful Life Prediction of Aero-engines

Nov 01, 2024

Abstract: Accurate remaining useful life (RUL) prediction is critical to the safe operation of aero-engines. Currently, RUL prediction is mainly treated as a regression task with mean square error as the only loss function, and the structure of the feature space has received little study, even though attention to feature space structure has shown excellent performance in a large number of studies. This paper develops a multi-granularity supervised contrastive (MGSC) framework from the plain intuition that samples with the same RUL label should be aligned in the feature space, and addresses the problems of excessively large minibatch size and unbalanced samples in the implementation. RUL prediction with MGSC is implemented using the proposed multi-phase training strategy. This paper also demonstrates a simple and scalable basic network structure and validates the proposed MGSC strategy on the C-MAPSS dataset using a convolutional long short-term memory network as a baseline, effectively improving the accuracy of RUL prediction.
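
The intuition that samples sharing an RUL label should be aligned in the feature space can be illustrated with a generic supervised contrastive loss. The sketch below is not the MGSC method itself (the abstract does not give its multi-granularity or multi-phase details); it is a minimal NumPy version of a standard supervised contrastive objective, and the function name, the `temperature` parameter, and the toy data are assumptions made for illustration.

```python
import numpy as np


def supervised_contrastive_loss(features, labels, temperature=0.1):
    """Generic supervised contrastive loss (illustrative, not MGSC).

    features: (n, d) array of embeddings; labels: (n,) array of labels.
    Anchors with no same-label partner in the batch are skipped.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    # L2-normalize so the dot product is a cosine similarity.
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = z @ z.T / temperature
    n = len(labels)
    not_self = ~np.eye(n, dtype=bool)
    # Positives: other samples in the batch that share the anchor's label.
    positives = (labels[:, None] == labels[None, :]) & not_self
    # Log-softmax over all other samples (self excluded from the denominator).
    sim = sim - sim.max(axis=1, keepdims=True)  # numerical stability
    exp_sim = np.exp(sim) * not_self
    log_prob = sim - np.log(exp_sim.sum(axis=1, keepdims=True))
    pos_count = positives.sum(axis=1)
    valid = pos_count > 0
    if not valid.any():
        return 0.0
    mean_log_prob_pos = (log_prob * positives).sum(axis=1)[valid] / pos_count[valid]
    return float(-mean_log_prob_pos.mean())


# Toy batch: two tight clusters in a 2-D feature space.
toy_features = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
loss_aligned = supervised_contrastive_loss(toy_features, np.array([0, 0, 1, 1]))
loss_mixed = supervised_contrastive_loss(toy_features, np.array([0, 1, 0, 1]))
```

In this toy batch the loss is low when same-label samples coincide in the feature space and high when labels cut across the clusters, which is the alignment property the abstract appeals to.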

Design, Modeling, and Control of a Low-Cost and Rapid Response Soft-Growing Manipulator for Orchard Operations

Nov 01, 2023

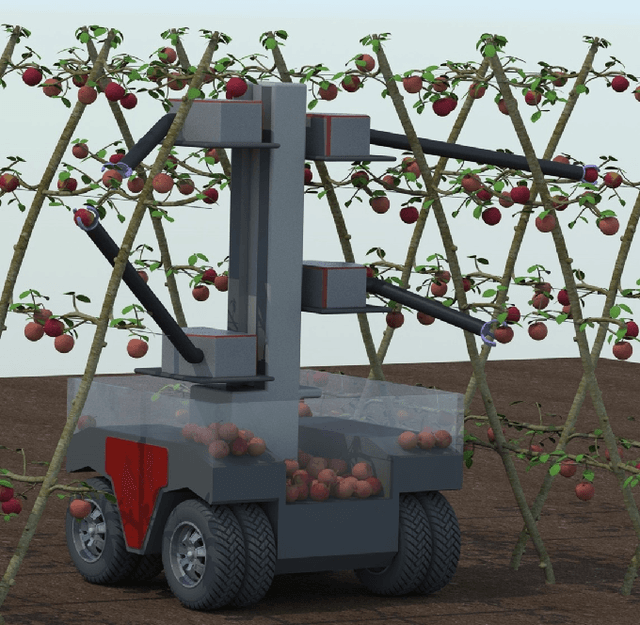

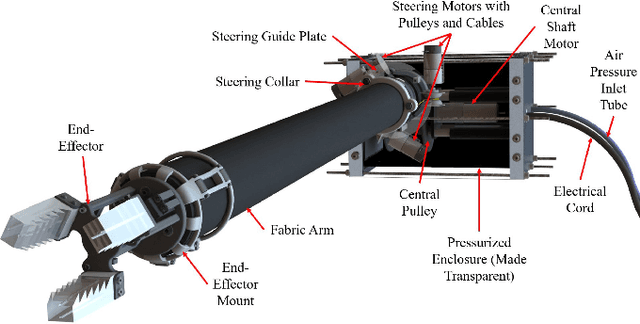

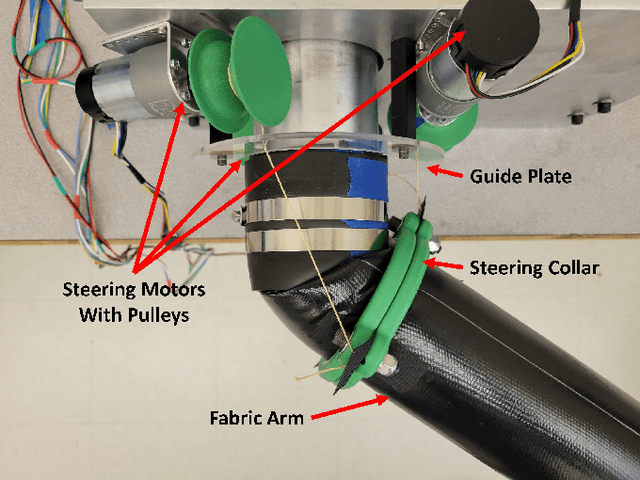

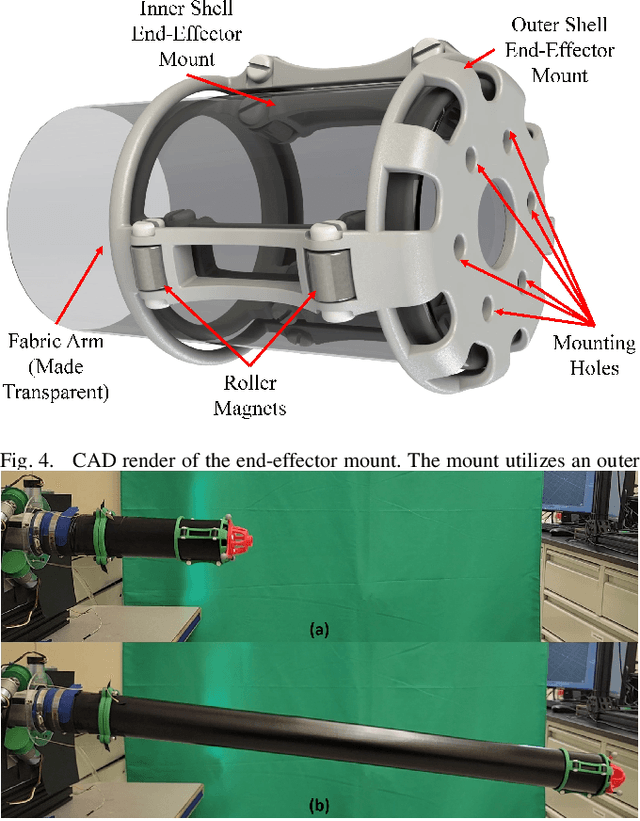

Abstract: Tree fruit growers around the world are facing labor shortages for critical operations, including harvest and pruning. There is great interest in developing robotic solutions for these labor-intensive tasks, but current efforts have been prohibitively costly, slow, or dependent on a reconfiguration of the orchard in order to function. In this paper, we introduce an alternative approach to robotics using a novel and low-cost soft-growing robotic platform. Our platform can extend up to 1.2 m linearly at a maximum speed of 0.27 m/s. The soft-growing robotic arm can operate with a terminal payload of up to 1.4 kg (4.4 N), more than sufficient for carrying an apple. The platform decouples linear and steering motions to simplify path planning and controller design for targeting. We anticipate that our platform will be relatively simple to maintain compared to rigid robotic arms. Herein we also describe and experimentally verify the platform's kinematic model, including the prediction of the relationship between the steering angle and the angular positions of the three steering motors. Information from the model enables the position controller to guide the end effector to the targeted positions faster and with higher stability than without this information. Overall, our research shows promise for using soft-growing robotic platforms in orchard operations.

Real-time Strawberry Detection Based on Improved YOLOv5s Architecture for Robotic Harvesting in open-field environment

Sep 01, 2023

Abstract: This study proposed a YOLOv5-based custom object detection model to detect strawberries in an outdoor environment. First, the original architecture of YOLOv5s was modified by replacing the C3 module with the C2f module in the backbone network, which provided a better feature gradient flow. Second, the Spatial Pyramid Pooling Fast in the final layer of the backbone network of YOLOv5s was combined with Cross Stage Partial Net to improve the generalization ability over the strawberry dataset in this study. The proposed architecture was named YOLOv5s-Straw. An RGB image dataset of the strawberry canopy with three maturity classes (immature, nearly mature, and mature) was collected in an open-field environment and augmented through a series of operations including brightness reduction, brightness increase, and noise addition. To verify the superiority of the proposed method for strawberry detection in an open-field environment, four competitive detection models (YOLOv3-tiny, YOLOv5s, YOLOv5s-C2f, and YOLOv8s) were trained and tested under the same computational environment and compared with YOLOv5s-Straw. The results showed that the proposed architecture achieved the highest mean average precision of 80.3%, whereas YOLOv3-tiny, YOLOv5s, YOLOv5s-C2f, and YOLOv8s achieved 73.4%, 77.8%, 79.8%, and 79.3%, respectively. Specifically, the average precision of YOLOv5s-Straw was 82.1% in the immature class, 73.5% in the nearly mature class, and 86.6% in the mature class, which were 2.3% and 3.7%, respectively, higher than that of the latest YOLOv8s. The model included 8.6 × 10^6 network parameters with an inference time of 18 ms per image, while YOLOv8s was slower, at 21.0 ms per image, and heavier, with 11.1 × 10^6 parameters. This indicates that the proposed model is fast enough for real-time strawberry detection and localization for robotic picking.
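
To put the reported per-image inference times in terms of throughput, the short sketch below converts latency to frames per second and compares the parameter counts. It uses only the figures quoted in the abstract; the helper name `frames_per_second` is hypothetical, and the result assumes one image is processed at a time with no batching or pipeline overhead.

```python
def frames_per_second(latency_ms):
    """Convert a per-image latency in milliseconds to frames per second."""
    return 1000.0 / latency_ms


# Figures quoted in the abstract.
yolov5s_straw_fps = frames_per_second(18.0)  # about 55.6 frames per second
yolov8s_fps = frames_per_second(21.0)        # about 47.6 frames per second

# Relative model size: YOLOv5s-Straw parameters as a fraction of YOLOv8s.
param_ratio = 8.6e6 / 11.1e6                 # about 0.77

print(f"YOLOv5s-Straw: {yolov5s_straw_fps:.1f} FPS")
print(f"YOLOv8s:       {yolov8s_fps:.1f} FPS")
print(f"Parameter ratio: {param_ratio:.2f}")
```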
