Zijia Zhang

Large-Scale Hyperspectral Image Clustering Using Contrastive Learning

Nov 15, 2021

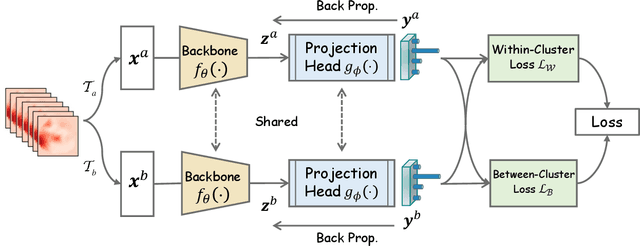

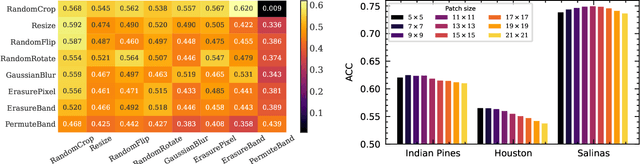

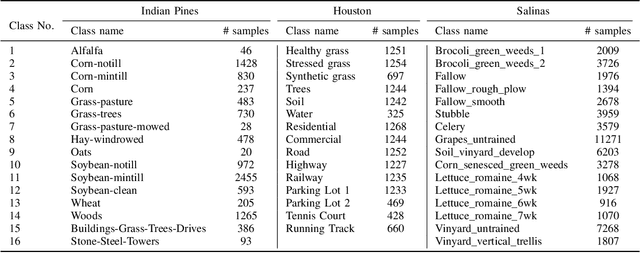

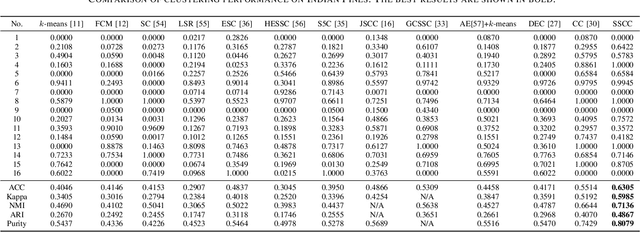

Abstract: Clustering of hyperspectral images is a fundamental but challenging task. Hyperspectral image clustering has recently evolved from shallow models to deep ones and has achieved promising results on many benchmark datasets. However, the poor scalability, robustness, and generalization ability of existing methods, which mainly result from their offline clustering scenarios, greatly limit their application to large-scale hyperspectral data. To circumvent these problems, we present a scalable deep online clustering model, named Spectral-Spatial Contrastive Clustering (SSCC), based on self-supervised learning. Specifically, we exploit a symmetric twin neural network comprising a projection head whose output dimensionality equals the number of clusters to conduct dual contrastive learning from a spectral-spatial augmentation pool. We define the objective function by implicitly encouraging within-cluster similarity and reducing between-cluster redundancy. The resulting approach is trained end to end by batch-wise optimization, making it robust on large-scale data and giving it good generalization ability on unseen data. Extensive experiments on three hyperspectral image benchmarks demonstrate the effectiveness of our approach and show that it outperforms state-of-the-art approaches by large margins.
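The objective described in the abstract (within-cluster similarity up, between-cluster redundancy down) can be illustrated with a cross-correlation loss over the cluster-assignment outputs of two augmented views. The sketch below is an assumption in the style of redundancy-reduction losses, not the paper's exact formulation; the function name `cluster_redundancy_loss` and the parameter `off_diag_weight` are hypothetical.

```python
import numpy as np

def cluster_redundancy_loss(p_a, p_b, off_diag_weight=1.0, eps=1e-12):
    """Illustrative loss on two (n_samples, n_clusters) soft-assignment matrices.

    Assumed form (not taken from the paper): L2-normalize each cluster column,
    build the K x K cross-correlation between the two views, push the diagonal
    toward 1 (same cluster agrees across views) and the off-diagonal toward 0
    (different clusters are decorrelated).
    """
    a = p_a / (np.linalg.norm(p_a, axis=0, keepdims=True) + eps)
    b = p_b / (np.linalg.norm(p_b, axis=0, keepdims=True) + eps)
    c = a.T @ b                                   # (K, K) cluster cross-correlation
    diag = np.diag(c)
    on_diag = np.sum((1.0 - diag) ** 2)           # within-cluster agreement term
    off_diag = np.sum(c ** 2) - np.sum(diag ** 2) # between-cluster redundancy term
    return on_diag + off_diag_weight * off_diag

# Two views that assign every sample to the same cluster give a near-zero loss;
# permuting the cluster columns of one view makes the loss large.
p = np.eye(3)[[0, 1, 2, 0, 1, 2]]                 # one-hot assignments, 6 samples
loss_agree = cluster_redundancy_loss(p, p)
loss_shuffled = cluster_redundancy_loss(p, p[:, [1, 2, 0]])
```

In a batch-wise training loop, `p_a` and `p_b` would be the softmax outputs of the projection head for two augmentations of the same mini-batch, which is what lets a loss of this kind run online on large scenes.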

Fully Linear Graph Convolutional Networks for Semi-Supervised Learning and Clustering

Nov 15, 2021

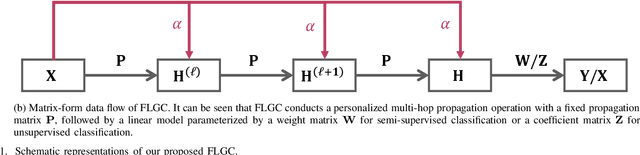

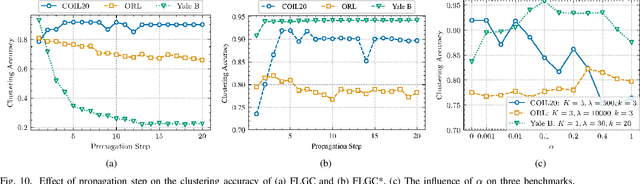

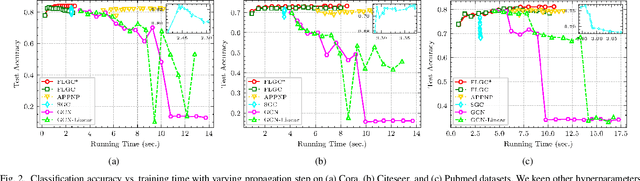

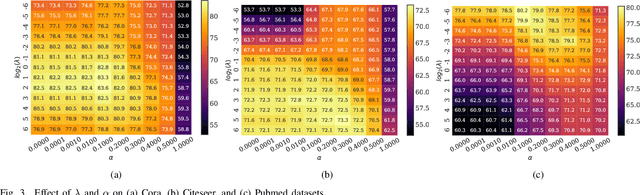

Abstract: This paper presents FLGC, a simple yet effective fully linear graph convolutional network for semi-supervised and unsupervised learning. Instead of using gradient descent, we train FLGC by computing a globally optimal closed-form solution with a decoupled procedure, which yields a generalized linear framework that is easier to implement, train, and apply. We show that (1) FLGC can handle both graph-structured data and regular data, (2) training graph convolutional models with closed-form solutions improves computational efficiency without degrading performance, and (3) FLGC acts as a natural generalization of classic linear models, e.g., ridge regression and subspace clustering, to the non-Euclidean domain. Furthermore, we implement a semi-supervised FLGC and an unsupervised FLGC by introducing an initial residual strategy, enabling FLGC to aggregate long-range neighborhoods and alleviate over-smoothing. We compare our semi-supervised and unsupervised FLGCs against many state-of-the-art methods on a variety of classification and clustering benchmarks, demonstrating that the proposed FLGC models consistently outperform previous methods in terms of accuracy, robustness, and learning efficiency. The core code of our FLGC is released at https://github.com/AngryCai/FLGC.
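The decoupled, closed-form training idea can be sketched as two steps: propagate features over the graph with an initial residual, then solve a ridge regression on the labeled nodes. The code below is a minimal sketch under assumptions: the propagation rule, the symmetric normalization with self-loops, and the names `propagate`, `fit_ridge`, `alpha`, and `lam` are illustrative choices, not the released FLGC implementation.

```python
import numpy as np

def normalized_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2} (assumed choice)."""
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def propagate(x, s, steps=4, alpha=0.1):
    """Linear propagation with an initial residual: H <- (1 - alpha) S H + alpha X."""
    h = x
    for _ in range(steps):
        h = (1.0 - alpha) * (s @ h) + alpha * x
    return h

def fit_ridge(h_labeled, y_onehot, lam=1e-2):
    """Closed-form ridge solution W = (H^T H + lam I)^{-1} H^T Y."""
    d = h_labeled.shape[1]
    return np.linalg.solve(h_labeled.T @ h_labeled + lam * np.eye(d),
                           h_labeled.T @ y_onehot)

# Toy graph: two triangles (nodes 0-2 and 3-5) joined by the edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
adj = np.zeros((6, 6))
for i, j in edges:
    adj[i, j] = adj[j, i] = 1.0

x = np.zeros((6, 2))
x[0, 0] = 1.0                      # only the two labeled nodes carry features
x[5, 1] = 1.0
labeled = np.array([0, 5])
y_onehot = np.eye(2)               # node 0 -> class 0, node 5 -> class 1

h = propagate(x, normalized_adjacency(adj))
w = fit_ridge(h[labeled], y_onehot)
pred = (h @ w).argmax(axis=1)      # predicted class for every node
```

No gradient steps are involved: the propagation is a fixed linear operator and the weights come from one linear solve, which is what makes a model of this kind cheap to train.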

Graph Convolutional Subspace Clustering: A Robust Subspace Clustering Framework for Hyperspectral Image

Apr 22, 2020

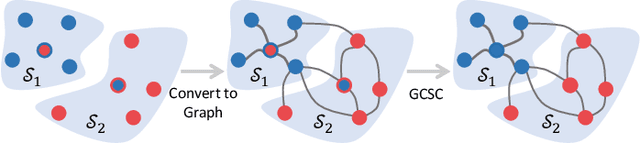

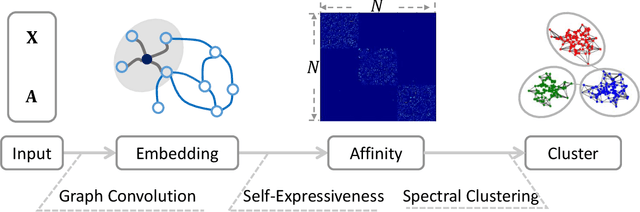

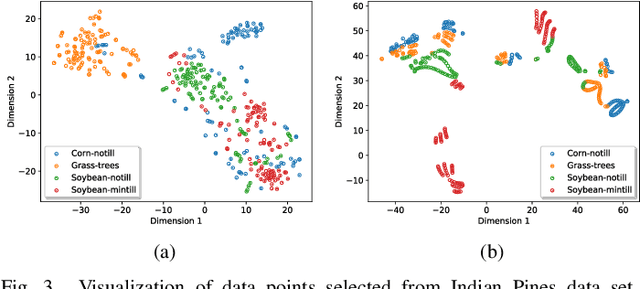

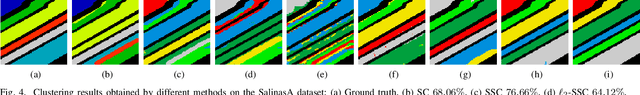

Abstract: Hyperspectral image (HSI) clustering is a challenging task due to the high complexity of HSI data. Subspace clustering has been proven to be powerful for exploiting the intrinsic relationship between data points. Despite their impressive performance in HSI clustering, traditional subspace clustering methods often ignore the inherent structural information among data. In this paper, we revisit subspace clustering with graph convolution and present a novel subspace clustering framework called Graph Convolutional Subspace Clustering (GCSC) for robust HSI clustering. Specifically, the framework recasts the self-expressiveness property of the data into the non-Euclidean domain, which results in a more robust graph embedding dictionary. We show that traditional subspace clustering models are special cases of our framework with Euclidean data. Based on the framework, we further propose two novel subspace clustering models that use the Frobenius norm, namely Efficient GCSC (EGCSC) and Efficient Kernel GCSC (EKGCSC). Both models have a globally optimal closed-form solution, which makes them easier to implement, train, and apply in practice. Extensive experiments on three popular HSI datasets demonstrate that EGCSC and EKGCSC achieve state-of-the-art clustering performance and outperform many existing methods by significant margins.
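To make the closed-form claim concrete, the sketch below solves a Frobenius-norm self-expression problem in which the dictionary is the graph-propagated data. It assumes the objective min_Z ||X A Z - X||_F^2 + lam ||Z||_F^2, with X holding samples as columns and A a normalized adjacency matrix; this form, the k-nearest-neighbor graph, and all function names are assumptions for illustration rather than the authors' code.

```python
import numpy as np

def knn_adjacency(samples, k=3):
    """Symmetric k-nearest-neighbor graph on (n_samples, n_features) data."""
    d2 = ((samples[:, None, :] - samples[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(d2, np.inf)                   # exclude self-matches
    idx = np.argsort(d2, axis=1)[:, :k]
    adj = np.zeros(d2.shape)
    rows = np.repeat(np.arange(samples.shape[0]), k)
    adj[rows, idx.ravel()] = 1.0
    return np.maximum(adj, adj.T)

def normalized_adjacency(adj):
    """Symmetric normalization with self-loops (assumed choice)."""
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def graph_self_expression(x, a_norm, lam):
    """Closed form Z = (M^T M + lam I)^{-1} M^T X with M = X A.

    x has shape (n_features, n_samples); Z has shape (n_samples, n_samples).
    """
    m = x @ a_norm
    n = x.shape[1]
    return np.linalg.solve(m.T @ m + lam * np.eye(n), m.T @ x)

rng = np.random.default_rng(0)
samples = rng.normal(size=(12, 5))                 # 12 synthetic "pixels", 5 bands
a_norm = normalized_adjacency(knn_adjacency(samples))
x = samples.T
lam = 0.1
z = graph_self_expression(x, a_norm, lam)
affinity = 0.5 * (np.abs(z) + np.abs(z.T))         # symmetric affinity matrix
```

The affinity matrix would then typically be passed to spectral clustering to obtain cluster labels. Setting `a_norm` to the identity reduces the problem to an ordinary ridge-style self-expression, consistent with the abstract's remark that traditional models arise as the Euclidean special case.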
