Zigfried Hampel-Arias

Improved Background Estimation for Gas Plume Identification in Hyperspectral Images

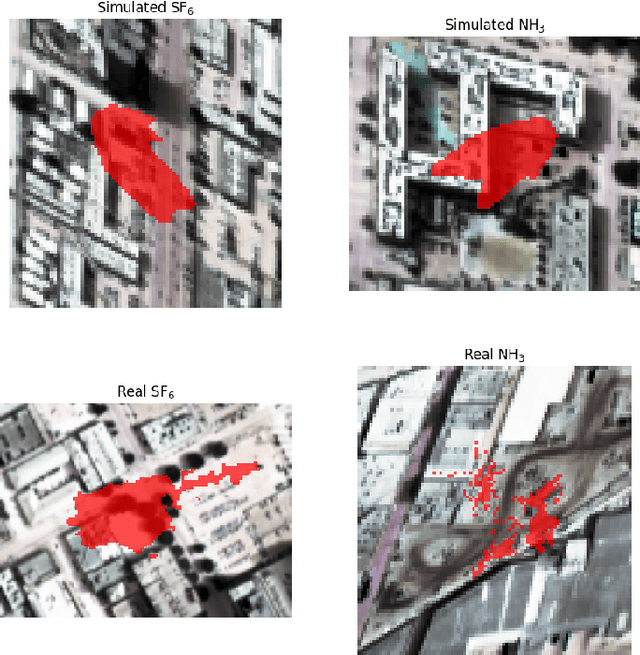

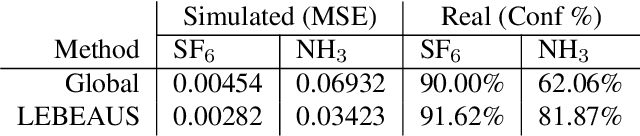

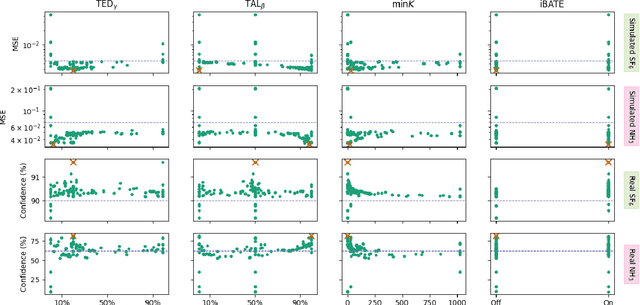

Nov 22, 2024

Abstract:Longwave infrared (LWIR) hyperspectral imaging can be used for many tasks in remote sensing, including detecting and identifying effluent gases by LWIR sensors on airborne platforms. Once a potential plume has been detected, it needs to be identified to determine exactly what gas or gases are present in the plume. During identification, the background underneath the plume needs to be estimated and removed to reveal the spectral characteristics of the gas of interest. Current standard practice is to use "global" background estimation, where the average of all non-plume pixels is used to estimate the background for each pixel in the plume. However, if this global background estimate does not model the true background under the plume well, then the resulting signal can be difficult to identify correctly. The importance of proper background estimation increases when dealing with weak signals, large libraries of gases of interest, and uncommon or heterogeneous backgrounds. In this paper, we propose two methods of background estimation, in addition to three existing methods, and compare each against global background estimation to determine which perform best at estimating the true background radiance under a plume, and at increasing identification confidence using a neural network classification model. We compare the different methods using 640 simulated plumes. We find that PCA is best at estimating the true background under a plume, with a median of 18,000 times less MSE compared to global background estimation. Our proposed K-Nearest Segments algorithm improves median neural network identification confidence by 53.2%.
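The "global" baseline described in this abstract (averaging all non-plume pixels) can be illustrated with a minimal NumPy sketch. The function names, array shapes, and the toy additive plume are assumptions made for illustration; they are not taken from the paper, and the paper's proposed methods are not reproduced here.

```python
import numpy as np

def global_background(cube, plume_mask):
    """Mean spectrum over all non-plume pixels.

    cube: (rows, cols, bands) radiance array (hypothetical layout).
    plume_mask: (rows, cols) boolean array, True where the plume was detected.
    """
    return cube[~plume_mask].mean(axis=0)

def background_mse(estimate, true_background):
    """Per-pixel MSE between a (bands,) estimate and (n, bands) true spectra."""
    return ((true_background - estimate) ** 2).mean(axis=1)

# Toy scene: random background plus a small additive "plume" (illustrative only).
rng = np.random.default_rng(0)
cube = rng.normal(loc=10.0, scale=1.0, size=(8, 8, 16))
plume_mask = np.zeros((8, 8), dtype=bool)
plume_mask[2:4, 2:4] = True
true_bg = cube[plume_mask].copy()   # background under the plume, known in simulation
cube[plume_mask] += 0.5             # stand-in for the gas signal

est = global_background(cube, plume_mask)
mse = background_mse(est, true_bg)
```

Because the same mean spectrum is used for every plume pixel, the per-pixel error grows whenever the local background differs from the scene average, which is the failure case the abstract describes.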

Local Background Estimation for Improved Gas Plume Identification in Hyperspectral Images

Jan 23, 2024

Abstract:Deep learning identification models have shown promise for identifying gas plumes in Longwave IR hyperspectral images of urban scenes, particularly when a large library of gases is being considered. Because many gases have similar spectral signatures, it is important to properly estimate the signal from a detected plume. Typically, a scene's global mean spectrum and covariance matrix are estimated to whiten the plume's signal, which removes the background's signature from the gas signature. However, urban scenes can have many different background materials that are spatially and spectrally heterogeneous. This can lead to poor identification performance when the global background estimate is not representative of a given local background material. We use image segmentation, along with an iterative background estimation algorithm, to create local estimates for the various background materials that reside underneath a gas plume. Our method outperforms global background estimation on a set of simulated and real gas plumes. This method shows promise in increasing deep learning identification confidence, while being simple and easy to tune when considering diverse plumes.
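The global whitening step mentioned in this abstract can be sketched as follows. This is a generic mean-and-covariance whitening transform under assumed array shapes, with a hypothetical function name; it is not the authors' implementation, and the segmentation-based local estimation is not shown.

```python
import numpy as np

def whiten(pixels, background):
    """Whiten spectra using the mean and covariance of background pixels.

    pixels: (n, bands) spectra to whiten.
    background: (m, bands) spectra assumed to be plume-free.
    """
    mu = background.mean(axis=0)
    cov = np.cov(background, rowvar=False)
    # Inverse matrix square root via eigendecomposition of the symmetric covariance.
    evals, evecs = np.linalg.eigh(cov)
    evals = np.clip(evals, 1e-10, None)  # guard against tiny or negative eigenvalues
    inv_sqrt = evecs @ np.diag(evals ** -0.5) @ evecs.T
    return (pixels - mu) @ inv_sqrt

# Toy usage: correlated background spectra with 6 bands.
rng = np.random.default_rng(1)
mixing = rng.normal(size=(6, 6))
background = rng.normal(size=(500, 6)) @ mixing
whitened = whiten(background, background)
```

Replacing `background` with the pixels of a single segment gives a local estimate in the same form, which is the general direction the abstract describes.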

A general approach to bridge the reality-gap

Sep 03, 2020

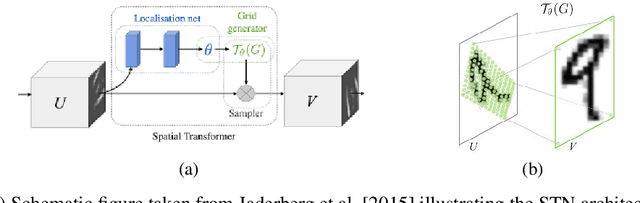

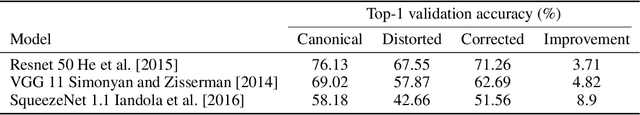

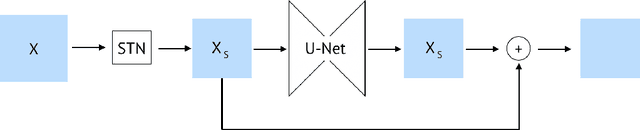

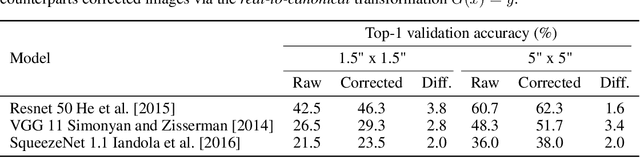

Abstract:Employing machine learning models in the real world requires collecting large amounts of data, which is both time consuming and costly. A common approach to circumvent this is to leverage existing, similar data-sets with large amounts of labelled data. However, models trained on these canonical distributions do not readily transfer to real-world ones. Domain adaptation and transfer learning are often used to bridge this "reality gap", though both require a substantial amount of real-world data. In this paper we discuss a more general approach: we propose learning a general transformation to bring arbitrary images towards a canonical distribution where we can naively apply the trained machine learning models. This transformation is trained in an unsupervised regime, leveraging data augmentation to generate off-canonical examples of images and training a Deep Learning model to recover their original counterpart. We quantify the performance of this transformation using pre-trained ImageNet classifiers, demonstrating that this procedure can recover half of the loss in performance on the distorted data-set. We then validate the effectiveness of this approach on a series of pre-trained ImageNet models on a real-world data-set collected by printing and photographing images in different lighting conditions.
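The training setup described here, where augmentation produces off-canonical images and a model learns to recover the originals, can be illustrated by how the training pairs might be built. The specific distortions (gain, bias, Gaussian noise) and all names below are assumptions for illustration; the abstract does not say which augmentations were used.

```python
import numpy as np

def distort(image, rng):
    """Apply a random brightness/contrast change and additive noise (assumed distortions)."""
    gain = rng.uniform(0.6, 1.4)
    bias = rng.uniform(-0.1, 0.1)
    noise = rng.normal(0.0, 0.05, size=image.shape)
    return np.clip(gain * image + bias + noise, 0.0, 1.0)

def make_pairs(images, rng):
    """Return (distorted, original) arrays for training a restoration model."""
    distorted = np.stack([distort(img, rng) for img in images])
    return distorted, images

# Toy usage: four random "canonical" RGB images with values in [0, 1].
rng = np.random.default_rng(0)
canonical = rng.uniform(0.0, 1.0, size=(4, 32, 32, 3))
inputs, targets = make_pairs(canonical, rng)
```

No labels are needed to build these pairs, which is what makes the regime unsupervised: the original image serves as its own target.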

Reducing audio membership inference attack accuracy to chance: 4 defenses

Oct 31, 2019

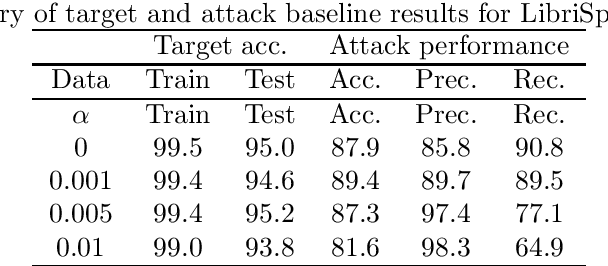

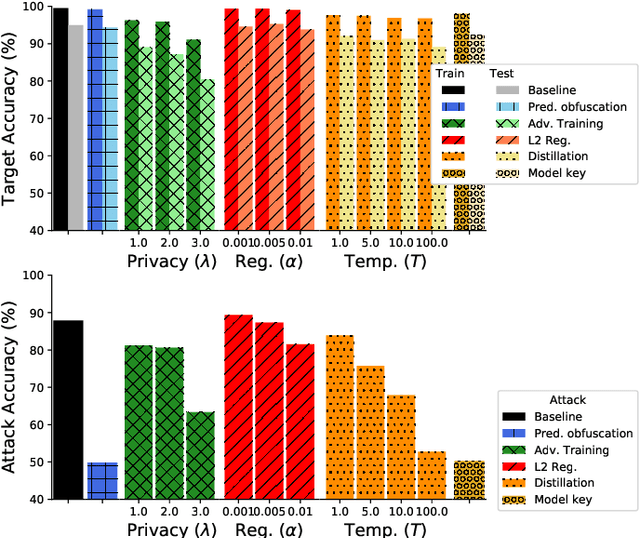

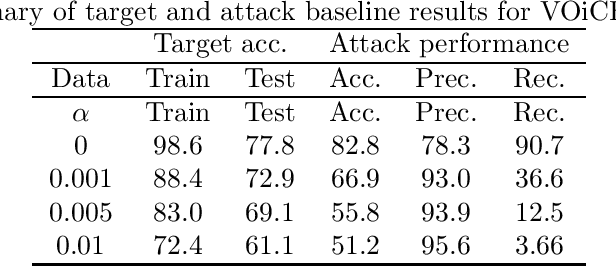

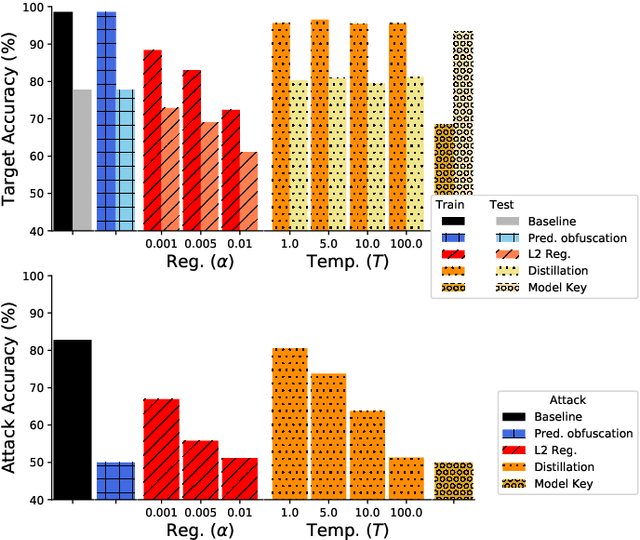

Abstract:It is critical to understand the privacy and robustness vulnerabilities of machine learning models, as their implementation expands in scope. In membership inference attacks, adversaries can determine whether a particular set of data was used in training, putting the privacy of the data at risk. Existing work has mostly focused on image related tasks; we generalize this type of attack to speaker identification on audio samples. We demonstrate attack precision of 85.9% and recall of 90.8% for LibriSpeech, and 78.3% precision and 90.7% recall for VOiCES (Voices Obscured in Complex Environmental Settings). We find that implementing defenses such as prediction obfuscation, defensive distillation or adversarial training, can reduce attack accuracy to chance.
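One common form of prediction obfuscation is to release only the top-k class probabilities, which limits the confidence information a membership inference attacker can exploit. The sketch below assumes that form; the abstract does not specify which obfuscation was used, and the function name is hypothetical.

```python
import numpy as np

def obfuscate_top_k(probs, k=1):
    """Keep only the k largest probabilities per row and renormalize.

    probs: (n, classes) array of model output probabilities.
    """
    probs = np.asarray(probs, dtype=float)
    out = np.zeros_like(probs)
    top = np.argsort(probs, axis=1)[:, -k:]     # indices of the k largest entries
    rows = np.arange(probs.shape[0])[:, None]
    out[rows, top] = probs[rows, top]
    return out / out.sum(axis=1, keepdims=True)

# Toy usage on two speaker-identification outputs over three speakers.
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])
top1 = obfuscate_top_k(probs, k=1)
top2 = obfuscate_top_k(probs, k=2)
```

With `k=1` the output carries only the predicted label, so training members and non-members that receive the same prediction become indistinguishable from the output alone.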

Robust or Private? Adversarial Training Makes Models More Vulnerable to Privacy Attacks

Jun 15, 2019

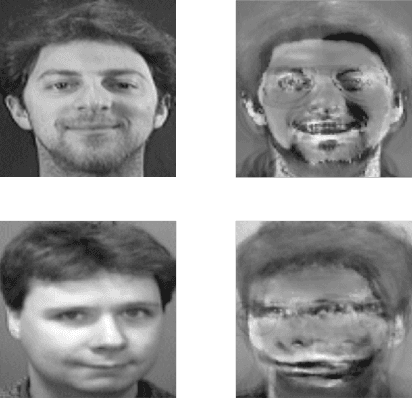

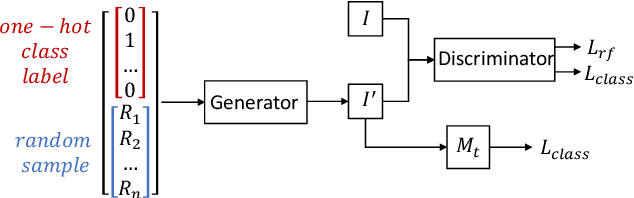

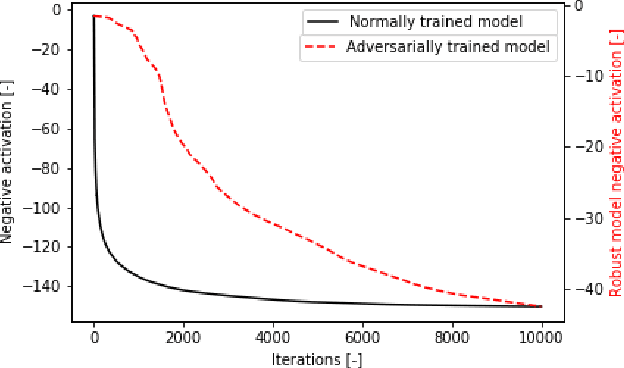

Abstract:Adversarial training was introduced as a way to improve the robustness of deep learning models to adversarial attacks. This training method improves robustness against adversarial attacks, but increases the model's vulnerability to privacy attacks. In this work we demonstrate how model inversion attacks, which extract training data directly from the model and were previously thought to be intractable, become feasible when attacking a robustly trained model. The input space for a traditionally trained model is dominated by adversarial examples (data points that strongly activate a certain class but lack semantic meaning), which makes it difficult to successfully conduct model inversion attacks. We demonstrate this effect using the CIFAR-10 dataset under three different model inversion attacks: a vanilla gradient descent method, a gradient based method at different scales, and a generative adversarial network based attack.
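The vanilla gradient method can be illustrated on a toy model: starting from a blank input, follow the gradient that increases the target class score. The sketch below uses a linear softmax classifier as a stand-in for a deep network, which is an assumption made to keep the example self-contained; all names are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max()          # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def invert_class(W, b, target, steps=200, lr=0.1):
    """Gradient ascent on log p(target | x) for a linear softmax model.

    W: (classes, features) weights, b: (classes,) biases.
    Returns the input x found after `steps` updates from an all-zero start.
    """
    x = np.zeros(W.shape[1])
    for _ in range(steps):
        p = softmax(W @ x + b)
        grad = W[target] - p @ W   # d/dx of log softmax(Wx + b)[target]
        x += lr * grad
    return x

# Toy usage: a random 3-class model over 5 features.
rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))
b = np.zeros(3)
x_inv = invert_class(W, b, target=0)
confidence = softmax(W @ x_inv + b)[0]
```

An input found this way maximizes the class score without any constraint that it resemble real data, which matches the abstract's point that such inputs often lack semantic meaning for traditionally trained models.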
