Zhongyuan Hau

Adversarial 3D Virtual Patches using Integrated Gradients

Jun 01, 2024

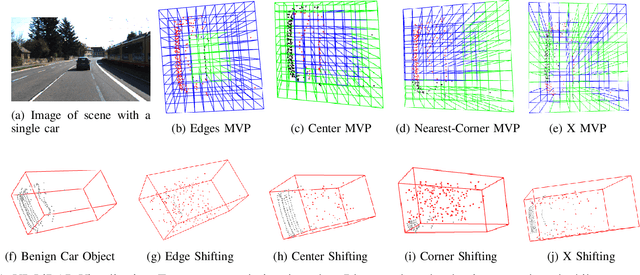

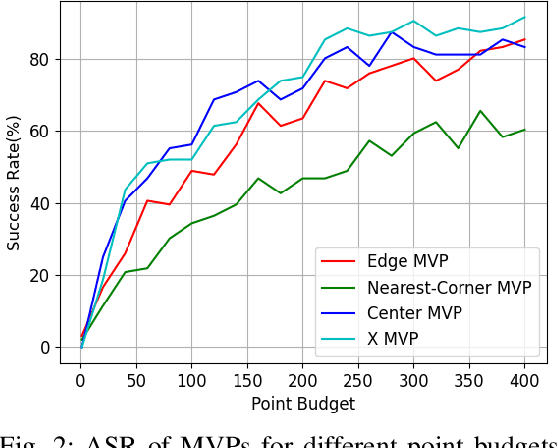

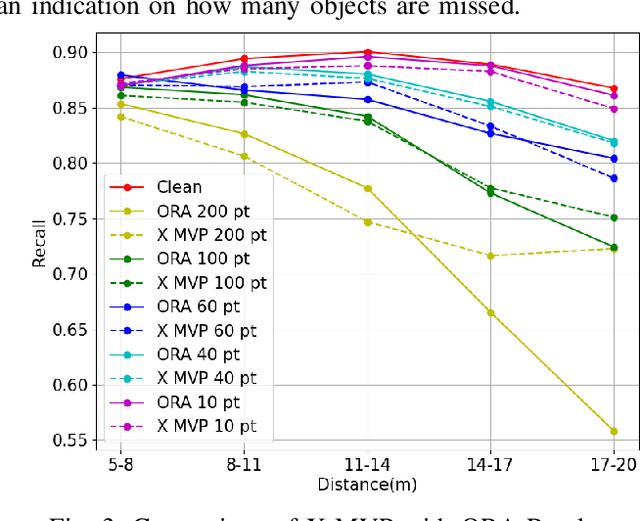

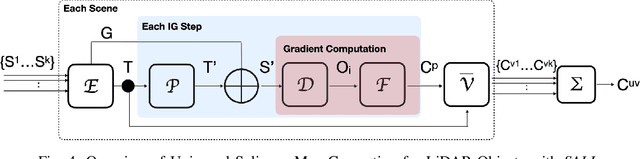

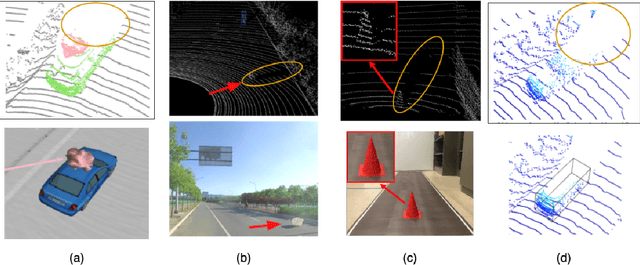

Abstract: LiDAR sensors are widely used in autonomous vehicles to better perceive the environment. However, prior works have shown that LiDAR signals can be spoofed to hide real objects from 3D object detectors. This study explores the feasibility of reducing the required spoofing area through a novel object-hiding strategy based on virtual patches (VPs). We first manually design VPs (MVPs) and show that VP-focused attacks can achieve success rates similar to prior work with only a fraction of the required spoofing area. We then design a framework, Saliency-LiDAR (SALL), which identifies critical regions for LiDAR objects using Integrated Gradients. VPs crafted on critical regions (CVPs) reduce object detection recall by at least 15% compared to our baseline, with an approximate 50% reduction in the spoofing area for vehicles of average size.
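The Integrated Gradients attribution mentioned in the abstract can be illustrated with a minimal, generic sketch. It is not the SALL implementation: the function name `integrated_gradients`, the toy gradient `toy_grad`, the all-zero baseline, and the midpoint Riemann approximation are all assumptions made for illustration. A real pipeline would obtain gradients from the 3D object detector rather than from a closed-form toy score.

```python
from typing import Callable, List, Sequence


def integrated_gradients(
    grad_fn: Callable[[Sequence[float]], Sequence[float]],
    x: Sequence[float],
    baseline: Sequence[float],
    steps: int = 50,
) -> List[float]:
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    grad_fn returns the gradient of a scalar score with respect to its input.
    The attribution for feature i is (x_i - baseline_i) times the average
    gradient along the straight path from baseline to x.
    """
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = grad_fn(point)
        for i, g in enumerate(grad):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


# Hypothetical toy score f(p) = sum(w_i * p_i^2), with gradient 2 * w_i * p_i.
WEIGHTS = [1.0, 0.5, 0.0]


def toy_grad(p: Sequence[float]) -> List[float]:
    return [2.0 * w * pi for w, pi in zip(WEIGHTS, p)]


attributions = integrated_gradients(toy_grad, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
```

For this toy score the attributions sum to the score at the input minus the score at the baseline (the completeness property of Integrated Gradients), and the feature with zero weight receives zero attribution. Ranking inputs by attribution magnitude is one plausible way to pick out critical regions.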

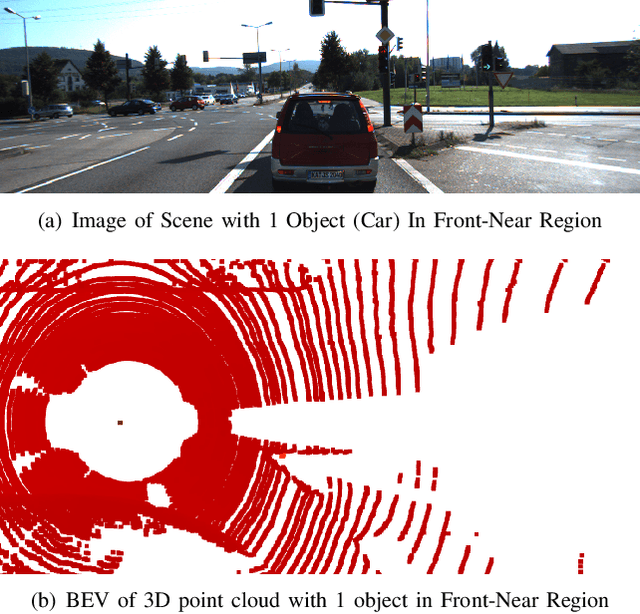

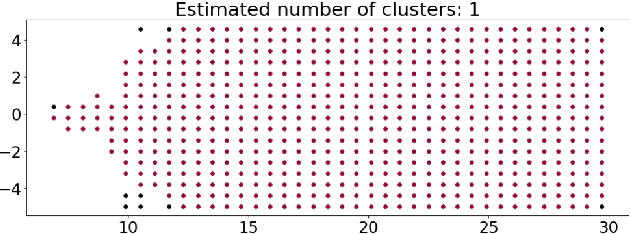

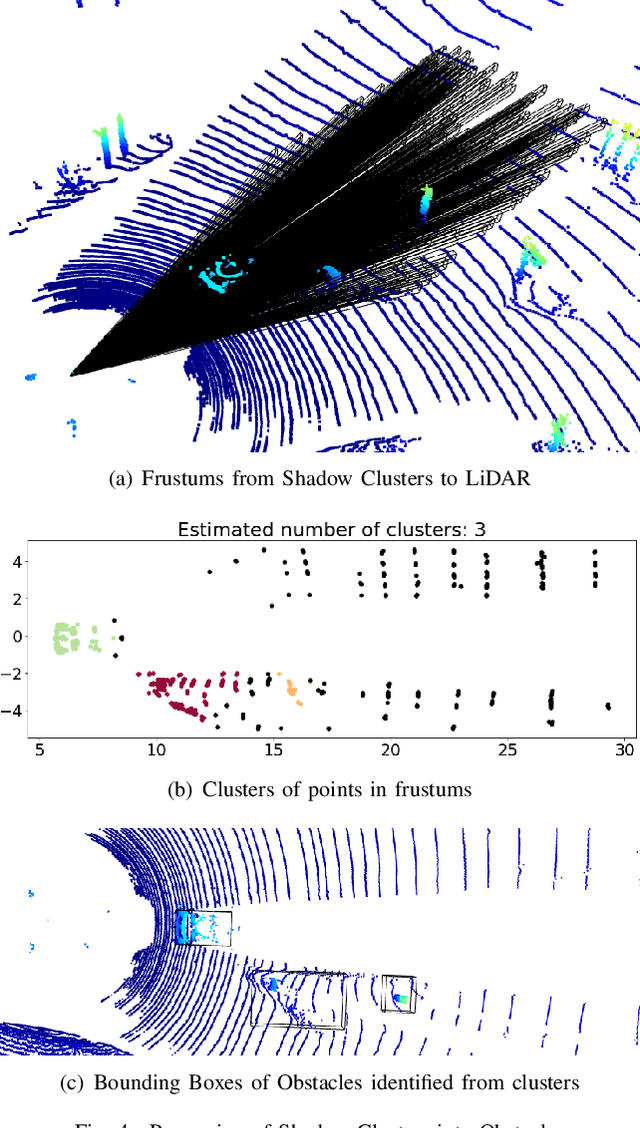

Using 3D Shadows to Detect Object Hiding Attacks on Autonomous Vehicle Perception

Apr 29, 2022

Abstract: Autonomous Vehicles (AVs) rely mostly on LiDAR sensors, which enable spatial perception of their surroundings and help make driving decisions. Recent works demonstrated attacks that aim to hide objects from AV perception, which can result in severe consequences. 3D shadows are regions void of measurements in 3D point clouds that arise from occlusions by objects in a scene. 3D shadows were proposed as a physical invariant valuable for detecting spoofed or fake objects. In this work, we leverage 3D shadows to locate obstacles that are hidden from object detectors. We achieve this by searching for void regions and locating the obstacles that cause these shadows. Our proposed methodology can be used to detect an object that has been hidden by an adversary: such objects, while hidden from 3D object detectors, still induce shadow artifacts in 3D point clouds, which we use for obstacle detection. We show that using 3D shadows for obstacle detection can achieve high accuracy in matching shadows to their objects and provide precise predictions of an obstacle's distance from the ego-vehicle.

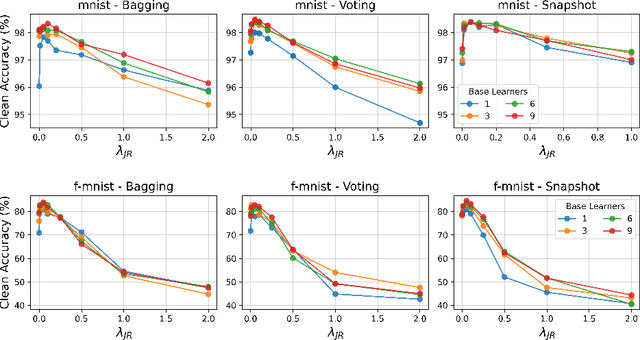

Jacobian Ensembles Improve Robustness Trade-offs to Adversarial Attacks

Apr 19, 2022

Abstract: Deep neural networks have become an integral part of our software infrastructure and are being deployed in many widely used and safety-critical applications. However, their integration into many systems also brings with it a vulnerability to test-time attacks in the form of Universal Adversarial Perturbations (UAPs). UAPs are a class of perturbations that, when applied to any input, cause model misclassification. Although there is an ongoing effort to defend models against these adversarial attacks, it is often difficult to reconcile the trade-offs between model accuracy and robustness to adversarial attacks. Jacobian regularization has been shown to improve the robustness of models against UAPs, whilst model ensembles have been widely adopted to improve both predictive performance and model robustness. In this work, we propose a novel approach, Jacobian Ensembles, a combination of Jacobian regularization and model ensembles, to significantly increase robustness against UAPs whilst maintaining or improving model accuracy. Our results show that Jacobian Ensembles achieve previously unseen levels of accuracy and robustness, greatly improving over previous methods that tend to skew towards either accuracy or robustness alone.

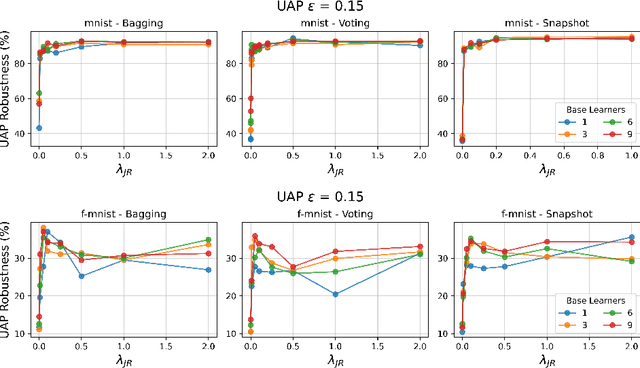

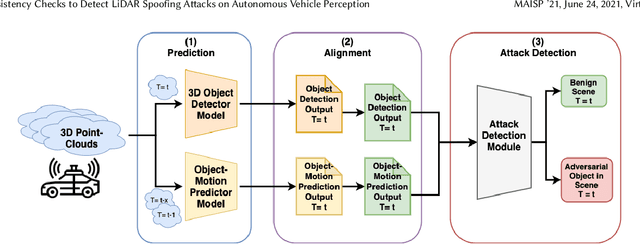

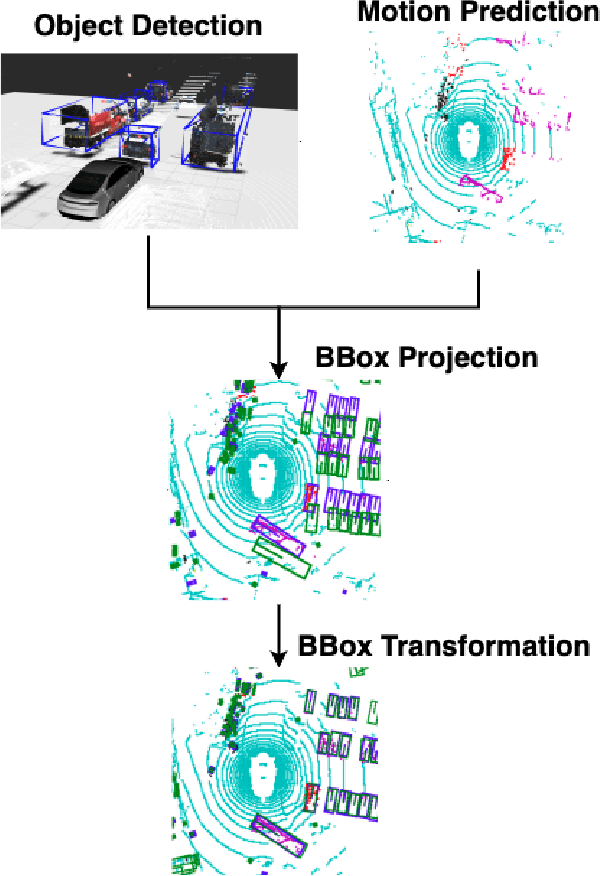

Temporal Consistency Checks to Detect LiDAR Spoofing Attacks on Autonomous Vehicle Perception

Jun 15, 2021

Abstract: LiDAR sensors are widely used in Autonomous Vehicles to better perceive the environment, which enables safer driving decisions. Recent work has demonstrated serious LiDAR spoofing attacks with alarming consequences. In particular, model-level LiDAR spoofing attacks aim to inject fake depth measurements to elicit ghost objects that are erroneously detected by 3D Object Detectors, resulting in hazardous driving decisions. In this work, we explore the use of motion as a physical invariant of genuine objects for detecting such attacks. Based on this, we propose a general methodology, 3D Temporal Consistency Check (3D-TC2), which leverages spatio-temporal information from motion prediction to verify objects detected by 3D Object Detectors. Our preliminary design and implementation of a 3D-TC2 prototype demonstrates very promising performance, providing an attack detection rate of more than 98% with a recall of 91% for detecting spoofed Vehicle (Car) objects, and achieves real-time detection at 41 Hz.
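The idea of verifying detections against motion predictions can be sketched as follows. This is only an illustration under stated assumptions, not the 3D-TC2 prototype: the constant-velocity motion model, the 2D bird's-eye positions, the names `predict` and `flag_inconsistent`, and the 1 m distance threshold are all hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Track = Tuple[Point, Point]  # (last known position, estimated velocity)


def predict(track: Track, dt: float) -> Point:
    """Constant-velocity prediction of where a tracked object should be after dt."""
    (x, y), (vx, vy) = track
    return (x + vx * dt, y + vy * dt)


def flag_inconsistent(
    detections: List[Point],
    tracks: List[Track],
    dt: float,
    max_dist: float = 1.0,
) -> List[bool]:
    """Return True for each detection with no predicted position within max_dist."""
    predicted = [predict(t, dt) for t in tracks]
    flags = []
    for det in detections:
        nearest = min((math.dist(det, p) for p in predicted), default=math.inf)
        flags.append(nearest > max_dist)
    return flags


# One tracked car moving at 5 m/s along x; the frames are 0.1 s apart.
tracks = [((10.0, 0.0), (5.0, 0.0))]
detections = [(10.6, 0.0), (20.0, 3.0)]
flags = flag_inconsistent(detections, tracks, dt=0.1)
```

Here the first detection lies close to the predicted position and is accepted, while the second has no motion history supporting it and is flagged. A practical check would also need a policy for genuine objects that newly enter the field of view, which this sketch does not handle.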

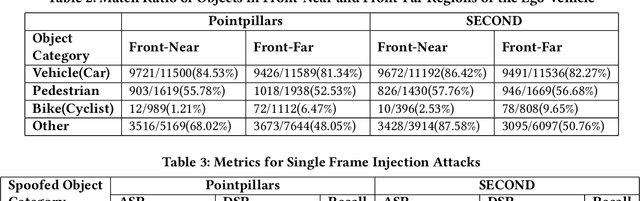

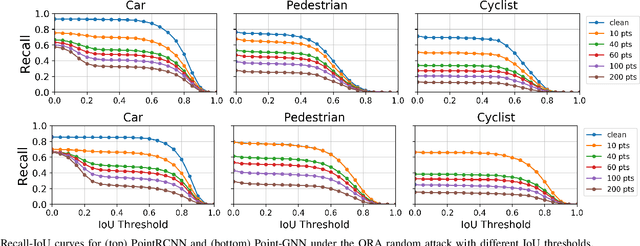

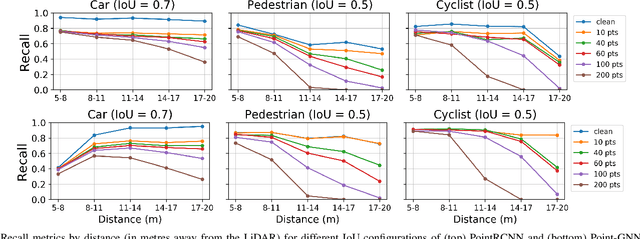

Object Removal Attacks on LiDAR-based 3D Object Detectors

Feb 07, 2021

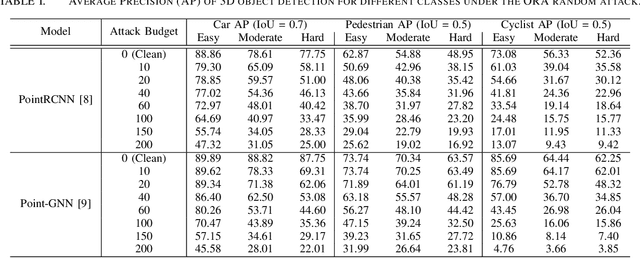

Abstract: LiDARs play a critical role in Autonomous Vehicles' (AVs) perception and safe operation. Recent works have demonstrated that it is possible to spoof LiDAR return signals to elicit fake objects. In this work, we demonstrate how the same physical capabilities can be used to mount a new, even more dangerous class of attacks, namely Object Removal Attacks (ORAs). ORAs aim to force 3D object detectors to fail. We leverage the default setting of LiDARs, which record a single return signal per direction, to perturb point clouds in the region of interest (RoI) of 3D objects. By injecting illegitimate points behind the target object, we effectively shift points away from the target object's RoI. Our initial results using a simple random point selection strategy show that the attack is effective in degrading the performance of commonly used 3D object detection models.
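The point-shifting effect described in the abstract can be simulated on a point cloud with a short sketch. The assumptions are illustrative only: the sensor sits at the origin, the RoI is an axis-aligned box, and the names `in_box` and `shift_points_behind` and the parameters `fraction` and `extra_range` are hypothetical. Because a single return is recorded per direction, a spoofed return is modelled as the same ray with a larger range.

```python
import math
import random
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def in_box(p: Sequence[float], box_min: Sequence[float], box_max: Sequence[float]) -> bool:
    """True if point p lies inside the axis-aligned box."""
    return all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))


def shift_points_behind(
    points: List[Point3],
    box_min: Sequence[float],
    box_max: Sequence[float],
    fraction: float,
    extra_range: float,
    seed: int = 0,
) -> List[Point3]:
    """Randomly select RoI points and push them farther along their own ray."""
    rng = random.Random(seed)
    shifted = []
    for p in points:
        r = math.sqrt(sum(c * c for c in p))
        if r > 0 and in_box(p, box_min, box_max) and rng.random() < fraction:
            scale = (r + extra_range) / r  # same direction, larger range
            shifted.append((p[0] * scale, p[1] * scale, p[2] * scale))
        else:
            shifted.append((p[0], p[1], p[2]))
    return shifted


cloud = [(10.0, 0.0, 0.0), (10.0, 1.0, 0.0), (30.0, 5.0, 0.0)]
attacked = shift_points_behind(
    cloud, box_min=(9.0, -2.0, -1.0), box_max=(12.0, 2.0, 1.0),
    fraction=1.0, extra_range=5.0,
)
```

The number of points is unchanged; the selected points inside the box simply move 5 m farther from the sensor along their original direction, which leaves the RoI with fewer points for the detector to use.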
