Zhiwu Xu

Abstraction and Refinement: Towards Scalable and Exact Verification of Neural Networks

Jul 02, 2022

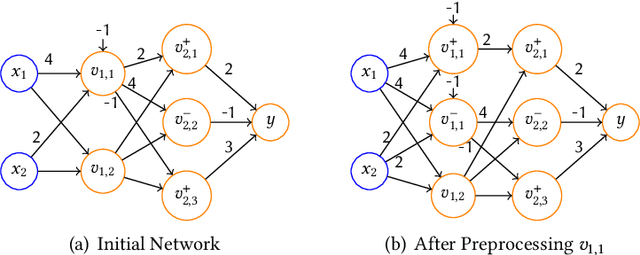

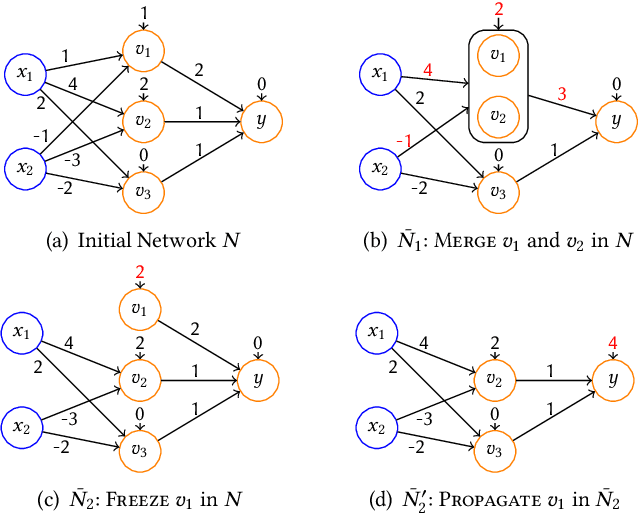

Abstract:As a new programming paradigm, deep neural networks (DNNs) have been increasingly deployed in practice, but the lack of robustness hinders their applications in safety-critical domains. While there are techniques for verifying DNNs with formal guarantees, they are limited in scalability and accuracy. In this paper, we present a novel abstraction-refinement approach for scalable and exact DNN verification. Specifically, we propose a novel abstraction to break down the size of DNNs by over-approximation. The result of verifying the abstract DNN is always conclusive if no spurious counterexample is reported. To eliminate spurious counterexamples introduced by abstraction, we propose a novel counterexample-guided refinement that refines the abstract DNN to exclude a given spurious counterexample while still over-approximating the original one. Our approach is orthogonal to and can be integrated with many existing verification techniques. For demonstration, we implement our approach using two promising and exact tools Marabou and Planet as the underlying verification engines, and evaluate on widely-used benchmarks ACAS Xu, MNIST and CIFAR-10. The results show that our approach can boost their performance by solving more problems and reducing up to 86.3% and 78.0% verification time, respectively. Compared to the most relevant abstraction-refinement approach, our approach is 11.6-26.6 times faster.

Toward `verifying' a Water Treatment System

May 10, 2018

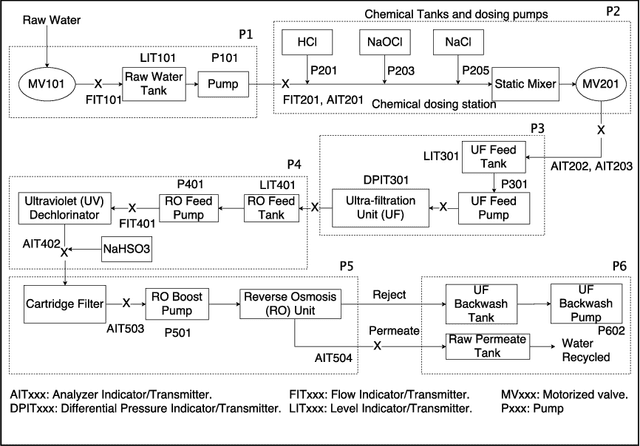

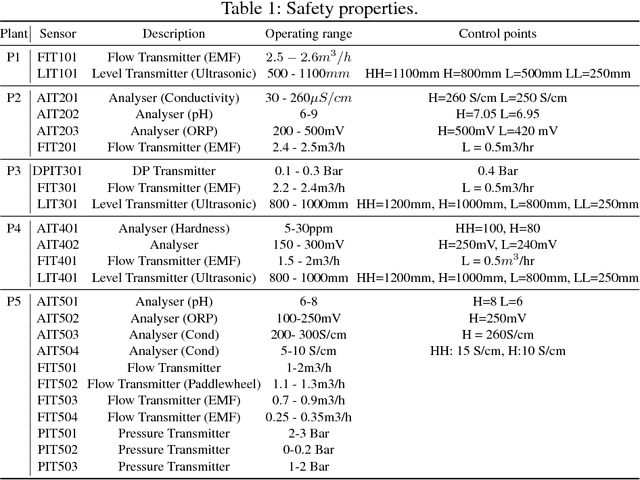

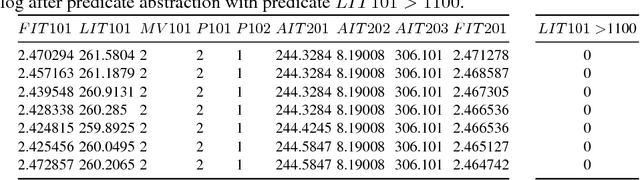

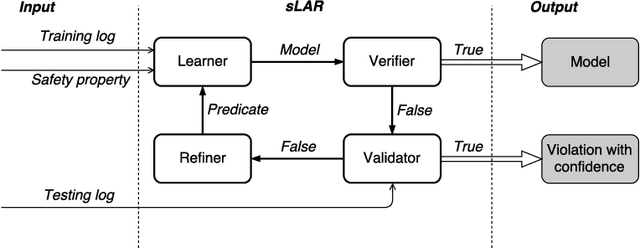

Abstract:Modeling and verifying real-world cyber-physical systems is challenging, which is especially so for complex systems where manually modeling is infeasible. In this work, we report our experience on combining model learning and abstraction refinement to analyze a challenging system, i.e., a real-world Secure Water Treatment system (SWaT). Given a set of safety requirements, the objective is to either show that the system is safe with a high probability (so that a system shutdown is rarely triggered due to safety violation) or not. As the system is too complicated to be manually modeled, we apply latest automatic model learning techniques to construct a set of Markov chains through abstraction and refinement, based on two long system execution logs (one for training and the other for testing). For each probabilistic safety property, we either report it does not hold with a certain level of probabilistic confidence, or report that it holds by showing the evidence in the form of an abstract Markov chain. The Markov chains can subsequently be implemented as runtime monitors in SWaT.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge