Zhiren Xun

CREPES-X: Hierarchical Bearing-Distance-Inertial Direct Cooperative Relative Pose Estimation System

Dec 31, 2025

Abstract: Relative localization is critical for cooperation in autonomous multi-robot systems. Existing approaches either rely on shared environmental features or inertial assumptions, or suffer from non-line-of-sight degradation and outliers in complex environments. Robust and efficient fusion of inter-robot measurements such as bearings, distances, and inertial measurements for tens of robots remains challenging. We present CREPES-X (Cooperative RElative Pose Estimation System with multiple eXtended features), a hierarchical relative localization framework that enhances speed, accuracy, and robustness under challenging conditions, without requiring any global information. CREPES-X starts with a compact hardware design: InfraRed (IR) LEDs, an IR camera, an ultra-wideband module, and an IMU housed in a cube no larger than 6 cm on each side. CREPES-X then implements a two-stage hierarchical estimator to meet different requirements on speed, accuracy, and robustness. First, we propose a single-frame relative estimator that provides instant relative poses for multi-robot setups through a closed-form solution and robust bearing outlier rejection. Then a multi-frame relative estimator is designed to offer accurate and robust relative states by exploring IMU pre-integration via robocentric relative kinematics with loosely- and tightly-coupled optimization. Extensive simulations and real-world experiments validate the effectiveness of CREPES-X, showing robustness to up to 90% bearing outliers, proving resilience in challenging conditions, and achieving RMSE of 0.073 m and 1.817° in real-world datasets.

CREPES: Cooperative RElative Pose EStimation towards Real-World Multi-Robot Systems

Feb 02, 2023

Abstract: Mutual localization plays a crucial role in multi-robot systems. In this work, we propose a novel system to estimate the 3D relative pose, targeting real-world applications. We design and implement a compact hardware module using active infrared (IR) LEDs, an IR fish-eye camera, an ultra-wideband (UWB) module, and an inertial measurement unit (IMU). By leveraging IR light communication, the system solves data association between visual detection and UWB ranging. Ranging measurements from the UWB and directional information from the camera together yield a relative 3D position estimate. Combining the mutual relative position with neighbors and the gravity constraints provided by IMUs, we can estimate the 3D relative pose from every single frame of sensor fusion. In addition, we design an estimator based on the error-state Kalman filter (ESKF) to enhance system accuracy and robustness. When multiple neighbors are available, a Pose Graph Optimization (PGO) algorithm is applied to further improve system accuracy. We conduct experiments in various environments, and the results show that our system outperforms state-of-the-art methods in accuracy and robustness, especially in challenging environments.
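As a rough illustration of how a range and a direction combine into a relative 3D position, the sketch below scales a unit bearing vector by a measured distance. It is not the authors' implementation: the function name `relative_position`, the tuple representation, and the sample values are all assumptions made for illustration, and sensor noise, calibration, and frame conventions are ignored.

```python
import math

def relative_position(bearing, distance):
    """Scale a bearing direction by a range to get a 3D relative position.

    `bearing` is any non-zero 3D direction (hypothetical camera output);
    `distance` is a range in meters (hypothetical UWB output).
    """
    norm = math.sqrt(sum(c * c for c in bearing))
    if norm == 0.0:
        raise ValueError("bearing must be a non-zero vector")
    # Normalize the direction, then scale it by the measured range.
    return tuple(distance * c / norm for c in bearing)

# Hypothetical measurement: direction (1, 2, 2), range 6 m.
position = relative_position((1.0, 2.0, 2.0), 6.0)
print(position)
```

The result is expressed in the observer's sensor frame. Recovering the full relative pose additionally needs the orientation information that the abstract attributes to mutual observations and IMU gravity constraints.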

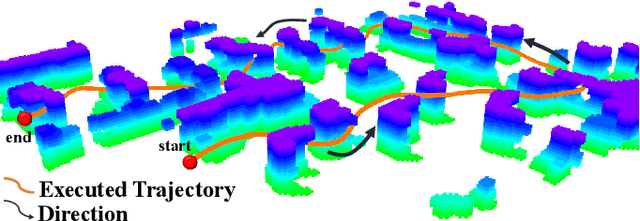

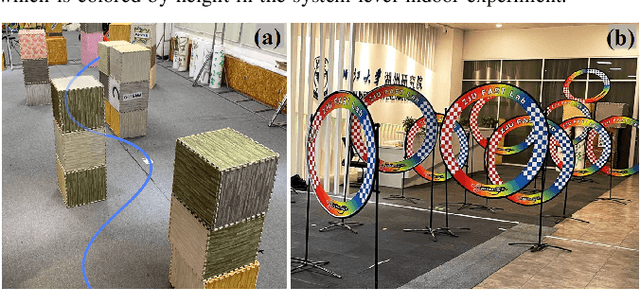

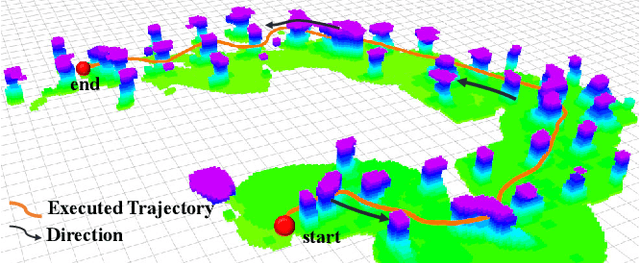

Dynamic Free-Space Roadmap for Safe Quadrotor Motion Planning

Apr 20, 2022

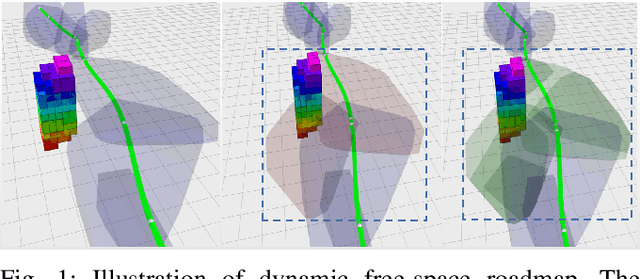

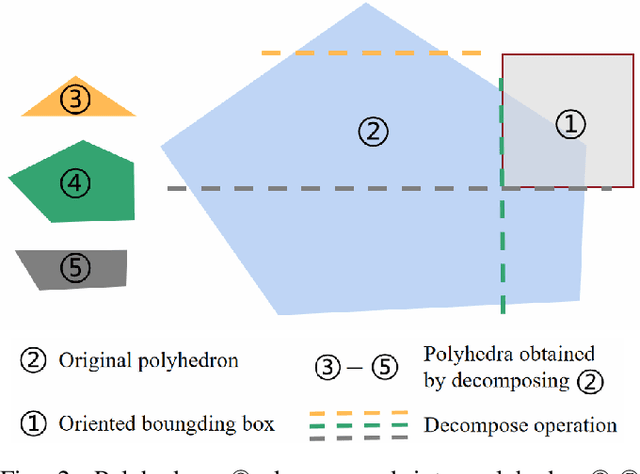

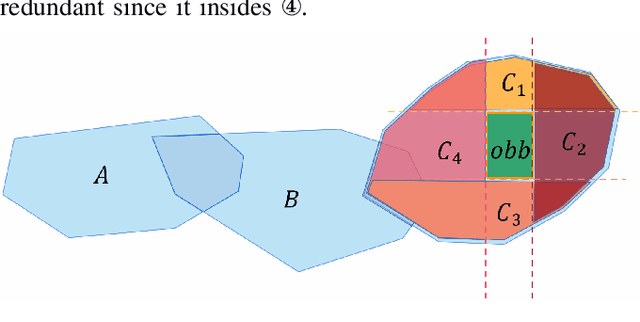

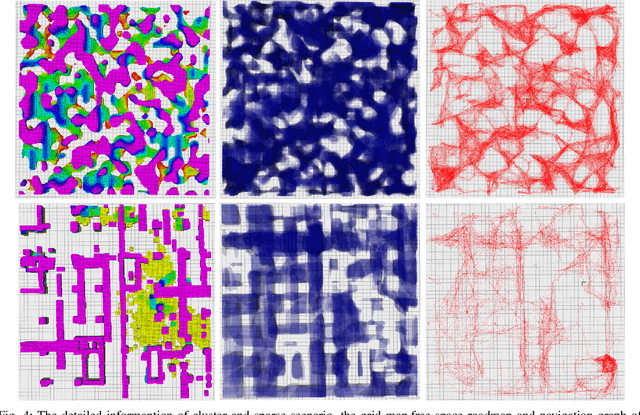

Abstract: Free-space-oriented roadmaps typically generate a series of convex geometric primitives, which constitute the safe region for motion planning. However, a static environment is assumed for this kind of roadmap. This assumption makes it unable to deal with dynamic obstacles and limits its applications. In this paper, we present a dynamic free-space roadmap, which provides feasible spaces and a navigation graph for safe quadrotor motion planning. Our roadmap is constructed by continuously seeding and extracting free regions in the environment. In order to adapt our map to environments with dynamic obstacles, we incrementally decompose the polyhedra intersecting with obstacles into obstacle-free regions, while the graph is also updated by our well-designed mechanism. Extensive simulations and real-world experiments demonstrate that our method is practically applicable and efficient.

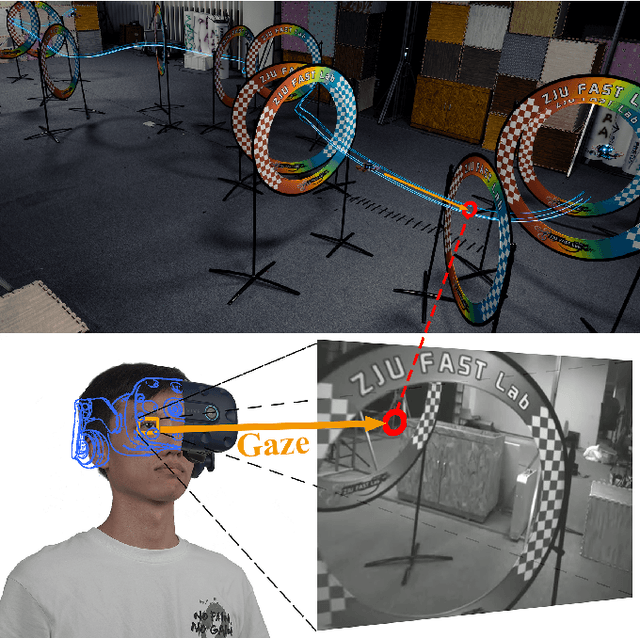

GPA-Teleoperation: Gaze Enhanced Perception-aware Safe Assistive Aerial Teleoperation

Sep 10, 2021

Abstract: Gaze is an intuitive and direct way to represent the intentions of an individual. However, in assistive aerial teleoperation, which aims to carry out the operator's intention, little attention has been paid to gaze. Existing methods obtain intention directly from the remote controller (RC) input, which is inaccurate, unstable, and unfriendly to non-professional operators. Further, most teleoperation works do not consider environment perception, which is vital to guarantee safety. In this paper, we present GPA-Teleoperation, a gaze-enhanced, perception-aware assistive teleoperation framework, which addresses the above issues systematically. We capture the intention using gaze information and generate a topological path matching it. Then we refine the path into a safe and feasible trajectory, which simultaneously enhances perception awareness of the parts of the environment the operator is interested in. Additionally, the proposed method is integrated into a customized quadrotor system. Extensive challenging indoor and outdoor real-world experiments and benchmark comparisons verify that the proposed system is reliable, robust, and applicable even to unskilled users. We will release the source code of our system to benefit related research.
