Zhiqing Zhu

DR-RAG: Applying Dynamic Document Relevance to Retrieval-Augmented Generation for Question-Answering

Jun 12, 2024

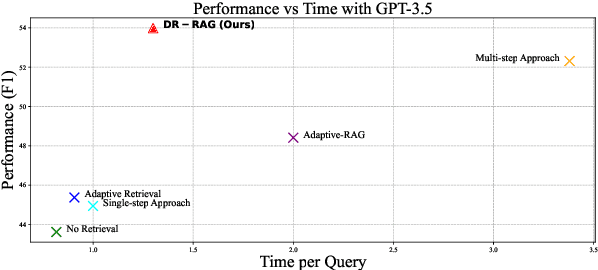

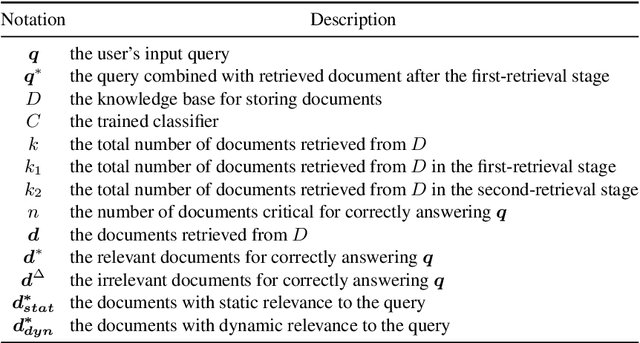

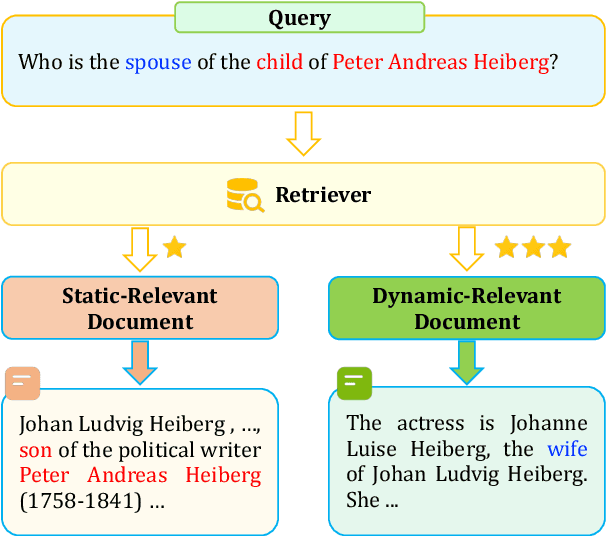

Abstract:Retrieval-Augmented Generation (RAG) has significantly demonstrated the performance of Large Language Models (LLMs) in the knowledge-intensive tasks, such as Question-Answering (QA). RAG expands the query context by incorporating external knowledge bases to enhance the response accuracy. However, it would be inefficient to access LLMs multiple times for each query and unreliable to retrieve all the relevant documents by a single query. We find that even though there is low relevance between some critical documents and query, it is possible to retrieve the remaining documents by combining parts of the documents with the query. To mine the relevance, a two-stage retrieval framework called Dynamic-Relevant Retrieval-Augmented Generation (DR-RAG) is proposed to improve document retrieval recall and the accuracy of answers while maintaining efficiency. Also, a small classifier is applied to two different selection strategies to determine the contribution of the retrieved documents to answering the query and retrieve the relatively relevant documents. Meanwhile, DR-RAG call the LLMs only once, which significantly improves the efficiency of the experiment. The experimental results on multi-hop QA datasets show that DR-RAG can significantly improve the accuracy of the answers and achieve new progress in QA systems.

RS-MetaNet: Deep meta metric learning for few-shot remote sensing scene classification

Sep 28, 2020

Abstract:Training a modern deep neural network on massive labeled samples is the main paradigm in solving the scene classification problem for remote sensing, but learning from only a few data points remains a challenge. Existing methods for few-shot remote sensing scene classification are performed in a sample-level manner, resulting in easy overfitting of learned features to individual samples and inadequate generalization of learned category segmentation surfaces. To solve this problem, learning should be organized at the task level rather than the sample level. Learning on tasks sampled from a task family can help tune learning algorithms to perform well on new tasks sampled in that family. Therefore, we propose a simple but effective method, called RS-MetaNet, to resolve the issues related to few-shot remote sensing scene classification in the real world. On the one hand, RS-MetaNet raises the level of learning from the sample to the task by organizing training in a meta way, and it learns to learn a metric space that can well classify remote sensing scenes from a series of tasks. We also propose a new loss function, called Balance Loss, which maximizes the generalization ability of the model to new samples by maximizing the distance between different categories, providing the scenes in different categories with better linear segmentation planes while ensuring model fit. The experimental results on three open and challenging remote sensing datasets, UCMerced\_LandUse, NWPU-RESISC45, and Aerial Image Data, demonstrate that our proposed RS-MetaNet method achieves state-of-the-art results in cases where there are only 1-20 labeled samples.

* 13 pages, 11 figures

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge