Zhenghao Zhou

Deep-Learning-based Frequency-Domain Watermarking for Energy System Time Series Data Asset Protection

Nov 11, 2025

Abstract:Data has been regarded as a valuable asset with the fast development of artificial intelligence technologies. In this paper, we introduce deep-learning neural network-based frequency-domain watermarking for protecting energy system time series data assets and securing data authenticity when the data are shared or traded across communities. First, the concept and desired watermarking characteristics are introduced. Second, a deep-learning neural network-based watermarking model with specially designed loss functions and network structure is proposed to embed watermarks into the original dataset. Third, a frequency-domain data preprocessing method is proposed to eliminate the frequency bias of neural networks when learning time series datasets and thereby enhance model performance. Last, a comprehensive watermarking performance evaluation framework is designed for measuring invisibility, restorability, robustness, secrecy, false-positive detection, generalization, and capacity. Case studies based on practical load and photovoltaic time series datasets demonstrate the effectiveness of the proposed method.

A Causal-Guided Multimodal Large Language Model for Generalized Power System Time-Series Data Analytics

Nov 11, 2025

Abstract:Power system time series analytics is critical for understanding system operating conditions and predicting future trends. Despite the wide adoption of Artificial Intelligence (AI) tools, many AI-based time series analytical models suffer from task-specificity (i.e. one model for one task) and structural rigidity (i.e. the input-output format is fixed), leading to limited model performance and wasted resources. In this paper, we propose a Causal-Guided Multimodal Large Language Model (CM-LLM) that can solve heterogeneous power system time-series analysis tasks. First, we introduce a physics-statistics combined causal discovery mechanism to capture the causal relationships among power system variables, which are represented as a graph. Second, we propose a multimodal data preprocessing framework that can encode and fuse text, graph and time series to enhance the model performance. Last, we formulate a generic "mask-and-reconstruct" paradigm and design a dynamic input-output padding mechanism to make CM-LLM adaptive to heterogeneous time-series analysis tasks with varying sample lengths. Simulation results based on the open-source LLM Qwen and a real-world dataset demonstrate that, after simple fine-tuning, the proposed CM-LLM can achieve satisfactory accuracy and efficiency on three heterogeneous time-series analytics tasks: missing data imputation, forecasting and super resolution.
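The abstract does not spell out how the "mask-and-reconstruct" paradigm lays out its inputs, so the following is only a minimal sketch of one way the three named tasks could share a single format. The function names, the `MASK` and `PAD` markers, and the right-padding scheme are all assumptions made for illustration, not the paper's implementation.

```python
from typing import List, Optional, Sequence

MASK = None   # hypothetical marker: a value the model is asked to reconstruct
PAD = "PAD"   # hypothetical marker: filler that the model should ignore


def imputation_task(series: Sequence[float], missing: Sequence[int]) -> List[Optional[float]]:
    """Mask the positions whose measurements are missing."""
    gaps = set(missing)
    return [MASK if i in gaps else x for i, x in enumerate(series)]


def forecasting_task(history: Sequence[float], horizon: int) -> List[Optional[float]]:
    """Append `horizon` masked positions after the observed history."""
    return list(history) + [MASK] * horizon


def super_resolution_task(coarse: Sequence[float], factor: int) -> List[Optional[float]]:
    """Insert `factor - 1` masked positions after every coarse sample."""
    out: List[Optional[float]] = []
    for x in coarse:
        out.append(x)
        out.extend([MASK] * (factor - 1))
    return out


def pad_batch(samples: Sequence[list]) -> List[list]:
    """Right-pad samples of different lengths to the longest one."""
    width = max(len(s) for s in samples)
    return [list(s) + [PAD] * (width - len(s)) for s in samples]


if __name__ == "__main__":
    batch = pad_batch([
        imputation_task([1.0, 2.0, 3.0], missing=[1]),
        forecasting_task([1.0, 2.0], horizon=2),
        super_resolution_task([1.0, 2.0], factor=3),
    ])
    for row in batch:
        print(row)
```

Under these assumptions, each task differs only in where the masked positions sit, which is what would let one model handle all three after padding to a common length.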

Meaning Beyond Truth Conditions: Evaluating Discourse Level Understanding via Anaphora Accessibility

Feb 19, 2025

Abstract:We present a hierarchy of natural language understanding abilities and argue for the importance of moving beyond assessments of understanding at the lexical and sentence levels to the discourse level. We propose the task of anaphora accessibility as a diagnostic for assessing discourse understanding, and to this end, present an evaluation dataset inspired by theoretical research in dynamic semantics. We evaluate human and LLM performance on our dataset and find that LLMs and humans align on some tasks and diverge on others. Such divergence can be explained by LLMs' reliance on specific lexical items during language comprehension, in contrast to human sensitivity to structural abstractions.

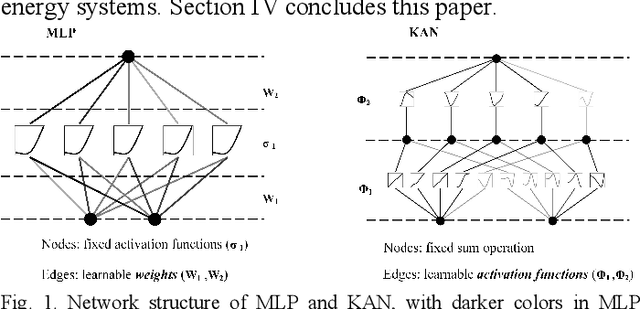

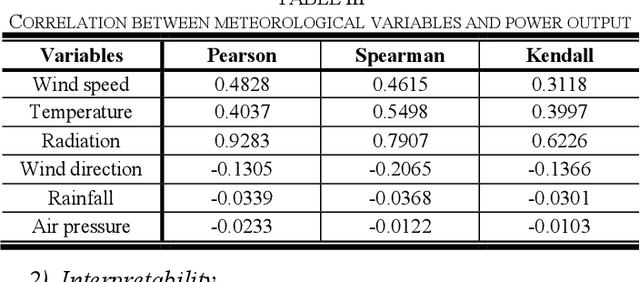

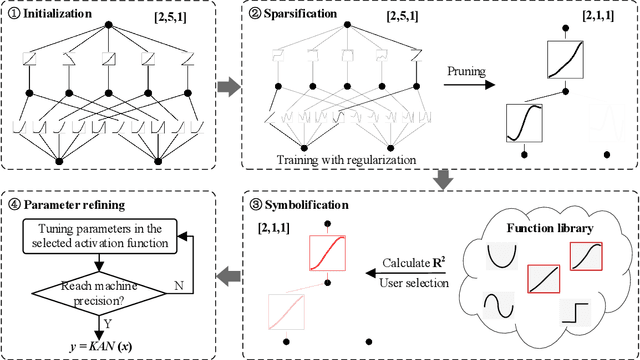

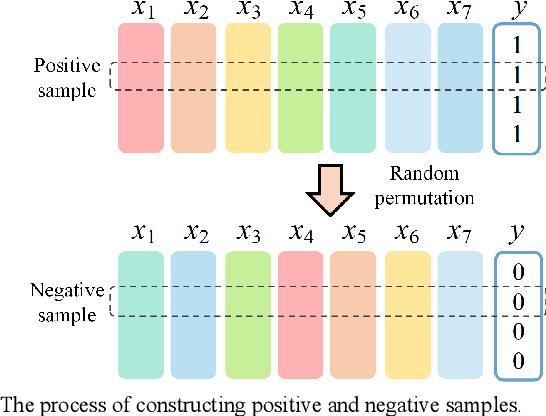

A White-Box Deep-Learning Method for Electrical Energy System Modeling Based on Kolmogorov-Arnold Network

Sep 12, 2024

Abstract:Deep learning methods have been widely used as an end-to-end modeling strategy for electrical energy systems because of their convenience and powerful pattern recognition capability. However, due to their "black-box" nature, deep learning methods have long been criticized for poor interpretability when modeling a physical system. In this paper, we introduce a novel neural network structure, Kolmogorov-Arnold Network (KAN), to achieve "white-box" modeling for electrical energy systems and enhance interpretability. The most distinct feature of KAN lies in the learnable activation function together with the sparse training and symbolification process. Consequently, KAN can express the physical process with concise and explicit mathematical formulas while retaining the nonlinear-fitting capability of deep neural networks. Simulation results based on three electrical energy systems demonstrate the effectiveness of KAN in terms of interpretability, accuracy, robustness and generalization ability.

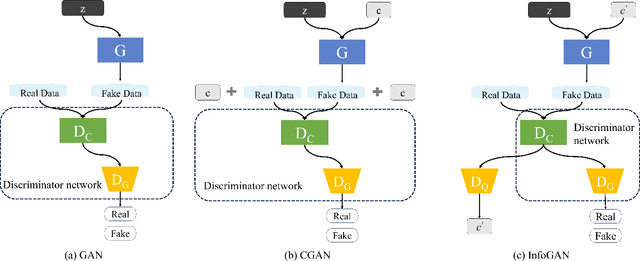

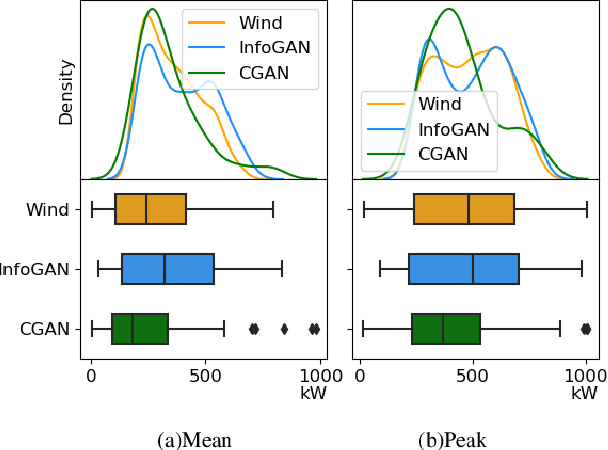

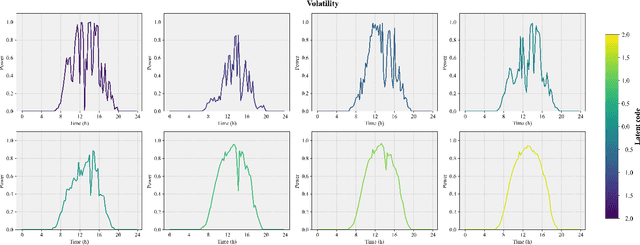

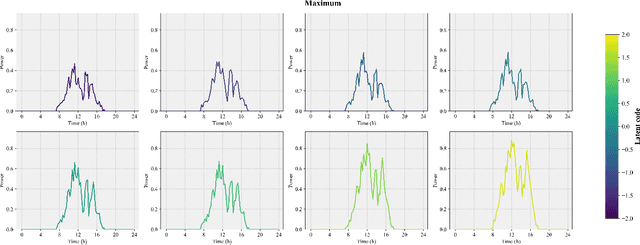

Unsupervised and Interpretable Synthesizing for Electrical Time Series Based on Information Maximizing Generative Adversarial Nets

Jul 18, 2024

Abstract:Generating synthetic data has become a popular alternative solution to deal with the difficulties in accessing and sharing field measurement data in power systems. However, to make the generation results controllable, existing methods (e.g. Conditional Generative Adversarial Nets, cGAN) require a labeled dataset to train the model, which is demanding in practice because much field measurement data lacks descriptive labels. In this paper, we introduce the Information Maximizing Generative Adversarial Nets (infoGAN) to achieve interpretable feature extraction and controllable synthetic data generation based on the unlabeled electrical time series dataset. Features with clear physical meanings can be automatically extracted by maximizing the mutual information between the input latent code and the classifier output of infoGAN. The extracted features are then used to control the generation results, similar to a vanilla cGAN framework. The case study is based on time series datasets of power load and renewable energy output. Results demonstrate that infoGAN can extract both discrete and continuous features with clear physical meanings and generate realistic synthetic time series that satisfy given features.

Is In-Context Learning a Type of Gradient-Based Learning? Evidence from the Inverse Frequency Effect in Structural Priming

Jun 26, 2024

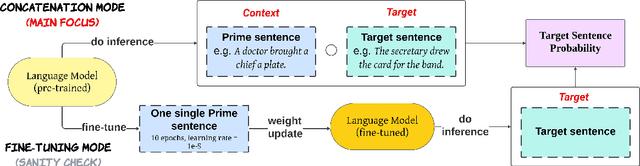

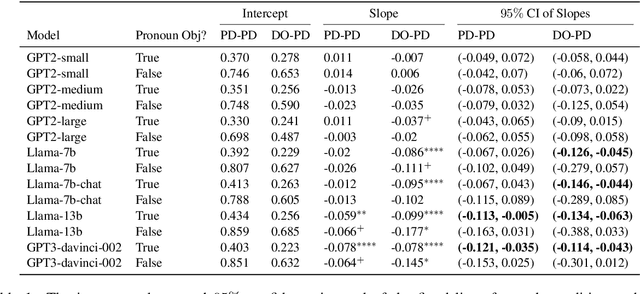

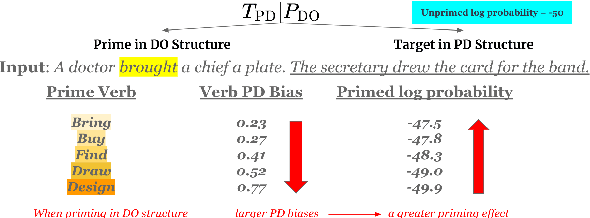

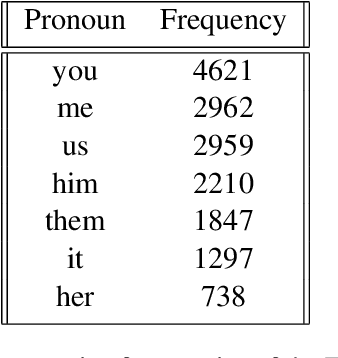

Abstract:Large language models (LLMs) have shown the emergent capability of in-context learning (ICL). One line of research has explained ICL as functionally performing gradient descent. In this paper, we introduce a new way of diagnosing whether ICL is functionally equivalent to gradient-based learning. Our approach is based on the inverse frequency effect (IFE) -- a phenomenon in which an error-driven learner is expected to show larger updates when trained on infrequent examples than frequent ones. The IFE has previously been studied in psycholinguistics because humans show this effect in the context of structural priming (the tendency for people to produce sentence structures they have encountered recently); the IFE has been used as evidence that human structural priming must involve error-driven learning mechanisms. In our experiments, we simulated structural priming within ICL and found that LLMs display the IFE, with the effect being stronger in larger models. We conclude that ICL is indeed a type of gradient-based learning, supporting the hypothesis that a gradient component is implicitly computed in the forward pass during ICL. Our results suggest that both humans and LLMs make use of gradient-based, error-driven processing mechanisms.
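The inverse frequency effect described in the abstract can be illustrated with a toy error-driven learner. This is only a sketch under stated assumptions: a single logit trained by one gradient step on the log-likelihood of the observed structure. The `prime_update` function and the learning rate are hypothetical and are not the experimental setup of the paper.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def prime_update(p_before: float, lr: float = 1.0) -> float:
    """Probability of a structure after one exposure to it.

    The learner holds one logit w with p = sigmoid(w). The gradient of
    log p with respect to w is (1 - p), so the step size grows as the
    structure becomes less expected.
    """
    w = logit(p_before)
    w += lr * (1.0 - p_before)  # one gradient-ascent step on log-likelihood
    return sigmoid(w)


if __name__ == "__main__":
    for p in (0.1, 0.5, 0.9):
        shift = prime_update(p) - p
        print(f"prior probability {p:.1f} -> shift toward primed structure {shift:.4f}")
```

In this sketch the rarely expected structure (prior 0.1) receives a larger shift than the frequently expected one (prior 0.9), which is the qualitative pattern the abstract uses as a diagnostic for error-driven learning.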
