Zhendong Xue

RFUniverse: A Physics-based Action-centric Interactive Environment for Everyday Household Tasks

Feb 01, 2022

Abstract: Household environments are important testbeds for embodied AI research. Many simulation environments have been proposed to develop learning models for solving everyday household tasks. However, although most environments pay attention to interactions, the actions that operate on objects are not well supported in terms of action types, object types, and interaction physics. To bridge the gap at the action level, we propose a novel physics-based action-centric environment, RFUniverse, for robot learning of everyday household tasks. RFUniverse supports interactions among 87 atomic actions and 8 basic object types in a visually and physically plausible way. To demonstrate the usability of the simulation environment, we apply learning algorithms to various types of tasks, namely fruit-picking, cloth-folding and sponge-wiping for manipulation, stair-chasing for locomotion, room-cleaning for multi-agent collaboration, milk-pouring for task and motion planning, and bimanual-lifting for behavior cloning from a VR interface. Client-side Python APIs, learning code, models, and the database will be released. A demo video of the atomic actions can be found in the supplementary materials: https://sites.google.com/view/rfuniverse

H2O: A Benchmark for Visual Human-human Object Handover Analysis

Apr 23, 2021

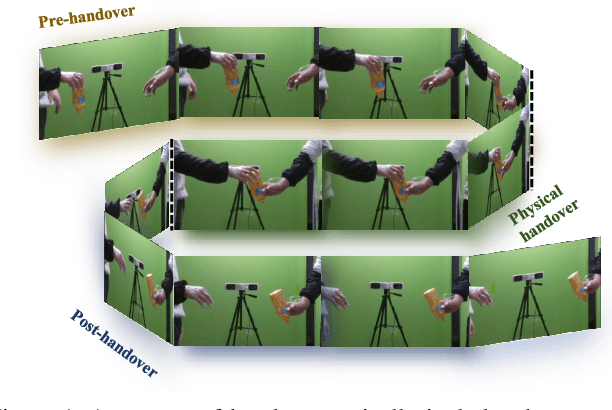

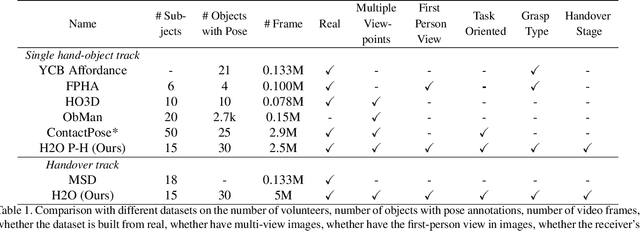

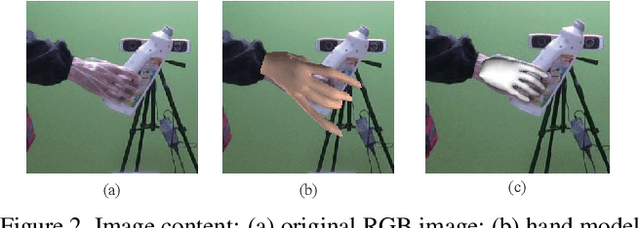

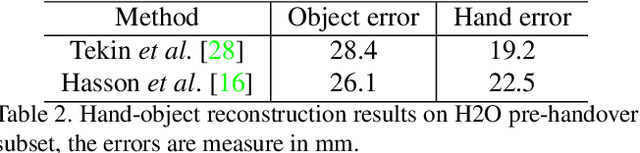

Abstract: Object handover is a common human collaboration behavior that attracts attention from researchers in Robotics and Cognitive Science. Though visual perception plays an important role in the object handover task, the whole handover process has rarely been explored. In this work, we propose a novel richly annotated dataset, H2O, for visual analysis of human-human object handovers. H2O, which contains 18K video clips involving 15 people who hand over 30 objects to each other, is a multi-purpose benchmark. It can support several vision-based tasks, among which we provide a baseline method, RGPNet, for a less-explored task named Receiver Grasp Prediction. Extensive experiments show that RGPNet can produce plausible grasps based on the giver's hand-object states in the pre-handover phase. We also report the hand and object pose errors with existing baselines and show that the dataset can serve as video demonstrations for robot imitation learning on the handover task. Dataset, model and code will be made public.
