Zexi Hu

Texture-enhanced Light Field Super-resolution with Spatio-Angular Decomposition Kernels

Nov 07, 2021

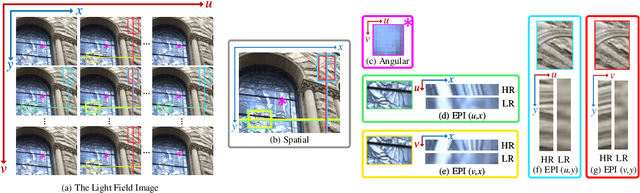

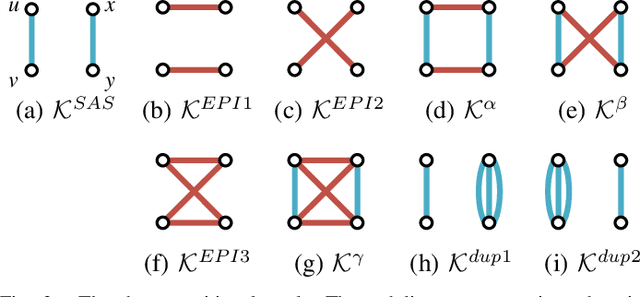

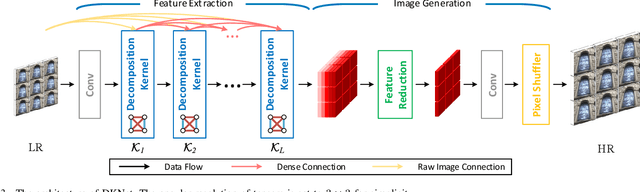

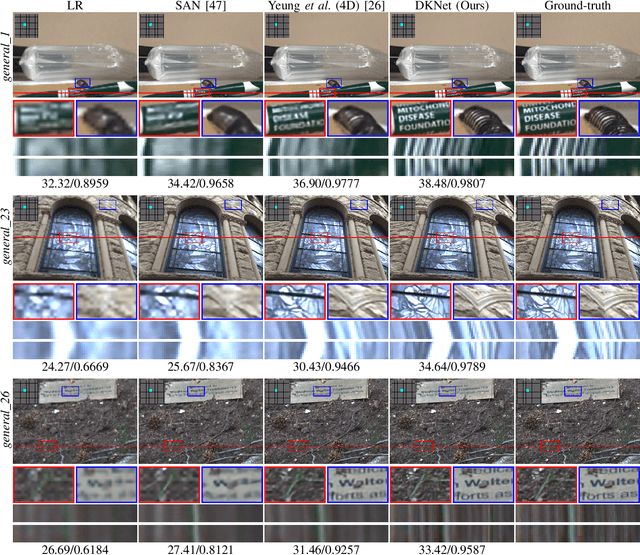

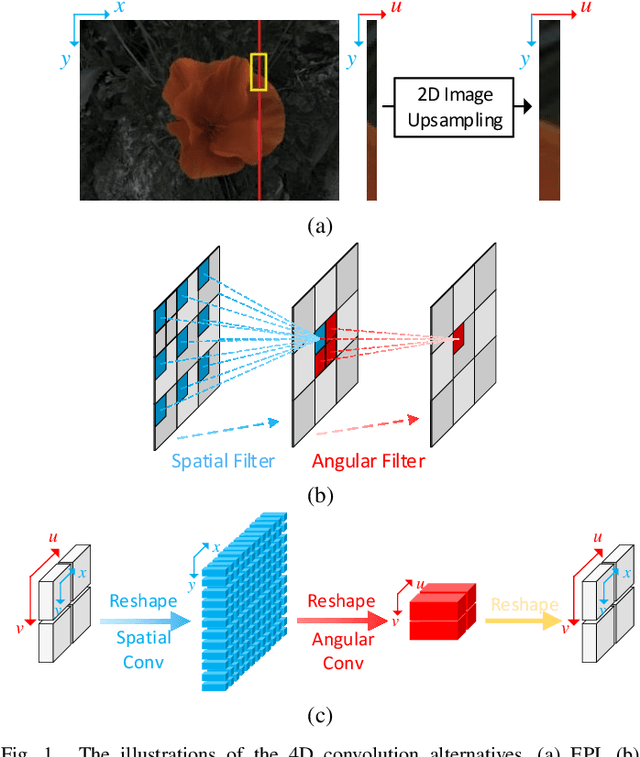

Abstract: Despite the recent progress in light field super-resolution (LFSR) achieved by convolutional neural networks, the correlation information of light field (LF) images has not been sufficiently studied or exploited because of the complexity of 4D LF data. To cope with such high-dimensional data, most existing LFSR methods decompose it into lower dimensions and then perform optimization on the decomposed sub-spaces. However, these methods are inherently limited: they neglect the characteristics of the decomposition operations and use only a limited set of LF sub-spaces, so they fail to comprehensively extract spatio-angular features, which leads to a performance bottleneck. To overcome these limitations, we thoroughly explore the potential of LF decomposition and propose the novel concept of decomposition kernels. In particular, we systematically unify the decomposition operations of various sub-spaces into a series of such decomposition kernels, which are incorporated into our proposed Decomposition Kernel Network (DKNet) for comprehensive spatio-angular feature extraction. Experiments show that DKNet achieves substantial PSNR improvements of 1.35 dB, 0.83 dB, and 1.80 dB at the 2x, 3x, and 4x LFSR scales, respectively, compared with state-of-the-art methods. To further improve the visual quality of DKNet's results, we propose an LFVGG loss, based on the VGG network, that guides the Texture-Enhanced DKNet (TE-DKNet) to generate rich, authentic textures and significantly enhance the visual quality of LF images. We also propose an indirect evaluation metric that uses LF material recognition to objectively assess the perceptual enhancement brought by the LFVGG loss.
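The idea of decomposing 4D LF data into lower-dimensional sub-spaces can be illustrated with a minimal sketch. This is not the DKNet decomposition kernels themselves; it only shows three commonly used 2D slices of a toy 4D light field. The axis order `lf[a_row][a_col][y][x]` (two angular axes, then two spatial axes) and all function names are assumptions made for illustration.

```python
def make_toy_light_field(a_rows, a_cols, height, width):
    """Build a toy 4D light field whose 'pixels' record their own index."""
    return [[[[(ar, ac, y, x) for x in range(width)]
              for y in range(height)]
             for ac in range(a_cols)]
            for ar in range(a_rows)]


def spatial_slice(lf, a_row, a_col):
    """Fix both angular coordinates: one sub-aperture view (height x width)."""
    return lf[a_row][a_col]


def angular_slice(lf, y, x):
    """Fix both spatial coordinates: one angular patch (a_rows x a_cols)."""
    return [[lf[ar][ac][y][x] for ac in range(len(lf[0]))]
            for ar in range(len(lf))]


def horizontal_epi(lf, a_row, y):
    """Fix the vertical angular and vertical spatial coordinates:
    one horizontal epipolar-plane image (a_cols x width)."""
    return [lf[a_row][ac][y] for ac in range(len(lf[0]))]


# Hypothetical sizes: 2x3 views, each 4x5 pixels.
toy = make_toy_light_field(2, 3, 4, 5)
view = spatial_slice(toy, 1, 2)      # 4 x 5
patch = angular_slice(toy, 3, 4)     # 2 x 3
epi = horizontal_epi(toy, 1, 3)      # 3 x 5
```

Each slice exposes a different pair of the four dimensions, which is why a method that processes only some of these sub-spaces sees only part of the spatio-angular correlation.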

Efficient Light Field Reconstruction via Spatio-Angular Dense Network

Aug 08, 2021

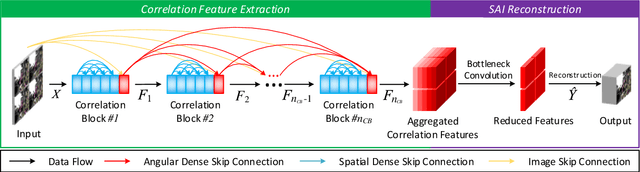

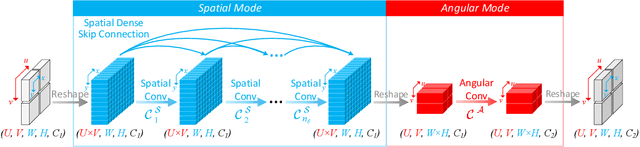

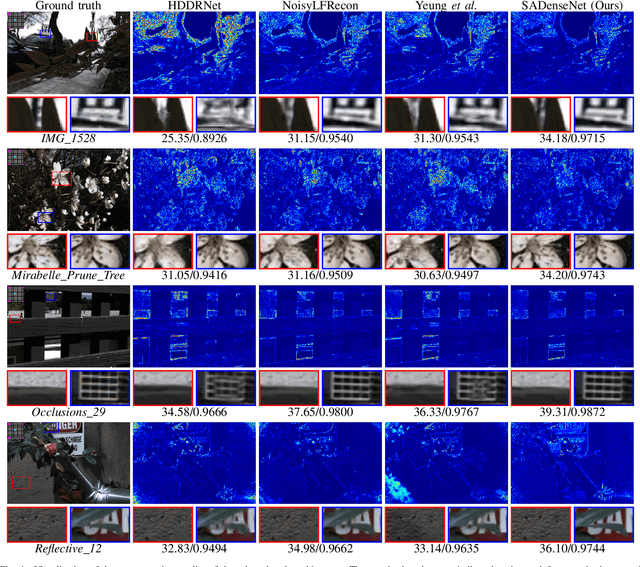

Abstract: As an image sensing modality, light field imaging supplies extra angular information compared with monocular imaging and has facilitated a wide range of measurement applications. Light field capturing devices usually suffer from an inherent trade-off between angular and spatial resolution. To tackle this problem, several approaches, such as light field reconstruction and light field super-resolution, have been proposed, but they leave two problems unaddressed, namely domain asymmetry and efficient information flow. In this paper, we propose an end-to-end Spatio-Angular Dense Network (SADenseNet) for light field reconstruction with two novel components that address these problems: correlation blocks and spatio-angular dense skip connections. The former model the correlation information effectively in a way that conforms with the domain asymmetry. The latter consist of three kinds of connections that enhance the information flow within the two domains. Extensive experiments on both real-world and synthetic datasets demonstrate the proposed SADenseNet's state-of-the-art performance at significantly reduced memory and computation costs. The qualitative results show that the reconstructed light field images are sharp with correct details and can serve as a pre-processing step to improve the accuracy of related measurement applications.

A Universal Update-pacing Framework For Visual Tracking

Mar 01, 2016

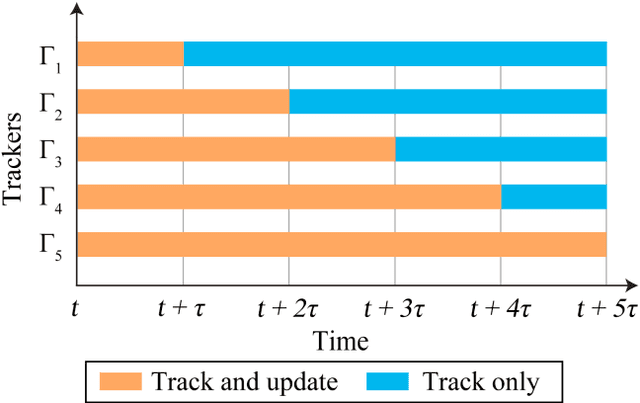

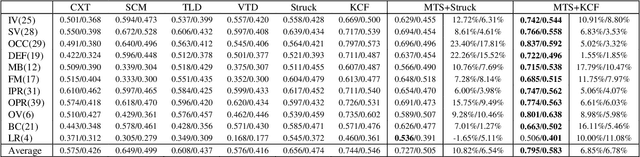

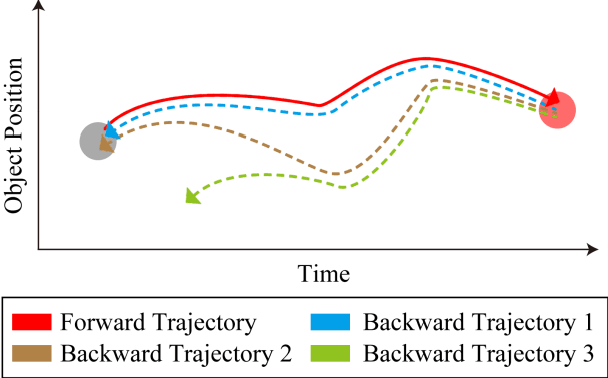

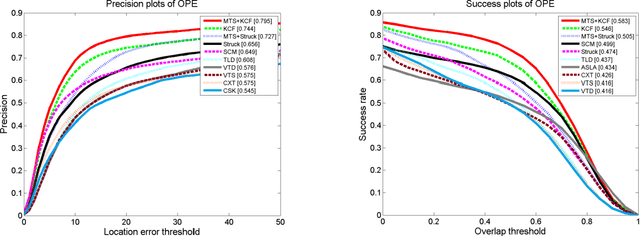

Abstract: This paper proposes a novel framework, based on paced updates and trajectory selection, to alleviate the model drift problem in visual tracking. Given a base tracker, an ensemble of trackers is generated in which each tracker's update behavior is paced; each tracker then traces the target object forward and backward to generate a pair of trajectories over an interval. We then implicitly perform self-examination based on the trajectory pair of each tracker and select the most robust tracker. The proposed framework can effectively leverage the temporal context of sequential frames and avoid learning corrupted information. Extensive experiments on the standard benchmark suggest that the proposed framework achieves superior performance against state-of-the-art trackers.
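The trajectory-selection step can be sketched as follows. The abstract does not specify how the trajectory pairs are scored, so this sketch assumes a simple forward-backward consistency error (mean distance between the forward track and the time-reversed backward track); the function names and tracker labels are hypothetical.

```python
import math


def forward_backward_error(forward, backward):
    """Mean Euclidean distance between a forward trajectory and the
    time-reversed backward trajectory (assumed scoring rule).

    forward:  target centers for frames t0..tN, in tracking order.
    backward: target centers for frames tN..t0, in tracking order.
    """
    if not forward or len(forward) != len(backward):
        raise ValueError("trajectories must be non-empty and equally long")
    aligned_backward = backward[::-1]  # reorder to frames t0..tN
    total = sum(math.dist(p, q) for p, q in zip(forward, aligned_backward))
    return total / len(forward)


def select_most_consistent(trajectory_pairs):
    """Return the tracker name whose trajectory pair has the lowest error."""
    return min(
        trajectory_pairs,
        key=lambda name: forward_backward_error(*trajectory_pairs[name]),
    )


# Hypothetical ensemble: the same base tracker with two update paces.
pairs = {
    "fast_update": ([(0, 0), (1, 0), (2, 0)], [(2, 0), (4, 0), (6, 0)]),
    "slow_update": ([(0, 0), (1, 0), (2, 0)], [(2, 0), (1, 0), (0, 1)]),
}
best = select_most_consistent(pairs)
```

Here the backward pass of `fast_update` drifts away from the forward track, so `slow_update` is selected under the assumed scoring rule.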
