Zengqiang Zheng

A Range-Null Space Decomposition Approach for Fast and Flexible Spectral Compressive Imaging

May 16, 2023

Abstract: We present RND-SCI, a novel framework for compressive hyperspectral image (HSI) reconstruction. Our framework decomposes the reconstructed object into range-space and null-space components, where the range-space part ensures the solution conforms to the compression process, and the null-space term introduces a deep HSI prior to constrain the output to have satisfactory properties. RND-SCI is not only simple in design and highly interpretable but can also be easily adapted to various HSI reconstruction networks, improving the quality of HSIs with minimal computational overhead. RND-SCI significantly boosts the performance of HSI reconstruction networks whether it is used in retraining, in fine-tuning, or as a plug-in to a pre-trained off-the-shelf model. Based on the framework and SAUNet, we design an extremely fast HSI reconstruction network, RND-SAUNet, which achieves an astounding 91 frames per second while maintaining superior reconstruction accuracy compared to other less time-consuming methods. Code and models are available at https://github.com/hustvl/RND-SCI.
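The range-null space idea in the RND-SCI abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: it assumes a generic linear measurement model y = A x with a toy random matrix `A`, uses the textbook decomposition x = A⁺y + (I − A⁺A)x_prior, and substitutes a random vector for the output of a deep prior. The helper name `range_null_combine` is hypothetical.

```python
import numpy as np

def range_null_combine(A, y, x_prior):
    """Hypothetical helper: merge a measurement-consistent range-space term
    with the null-space part of a prior estimate (assumes y = A @ x)."""
    A_pinv = np.linalg.pinv(A)                    # Moore-Penrose pseudo-inverse of A
    range_part = A_pinv @ y                       # reproduces y when A has full row rank
    null_part = x_prior - A_pinv @ (A @ x_prior)  # (I - A^+ A) x_prior, invisible to A
    return range_part + null_part

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 10))    # toy compressive operator: 10 unknowns, 4 measurements
x_true = rng.standard_normal(10)
y = A @ x_true                      # simulated compressed measurement
x_prior = rng.standard_normal(10)   # stand-in for a network's output (assumption)
x_hat = range_null_combine(A, y, x_prior)

# The combined estimate agrees with the measurement regardless of x_prior.
print(np.allclose(A @ x_hat, y))
```

Because the null-space term is annihilated by `A`, any prior estimate can be plugged in without breaking consistency with the measurement, which matches the abstract's claim that the range-space part keeps the solution conformant to the compression process.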

A Simple Adaptive Unfolding Network for Hyperspectral Image Reconstruction

Jan 24, 2023

Abstract: We present a simple, efficient, and scalable unfolding network, SAUNet, to simplify the network design with an adaptive alternate optimization framework for hyperspectral image (HSI) reconstruction. SAUNet customizes a Residual Adaptive ADMM Framework (R2ADMM) to connect each stage of the network via a group of learnable parameters to promote the usage of the mask prior, which greatly stabilizes training and solves the accuracy degradation issue. Additionally, we introduce a simple convolutional modulation block (CMB), which leads to efficient training, easy scale-up, and less computation. Coupling these two designs, SAUNet can be scaled to a non-trivial 13 stages with continuous improvement. Without bells and whistles, SAUNet improves both performance and speed compared with the previous state-of-the-art counterparts, which makes it feasible for practical high-resolution HSI reconstruction scenarios. We set new records on CAVE and KAIST HSI reconstruction benchmarks. Code and models are available at https://github.com/hustvl/SAUNet.

Anomaly Discovery in Semantic Segmentation via Distillation Comparison Networks

Dec 18, 2021

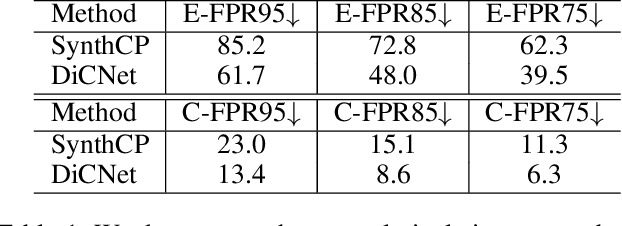

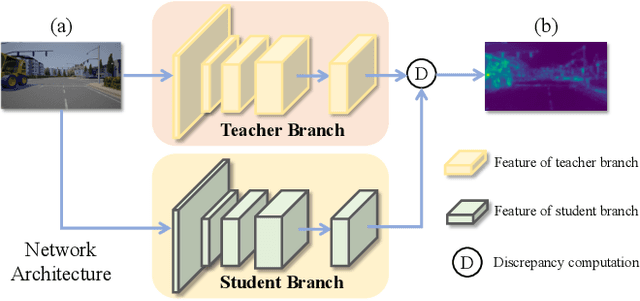

Abstract: This paper aims to address the problem of anomaly discovery in semantic segmentation. Our key observation is that semantic classification plays a critical role in existing approaches, while incorrectly classified pixels are easily regarded as anomalies. This phenomenon appears frequently but is rarely discussed, and it significantly reduces the performance of anomaly discovery. To this end, we propose a novel Distillation Comparison Network (DiCNet). It comprises a teacher branch, which is a semantic segmentation network with the semantic classification head removed, and a student branch that is distilled from the teacher branch through distribution distillation. We show that the distillation guarantees that the semantic features of the two branches are consistent on the known classes while being inconsistent on the unknown class. Therefore, we leverage the semantic feature discrepancy between the two branches to discover the anomalies. DiCNet abandons the semantic classification head in the inference process, and hence significantly alleviates the issue caused by incorrect semantic classification. Extensive experiments on the StreetHazards and BDD-Anomaly datasets verify the superior performance of DiCNet. In particular, DiCNet obtains a 6.3% improvement in AUPR and a 5.2% improvement in FPR95 on the StreetHazards dataset, and achieves a 4.2% improvement in AUPR and a 6.8% improvement in FPR95 on the BDD-Anomaly dataset. Code is available at https://github.com/zhouhuan-hust/DiCNet.
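As a rough illustration of scoring anomalies from the feature discrepancy between two branches, the sketch below computes a per-pixel score from a pair of feature maps. The abstract does not say which discrepancy measure DiCNet uses, so cosine distance is an assumption here, as are the function name `discrepancy_map`, the `(C, H, W)` layout, and the random toy features.

```python
import numpy as np

def discrepancy_map(teacher_feat, student_feat, eps=1e-8):
    """Hypothetical helper: per-pixel anomaly score from two (C, H, W) feature maps.
    Cosine distance is an assumed choice, not taken from the paper."""
    dot = (teacher_feat * student_feat).sum(axis=0)
    norms = np.linalg.norm(teacher_feat, axis=0) * np.linalg.norm(student_feat, axis=0)
    return 1.0 - dot / (norms + eps)   # near 0 where branches agree, up to 2 where they oppose

rng = np.random.default_rng(0)
teacher = rng.standard_normal((8, 4, 4))   # toy teacher features: 8 channels, 4x4 pixels
student = teacher.copy()                   # student matches the teacher on "known" pixels
student[:, 0, 0] = -teacher[:, 0, 0]       # simulate disagreement at one "unknown" pixel
scores = discrepancy_map(teacher, student)

# The pixel where the branches disagree receives the highest score.
print(np.unravel_index(scores.argmax(), scores.shape))
```

Thresholding such a map would give a binary anomaly mask; the threshold and the measure would need to be chosen on validation data.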
