Zelin Xiao

Endowing Deep 3D Models with Rotation Invariance Based on Principal Component Analysis

Oct 20, 2019

Abstract: In this paper, we propose a simple yet effective method to endow deep 3D models with rotation invariance by expressing the coordinates in an intrinsic frame determined by the object shape itself. The key to our approach is finding an intrinsic frame that is unique for a given object shape and consistent across different instances of the same category, e.g. the frame axes of desks should all lie roughly along the edges. Principal component analysis provides an effective way to define such a frame: the principal components are used as the frame axes. Because the sign ambiguity of eigenvector computation leaves the direction of each principal component undetermined, each object has several intrinsic frames. To achieve absolute rotation invariance for a deep model, we feed the coordinates expressed in all intrinsic frames to the model to obtain multiple output features, which are then aggregated into a final feature by a self-attention module. Our method is theoretically rotation-invariant and can be flexibly embedded into current network architectures. Comprehensive experiments demonstrate that our approach achieves near state-of-the-art performance on the rotation-augmented dataset for ModelNet40 classification and outperforms other models on the SHREC'17 perturbed retrieval task.
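The intrinsic-frame idea in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `intrinsic_frame_coords` is hypothetical, the choice to keep only right-handed frames (4 of the 8 sign combinations) is an assumption, since the abstract says only that there are "several" frames, and the self-attention aggregation step is not shown.

```python
import itertools
import numpy as np

def intrinsic_frame_coords(points):
    """Express a point cloud in each candidate PCA frame.

    points: (N, 3) array. Returns an (F, N, 3) array, one slice per frame.
    """
    centered = points - points.mean(axis=0)
    # Eigenvectors of the 3x3 covariance matrix are the principal axes.
    cov = centered.T @ centered / len(points)
    _, vecs = np.linalg.eigh(cov)      # columns, ascending eigenvalue order
    vecs = vecs[:, ::-1]               # largest-variance axis first
    frames = []
    for signs in itertools.product((1.0, -1.0), repeat=3):
        basis = vecs * np.array(signs)  # flip the sign of each axis (column)
        # Assumption: keep only right-handed frames (proper rotations).
        if np.linalg.det(basis) > 0:
            frames.append(centered @ basis)
    return np.stack(frames)
```

Rotating the input changes at most the order in which the frames are returned, not the set of coordinates, provided the three covariance eigenvalues are distinct. That is why a model that consumes all frames and aggregates them symmetrically can be rotation-invariant.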

Justlookup: One Millisecond Deep Feature Extraction for Point Clouds By Lookup Tables

Aug 14, 2019

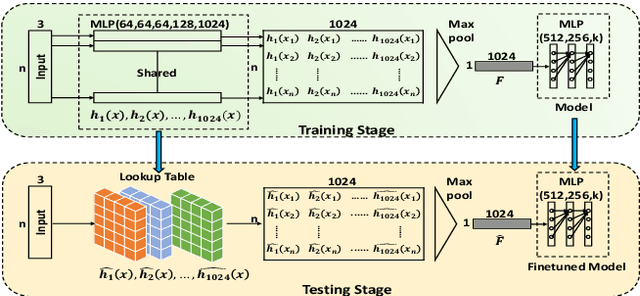

Abstract: Deep models are capable of fitting complex high-dimensional functions, but usually at the cost of a large computational load. Classical lookup tables cannot speed up inference because the input is high-dimensional and memory is limited. Recently, a novel architecture for point clouds (PointNet) has demonstrated that a complicated deep function can be obtained from a set of 3-variable functions. In this paper, we exploit this property and apply a lookup table to encode these 3-variable functions. This ensures that inference time is determined only by memory access, no matter how complicated the deep function is. We conduct extensive experiments on the ModelNet and ShapeNet datasets and demonstrate that inference completes in 1.5 ms on an Intel i7-8700 CPU (single-core mode), a 32x speedup over the PointNet architecture without any performance degradation.
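The lookup-table idea can be sketched as follows, under stated assumptions. `point_feature` is a toy stand-in for a trained per-point network (a function of the three coordinates only), the cube `[-1, 1]^3` and the uniform grid are assumed, and all names are hypothetical. The abstract does not describe the actual table layout, so this shows only the general principle: precompute the 3-variable function once, then replace per-point evaluation with indexing followed by PointNet-style max pooling.

```python
import numpy as np

def point_feature(xyz):
    """Toy stand-in for a trained per-point MLP: (..., 3) -> (..., 4)."""
    x, y, z = xyz[..., 0], xyz[..., 1], xyz[..., 2]
    return np.stack([np.sin(x), np.cos(y), x * z, np.tanh(y + z)], axis=-1)

def build_table(resolution):
    """Precompute point_feature at the centre of every cell of [-1, 1]^3."""
    centres = (np.arange(resolution) + 0.5) / resolution * 2.0 - 1.0
    grid = np.stack(
        np.meshgrid(centres, centres, centres, indexing="ij"), axis=-1
    )
    return point_feature(grid)          # shape (R, R, R, 4)

def lookup_global_feature(points, table):
    """Quantise each point to a cell, fetch its feature, then max-pool."""
    resolution = table.shape[0]
    idx = np.floor((points + 1.0) / 2.0 * resolution).astype(int)
    idx = np.clip(idx, 0, resolution - 1)   # points on the boundary
    per_point = table[idx[:, 0], idx[:, 1], idx[:, 2]]
    return per_point.max(axis=0)        # symmetric aggregation over points
```

In this sketch the cost of `lookup_global_feature` does not depend on how expensive `point_feature` is, only on the number of points and the table access. The price is a quantisation error that shrinks as the grid resolution grows, traded against memory.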

Face Recognition from Sequential Sparse 3D data via Deep Registration

Oct 23, 2018

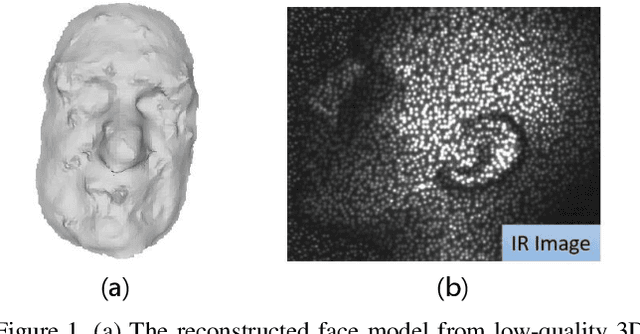

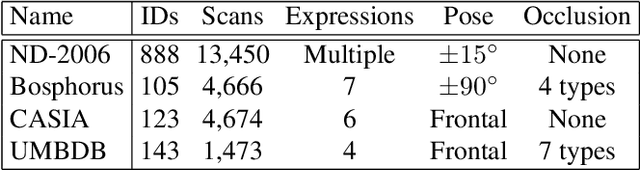

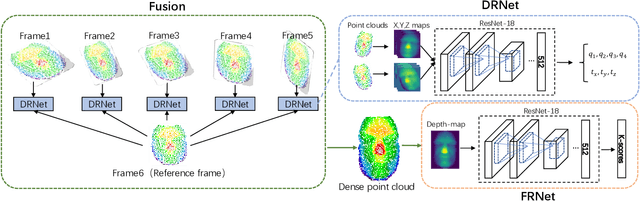

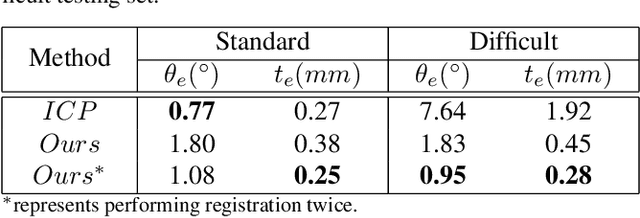

Abstract: Previous works have shown that face recognition with high-accuracy 3D data is more reliable and insensitive to pose and lighting variations. Recently, low-cost and portable 3D acquisition techniques such as ToF (Time of Flight) and DoE-based structured light have made 3D data easy to access, e.g. via a mobile phone. However, these devices can only provide sparse (limited speckles in a structured light system) and noisy 3D data, which cannot support face recognition directly. In this paper, we aim to achieve high-performance face recognition for devices equipped with such modules, which is of practical importance as such devices are expected to become very popular. We propose a framework that performs face recognition by fusing a sequence of low-quality 3D data. Because sparse and noisy 3D data cannot be handled well by conventional methods such as the ICP algorithm, we design a PointNet-like Deep Registration Network (DRNet) that works with ordered 3D point coordinates while preserving the ability to mine local structures via convolution. We also develop a novel loss function based on the quaternion expression to optimize our DRNet, which clearly outperforms other widely used functions. For face recognition, we design a deep convolutional network based on the AMSoftmax model that takes the fused 3D depth map as input. Experiments show that our DRNet achieves a rotation error of 0.95 degrees and a translation error of 0.28 mm for registration. Face recognition on the fused data achieves a rank-1 accuracy of 99.2% and 97.5% at FAR 0.001 on the Bosphorus dataset, which is comparable with state-of-the-art recognition performance based on high-quality data.
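The abstract does not specify the quaternion-based loss, so the sketch below does not attempt to reproduce it. It shows only the standard angular distance between two unit quaternions, which is one common way a rotation error in degrees can be measured. The function names and the `(w, x, y, z)` component order are assumptions made for illustration.

```python
import math

def normalize(q):
    """Scale a quaternion (w, x, y, z) to unit length."""
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)

def rotation_error_degrees(q_pred, q_true):
    """Angle of the rotation that takes q_pred to q_true, in degrees."""
    p, t = normalize(q_pred), normalize(q_true)
    # The absolute value makes q and -q (the same rotation) compare as equal.
    dot = abs(sum(a * b for a, b in zip(p, t)))
    # Clamp to guard against rounding slightly above 1 before acos.
    return math.degrees(2.0 * math.acos(min(1.0, dot)))
```

For example, comparing the identity quaternion `(1, 0, 0, 0)` with a quarter turn about the z-axis, `(cos 45°, 0, 0, sin 45°)`, gives an error of 90 degrees.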
