Zehui Jiang

Neuron Empirical Gradient: Connecting Neurons' Linear Controllability and Representational Capacity

Dec 24, 2024

Abstract: Although neurons in the feed-forward layers of pre-trained language models (PLMs) can store factual knowledge, most prior analyses remain qualitative, leaving the quantitative relationship among knowledge representation, neuron activations, and model output poorly understood. In this study, by performing neuron-wise interventions using factual probing datasets, we first reveal the linear relationship between neuron activations and output token probabilities. We refer to the gradient of this linear relationship as "neuron empirical gradients" and propose NeurGrad, an efficient method for their calculation to facilitate quantitative neuron analysis. We next investigate whether neuron empirical gradients in PLMs encode general task knowledge by probing skill neurons. To this end, we introduce MCEval8k, a multi-choice knowledge evaluation benchmark spanning six genres and 22 tasks. Our experiments confirm that neuron empirical gradients effectively capture knowledge, while skill neurons exhibit efficiency, generality, inclusivity, and interdependency. These findings link knowledge to PLM outputs via neuron empirical gradients, shedding light on how PLMs store knowledge. The code and dataset are released.

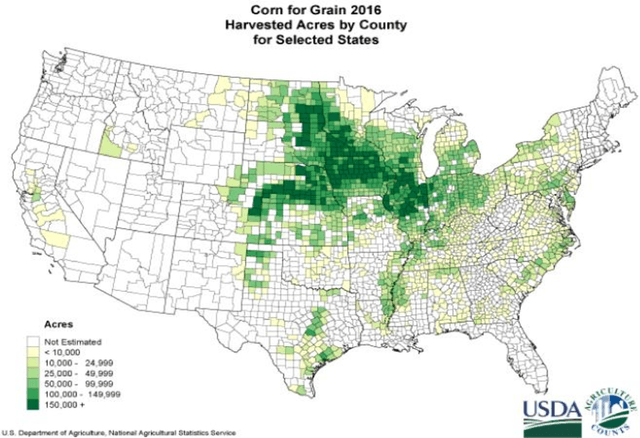

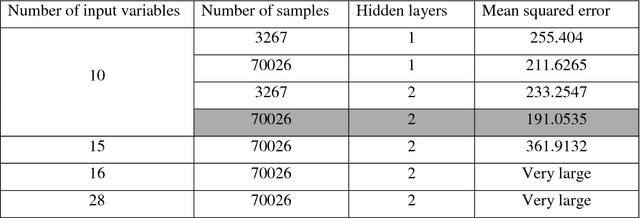

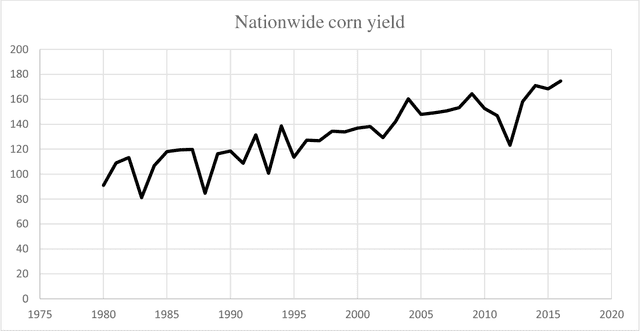

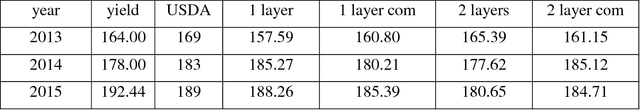

Predicting County Level Corn Yields Using Deep Long Short Term Memory Models

May 30, 2018

Abstract: Corn yield prediction is beneficial because it provides valuable information about production and prices prior to the harvest. Publicly available, high-quality corn yield predictions can help address emergent information asymmetry problems and, in doing so, improve price efficiency in futures markets. This paper is the first to employ Long Short-Term Memory (LSTM), a special form of Recurrent Neural Network (RNN), to predict corn yields. A cross-sectional time series of county-level corn yield and hourly weather data made the sample space large enough to use deep learning techniques. LSTM is efficient in time series prediction with complex inner relations, which makes it suitable for this task. The empirical results from county-level data in Iowa show promising predictive power relative to existing survey-based methods.
