Yunshi Wen

MTS-JEPA: Multi-Resolution Joint-Embedding Predictive Architecture for Time-Series Anomaly Prediction

Feb 04, 2026

Abstract: Multivariate time series underpin modern critical infrastructure, making the prediction of anomalies a vital necessity for proactive risk mitigation. While Joint-Embedding Predictive Architectures (JEPA) offer a promising framework for modeling the latent evolution of these systems, their application is hindered by representation collapse and an inability to capture precursor signals across varying temporal scales. To address these limitations, we propose MTS-JEPA, a specialized architecture that integrates a multi-resolution predictive objective with a soft codebook bottleneck. This design explicitly decouples transient shocks from long-term trends, and utilizes the codebook to capture discrete regime transitions. Notably, we find this constraint also acts as an intrinsic regularizer to ensure optimization stability. Empirical evaluations on standard benchmarks confirm that our approach effectively prevents degenerate solutions and achieves state-of-the-art performance under the early-warning protocol.
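
The abstract does not say how the soft codebook bottleneck is computed. As a rough illustration only, one common way to build a soft assignment is a softmax over negative squared distances to the codes, which yields a convex combination of code vectors. The function name `soft_assign`, the `temperature` parameter, and the toy values below are assumptions for this sketch, not details of MTS-JEPA.

```python
import numpy as np

def soft_assign(latent, codebook, temperature=1.0):
    """Hypothetical soft codebook lookup (not the MTS-JEPA implementation).

    Returns softmax weights over the codes and the weighted mix of code vectors.
    """
    # Squared Euclidean distance from the latent to every code, shape (n_codes,).
    dists = np.sum((codebook - latent) ** 2, axis=1)
    # Closer codes get larger logits; subtract the max for numerical stability.
    logits = -dists / temperature
    logits = logits - logits.max()
    weights = np.exp(logits)
    weights = weights / weights.sum()
    # The bottleneck output is a convex combination of the code vectors.
    return weights, weights @ codebook

# Toy codebook with three 2-D codes (illustrative values only).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])
latent = np.array([0.9, 1.2])

weights, mixed = soft_assign(latent, codebook, temperature=0.5)
sharp_weights, _ = soft_assign(latent, codebook, temperature=0.01)
```

In this sketch, lowering `temperature` pushes the weights toward a one-hot (hard) assignment, while larger values spread mass across several codes.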

Abstracted Shapes as Tokens -- A Generalizable and Interpretable Model for Time-series Classification

Nov 01, 2024

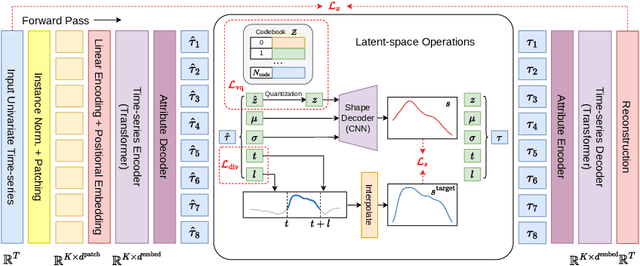

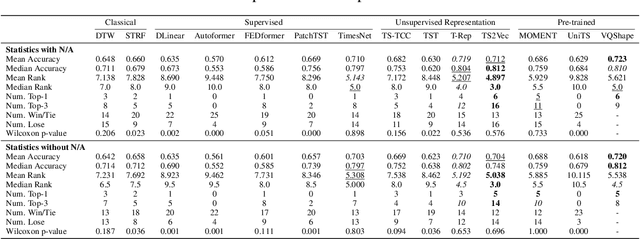

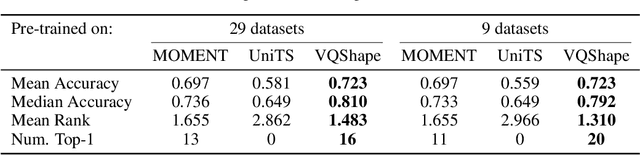

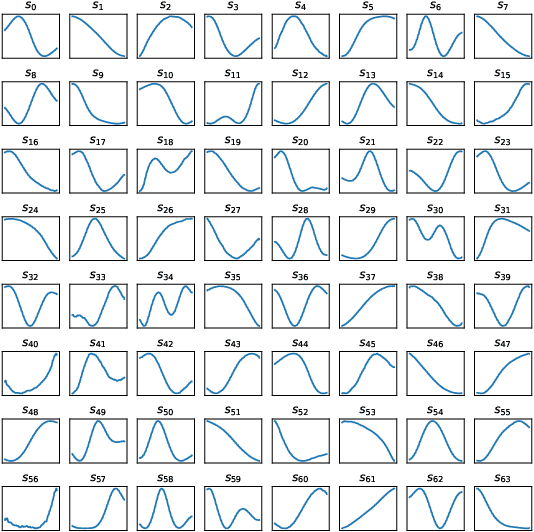

Abstract: In time-series analysis, many recent works seek to provide a unified view and representation for time-series across multiple domains, leading to the development of foundation models for time-series data. Despite diverse modeling techniques, existing models are black boxes and fail to provide insights and explanations about their representations. In this paper, we present VQShape, a pre-trained, generalizable, and interpretable model for time-series representation learning and classification. By introducing a novel representation for time-series data, we forge a connection between the latent space of VQShape and shape-level features. Using vector quantization, we show that time-series from different domains can be described using a unified set of low-dimensional codes, where each code can be represented as an abstracted shape in the time domain. On classification tasks, we show that the representations of VQShape can be utilized to build interpretable classifiers, achieving comparable performance to specialist models. Additionally, in zero-shot learning, VQShape and its codebook can generalize to previously unseen datasets and domains that are not included in the pre-training process. The code and pre-trained weights are available at https://github.com/YunshiWen/VQShape.
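
For readers unfamiliar with vector quantization, the core step is a nearest-neighbor lookup: each latent vector is replaced by the closest entry in a learned codebook. The following is a minimal generic sketch of that lookup, not the VQShape code; the function `quantize` and the toy arrays are hypothetical and chosen only for illustration.

```python
import numpy as np

def quantize(latents, codebook):
    """Generic nearest-code lookup (illustrative, not the VQShape implementation).

    latents:  array of shape (n_latents, dim)
    codebook: array of shape (n_codes, dim)
    Returns the index of the nearest code for each latent and the code vectors.
    """
    # Pairwise squared Euclidean distances, shape (n_latents, n_codes).
    diffs = latents[:, None, :] - codebook[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)
    # Pick the closest code for every latent vector.
    indices = np.argmin(dists, axis=1)
    return indices, codebook[indices]

# Toy codebook with three 2-D codes and three latents (illustrative values only).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])
latents = np.array([[0.9, 1.2], [-0.1, 0.1], [-0.8, 1.7]])

indices, quantized = quantize(latents, codebook)
print(indices)  # [1 0 2]
```

Each latent is then described by a single code index, which is what allows a shared, discrete vocabulary across datasets.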

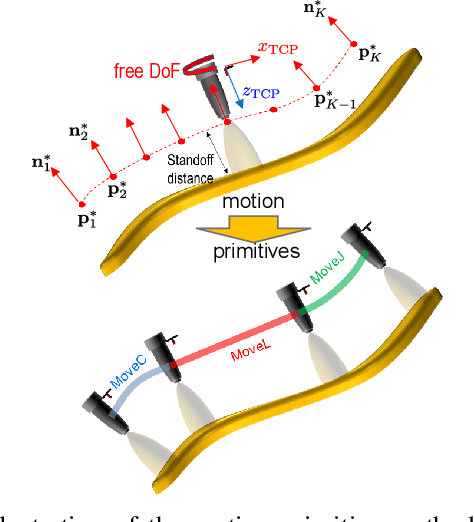

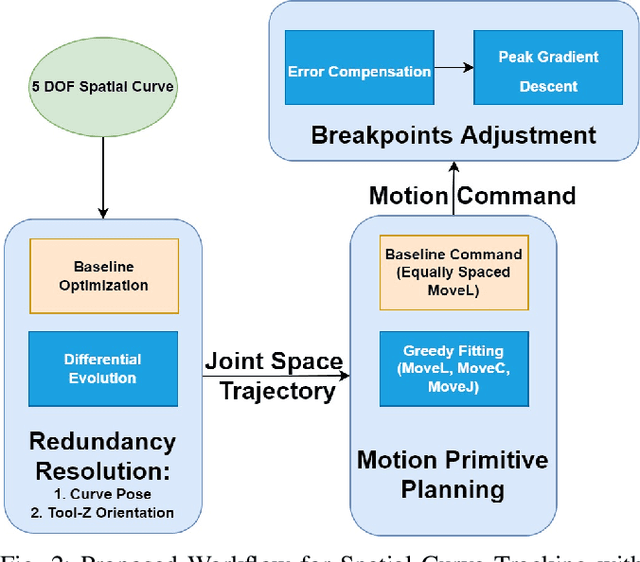

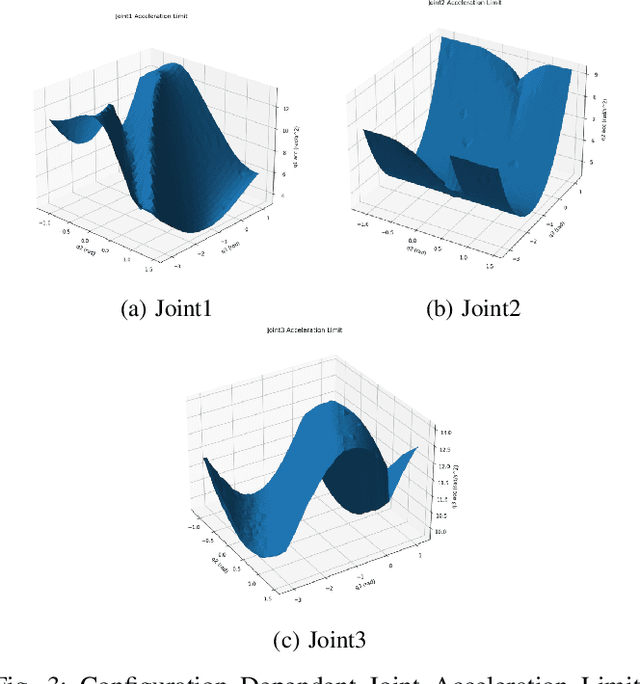

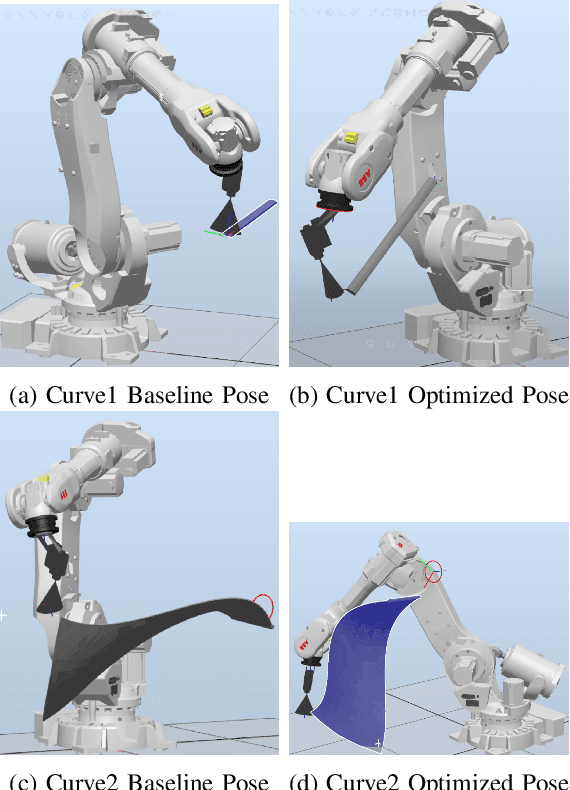

High-Speed High-Accuracy Spatial Curve Tracking Using Motion Primitives in Industrial Robots

Jan 06, 2023

Abstract: Industrial robots are increasingly deployed in applications requiring an end effector tool to closely track a specified path, such as in spraying and welding. Performance and productivity present possibly conflicting objectives: tracking accuracy, path speed, and motion uniformity. Industrial robots are programmed through motion primitives consisting of waypoints connected by pre-defined motion segments, with specified parameters such as path speed and blending zone. The actual executed robot motion depends on the robot joint servo controller and joint motion constraints (velocity, acceleration, etc.), which are largely unknown to users. Programming a robot to achieve the desired performance today is time-consuming and mostly manual, requiring the tuning of a large number of coupled parameters in the motion primitives. The performance also depends on the choice of additional parameters: possible redundant degrees of freedom, location of the target curve, and the robot configuration. This paper presents a systematic approach to optimize the robot motion primitives for performance. The approach first selects the static parameters, then the motion primitives, and finally iteratively updates the waypoints to minimize the tracking error. The ultimate performance objective is to maximize the path speed subject to the tracking accuracy and speed uniformity constraints over the entire path. We have demonstrated the effectiveness of this approach in simulation for ABB and FANUC robots for two challenging example curves, and experimentally for an ABB robot. Compared with a baseline that follows current industry practice, the optimized motion shows a performance improvement of over 200%.
