Yunseong Cho

Melon Fruit Detection and Quality Assessment Using Generative AI-Based Image Data Augmentation

Jul 15, 2024

Abstract: Monitoring and managing the growth and quality of fruits are very important tasks. To effectively train deep learning models like YOLO for real-time fruit detection, high-quality image datasets are essential. However, such datasets are often lacking in agriculture. Generative AI models can help create high-quality images. In this study, we used MidJourney and Firefly tools to generate images of melon greenhouses and post-harvest fruits through text-to-image, pre-harvest image-to-image, and post-harvest image-to-image methods. We evaluated these AI-generated images using PSNR and SSIM metrics and tested the detection performance of the YOLOv9 model. We also assessed the net quality of real and generated fruits. Our results showed that generative AI could produce images very similar to real ones, especially for post-harvest fruits. The YOLOv9 model detected the generated images well, and the net quality was also measurable. This shows that generative AI can create realistic images useful for fruit detection and quality assessment, indicating its great potential in agriculture. This study highlights the potential of AI-generated images for data augmentation in melon fruit detection and quality assessment and envisions a positive future for generative AI applications in agriculture.
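
The PSNR metric named in the abstract can be illustrated with a minimal sketch. The function name `psnr`, the flat-list image representation, and the 8-bit peak value of 255 are assumptions made for illustration; the abstract does not state how the metric was computed.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are flat sequences of pixel intensities (an assumed,
    simplified representation); max_value is the assumed peak intensity.
    """
    if len(reference) != len(generated) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 grayscale patches, flattened (made-up values).
real_patch = [52, 55, 61, 59]
generated_patch = [54, 55, 60, 57]
print(psnr(real_patch, generated_patch))
```

A higher PSNR means the generated image is closer to the real one pixel by pixel. SSIM, the other metric mentioned, compares local structure instead and is not sketched here.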

Adaptive Learning-Based Maximum Likelihood and Channel-Coded Detection for Massive MIMO Systems with One-Bit ADCs

Apr 16, 2023

Abstract: In this paper, we propose a learning-based detection framework for uplink massive multiple-input and multiple-output (MIMO) systems with one-bit analog-to-digital converters. The learning-based detection only requires counting the occurrences of the quantized outputs of -1 and +1 for estimating a likelihood probability at each antenna. Accordingly, the key advantage of this approach is to perform maximum likelihood detection without explicit channel estimation, which has been one of the primary challenges of one-bit quantized systems. The learning in the high signal-to-noise ratio (SNR) regime, however, needs excessive training to estimate the extremely small likelihood probabilities. To address this drawback, we propose a dither-and-learning technique to estimate likelihood functions from dithered signals. First, we add a dithering signal to artificially decrease the SNR and then infer the likelihood function from the quantized dithered signals by using an SNR estimate derived from a deep neural network-based offline estimator. We extend our technique by developing an adaptive dither-and-learning method that updates the dithering power according to the patterns observed in the quantized dithered signals. The proposed framework is also applied to state-of-the-art channel-coded MIMO systems by computing a bit-wise and user-wise log-likelihood ratio from the refined likelihood probabilities. Simulation results validate the detection performance of the proposed methods in both uncoded and coded systems.
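
The counting idea described in this abstract can be sketched as follows. This is a toy illustration under stated assumptions, not the paper's implementation: it uses a single user, real-valued signals, BPSK candidate symbols, and Gaussian noise. All names (`one_bit`, `learn_likelihoods`, `ml_detect`) and the clipping constant `eps` are hypothetical.

```python
import math
import random


def one_bit(x):
    """One-bit quantizer: keeps only the sign of the input."""
    return 1 if x >= 0 else -1


def learn_likelihoods(channel, symbols, noise_std, n_train, rng):
    """Estimate P(y_i = +1 | symbol) by counting +1 outputs at each antenna.

    The channel is used only to simulate the received pilots; the
    estimates themselves come purely from counting.
    """
    table = []
    for s in symbols:
        counts = [0] * len(channel)
        for _ in range(n_train):
            for i, h in enumerate(channel):
                if one_bit(h * s + rng.gauss(0.0, noise_std)) == 1:
                    counts[i] += 1
        table.append([c / n_train for c in counts])
    return table


def ml_detect(y, table, eps=1e-6):
    """Return the index of the symbol with the largest log-likelihood.

    Probabilities are clipped to [eps, 1 - eps] (an assumption of this
    sketch) so that zero-valued estimates do not produce log(0).
    """
    best_k, best_ll = None, -math.inf
    for k, probs in enumerate(table):
        ll = 0.0
        for y_i, p in zip(y, probs):
            p = min(max(p, eps), 1.0 - eps)
            ll += math.log(p if y_i == 1 else 1.0 - p)
        if ll > best_ll:
            best_k, best_ll = k, ll
    return best_k


rng = random.Random(0)
channel = [0.8, -0.5, 1.2, -0.9]   # made-up channel gains
symbols = [1.0, -1.0]              # BPSK candidates
table = learn_likelihoods(channel, symbols, noise_std=1.0, n_train=200, rng=rng)
y = [one_bit(h * symbols[1] + rng.gauss(0.0, 1.0)) for h in channel]
print(ml_detect(y, table))
```

Note that `ml_detect` never sees the channel, which mirrors the abstract's point about avoiding explicit channel estimation. With very low noise the counted probabilities collapse to exactly 0 or 1, which is the high-SNR difficulty the abstract describes.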

Adaptive Learning-Based Detection for One-Bit Quantized Massive MIMO Systems

Nov 13, 2022

Abstract: We propose an adaptive learning-based framework for uplink massive multiple-input multiple-output (MIMO) systems with one-bit analog-to-digital converters. Learning-based detection does not need to estimate channels, which overcomes a key drawback in one-bit quantized systems. During training, learning-based detection suffers at high signal-to-noise ratio (SNR) because observations will be biased to +1 or -1, which leads to many zero-valued empirical likelihood functions. At low SNR, observations vary frequently in value, but the high noise power makes capturing the effect of the channel difficult. To address these drawbacks, we propose an adaptive dithering-and-learning method. During training, received values are mixed with dithering noise whose statistics are known to the base station, and the dithering noise power is updated for each antenna element depending on the observed pattern of the output. We then use the refined probabilities in the one-bit maximum likelihood detection rule. Simulation results validate the detection performance of the proposed method vs. our previous method using fixed dithering noise power as well as zero-forcing and optimal ML detection, both of which assume perfect channel knowledge.
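
One way to see why dithering with known statistics helps is the following sketch. It assumes a real-valued model with zero-mean Gaussian noise and Gaussian dither, so that the probability of a +1 output is a normal CDF of the noiseless signal divided by the total standard deviation. The function name `undither` and this model are assumptions for illustration. The per-antenna adaptive update of the dithering power is not specified in the abstract and is not sketched here.

```python
import math
from statistics import NormalDist


def undither(p_dithered, noise_std, dither_std):
    """Map P(y = +1) measured with dither to the dither-free probability.

    Assumed model: y = sign(a + n + d), with n ~ N(0, noise_std^2) and
    d ~ N(0, dither_std^2), so P(y = +1) = Phi(a / total_std).
    p_dithered must lie strictly between 0 and 1.
    """
    std_normal = NormalDist()
    total_std = math.sqrt(noise_std ** 2 + dither_std ** 2)
    # Recover the normalized signal a / total_std from the dithered estimate.
    z = std_normal.inv_cdf(p_dithered)
    # Rescale to the dither-free noise level and map back to a probability.
    return std_normal.cdf(z * total_std / noise_std)


# Example: signal a = 1 with noise_std = 0.2 gives P(+1) = Phi(5), which is
# too close to 1 to estimate by counting; with dither_std = 1.0 the measured
# probability is about Phi(0.98), which counting can estimate.
p_with_dither = NormalDist().cdf(1.0 / math.sqrt(0.2 ** 2 + 1.0 ** 2))
print(undither(p_with_dither, noise_std=0.2, dither_std=1.0))
```

The sketch needs the noise level as an input, so in practice it depends on having an SNR estimate available.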

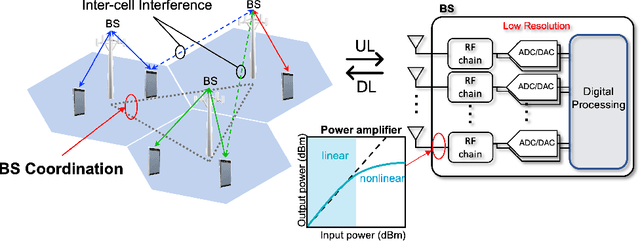

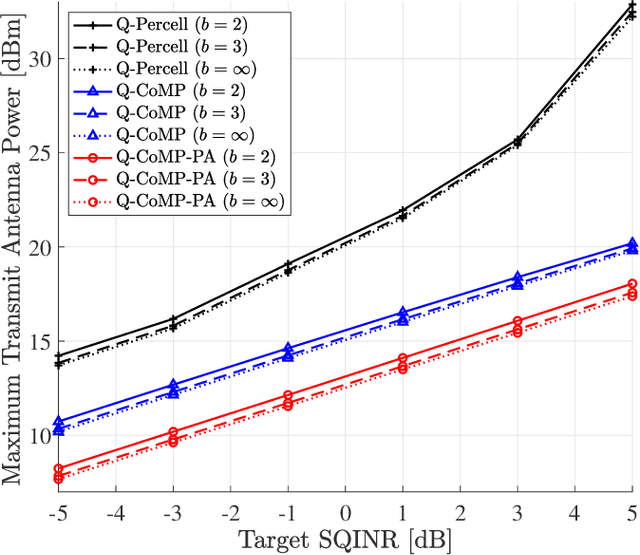

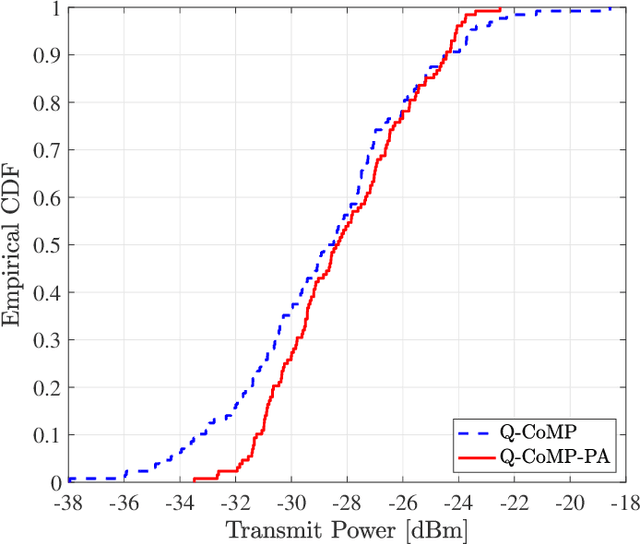

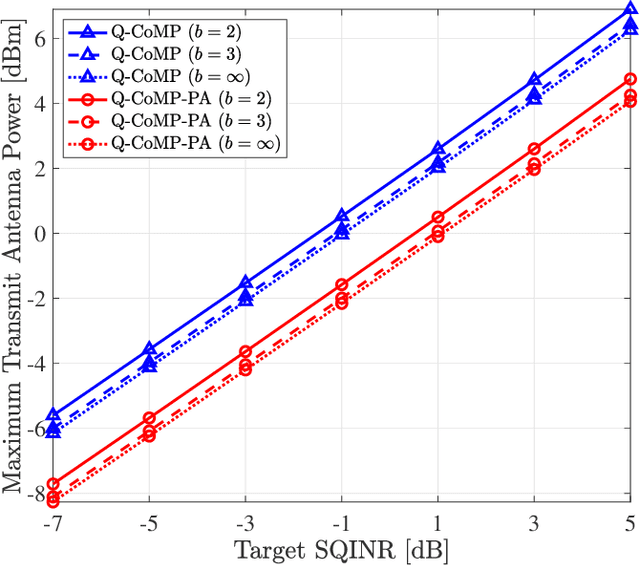

Coordinated Per-Antenna Power Minimization for Multicell Massive MIMO Systems with Low-Resolution Data Converters

Aug 08, 2022

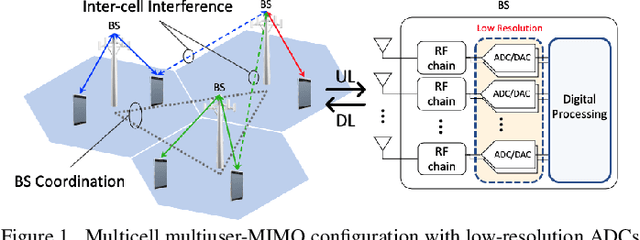

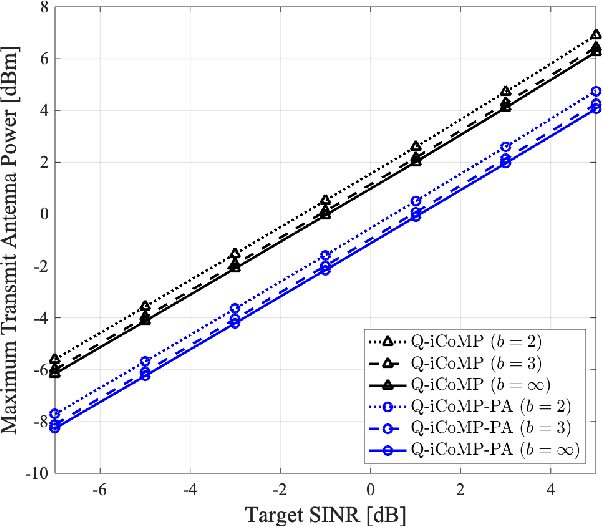

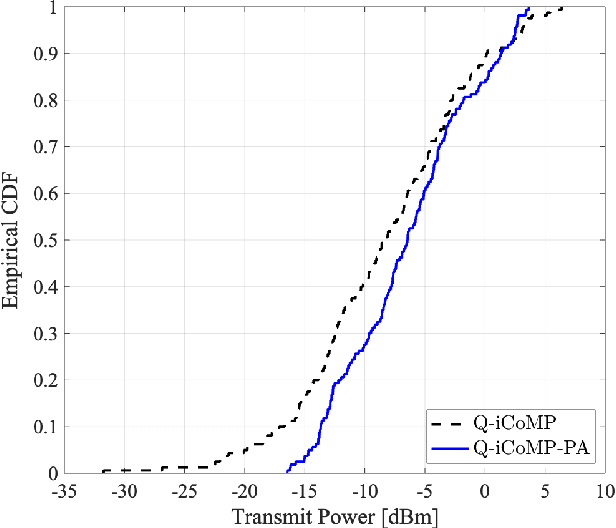

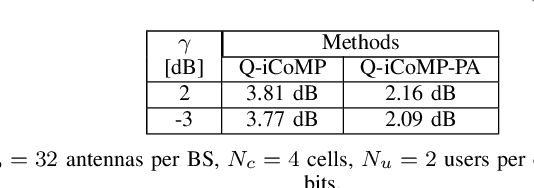

Abstract: In this paper, we investigate multicell-coordinated beamforming for large-scale multiple-input multiple-output (MIMO) orthogonal frequency-division multiplexing (OFDM) communications with low-resolution data converters. In particular, we seek to minimize the total transmit power of the network under received signal-to-quantization-plus-interference-and-noise ratio constraints while minimizing per-antenna transmit power. Our primary contributions are (1) formulating the quantized downlink (DL) OFDM antenna power minimax problem and deriving its associated dual problem, (2) showing strong duality and interpreting the dual as a virtual quantized uplink (UL) OFDM problem, and (3) developing an iterative minimax algorithm to identify a feasible solution based on the dual problem with performance validation through simulations. Specifically, the dual problem requires joint optimization of virtual UL transmit power and noise covariance matrices. To solve the problem, we first derive the optimal dual solution of the UL problem for given noise covariance matrices. Then, we use the solution to compute the associated DL beamformer. Subsequently, using the DL beamformer, we update the UL noise covariance matrices via subgradient projection. Finally, we propose an iterative algorithm by repeating the steps for optimizing DL beamformers. Simulations validate the proposed algorithm in terms of the maximum antenna transmit power and peak-to-average-power ratio.

Coordinated Beamforming in Quantized Massive MIMO Systems with Per-Antenna Constraints

Oct 19, 2021

Abstract: In this work, we present a solution for coordinated beamforming for large-scale downlink (DL) communication systems with low-resolution data converters when employing a per-antenna power constraint that limits the maximum antenna power to alleviate hardware cost. To this end, we formulate and solve the antenna power minimax problem for the coarsely quantized DL system with target signal-to-interference-plus-noise ratio requirements. We show that the associated Lagrangian dual with uncertain noise covariance matrices achieves zero duality gap and that the dual solution can be used to obtain the primal DL solution. Using strong duality, we propose an iterative algorithm to determine the optimal dual solution, which is used to compute the optimal DL beamformer. We further update the noise covariance matrices using the optimal DL solution with an associated subgradient and perform projection onto the feasible domain. Through simulation, we evaluate the proposed method in terms of maximum antenna power consumption and peak-to-average power ratio, which are directly related to hardware efficiency.

Low-Complexity Symbol-Level Precoding for MU-MISO Downlink Systems with QAM Signals

Jun 01, 2021

Abstract: This study proposes the construction of a transmit signal for large-scale antenna systems with cost-effective 1-bit digital-to-analog converters in the downlink. Under quadrature-amplitude-modulation constellations, overcoming the severe error floor inherent to this setting is still an open problem. To this end, we first present a feasibility condition which guarantees that each user's noiseless signal is placed in the desired decision region. For robustness to additive noise, we formulate an optimization problem and then transform the feasibility conditions into a cascaded matrix form. We propose a low-complexity algorithm to generate a 1-bit transmit signal based on the proposed optimization problem, formulated as a well-defined mixed-integer linear program. Numerical results validate the superiority of the proposed method in terms of detection performance and computational complexity.
