Yunpeng Tai

Kimi K2.5: Visual Agentic Intelligence

Feb 02, 2026

Abstract: We introduce Kimi K2.5, an open-source multimodal agentic model designed to advance general agentic intelligence. K2.5 emphasizes the joint optimization of text and vision so that the two modalities enhance each other. This includes a series of techniques such as joint text-vision pre-training, zero-vision SFT, and joint text-vision reinforcement learning. Building on this multimodal foundation, K2.5 introduces Agent Swarm, a self-directed parallel agent orchestration framework that dynamically decomposes complex tasks into heterogeneous sub-problems and executes them concurrently. Extensive evaluations show that Kimi K2.5 achieves state-of-the-art results across various domains including coding, vision, reasoning, and agentic tasks. Agent Swarm also reduces latency by up to $4.5\times$ over single-agent baselines. We release the post-trained Kimi K2.5 model checkpoint to facilitate future research and real-world applications of agentic intelligence.

Demonstration Augmentation for Zero-shot In-context Learning

Jun 03, 2024

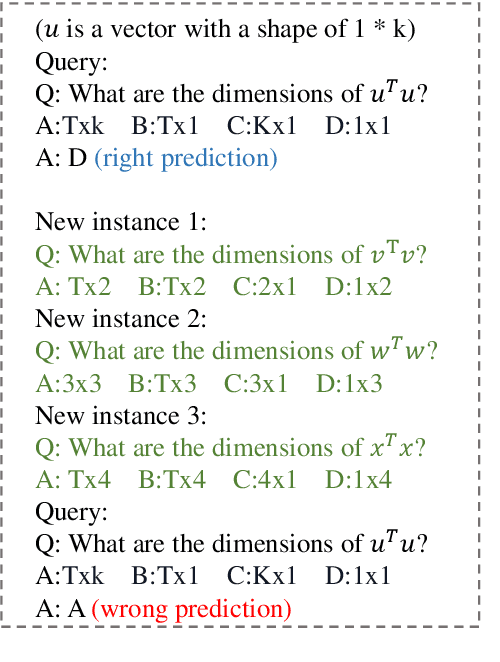

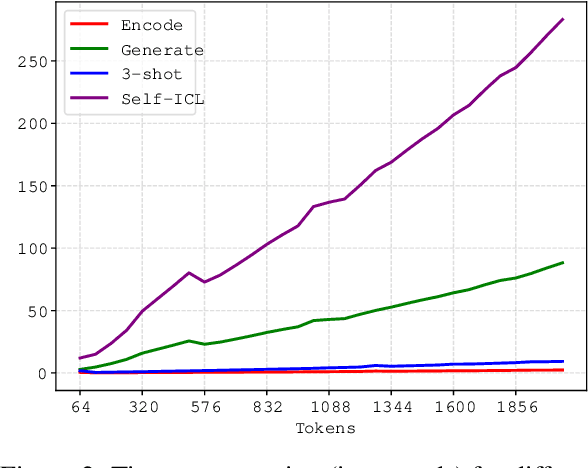

Abstract: Large Language Models (LLMs) have demonstrated an impressive capability known as In-context Learning (ICL), which enables them to acquire knowledge from textual demonstrations without the need for parameter updates. However, many studies have highlighted that the model's performance is sensitive to the choice of demonstrations, presenting a significant challenge for practical applications where we lack prior knowledge of user queries. Consequently, we need to construct an extensive demonstration pool and incorporate external databases to assist the model, leading to considerable time and financial costs. In light of this, some recent research has shifted focus towards zero-shot ICL, aiming to reduce the model's reliance on external information by leveraging the model's inherent generative capabilities. Despite the effectiveness of these approaches, the content generated by the model may be unreliable, and the generation process is time-consuming. To address these issues, we propose Demonstration Augmentation for In-context Learning (DAIL), which employs the model's previously predicted historical samples as demonstrations for subsequent ones. DAIL brings no additional inference cost and does not rely on the model's generative capabilities. Our experiments reveal that DAIL can significantly improve the model's performance over direct zero-shot inference and can even outperform few-shot ICL without any external information.
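
The idea of reusing earlier predictions as demonstrations can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not say how demonstrations are chosen, so the word-overlap (Jaccard) ranking, the prompt template, the parameter `k`, and the `predict` callable standing in for a language model are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity; an assumed stand-in for any retrieval score."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def build_prompt(demos: List[Tuple[str, str]], query: str) -> str:
    """Format past (input, predicted label) pairs followed by the new query."""
    parts = [f"Input: {q}\nLabel: {y}" for q, y in demos]
    parts.append(f"Input: {query}\nLabel:")
    return "\n\n".join(parts)


def run_with_history(
    queries: List[str],
    predict: Callable[[str], str],  # hypothetical model call: prompt -> label
    k: int = 2,
) -> List[str]:
    """Answer queries in order, using earlier predictions as demonstrations."""
    history: List[Tuple[str, str]] = []  # (query, predicted label) pairs
    outputs: List[str] = []
    for query in queries:
        # Pick the k most similar past samples; the first query has none,
        # so it falls back to plain zero-shot inference.
        demos = sorted(history, key=lambda d: jaccard(d[0], query), reverse=True)[:k]
        label = predict(build_prompt(demos, query))
        history.append((query, label))  # the prediction itself becomes a demo
        outputs.append(label)
    return outputs
```

Each query still costs a single model call in this sketch, and no external demonstration pool is consulted; the only state carried between queries is the list of past predictions.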

A Survey Of Regression Algorithms And Connections With Deep Learning

Apr 26, 2021

Abstract: Regression has attracted immense interest lately due to its effectiveness in tasks such as predicting values. Regression is widely used in fields such as economics, finance, business, and biology. While many studies have proposed impressive models, few have provided a complete picture of how and to what extent regression has developed. To help beginners understand the relationships among different regression algorithms, this paper characterizes a broad and thoughtful selection of recent regression algorithms, providing an organized and comprehensive overview of existing work and frequently used models. The paper also discusses the relationship between regression and deep learning, and concludes that deep learning may become more powerful when combined with regression models in the future.
