Yunjie Peng

Deep Learning-based Occluded Person Re-identification: A Survey

Jul 29, 2022

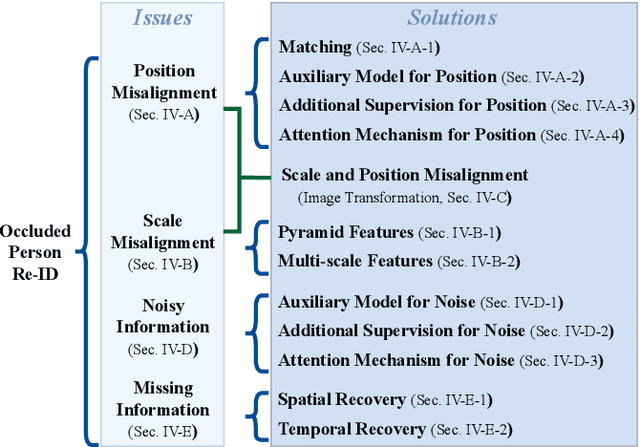

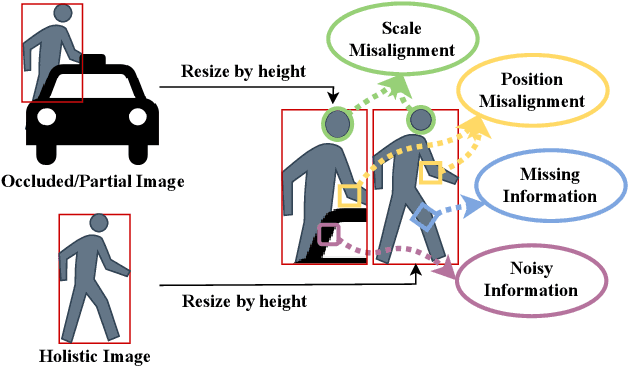

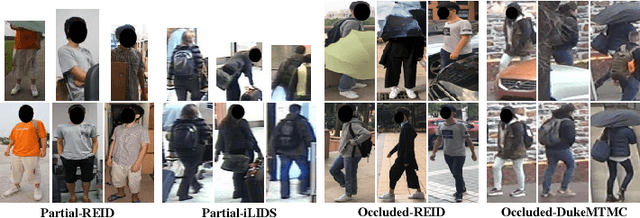

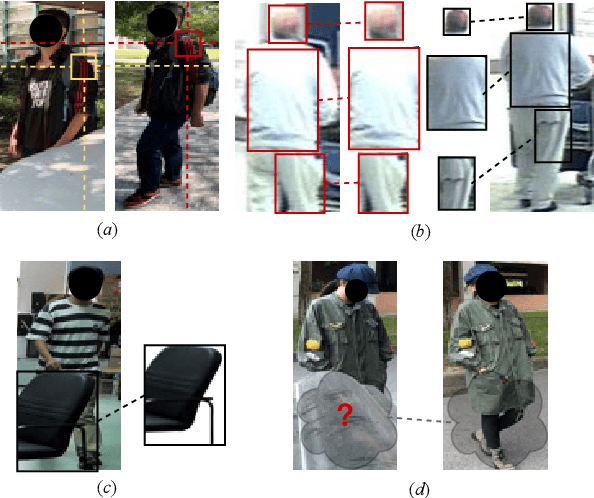

Abstract: Occluded person re-identification (Re-ID) aims to address the occlusion problem when retrieving a person of interest across multiple cameras. With the advancement of deep learning and the increasing demand for intelligent video surveillance, the frequent occlusion encountered in real-world applications has drawn considerable research interest to occluded person Re-ID. Many occluded person Re-ID methods have been proposed, yet few surveys focus on occlusion. To fill this gap and help boost future research, this paper provides a systematic survey of occluded person Re-ID. An in-depth analysis of occlusion in person Re-ID shows that most existing methods consider only some of the problems that occlusion causes. Therefore, we review occlusion-related person Re-ID methods from the perspective of issues and solutions. We summarize four issues caused by occlusion in person Re-ID: position misalignment, scale misalignment, noisy information, and missing information. The occlusion-related methods are then categorized and introduced according to the issues they address. After that, we summarize and compare the performance of recent occluded person Re-ID methods on four popular datasets: Partial-ReID, Partial-iLIDS, Occluded-ReID, and Occluded-DukeMTMC. Finally, we provide insights on promising future research directions.

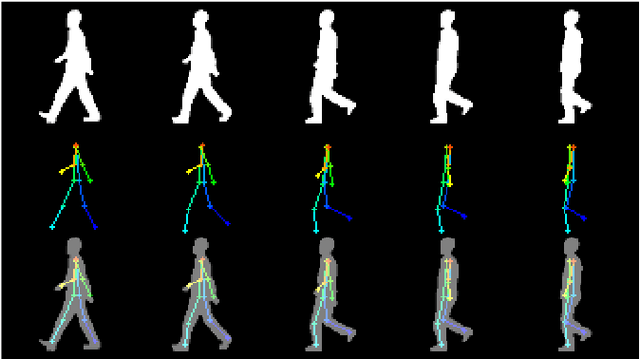

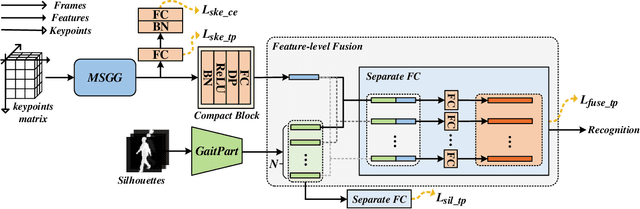

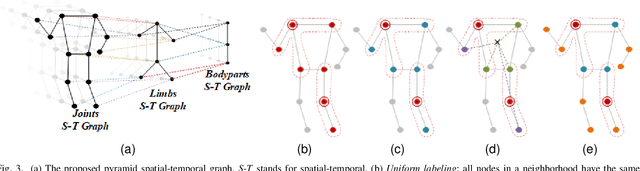

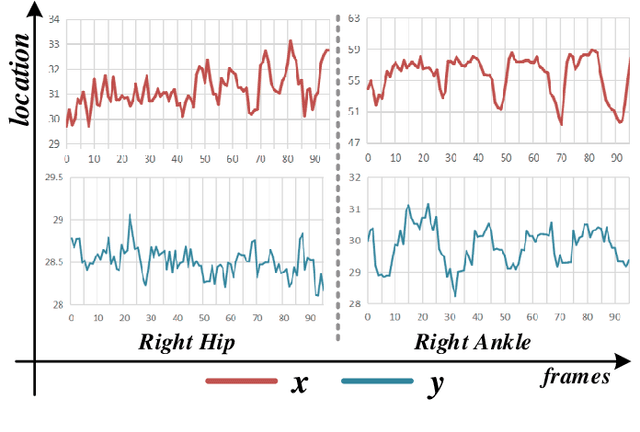

Learning Rich Features for Gait Recognition by Integrating Skeletons and Silhouettes

Oct 26, 2021

Abstract: Gait recognition captures gait patterns from the walking sequence of an individual for identification. Most existing gait recognition methods learn features from either silhouettes or skeletons for robustness to clothing, carrying, and other exterior factors. The combination of the two data modalities, however, has not been fully exploited. This paper proposes a simple yet effective bimodal fusion (BiFusion) network, which mines the complementary clues of skeletons and silhouettes to learn rich features for gait identification. In particular, the inherent hierarchical semantics of body joints in a skeleton is leveraged to design a novel Multi-scale Gait Graph (MSGG) network for skeleton feature extraction. Extensive experiments on CASIA-B and OUMVLP demonstrate both the superiority of the proposed MSGG network in modeling skeletons and the effectiveness of the bimodal fusion for gait recognition. Under the most challenging condition on CASIA-B, walking in different clothes, our method achieves a rank-1 accuracy of 92.1%.
