Yunjie Chen

Enhancing Visual Programming for Visual Reasoning via Probabilistic Graphs

Dec 16, 2025

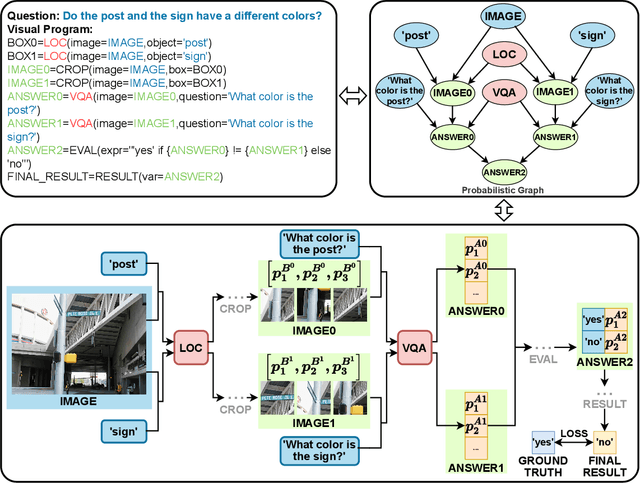

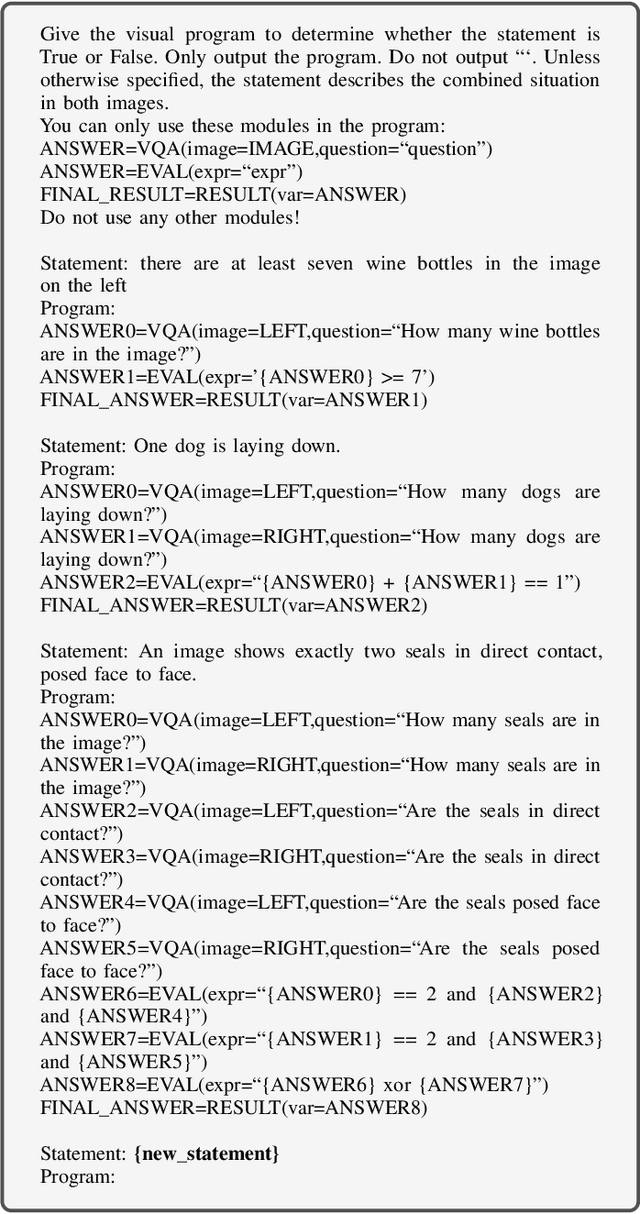

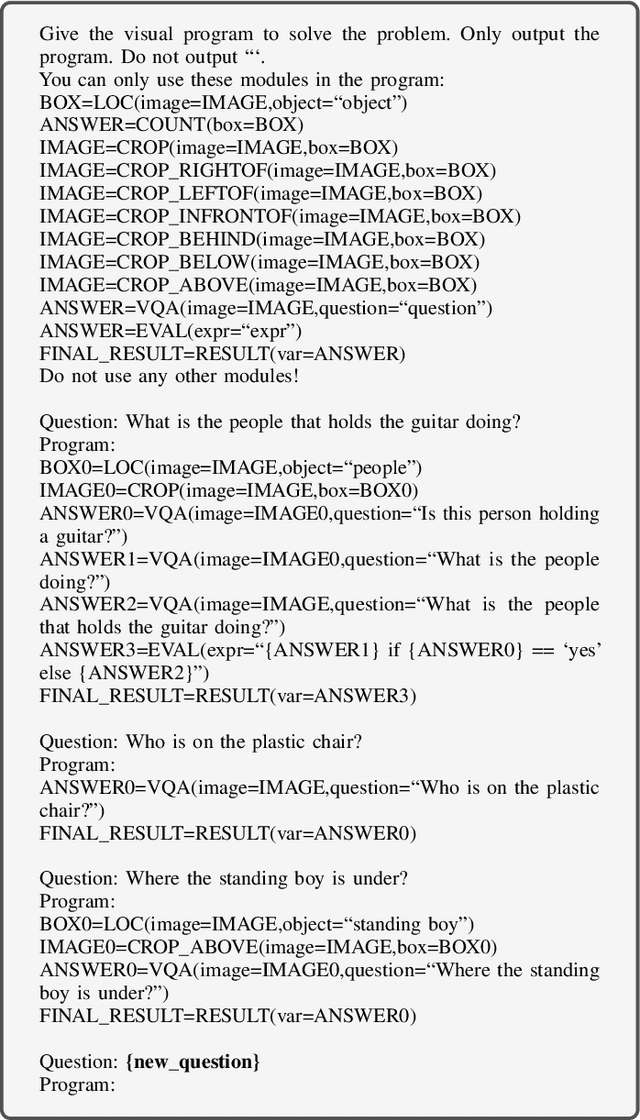

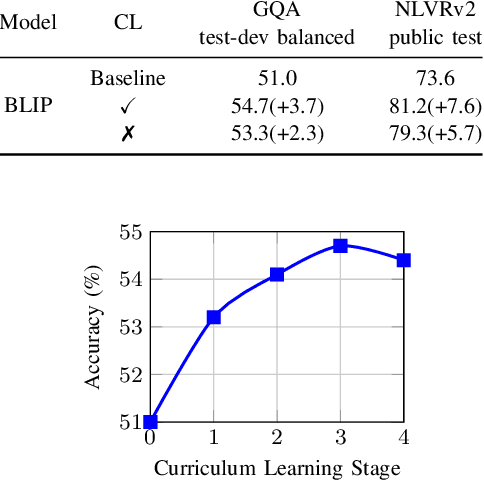

Abstract: Recently, Visual Programming (VP) based on large language models (LLMs) has developed rapidly and demonstrated significant potential in complex Visual Reasoning (VR) tasks. Previous works to enhance VP have primarily focused on improving the quality of LLM-generated visual programs. However, they have neglected to optimize the VP-invoked pre-trained models, which serve as modules for the visual sub-tasks decomposed from the targeted tasks by VP. The difficulty is that only the final labels of the targeted VR tasks are available, not labels for the sub-tasks. Besides, the non-differentiable nature of VP impedes the direct use of efficient gradient-based optimization methods to leverage final labels for end-to-end learning of the entire VP framework. To overcome these issues, we propose EVPG, a method to Enhance Visual Programming for visual reasoning via Probabilistic Graphs. Specifically, we build a directed probabilistic graph according to the variable dependency relationships in the VP execution process, which recasts the non-differentiable VP execution process as a differentiable exact probability inference process on this directed probabilistic graph. As a result, the VP framework can use the final labels for efficient, gradient-based optimization in end-to-end supervised learning on targeted VR tasks. Extensive experiments demonstrate the effectiveness and advantages of EVPG, showing significant performance improvements for VP on three classical complex VR tasks: GQA, NLVRv2, and Open Images.
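The idea of replacing a hard, non-differentiable program step with probability inference can be illustrated with a toy sketch. This is not the EVPG algorithm: the two-variable program, the independence assumption, and the names `soft_and`, `soft_not`, `answer_prob`, and `nll` are all hypothetical and chosen only for illustration.

```python
import math

def soft_not(p):
    # P(not A) for a Boolean variable with P(A) = p
    return 1.0 - p

def soft_and(p_a, p_b):
    # P(A and B), assuming A and B are independent (an assumption of this sketch)
    return p_a * p_b

def answer_prob(p_dog, p_cat):
    # Hypothetical program: "is there a dog and no cat?"
    return soft_and(p_dog, soft_not(p_cat))

def nll(p_dog, p_cat):
    # Negative log-likelihood of the final label "yes"
    return -math.log(answer_prob(p_dog, p_cat))

# Hypothetical module outputs (probabilities from pre-trained detectors)
p_dog, p_cat = 0.9, 0.4

# The loss on the final label is differentiable in the module outputs:
# d nll / d p_dog = -1 / p_dog, checked here by a central finite difference.
h = 1e-6
grad_fd = (nll(p_dog + h, p_cat) - nll(p_dog - h, p_cat)) / (2 * h)
grad_exact = -1.0 / p_dog
```

Because the final-label loss has a well-defined gradient with respect to each module's output probability, supervision on the final answer alone can, in principle, be propagated back to the modules.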

Convolution Type of Metaplectic Cohen's Distribution Time-Frequency Analysis Theory, Method and Technology

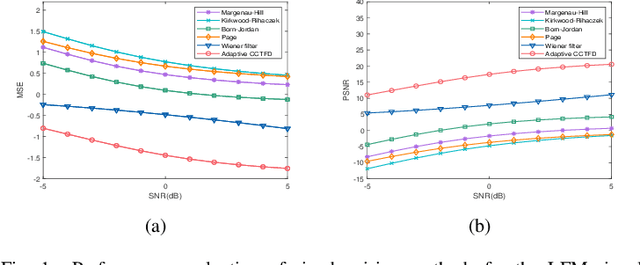

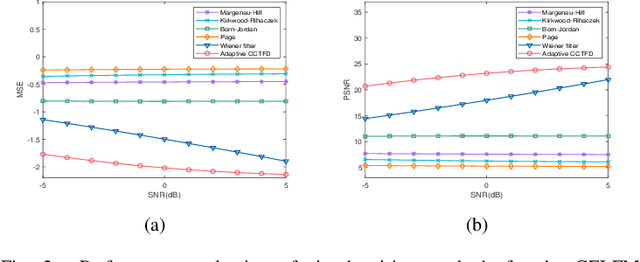

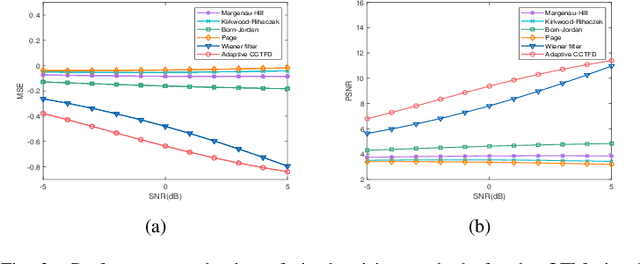

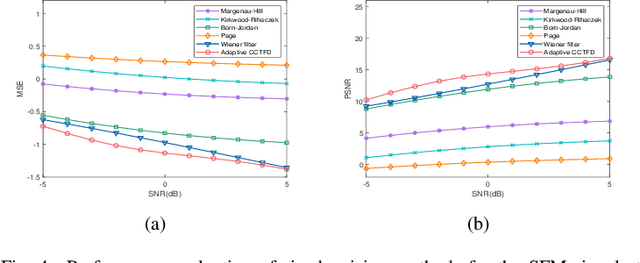

Aug 08, 2024

Abstract: The conventional Cohen's distribution cannot meet the requirement of high-performance denoising of signals jammed by additive noise under low signal-to-noise ratio conditions, so it is necessary to integrate the metaplectic transform for fractional-domain time-frequency analysis of non-stationary signals. In this paper, we blend time-frequency operators and coordinate operator fractionizations to formulate the definition of the metaplectic Wigner distribution, based on which we integrate the generalized metaplectic convolution to address the unified representation issue of the convolution type of metaplectic Cohen's distribution (CMCD), whose special cases and essential properties are also derived. We blend the Wiener filter principle and the fractional-domain filter mechanism of the metaplectic transform to design the least-squares adaptive filter method in the metaplectic Wigner distribution domain, giving rise to the least-squares adaptive filter-based CMCD, whose kernel function can be adjusted automatically with the input signal to achieve minimum mean-square error (MSE) denoising in the Wigner distribution domain. We discuss the strategy for selecting the optimal symplectic matrices of the proposed adaptive CMCD by modeling and solving the minimum-MSE problem. Examples demonstrate that the proposed filtering method outperforms several state-of-the-art methods, including the Wiener filter and Cohen's distributions with fixed or adaptive kernel functions, in noise suppression.

Adaptive Cohen's Class Time-Frequency Distribution

Aug 08, 2024

Abstract: The fixed kernel function-based Cohen's class time-frequency distributions (CCTFDs) allow flexibility in denoising for some specific polluted signals. Due to the limitation of fixed kernel functions, however, from a filtering viewpoint they fail to automatically adjust their response as the signal changes, and so cannot adapt to different signal characteristics. In this letter, we integrate the Wiener filter principle and the time-frequency filtering mechanism of CCTFD to design the least-squares adaptive filter method in the Wigner-Ville distribution (WVD) domain, giving rise to the least-squares adaptive filter-based CCTFD, whose kernel function can be adjusted automatically with the input signal to achieve minimum mean-square error denoising in the WVD domain. Examples demonstrate that the proposed adaptive CCTFD outperforms several state-of-the-art methods in noise suppression.
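For readers unfamiliar with the Wiener filter principle mentioned above, the following minimal sketch shows the classical pointwise least-squares gain in a generic transform domain. It assumes signal and noise are uncorrelated with known power at each bin; it is not the WVD-domain kernel design of the paper, and the function name `wiener_gain` and the sample values are illustrative.

```python
import numpy as np

def wiener_gain(signal_power, noise_power, eps=1e-12):
    """Pointwise gain H = S / (S + N) that minimizes mean-square error
    when signal and noise are uncorrelated (classical Wiener result)."""
    signal_power = np.asarray(signal_power, dtype=float)
    noise_power = np.asarray(noise_power, dtype=float)
    # eps guards against division by zero where both powers vanish
    return signal_power / (signal_power + noise_power + eps)

# Illustrative per-bin powers: strong signal, equal power, no signal
S = np.array([4.0, 1.0, 0.0])
N = np.array([1.0, 1.0, 1.0])
H = wiener_gain(S, N)  # approximately [0.8, 0.5, 0.0]
```

The gain approaches 1 where the signal dominates and 0 where the noise dominates, which is the sense in which such a filter adapts its response to the input.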

Vestibular schwannoma growth prediction from longitudinal MRI by time conditioned neural fields

Apr 04, 2024

Abstract: Vestibular schwannomas (VS) are benign tumors that are generally managed by active surveillance with MRI examination. To further assist clinical decision-making and avoid overtreatment, an accurate prediction of tumor growth based on longitudinal imaging is highly desirable. In this paper, we introduce DeepGrowth, a deep learning method that incorporates neural fields and recurrent neural networks for prospective tumor growth prediction. In the proposed method, each tumor is represented as a signed distance function (SDF) conditioned on a low-dimensional latent code. Unlike previous studies that perform tumor shape prediction directly in the image space, we predict the latent codes instead and then reconstruct future shapes from them. To deal with irregular time intervals, we introduce a time-conditioned recurrent module based on a ConvLSTM and a novel temporal encoding strategy, which enables the proposed model to output varying tumor shapes over time. The experiments on an in-house longitudinal VS dataset showed that the proposed model significantly improved the performance ($\ge 1.6\%$ Dice score and $\ge 0.20$ mm 95% Hausdorff distance), in particular for the top 20% of tumors that grow or shrink the most ($\ge 4.6\%$ Dice score and $\ge 0.73$ mm 95% Hausdorff distance). Our code is available at https://github.com/cyjdswx/DeepGrowth
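As a small illustration of two concepts named in the abstract, the sketch below represents a shape by a signed distance function and compares two shapes with the Dice score. It uses an analytic circle in 2D rather than a learned, latent-conditioned SDF, so it is not the DeepGrowth model; the grid size, radii, and function names are assumptions made for the example.

```python
import numpy as np

def circle_sdf(xx, yy, cx, cy, r):
    # Signed distance to a circle: negative inside, zero on the boundary
    return np.sqrt((xx - cx) ** 2 + (yy - cy) ** 2) - r

def dice(a, b):
    # Dice score between two boolean masks: 2|A and B| / (|A| + |B|)
    inter = np.logical_and(a, b).sum()
    total = a.sum() + b.sum()
    return 2.0 * inter / total if total > 0 else 1.0

# Pixel coordinate grid (rows = y, columns = x)
yy, xx = np.mgrid[0:64, 0:64]

# The shape is recovered as the region where the SDF is non-positive
mask_now = circle_sdf(xx, yy, 32, 32, 10) <= 0
mask_later = circle_sdf(xx, yy, 32, 32, 12) <= 0  # hypothetical grown shape

score = dice(mask_now, mask_later)
```

Thresholding the SDF at zero yields the shape mask, which is why predicting the SDF (or a code that generates it) is enough to reconstruct a future shape.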

CoNeS: Conditional neural fields with shift modulation for multi-sequence MRI translation

Sep 06, 2023

Abstract: Multi-sequence magnetic resonance imaging (MRI) has found wide applications in both modern clinical studies and deep learning research. However, in clinical practice, it frequently occurs that one or more of the MRI sequences are missing due to different image acquisition protocols or contrast agent contraindications of patients, limiting the utilization of deep learning models trained on multi-sequence data. One promising approach is to leverage generative models to synthesize the missing sequences, which can serve as a surrogate acquisition. State-of-the-art methods tackling this problem are based on convolutional neural networks (CNN) which usually suffer from spectral biases, resulting in poor reconstruction of high-frequency fine details. In this paper, we propose Conditional Neural fields with Shift modulation (CoNeS), a model that takes voxel coordinates as input and learns a representation of the target images for multi-sequence MRI translation. The proposed model uses a multi-layer perceptron (MLP) instead of a CNN as the decoder for pixel-to-pixel mapping. Hence, each target image is represented as a neural field that is conditioned on the source image via shift modulation with a learned latent code. Experiments on BraTS 2018 and an in-house clinical dataset of vestibular schwannoma patients showed that the proposed method outperformed state-of-the-art methods for multi-sequence MRI translation both visually and quantitatively. Moreover, we conducted spectral analysis, showing that CoNeS was able to overcome the spectral bias issue common in conventional CNN models. To further evaluate the usage of synthesized images in clinical downstream tasks, we tested a segmentation network using the synthesized images at inference.
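A coordinate MLP conditioned by shift modulation can be sketched as follows. This is a minimal illustration under stated assumptions, not the CoNeS architecture: the layer sizes, the `tanh` activation, the random (untrained) weights, and the names `W_shift` and `neural_field` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN, LATENT_DIM = 16, 8

# Randomly initialized weights stand in for trained parameters
W1 = rng.normal(size=(2, HIDDEN))
b1 = np.zeros(HIDDEN)
W_shift = rng.normal(size=(LATENT_DIM, HIDDEN))  # maps latent code to a shift
W2 = rng.normal(size=(HIDDEN, 1))
b2 = np.zeros(1)

def neural_field(coords, latent):
    """Map (N, 2) coordinates to (N, 1) intensities.
    The latent code only adds a shift to the hidden pre-activations."""
    shift = latent @ W_shift                  # shape (HIDDEN,)
    hidden = np.tanh(coords @ W1 + b1 + shift)
    return hidden @ W2 + b2

coords = rng.uniform(-1.0, 1.0, size=(5, 2))
latent_a = rng.normal(size=LATENT_DIM)
latent_b = rng.normal(size=LATENT_DIM)

out_a = neural_field(coords, latent_a)
out_b = neural_field(coords, latent_b)
```

The same network weights serve every image; only the latent-derived shift changes, which is what makes the field conditional on the source.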

Local implicit neural representations for multi-sequence MRI translation

Feb 02, 2023

Abstract: In radiological practice, multi-sequence MRI is routinely acquired to characterize anatomy and tissue. However, due to the heterogeneity of imaging protocols and contraindications to contrast agents, some MRI sequences, e.g., the contrast-enhanced T1-weighted image (T1ce), may not be acquired. This creates difficulties for large-scale clinical studies for which heterogeneous datasets are aggregated. Modern deep learning techniques have demonstrated the capability of synthesizing missing sequences from existing sequences by learning from an extensive multi-sequence MRI dataset. In this paper, we propose a novel MR image translation solution based on local implicit neural representations. We split the available MRI sequences into local patches and assign to each patch a local multi-layer perceptron (MLP) that represents the corresponding patch in the T1ce. The parameters of these local MLPs are generated by a hypernetwork based on image features. Experimental results and ablation studies on the BraTS challenge dataset showed that the local MLPs are critical for recovering fine image and tumor details, as they allow for local specialization that is highly important for accurate image translation. Compared to a classical pix2pix model, the proposed method demonstrated visual improvement and significantly improved quantitative scores (MSE $0.86 \times 10^{-3}$ vs. $1.02 \times 10^{-3}$ and SSIM 94.9 vs. 94.3).
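The hypernetwork idea, where one network outputs the parameters of another, can be sketched in a few lines. This is an assumption-laden toy and not the paper's model: the linear hypernetwork, the feature and hidden sizes, and the names `hypernetwork` and `local_mlp` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
FEAT, HID = 4, 8
# Parameter count of a 2 -> HID -> 1 MLP: W1, b1, W2, b2
N_PARAMS = 2 * HID + HID + HID + 1

# A linear hypernetwork with random (untrained) weights
hyper_W = rng.normal(scale=0.1, size=(FEAT, N_PARAMS))

def hypernetwork(patch_feature):
    """Turn one patch's feature vector into the weights of its local MLP."""
    theta = patch_feature @ hyper_W
    i = 0
    W1 = theta[i:i + 2 * HID].reshape(2, HID); i += 2 * HID
    b1 = theta[i:i + HID]; i += HID
    W2 = theta[i:i + HID].reshape(HID, 1); i += HID
    b2 = theta[i:i + 1]
    return W1, b1, W2, b2

def local_mlp(coords, params):
    # Evaluate the patch-specific MLP at (N, 2) local coordinates
    W1, b1, W2, b2 = params
    return np.tanh(coords @ W1 + b1) @ W2 + b2

coords = rng.uniform(-1.0, 1.0, size=(6, 2))
feature_patch_a = rng.normal(size=FEAT)  # hypothetical image features
feature_patch_b = rng.normal(size=FEAT)

pred_a = local_mlp(coords, hypernetwork(feature_patch_a))
pred_b = local_mlp(coords, hypernetwork(feature_patch_b))
```

Each patch gets its own MLP weights from its own features, which is one way to obtain the local specialization the abstract describes.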
