Yunhua Xiang

On the Optimality of Nuclear-norm-based Matrix Completion for Problems with Smooth Non-linear Structure

May 05, 2021

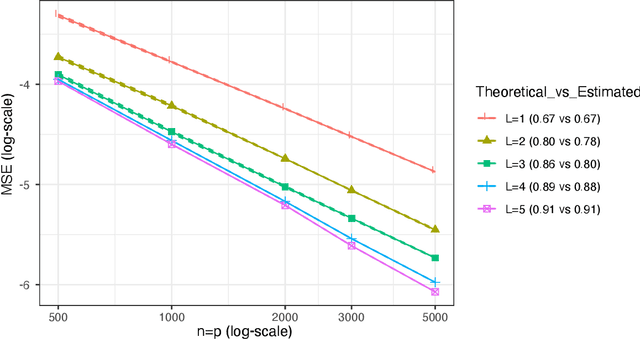

Abstract: Originally developed for imputing missing entries in low-rank or approximately low-rank matrices, matrix completion has proven widely effective in many problems where there is no reason to assume low-dimensional linear structure in the underlying matrix, as would be imposed by rank constraints. In this manuscript, we build some theoretical intuition for this behavior. We consider matrices which are not necessarily low-rank, but lie on a low-dimensional non-linear manifold. We show that nuclear-norm penalization is still effective for recovering these matrices when observations are missing completely at random. In particular, we give upper bounds on the rate of convergence as a function of the number of rows, columns, and observed entries in the matrix, as well as the smoothness and dimension of the non-linear embedding. We additionally give a minimax lower bound that agrees with our upper bound up to a logarithmic factor, which shows that nuclear-norm penalization is (up to log terms) minimax rate optimal for these problems.
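As a rough illustration of the setting described in this abstract, the sketch below applies nuclear-norm-penalized completion to a matrix whose entries are a smooth non-linear function of one-dimensional latent variables, with entries missing completely at random. The solver is a generic soft-impute iteration (repeated SVD soft-thresholding), swapped in for illustration and not taken from the paper; the function name `soft_impute`, the penalty `lam`, the kernel used to build `truth`, and the 50% observation rate are all assumptions.

```python
import numpy as np

def soft_impute(X_obs, mask, lam=1.0, n_iter=200):
    """Generic soft-impute sketch for nuclear-norm-penalized completion.

    X_obs : observed values (entries where mask is False are ignored)
    mask  : boolean array, True where an entry is observed
    lam   : nuclear-norm penalty (hypothetical default)
    """
    M = np.zeros_like(X_obs)
    for _ in range(n_iter):
        # Keep observed entries, fill the rest with the current estimate.
        filled = np.where(mask, X_obs, M)
        U, s, Vt = np.linalg.svd(filled, full_matrices=False)
        # Soft-threshold the singular values (proximal step for the nuclear norm).
        s = np.maximum(s - lam, 0.0)
        M = (U * s) @ Vt
    return M

# Assumed toy data: entries are a smooth non-linear function of latent u_i, v_j.
rng = np.random.default_rng(0)
n, m = 60, 50
u = rng.uniform(size=n)
v = rng.uniform(size=m)
truth = np.exp(-(u[:, None] - v[None, :]) ** 2)

# Entries are observed completely at random with probability 0.5.
mask = rng.uniform(size=(n, m)) < 0.5
X_obs = np.where(mask, truth, 0.0)

M_hat = soft_impute(X_obs, mask, lam=0.5)

# Compare error on the unobserved entries against a zero-fill baseline.
err_hat = np.mean((M_hat[~mask] - truth[~mask]) ** 2)
err_zero = np.mean(truth[~mask] ** 2)
```

The matrix `truth` here is not exactly low-rank, which is the point of the toy example: the penalty still recovers the unobserved entries far better than leaving them at zero.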

Statistical Inference Using Mean Shift Denoising

Oct 13, 2016

Abstract: In this paper, we study how the mean shift algorithm can be used to denoise a dataset. We introduce a new framework to analyze the mean shift algorithm as a denoising approach by viewing the algorithm as an operator on a distribution function. We investigate how the mean shift algorithm changes the distribution and show that data points shifted by the mean shift concentrate around high-density regions of the underlying density function. By using the mean shift as a denoising method, we enhance the performance of several clustering techniques, improve the power of two-sample tests, and obtain a new method for anomaly detection.
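The denoising idea in this abstract can be sketched with a few mean shift iterations under a Gaussian kernel: each point is repeatedly replaced by the kernel-weighted mean of the original data, which pulls it toward nearby high-density regions. This is a minimal sketch under assumed settings, not the paper's procedure; the function name `mean_shift_denoise`, the bandwidth, the iteration count, and the two-cluster toy data are all hypothetical.

```python
import numpy as np

def mean_shift_denoise(X, bandwidth=0.7, n_iter=5):
    """Shift each point toward the Gaussian-kernel-weighted mean of the data.

    bandwidth and n_iter are illustrative defaults, not values from the paper.
    """
    X = np.asarray(X, dtype=float)
    Y = X.copy()
    for _ in range(n_iter):
        # Squared distances from each shifted point to every original point.
        d2 = ((Y[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
        W = np.exp(-d2 / (2.0 * bandwidth ** 2))
        # One mean shift step: kernel-weighted average of the original data.
        Y = W @ X / W.sum(axis=1, keepdims=True)
    return Y

# Assumed toy data: two noisy clusters in the plane.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 5.0]])
labels = rng.integers(0, 2, size=200)
X = centers[labels] + rng.normal(scale=0.7, size=(200, 2))

Y = mean_shift_denoise(X)

# Average distance to the generating cluster center, before and after shifting.
spread_before = np.linalg.norm(X - centers[labels], axis=1).mean()
spread_after = np.linalg.norm(Y - centers[labels], axis=1).mean()
```

The shifted points `Y` can then be passed to a clustering routine or a two-sample test in place of `X`, which is the kind of use the abstract describes.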