Yuki Saigusa

Imitation Learning for Nonprehensile Manipulation through Self-Supervised Learning Considering Motion Speed

Jun 22, 2022

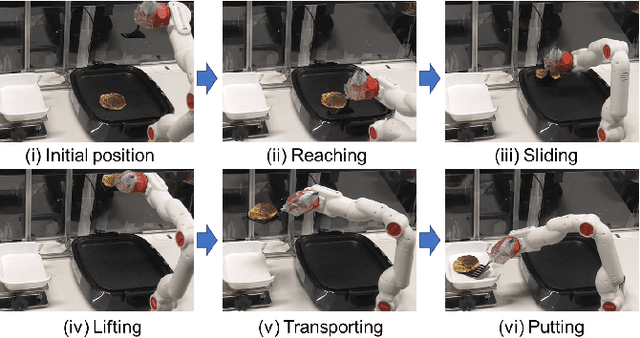

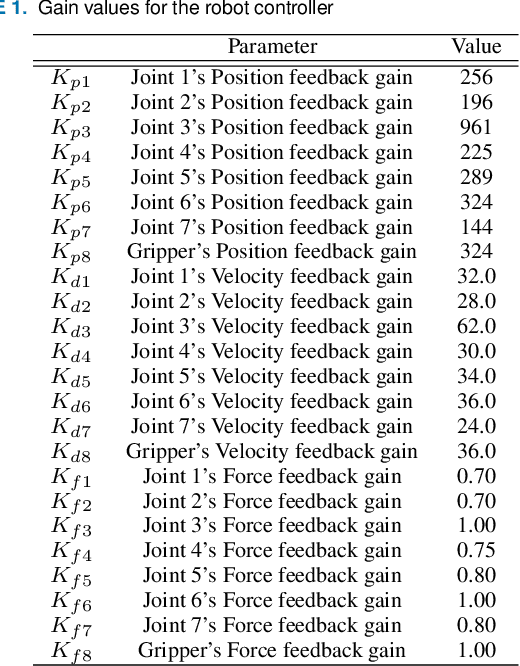

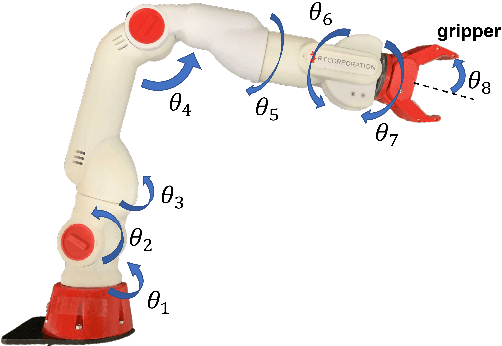

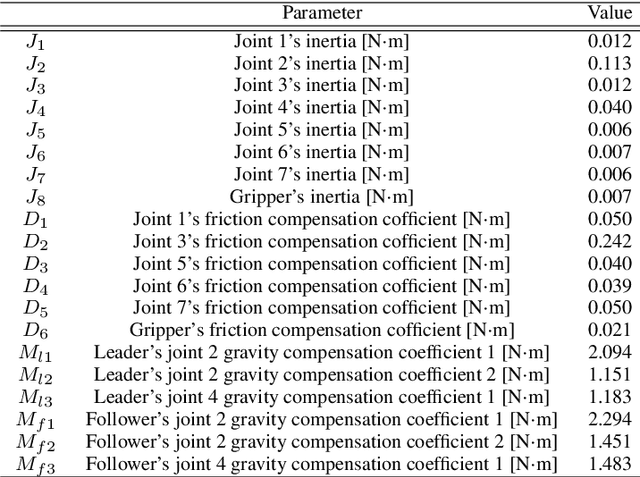

Abstract: Robots are expected to take over menial tasks such as housework. Some of these tasks involve nonprehensile manipulation, which is performed without grasping objects. Nonprehensile manipulation is very difficult because it requires considering the dynamics of environments and objects. Therefore, imitating complex behaviors requires a large number of human demonstrations. In this study, a self-supervised learning method that considers dynamics to achieve variable speed for nonprehensile manipulation is proposed. The proposed method collects only the successful action data obtained during autonomous operation and fine-tunes the model on it. By fine-tuning on the successful data, the robot learns the dynamics among itself, its environment, and objects. We experimented with the task of scooping and transporting pancakes using a neural network model trained on 24 human-collected training samples. The proposed method significantly improved the success rate from 40.2% to 85.7%, and succeeded at the task more than 75% of the time for other objects.
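The data-collection step described in the abstract (keep only the successful autonomous trials for fine-tuning) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the `Episode` type, the `run_episode` callback, and the function name `collect_successful_episodes` are all hypothetical, and how success is detected on the real robot is not stated in the abstract.

```python
from typing import Callable, List, Tuple

# Hypothetical episode format: a list of (state, action) pairs.
Episode = List[Tuple[float, float]]


def collect_successful_episodes(
    run_episode: Callable[[], Tuple[Episode, bool]],
    num_trials: int,
) -> List[Episode]:
    """Run autonomous trials and keep only those flagged as successful.

    `run_episode` is an assumed callback that executes one autonomous
    trial and returns the recorded episode plus a success flag.
    """
    successes: List[Episode] = []
    for _ in range(num_trials):
        episode, succeeded = run_episode()
        if succeeded:
            # Failed trials are discarded; only successes are kept
            # as fine-tuning data.
            successes.append(episode)
    return successes
```

The returned list would then serve as the fine-tuning set for the model that was initially trained on the human demonstrations.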

Imitation learning for variable speed motion generation over multiple actions

Mar 11, 2021

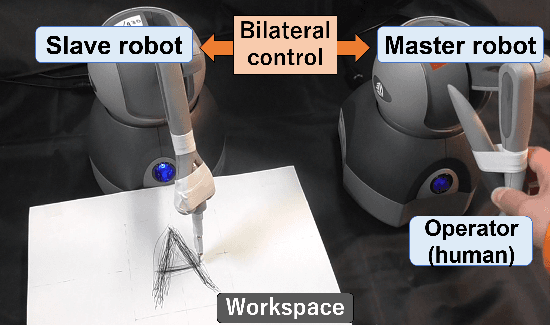

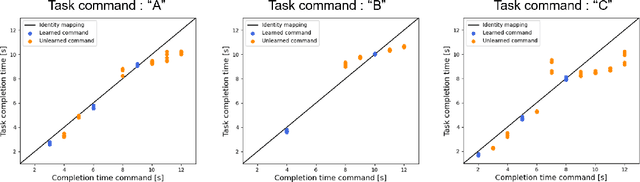

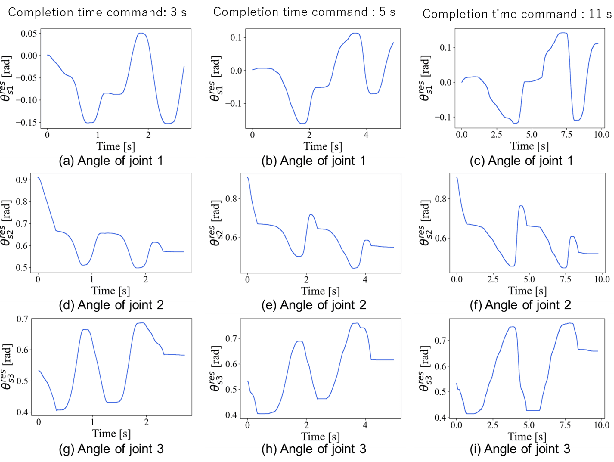

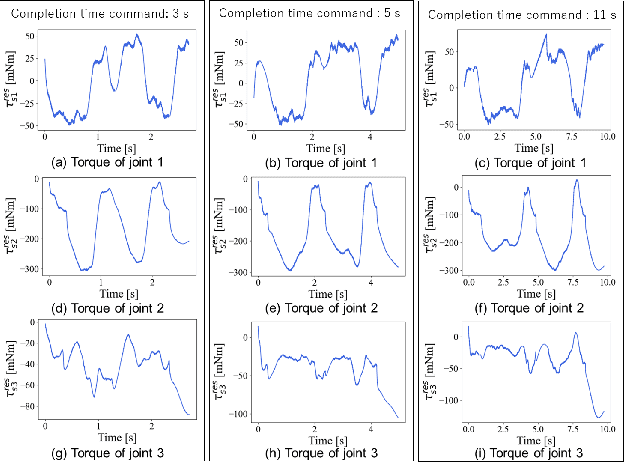

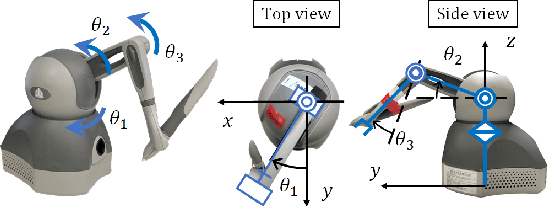

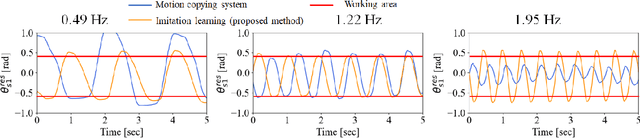

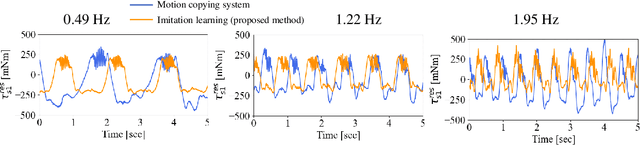

Abstract: Robot motion generation methods using machine learning have been studied in recent years. Bilateral control-based imitation learning can imitate human motions using force information. With this method, variable speed motion generation that considers physical phenomena such as inertial force and friction can be achieved. Previous research demonstrated that the complex relationship between force and speed can be learned by using a neural network model. However, the previous study only focused on a simple reciprocating motion. To learn the complex relationship between force and speed more accurately, it is necessary to learn multiple actions using many joints. In this paper, we propose a variable speed motion generation method for multiple motions. We considered four types of neural network models for motion generation and determined the best model for multiple motions at variable speeds. Subsequently, we used the best model to evaluate how closely the task completion time reproduced the input completion time command. The results revealed that the proposed method could change the task completion time according to the specified completion time command in multiple motions.

Imitation Learning for Variable Speed Object Manipulation

Feb 20, 2021

Abstract: To operate in a real-world environment, robots must meet several requirements, including environmental adaptability. Moreover, the desired success rate for the completion of tasks must be achieved. In this regard, end-to-end learning for autonomous operation is currently being investigated. However, the issue of operating speed has not been investigated in detail. Therefore, in this paper, we propose a method for generating variable operating speeds while adapting to perturbations in the environment. When the work speed changes, there is a nonlinear relationship between the operating speed and force (e.g., inertial and frictional forces). However, the proposed method can adapt to these nonlinearities using minimal motion data. We experimentally evaluated the proposed method on the task of erasing a line using an eraser fixed to the tip of a robot. Furthermore, the proposed method enables a robot to perform a task faster than a human operator.
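To illustrate why force does not scale linearly with operating speed, consider a textbook sketch that is not taken from the paper: a point mass $m$ with an assumed viscous friction coefficient $b$ and Coulomb friction magnitude $F_c$ follows a reference motion $x(t)$ replayed $k$ times faster ($k > 0$). All of these symbols are assumptions introduced for this example.

$$
x_k(t) = x(kt), \qquad \dot{x}_k(t) = k\,\dot{x}(kt), \qquad \ddot{x}_k(t) = k^2\,\ddot{x}(kt)
$$

$$
F_k(t) = m k^2\,\ddot{x}(kt) + b k\,\dot{x}(kt) + F_c\,\operatorname{sgn}\bigl(\dot{x}(kt)\bigr)
$$

Under this simplified model the inertial term grows with $k^2$, the viscous term with $k$, and the Coulomb term does not change, so simply replaying a recorded force profile at a different speed would not reproduce the required force.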
