Yujin Jeon

A Real-world Display Inverse Rendering Dataset

Aug 20, 2025

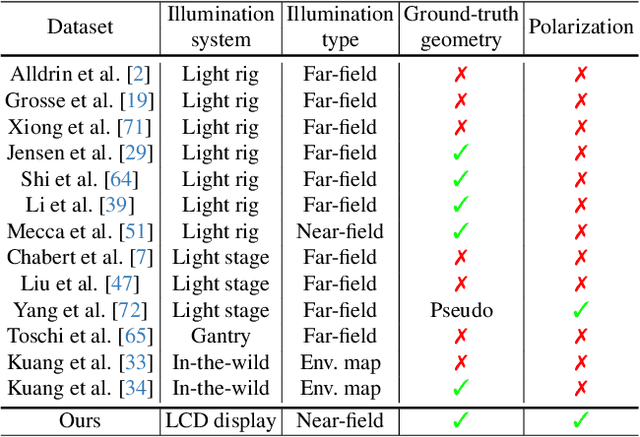

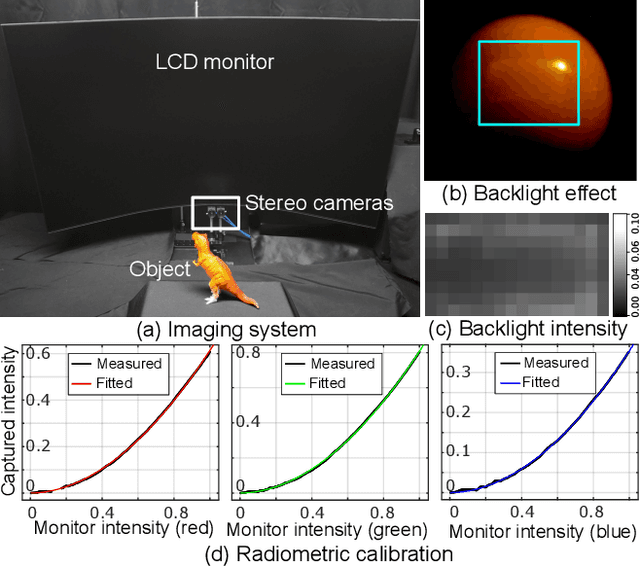

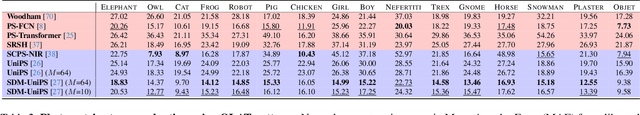

Abstract: Inverse rendering aims to reconstruct geometry and reflectance from captured images. Display-camera imaging systems offer unique advantages for this task: each pixel can easily function as a programmable point light source, and the polarized light emitted by LCD displays facilitates diffuse-specular separation. Despite these benefits, there is currently no public real-world dataset captured using display-camera systems, unlike other setups such as light stages. This absence hinders the development and evaluation of display-based inverse rendering methods. In this paper, we introduce the first real-world dataset for display-based inverse rendering. To achieve this, we construct and calibrate an imaging system comprising an LCD display and stereo polarization cameras. We then capture a diverse set of objects with diverse geometry and reflectance under one-light-at-a-time (OLAT) display patterns. We also provide high-quality ground-truth geometry. Our dataset enables the synthesis of captured images under arbitrary display patterns and different noise levels. Using this dataset, we evaluate the performance of existing photometric stereo and inverse rendering methods, and provide a simple, yet effective baseline for display inverse rendering, outperforming state-of-the-art inverse rendering methods. Code and dataset are available on our project page at https://michaelcsj.github.io/DIR/
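The claim that OLAT captures enable synthesis under arbitrary display patterns rests on the linearity of light transport: an image lit by several sources is the weighted sum of the images lit by each source alone. The sketch below illustrates that idea with NumPy and is not the authors' released code. The function name `relight_from_olat`, the array layout `(N, H, W, C)`, and the additive Gaussian noise model are all assumptions made for illustration; the dataset's actual file format and noise model may differ.

```python
import numpy as np


def relight_from_olat(olat_images, pattern_weights, noise_std=0.0, rng=None):
    """Synthesize an image under a display pattern from OLAT captures.

    olat_images:     array of shape (N, H, W, C), one linear-intensity
                     image per light (hypothetical layout).
    pattern_weights: array of shape (N,) or (N, C) giving the intensity
                     of each light in the target pattern.
    noise_std:       standard deviation of optional additive Gaussian
                     noise (an assumed noise model, not the paper's).
    """
    olat = np.asarray(olat_images, dtype=np.float64)
    weights = np.asarray(pattern_weights, dtype=np.float64)
    if weights.ndim == 1:
        # Use the same weight for every colour channel.
        weights = weights[:, None]
    if weights.shape[0] != olat.shape[0]:
        raise ValueError("need exactly one weight (row) per OLAT image")
    weights = np.broadcast_to(weights, (olat.shape[0], olat.shape[-1]))

    # Linearity of light transport: weighted sum over the light axis.
    image = np.einsum("nhwc,nc->hwc", olat, weights)

    if noise_std > 0:
        rng = np.random.default_rng() if rng is None else rng
        image = image + rng.normal(0.0, noise_std, size=image.shape)
    return image


# Toy example: two 2x2 RGB "captures" with constant values 1.0 and 2.0.
olat = np.stack([np.full((2, 2, 3), 1.0), np.full((2, 2, 3), 2.0)])
relit = relight_from_olat(olat, [0.5, 0.25])
```

The weighted sum is only valid on linear (radiometrically calibrated) pixel values, so gamma-encoded images would need to be linearized first.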

HyperCLOVA X Technical Report

Apr 13, 2024

Abstract: We introduce HyperCLOVA X, a family of large language models (LLMs) tailored to the Korean language and culture, along with competitive capabilities in English, math, and coding. HyperCLOVA X was trained on a balanced mix of Korean, English, and code data, followed by instruction-tuning with high-quality human-annotated datasets while abiding by strict safety guidelines reflecting our commitment to responsible AI. The model is evaluated across various benchmarks, including comprehensive reasoning, knowledge, commonsense, factuality, coding, math, chatting, instruction-following, and harmlessness, in both Korean and English. HyperCLOVA X exhibits strong reasoning capabilities in Korean backed by a deep understanding of the language and cultural nuances. Further analysis of the inherent bilingual nature and its extension to multilingualism highlights the model's cross-lingual proficiency and strong generalization ability to untargeted languages, including machine translation between several language pairs and cross-lingual inference tasks. We believe that HyperCLOVA X can provide helpful guidance for regions or countries in developing their sovereign LLMs.

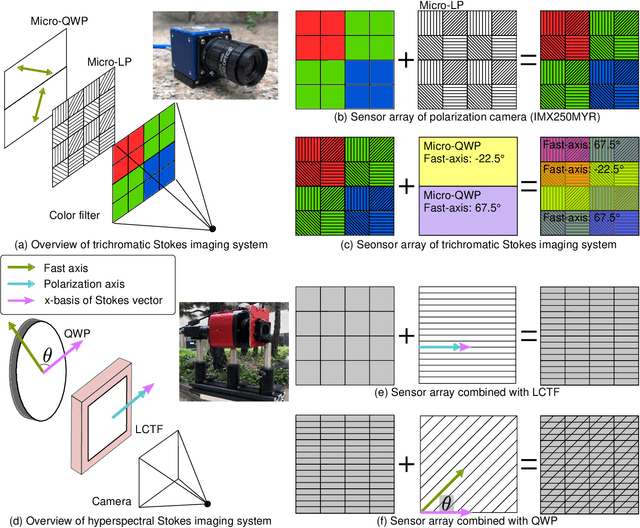

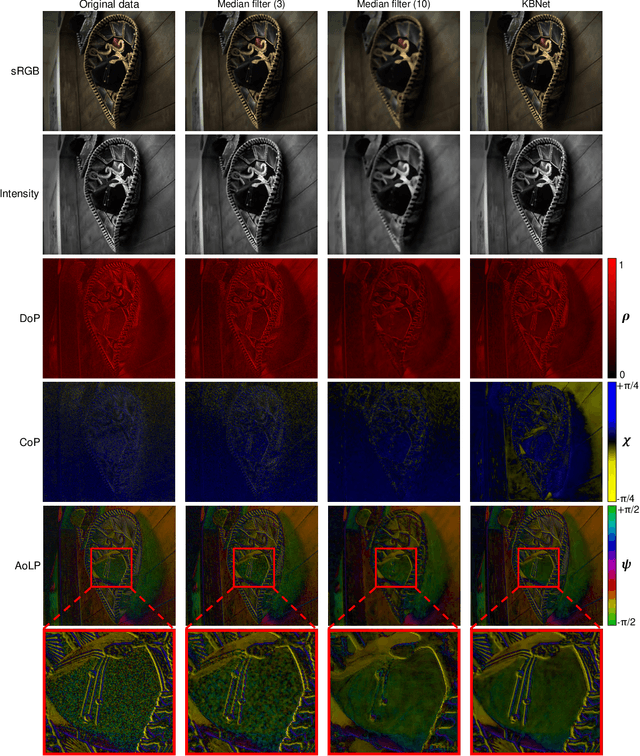

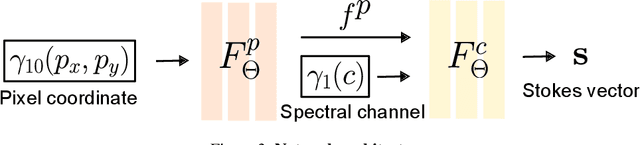

Spectral and Polarization Vision: Spectro-polarimetric Real-world Dataset

Nov 30, 2023

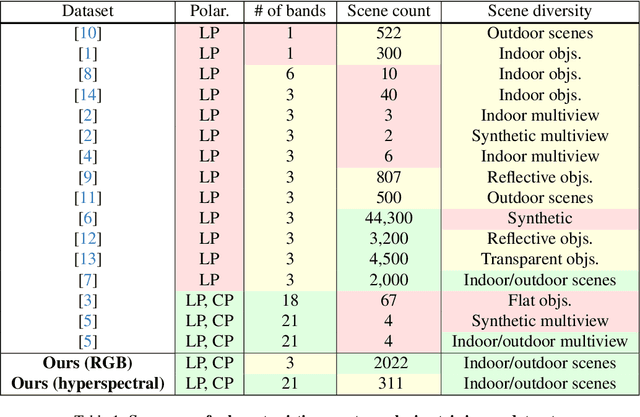

Abstract: Image datasets are essential not only in validating existing methods in computer vision but also in developing new methods. Most existing image datasets focus on trichromatic intensity images to mimic human vision. However, polarization and spectrum, the wave properties of light that animals in harsh environments and with limited brain capacity often rely on, remain underrepresented in existing datasets. Although spectro-polarimetric datasets exist, these datasets have insufficient object diversity, limited illumination conditions, linear-only polarization data, and inadequate image count. Here, we introduce two spectro-polarimetric datasets: trichromatic Stokes images and hyperspectral Stokes images. These novel datasets encompass both linear and circular polarization; they introduce multiple spectral channels; and they feature a broad selection of real-world scenes. With our dataset in hand, we analyze the spectro-polarimetric image statistics, develop efficient representations of such high-dimensional data, and evaluate spectral dependency of shape-from-polarization methods. As such, the proposed dataset promises a foundation for data-driven spectro-polarimetric imaging and vision research. Dataset and code will be publicly available.
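For readers unfamiliar with Stokes images: each pixel (and, here, each spectral channel) stores a four-component Stokes vector, from which the usual polarization measures follow directly. The sketch below uses the standard textbook definitions and is not taken from the dataset's code. The function name `stokes_summary` and the returned dictionary keys are hypothetical, and it processes a single Stokes vector rather than a full image.

```python
import math


def stokes_summary(s0, s1, s2, s3):
    """Derive common polarization measures from one Stokes vector.

    s0: total intensity; s1, s2: linear components; s3: circular component.
    """
    if s0 <= 0:
        raise ValueError("s0 (total intensity) must be positive")
    return {
        # Degree of linear polarization: uses s1 and s2 only.
        "dolp": math.hypot(s1, s2) / s0,
        # Degree of circular polarization: uses s3 only, so it is
        # unavailable in linear-only datasets.
        "docp": abs(s3) / s0,
        # Total degree of polarization.
        "dop": math.sqrt(s1 * s1 + s2 * s2 + s3 * s3) / s0,
        # Angle of linear polarization, in radians.
        "aolp": 0.5 * math.atan2(s2, s1),
    }


# Partially linearly polarized light with no circular component.
summary = stokes_summary(1.0, 0.3, 0.4, 0.0)
```

The circular term is what distinguishes the full-Stokes data described in the abstract from linear-only polarization datasets.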

Neural 360$^\circ$ Structured Light with Learned Metasurfaces

Jun 27, 2023

Abstract: Structured light has proven instrumental in 3D imaging, LiDAR, and holographic light projection. Metasurfaces, comprised of sub-wavelength-sized nanostructures, facilitate 180$^\circ$ field-of-view (FoV) structured light, circumventing the restricted FoV inherent in traditional optics like diffractive optical elements. However, extant metasurface-facilitated structured light exhibits sub-optimal performance in downstream tasks, due to heuristic pattern designs such as periodic dots that do not consider the objectives of the end application. In this paper, we present neural 360$^\circ$ structured light, driven by learned metasurfaces. We propose a differentiable framework, that encompasses a computationally-efficient 180$^\circ$ wave propagation model and a task-specific reconstructor, and exploits both transmission and reflection channels of the metasurface. Leveraging a first-order optimizer within our differentiable framework, we optimize the metasurface design, thereby realizing neural 360$^\circ$ structured light. We have utilized neural 360$^\circ$ structured light for holographic light projection and 3D imaging. Specifically, we demonstrate the first 360$^\circ$ light projection of complex patterns, enabled by our propagation model that can be computationally evaluated 50,000$\times$ faster than the Rayleigh-Sommerfeld propagation. For 3D imaging, we improve depth-estimation accuracy by 5.09$\times$ in RMSE compared to the heuristically-designed structured light. Neural 360$^\circ$ structured light promises robust 360$^\circ$ imaging and display for robotics, extended-reality systems, and human-computer interactions.
