Junsuk Rho

Learned split-spectrum metalens for obstruction-free broadband imaging in the visible

Jan 27, 2026

Abstract:Obstructions such as raindrops, fences, or dust degrade captured images, especially when mechanical cleaning is infeasible. Conventional solutions to obstructions rely on a bulky compound optics array or computational inpainting, which compromise compactness or fidelity. Metalenses composed of subwavelength meta-atoms promise compact imaging, but simultaneous achievement of broadband and obstruction-free imaging remains a challenge, since a metalens that images distant scenes across a broadband spectrum cannot properly defocus near-depth occlusions. Here, we introduce a learned split-spectrum metalens that enables broadband obstruction-free imaging. Our approach divides the spectrum of each RGB channel into pass and stop bands with multi-band spectral filtering and learns the metalens to focus light from far objects through pass bands, while filtering focused near-depth light through stop bands. This optical signal is further enhanced using a neural network. Our learned split-spectrum metalens achieves broadband and obstruction-free imaging with relative PSNR gains of 32.29% and improves object detection and semantic segmentation accuracies with absolute gains of +13.54% mAP, +48.45% IoU, and +20.35% mIoU over a conventional hyperbolic design. This promises robust obstruction-free sensing and vision for space-constrained systems, such as mobile robots, drones, and endoscopes.
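The abstract above reports a relative gain for PSNR and absolute gains for mAP, IoU, and mIoU. The sketch below shows how the two kinds of gain differ, using the standard PSNR definition. The function names and the MSE values are illustrative assumptions, not figures or code from the paper.

```python
import math


def psnr(mse: float, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    if mse <= 0:
        raise ValueError("mse must be positive")
    return 10.0 * math.log10(max_value ** 2 / mse)


def relative_gain(new: float, baseline: float) -> float:
    """Improvement of `new` over `baseline` as a percentage of the baseline."""
    return 100.0 * (new - baseline) / baseline


def absolute_gain(new: float, baseline: float) -> float:
    """Plain difference; for percentage metrics this is in percentage points."""
    return new - baseline


# Hypothetical MSE values chosen only to make the arithmetic easy to follow.
baseline_psnr = psnr(mse=1e-2)  # 20 dB for images scaled to [0, 1]
improved_psnr = psnr(mse=1e-3)  # 30 dB

print(f"relative PSNR gain: {relative_gain(improved_psnr, baseline_psnr):.1f}%")
print(f"absolute PSNR gain: {absolute_gain(improved_psnr, baseline_psnr):.1f} dB")
```

A relative gain depends on the baseline value, so the same absolute difference in dB can correspond to very different percentages.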

An accurate measurement of parametric array using a spurious sound filter topologically equivalent to a half-wavelength resonator

Apr 16, 2025

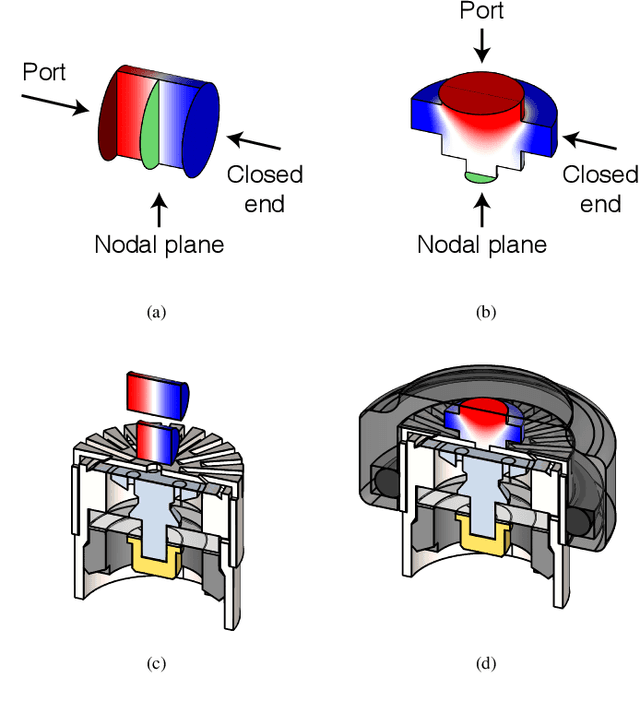

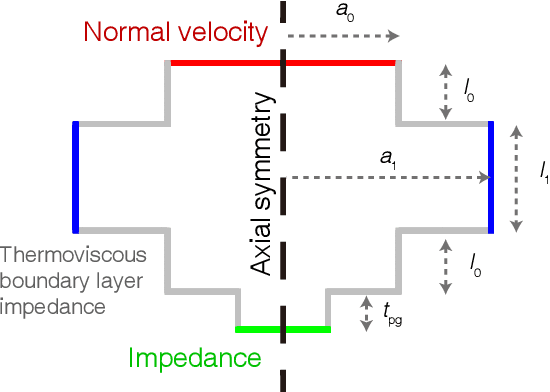

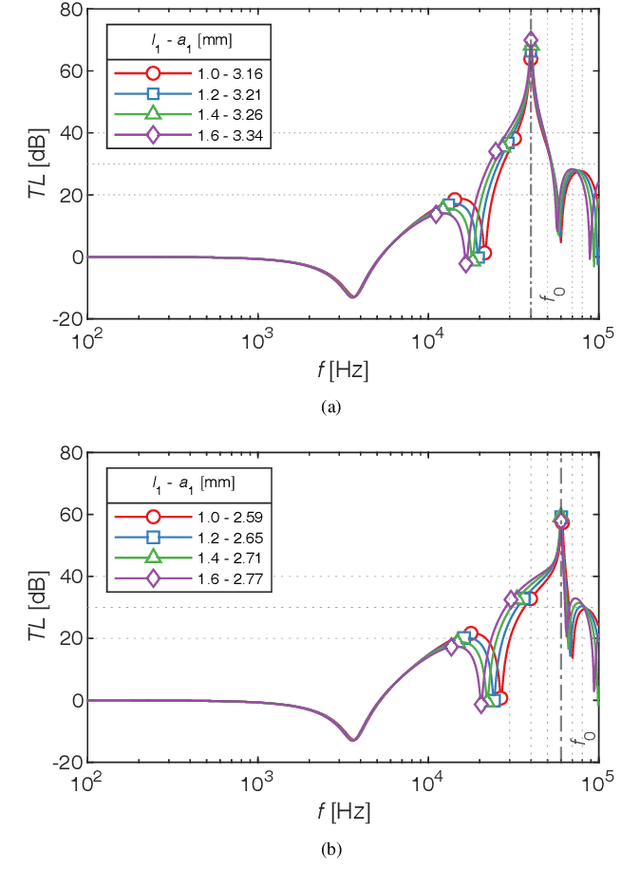

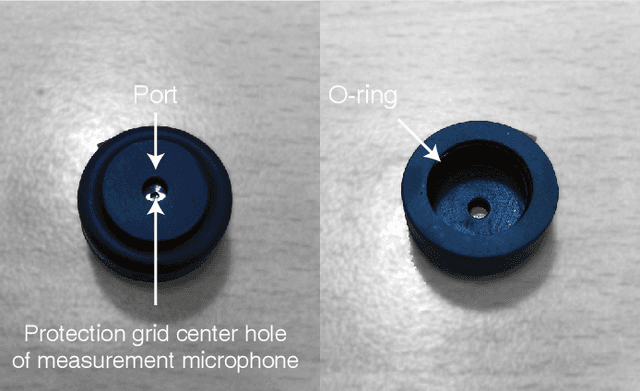

Abstract:Parametric arrays (PA) offer exceptional directivity and compactness compared to conventional loudspeakers, facilitating various acoustic applications. However, accurate measurement of audio signals generated by PA remains challenging due to spurious ultrasonic sounds arising from microphone nonlinearities. Existing filtering methods, including Helmholtz resonators, phononic crystals, polymer films, and grazing incidence techniques, exhibit practical constraints such as size limitations, fabrication complexity, or insufficient attenuation. To address these issues, we propose and demonstrate a novel acoustic filter based on the design of a half-wavelength resonator. The developed filter exploits the nodal plane in acoustic pressure distribution, effectively minimizing microphone exposure to targeted ultrasonic frequencies. Fabrication via stereolithography (SLA) 3D printing ensures high dimensional accuracy, which is crucial for high-frequency acoustic filters. Finite element method (FEM) simulations guided filter optimization for suppression frequencies at 40 kHz and 60 kHz, achieving high transmission loss (TL) around 60 dB. Experimental validations confirm the filter's superior performance in significantly reducing spurious acoustic signals, as reflected in frequency response, beam pattern, and propagation curve measurements. The proposed filter ensures stable and precise acoustic characterization, independent of measurement distances and incidence angles. This new approach not only improves measurement accuracy but also enhances reliability and reproducibility in parametric array research and development.
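As a rough illustration of the quantities mentioned above, the sketch below computes the length of an idealized half-wavelength resonator at 40 kHz and 60 kHz, the pressure node of an ideal rigid-ended tube, and the pressure ratio implied by a 60 dB transmission loss. The speed of sound (343 m/s), the one-dimensional rigid-ended tube model, and all function names are assumptions made here; the actual filter geometry in the paper may differ.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed, not from the paper)


def half_wave_length(frequency_hz: float, c: float = SPEED_OF_SOUND) -> float:
    """Length L = c / (2 f) of an ideal half-wavelength resonator, in metres."""
    return c / (2.0 * frequency_hz)


def pressure_profile(x: float, length: float) -> float:
    """Normalized fundamental-mode pressure in an ideal tube with rigid ends.

    The profile cos(pi * x / L) has antinodes at both ends and a node at L / 2.
    """
    return math.cos(math.pi * x / length)


def pressure_ratio_from_tl(tl_db: float) -> float:
    """Incident-to-transmitted pressure amplitude ratio for a given TL in dB."""
    return 10.0 ** (tl_db / 20.0)


for f in (40e3, 60e3):
    length = half_wave_length(f)
    print(f"{f / 1e3:.0f} kHz: L = {length * 1e3:.3f} mm, "
          f"pressure at mid-plane = {pressure_profile(length / 2, length):.1e}")

print(f"60 dB TL -> pressure reduced by a factor of {pressure_ratio_from_tl(60):.0f}")
```

The millimetre-scale lengths suggest why dimensional accuracy in fabrication matters at these frequencies: a small error in length shifts the suppressed frequency noticeably.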

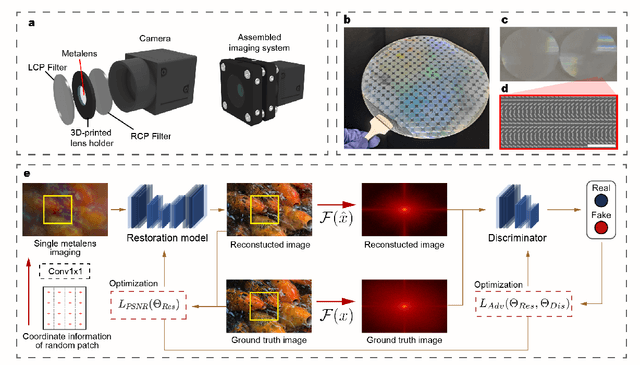

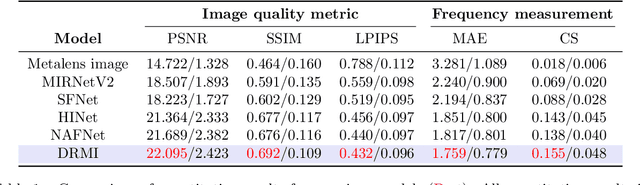

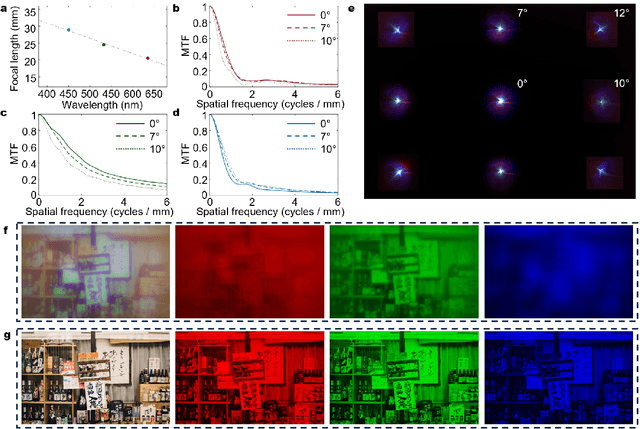

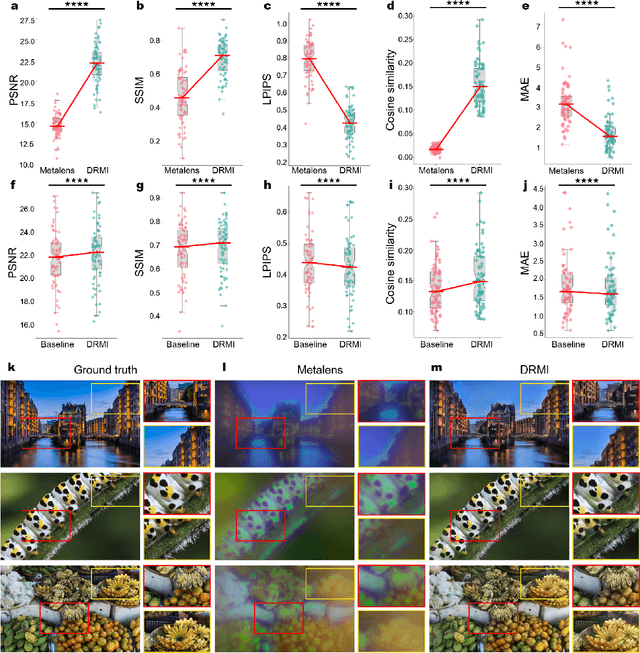

Deep-learning-driven end-to-end metalens imaging

Dec 05, 2023

Abstract:Recent advances in metasurface lenses (metalenses) have shown great potential for opening a new era in compact imaging, photography, light detection and ranging (LiDAR), and virtual reality/augmented reality (VR/AR) applications. However, the fundamental trade-off between broadband focusing efficiency and operating bandwidth limits the performance of broadband metalenses, resulting in chromatic aberration, angular aberration, and a relatively low efficiency. In this study, a deep-learning-based image restoration framework is proposed to overcome these limitations and realize end-to-end metalens imaging, thereby achieving aberration-free full-color imaging for mass-produced metalenses with 10-mm diameter. Neural network-assisted metalens imaging achieved a high resolution comparable to that of the ground truth image.

Neural 360$^\circ$ Structured Light with Learned Metasurfaces

Jun 27, 2023

Abstract:Structured light has proven instrumental in 3D imaging, LiDAR, and holographic light projection. Metasurfaces, comprised of sub-wavelength-sized nanostructures, facilitate 180$^\circ$ field-of-view (FoV) structured light, circumventing the restricted FoV inherent in traditional optics like diffractive optical elements. However, extant metasurface-facilitated structured light exhibits sub-optimal performance in downstream tasks, due to heuristic pattern designs such as periodic dots that do not consider the objectives of the end application. In this paper, we present neural 360$^\circ$ structured light, driven by learned metasurfaces. We propose a differentiable framework, that encompasses a computationally-efficient 180$^\circ$ wave propagation model and a task-specific reconstructor, and exploits both transmission and reflection channels of the metasurface. Leveraging a first-order optimizer within our differentiable framework, we optimize the metasurface design, thereby realizing neural 360$^\circ$ structured light. We have utilized neural 360$^\circ$ structured light for holographic light projection and 3D imaging. Specifically, we demonstrate the first 360$^\circ$ light projection of complex patterns, enabled by our propagation model that can be computationally evaluated 50,000$\times$ faster than the Rayleigh-Sommerfeld propagation. For 3D imaging, we improve depth-estimation accuracy by 5.09$\times$ in RMSE compared to the heuristically-designed structured light. Neural 360$^\circ$ structured light promises robust 360$^\circ$ imaging and display for robotics, extended-reality systems, and human-computer interactions.

Biomimetic Ultra-Broadband Perfect Absorbers Optimised with Reinforcement Learning

Oct 28, 2019

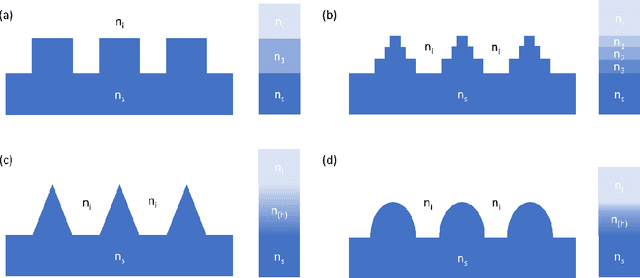

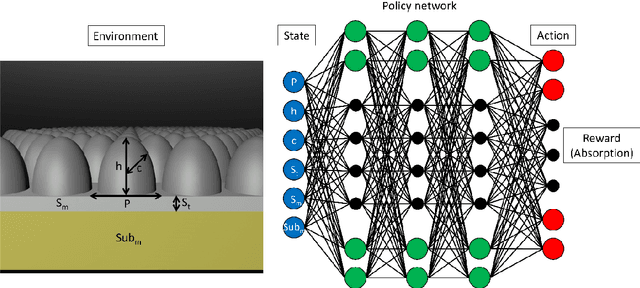

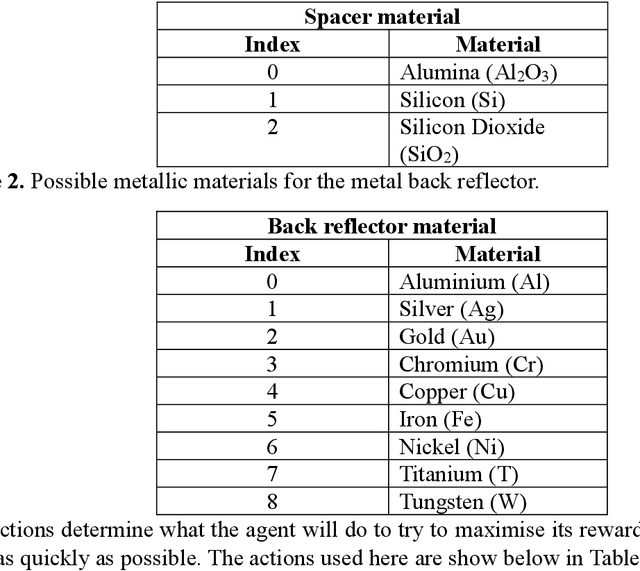

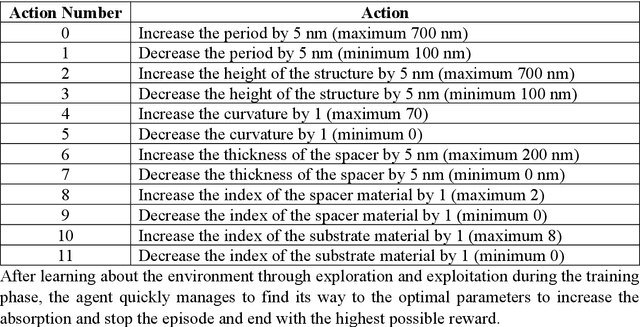

Abstract:By learning the optimal policy with a double deep Q-learning network (DDQN), we design ultra-broadband, biomimetic, perfect absorbers with various materials, based on the structure of a moth's eye. All absorbers achieve over 90% average absorption from 400 to 1,600 nm. By training a DDQN with moth-eye structures made up of chromium, we transfer the learned knowledge to other, similar materials to quickly and efficiently find the optimal parameters from around 1 billion possible options. The knowledge learned from previous optimisations helps the network to find the best solution for a new material in fewer steps, dramatically increasing the efficiency of finding designs with ultra-broadband absorption.
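For readers unfamiliar with double deep Q-learning, the sketch below shows the generic double-DQN bootstrap target next to the plain DQN target. It is a minimal illustration of the general technique, not the authors' implementation: the function names, the discount factor, and the Q-values are hypothetical, and the neural networks are replaced by plain lists of action values.

```python
from typing import Sequence


def dqn_target(reward: float, next_q_target: Sequence[float],
               gamma: float = 0.99, done: bool = False) -> float:
    """Plain DQN: the target network both selects and evaluates the action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward: float, next_q_online: Sequence[float],
                      next_q_target: Sequence[float],
                      gamma: float = 0.99, done: bool = False) -> float:
    """Double DQN: the online network selects, the target network evaluates."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


# Hypothetical action values for the next state (three candidate actions,
# e.g. three ways of changing one structural parameter).
q_online = [0.2, 0.9, 0.5]
q_target = [0.3, 0.4, 0.8]

print(dqn_target(1.0, q_target, gamma=0.9))                   # uses max of q_target
print(double_dqn_target(1.0, q_online, q_target, gamma=0.9))  # uses q_target[1]
```

Decoupling action selection from action evaluation is the standard motivation for double DQN, since taking the maximum over noisy estimates tends to overestimate action values.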

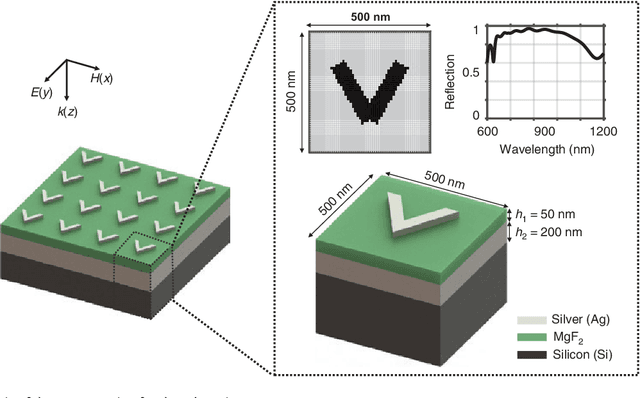

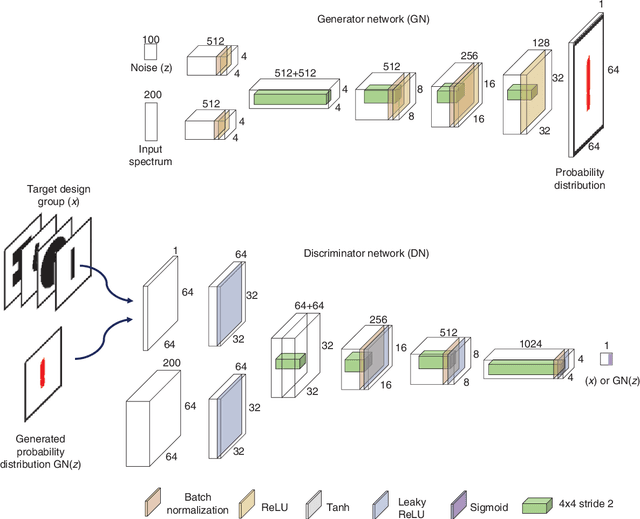

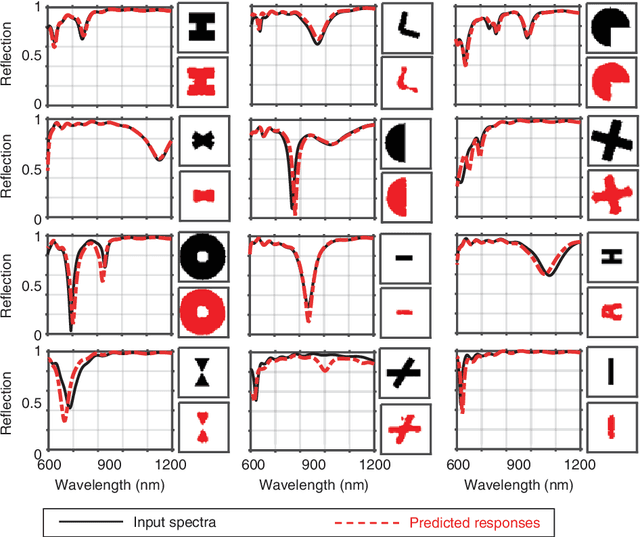

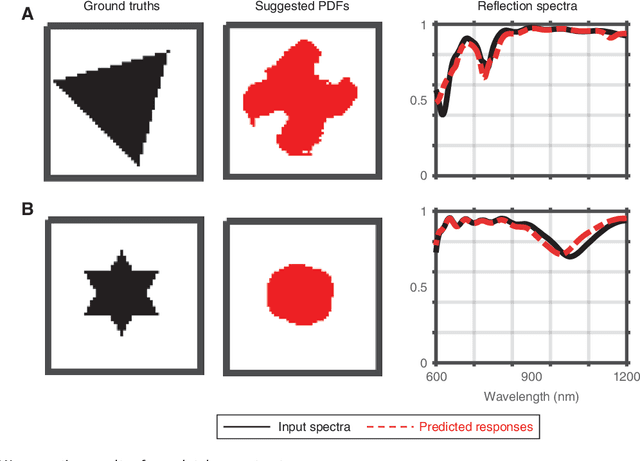

Designing nanophotonic structures using conditional-deep convolutional generative adversarial networks

Mar 20, 2019

Abstract:Data-driven design approaches based on deep learning have been introduced in nanophotonics to reduce time-consuming iterative simulations, which have been a major challenge. Here, we report the first use of conditional deep convolutional generative adversarial networks to design nanophotonic antennae that are not constrained to a predefined shape. For given input reflection spectra, the network generates desirable designs in the form of images; this form allows suggestions of new structures that cannot be represented by structural parameters. Simulation results obtained from the generated designs agreed well with the input reflection spectrum. This method opens new avenues towards the development of nanophotonics by providing a fast and convenient approach to design complex nanophotonic structures that have desired optical properties.

Finding the best design parameters for optical nanostructures using reinforcement learning

Oct 18, 2018

Abstract:Recently, a novel machine learning model known as deep Q-learning has emerged in the field of reinforcement learning. This model is capable of finding the best possible solution in systems consisting of millions of choices, without ever experiencing it before, and has been used to beat the best human minds at complex games such as Go and chess, which both have a huge number of possible decisions and outcomes for each move. With human-level intelligence, it has solved problems that no other machine learning model could solve before. Here, we show the steps needed for implementing this model on an optical problem. We investigate colour generation by dielectric nanostructures and show that this model can find geometrical properties that generate much deeper red, green, and blue colours than the ones found by human researchers. This technique can easily be extended to predict and find the best design parameters for other optical structures.

Predicting resonant properties of plasmonic structures by deep learning

Apr 19, 2018

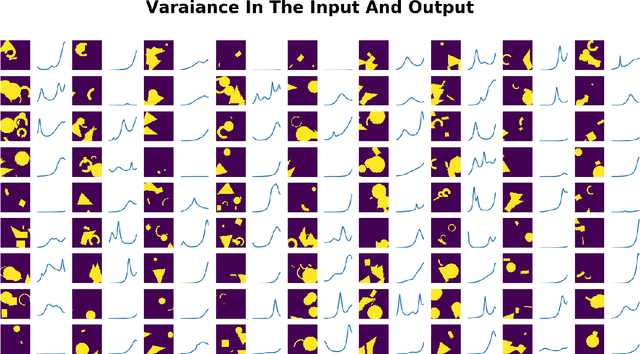

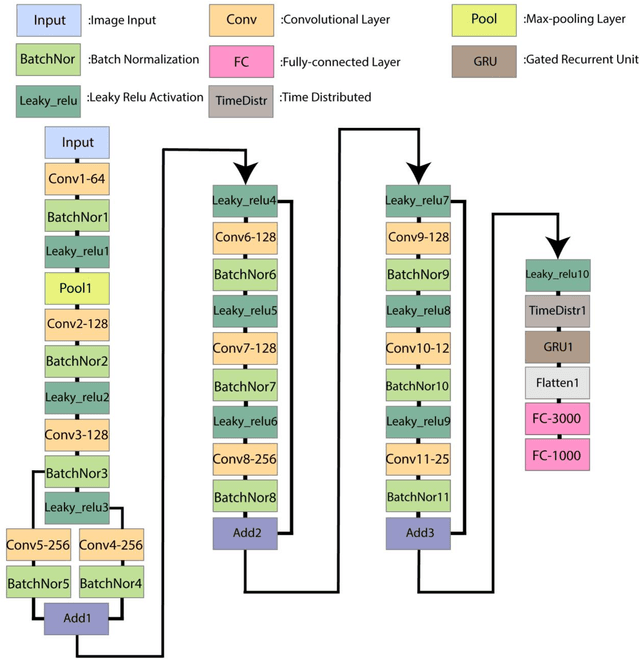

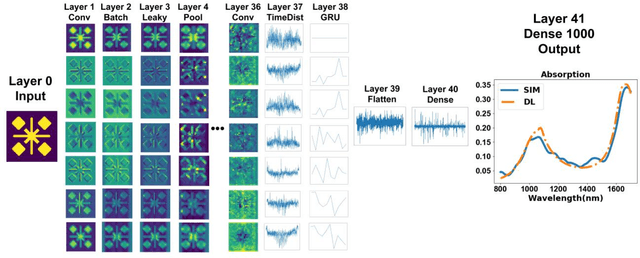

Abstract:Deep learning can be used to extract meaningful results from images. In this paper, we use convolutional neural networks combined with recurrent neural networks on images of plasmonic structures to extract absorption data from them. To provide the required data for the model, we ran 100,000 simulations with similar setups and random structures. By designing a deep network, we found a model that can predict the absorption of any structure with a similar setup. We used convolutional neural networks to get the spatial information from the images, and we used recurrent neural networks to help the model find the relationships within the spatial information obtained from the convolutional neural network. With this design, we reached a very low loss in predicting the absorption, compared to the results obtained from numerical simulation, in a very short time.
