Yuexin Xuan

DeepTracer: Tracing Stolen Model via Deep Coupled Watermarks

Nov 12, 2025

Abstract: Model watermarking techniques embed watermark information into a protected model for ownership declaration by constructing specific input-output pairs. However, existing watermarks are easily removed by model stealing attacks, which makes it difficult for model owners to verify the copyright of stolen models. In this paper, we analyze the root cause of the failure of current watermarking methods under model stealing scenarios and then explore potential solutions. Specifically, we introduce a robust watermarking framework, DeepTracer, which leverages a novel watermark sample construction method and a same-class coupling loss constraint. DeepTracer tightly couples the watermark task with the primary task, so that adversaries inevitably learn the hidden watermark task when stealing the primary task functionality. Furthermore, we propose an effective watermark sample filtering mechanism that carefully selects the watermark key samples used in model ownership verification to enhance the reliability of watermarks. Extensive experiments across multiple datasets and models demonstrate that our method surpasses existing approaches in defending against various model stealing attacks, as well as watermark attacks, and achieves new state-of-the-art effectiveness and robustness.
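The ownership check described in the abstract above, where a model is queried on secret input-output pairs, can be sketched generically. This is a minimal illustration and not DeepTracer's actual procedure: the function names, the callable-model interface, and the 0.8 threshold are all assumptions made for the example.

```python
from typing import Callable, Hashable, Sequence, Tuple

KeyPair = Tuple[Hashable, Hashable]  # (watermark input, expected watermark label)


def watermark_match_rate(model: Callable, key_pairs: Sequence[KeyPair]) -> float:
    """Fraction of watermark key samples on which the model returns the expected label."""
    if not key_pairs:
        raise ValueError("at least one watermark key pair is required")
    hits = sum(1 for x, y in key_pairs if model(x) == y)
    return hits / len(key_pairs)


def verify_ownership(model: Callable, key_pairs: Sequence[KeyPair],
                     threshold: float = 0.8) -> bool:
    """Claim ownership when the match rate reaches the threshold.

    The threshold is a hypothetical value; a real scheme would calibrate it
    against the match rate of independently trained, unwatermarked models.
    """
    return watermark_match_rate(model, key_pairs) >= threshold


# Toy stand-in for a suspect model: a lookup table that agrees with 3 of 4 keys.
_toy_table = {1: "a", 2: "b", 3: "c", 4: "x"}
toy_model = _toy_table.get
toy_keys = [(1, "a"), (2, "b"), (3, "c"), (4, "d")]
```

With the toy data, the match rate is 0.75, so the claim fails at the default threshold and succeeds at a looser one such as 0.7.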

Buster: Incorporating Backdoor Attacks into Text Encoder to Mitigate NSFW Content Generation

Dec 10, 2024

Abstract: In the digital age, the proliferation of deep learning models has led to significant concerns about the generation of Not Safe for Work (NSFW) content. Existing defense methods primarily involve model fine-tuning and post-hoc content moderation. However, these approaches often lack scalability in eliminating harmful content, degrade the quality of benign image generation, or incur high inference costs. To tackle these challenges, we propose an innovative framework called Buster, which injects backdoor attacks into the text encoder to prevent NSFW content generation. Specifically, Buster leverages deep semantic information rather than explicit prompts as triggers, redirecting NSFW prompts towards targeted benign prompts. This approach demonstrates exceptional resilience and scalability in mitigating NSFW content. Remarkably, Buster fine-tunes the text encoder of Text-to-Image models within just five minutes, showcasing high efficiency. Our extensive experiments reveal that Buster outperforms all other baselines, achieving a superior NSFW content removal rate while preserving the quality of harmless images.

Federated Learning with Limited Node Labels

Jun 18, 2024

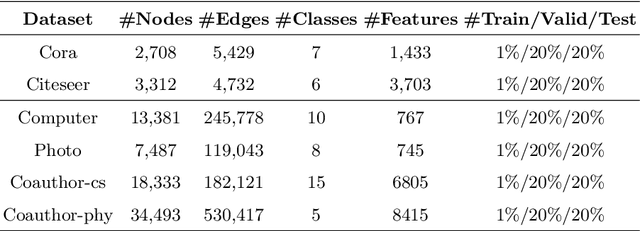

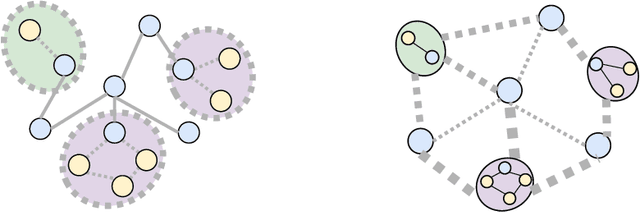

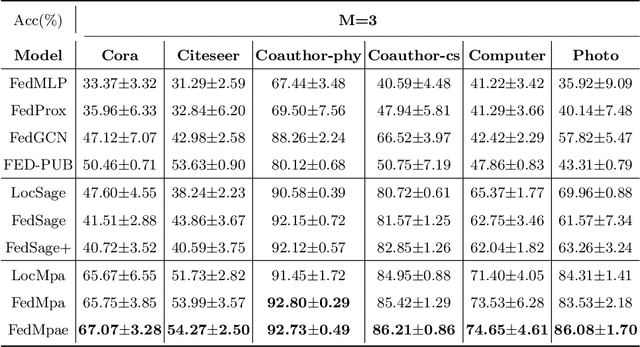

Abstract: Subgraph federated learning (SFL) is a research methodology that has gained significant attention for its potential to handle distributed graph-structured data. In SFL, the local model comprises graph neural networks (GNNs) with a partial graph structure. However, some SFL models have overlooked the significance of missing cross-subgraph edges, which can leave local GNNs unable to pass messages carrying global representations to other parties' GNNs. Moreover, existing SFL models require substantial labeled data, which limits their practical applications. To overcome these limitations, we present a novel SFL framework called FedMpa that aims to learn cross-subgraph node representations. FedMpa first trains a multilayer perceptron (MLP) model using a small amount of data and then propagates the federated feature to the local structures. To further improve the embedding representation of nodes with local subgraphs, we introduce the FedMpae method, which reconstructs the local graph structure from a novel perspective by applying a pooling operation to form super-nodes. Our extensive experiments on six graph datasets demonstrate that FedMpa is highly effective in node classification. Furthermore, our ablation experiments verify the effectiveness of FedMpa.
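The abstract above does not say how features are propagated to the local structure. As a rough illustration of what propagating node features over a local subgraph can look like, the sketch below uses plain mean aggregation over each node and its neighbors. Mean aggregation, the function name, and the dictionary-based graph encoding are assumptions for the example, not FedMpa's method.

```python
from typing import Dict, Hashable, List


def propagate_features(features: Dict[Hashable, List[float]],
                       adjacency: Dict[Hashable, List[Hashable]],
                       steps: int = 1) -> Dict[Hashable, List[float]]:
    """Replace each node's vector with the mean of its own and its neighbors' vectors.

    features:  node -> feature vector (for instance, the output of a shared MLP)
    adjacency: node -> neighbors inside the local subgraph only
    """
    current = {node: list(vec) for node, vec in features.items()}
    for _ in range(steps):
        updated = {}
        for node, vec in current.items():
            # Include the node itself so isolated nodes keep their features.
            group = [vec] + [current[nbr] for nbr in adjacency.get(node, [])]
            updated[node] = [sum(column) / len(group) for column in zip(*group)]
        current = updated
    return current


toy_features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
toy_adjacency = {"a": ["b"], "b": ["a"], "c": []}
smoothed = propagate_features(toy_features, toy_adjacency)
```

In the toy graph, nodes `a` and `b` are connected and end up with the same averaged vector, while the isolated node `c` is unchanged.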

Practical and General Backdoor Attacks against Vertical Federated Learning

Jun 19, 2023

Abstract: Federated learning (FL), which aims to facilitate data collaboration across multiple organizations without exposing data privacy, encounters potential security risks. One serious threat is backdoor attacks, where an attacker injects a specific trigger into the training dataset to manipulate the model's prediction. Most existing FL backdoor attacks are based on horizontal federated learning (HFL), where the data owned by different parties have the same features. However, compared to HFL, backdoor attacks on vertical federated learning (VFL), where each party only holds a disjoint subset of features and the labels are only owned by one party, are rarely studied. The main challenge of this attack is to allow an attacker without access to the data labels to perform an effective attack. To this end, we propose BadVFL, a novel and practical approach to inject backdoor triggers into victim models without label information. BadVFL mainly consists of two key steps. First, to address the challenge of attackers having no knowledge of labels, we introduce an SDD module that can trace data categories based on gradients. Second, we propose an SDP module that can improve the attack's effectiveness by enhancing the decision dependency between the trigger and attack target. Extensive experiments show that BadVFL supports diverse datasets and models, and achieves over 93% attack success rate with only 1% poisoning rate.
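To make the 1% poisoning rate concrete, the sketch below stamps a fixed trigger onto a small fraction of the rows in one party's feature slice, without touching any labels. It is only an illustration of the poisoning-rate arithmetic: random sample selection stands in for BadVFL's gradient-based SDD module, and the function name, trigger encoding, and seed are assumptions made for the example.

```python
import random
from typing import Dict, List, Tuple


def poison_feature_slice(features: List[List[float]],
                         trigger: Dict[int, float],
                         rate: float = 0.01,
                         seed: int = 0) -> Tuple[List[List[float]], List[int]]:
    """Return a poisoned copy of the feature slice and the indices that were modified.

    features: one party's feature slice, one row per sample (no labels involved)
    trigger:  feature index -> value to overwrite in each chosen row
    rate:     fraction of rows to poison (0.01 corresponds to a 1% poisoning rate)
    """
    n = len(features)
    k = max(1, round(n * rate)) if n else 0
    # Random choice is a placeholder; the paper selects samples via inferred categories.
    chosen = sorted(random.Random(seed).sample(range(n), k))
    poisoned = [row[:] for row in features]  # leave the caller's data untouched
    for i in chosen:
        for j, value in trigger.items():
            poisoned[i][j] = value
    return poisoned, chosen


clean = [[0.0] * 4 for _ in range(200)]
poisoned, chosen = poison_feature_slice(clean, trigger={3: 1.0})
```

With 200 samples and a 1% rate, two rows receive the trigger and the remaining 198 are left as they were.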
