Yuerong Li

OTAS: Unsupervised Boundary Detection for Object-Centric Temporal Action Segmentation

Sep 12, 2023

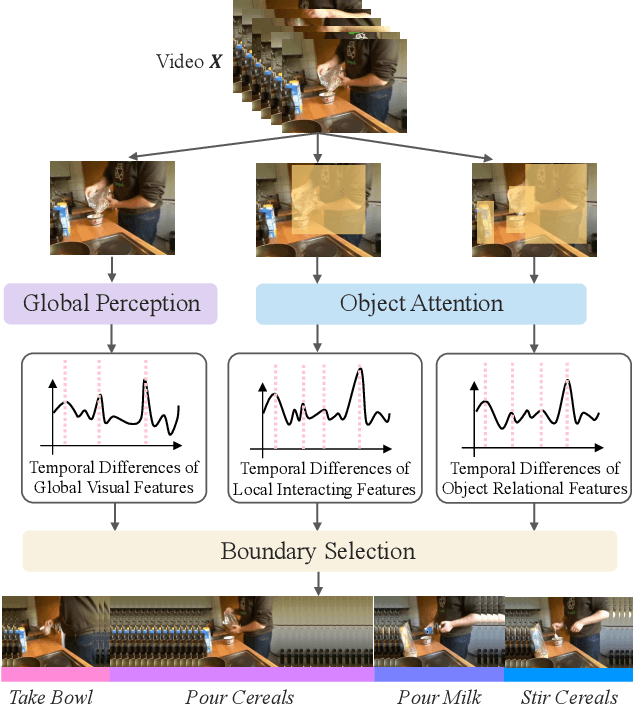

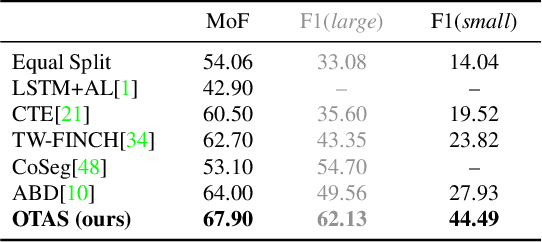

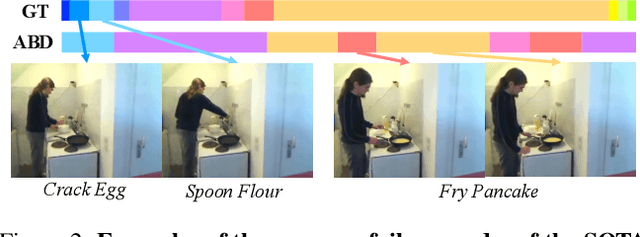

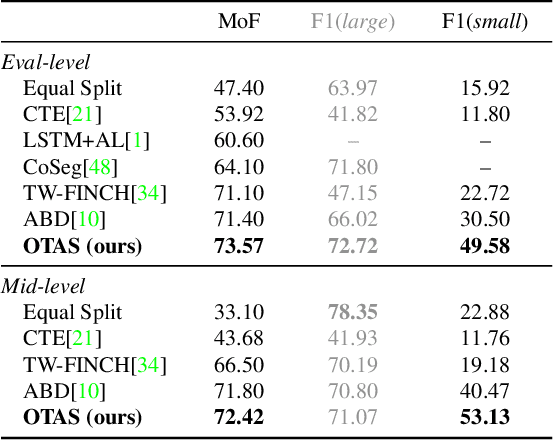

Abstract: Temporal action segmentation is typically achieved by detecting dramatic variations in global visual descriptors. In this paper, we explore the merits of local features by proposing an unsupervised framework for Object-centric Temporal Action Segmentation (OTAS). Broadly speaking, OTAS consists of self-supervised global and local feature extraction modules, together with a boundary selection module that fuses the features and detects salient boundaries for action segmentation. As a second contribution, we discuss the pros and cons of existing frame-level and boundary-level evaluation metrics. Through extensive experiments, we find that OTAS outperforms the previous state-of-the-art method by $41\%$ on average in terms of our recommended F1 score. Surprisingly, OTAS even outperforms the ground-truth human annotations in the user study. Moreover, OTAS is efficient enough to allow real-time inference.

On The Energy Statistics of Feature Maps in Pruning of Neural Networks with Skip-Connections

Jan 26, 2022

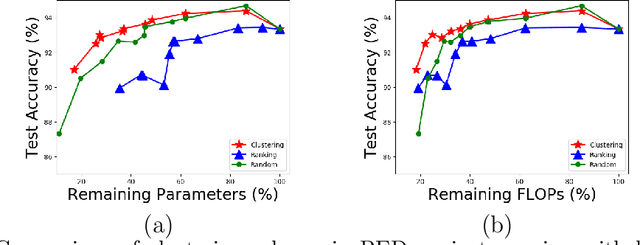

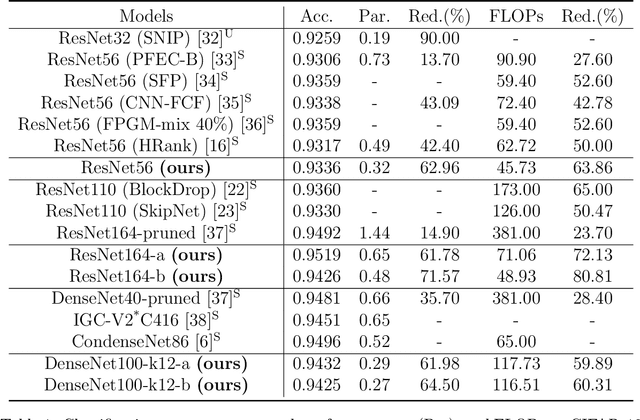

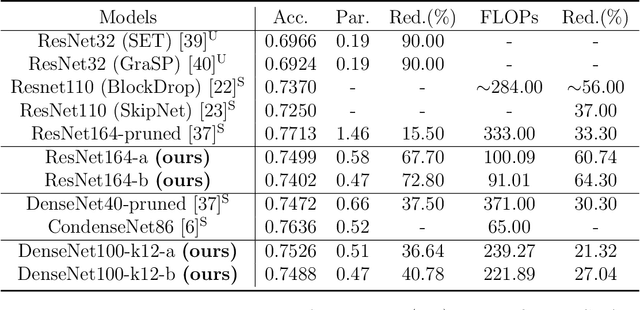

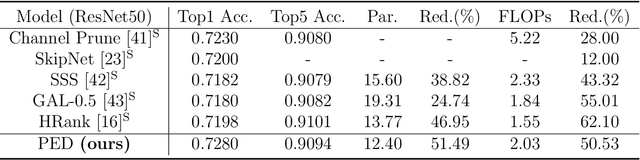

Abstract: We propose a new structured pruning framework for compressing Deep Neural Networks (DNNs) with skip connections, based on measuring the statistical dependency between hidden layers and predicted outputs. The dependence measure, defined by the energy statistics of hidden layers, serves as a model-free measure of information between the feature maps and the output of the network. The estimated dependence measure is subsequently used to prune a collection of redundant and uninformative layers. Because the measure is model-free, no parametric assumptions on the feature map distribution are required, which makes it computationally appealing for the very high-dimensional feature spaces in DNNs. Extensive numerical experiments on various architectures show the efficacy of the proposed pruning approach, with performance competitive with state-of-the-art methods.
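For readers unfamiliar with energy statistics, the sketch below computes the sample distance correlation, a standard energy-statistics dependence measure, between two sets of paired observations. The abstract does not say which estimator the authors use, so this is only an illustration under stated assumptions: each feature map is assumed to be flattened to a vector, and the function names (`distance_correlation` and its helpers) are hypothetical, not taken from the paper.

```python
import math


def _pairwise_dist(samples):
    """Euclidean distance between every pair of sample vectors."""
    n = len(samples)
    return [[math.dist(samples[i], samples[j]) for j in range(n)] for i in range(n)]


def _double_center(d):
    """Subtract row and column means and add back the grand mean."""
    n = len(d)
    row_mean = [sum(r) / n for r in d]
    grand_mean = sum(row_mean) / n
    # The distance matrix is symmetric, so column means equal row means.
    return [[d[i][j] - row_mean[i] - row_mean[j] + grand_mean for j in range(n)]
            for i in range(n)]


def distance_correlation(x, y):
    """Sample distance correlation between paired samples x and y.

    x and y are equal-length sequences of vectors (tuples or lists of floats).
    Hypothetical usage: x holds flattened feature maps of one layer and y holds
    the network outputs for the same inputs.
    """
    if len(x) != len(y):
        raise ValueError("x and y must contain the same number of samples")
    n = len(x)
    a = _double_center(_pairwise_dist(x))
    b = _double_center(_pairwise_dist(y))
    dcov2 = sum(a[i][j] * b[i][j] for i in range(n) for j in range(n)) / n ** 2
    dvar_x = sum(a[i][j] ** 2 for i in range(n) for j in range(n)) / n ** 2
    dvar_y = sum(b[i][j] ** 2 for i in range(n) for j in range(n)) / n ** 2
    denom = math.sqrt(dvar_x * dvar_y)
    if denom == 0.0:
        return 0.0  # one of the variables is constant across samples
    return math.sqrt(max(dcov2, 0.0) / denom)
```

The estimator needs only pairwise distances, so it makes no parametric assumption about the feature distribution. Under this reading, a layer whose score against the outputs is low would be a candidate for pruning; the actual selection rule is described in the paper, not here.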
