Youngmin Ro

Emulating Self-attention with Convolution for Efficient Image Super-Resolution

Mar 09, 2025

Abstract:In this paper, we tackle the high computational overhead of transformers for lightweight image super-resolution (SR). Motivated by the observations of self-attention's inter-layer repetition, we introduce a convolutionized self-attention module named Convolutional Attention (ConvAttn) that emulates self-attention's long-range modeling capability and instance-dependent weighting with a single shared large kernel and dynamic kernels. By utilizing the ConvAttn module, we significantly reduce the reliance on self-attention and its involved memory-bound operations while maintaining the representational capability of transformers. Furthermore, we overcome the challenge of integrating flash attention into the lightweight SR regime, effectively mitigating self-attention's inherent memory bottleneck. We scale up the window size to 32$\times$32 with flash attention rather than proposing an intricate self-attention module, significantly improving PSNR by 0.31 dB on Urban100$\times$2 while reducing latency and memory usage by 16$\times$ and 12.2$\times$. Building on these approaches, our proposed network, termed Emulating Self-attention with Convolution (ESC), notably improves PSNR by 0.27 dB on Urban100$\times$4 compared to HiT-SRF, reducing the latency and memory usage by 3.7$\times$ and 6.2$\times$, respectively. Extensive experiments demonstrate that our ESC maintains the ability for long-range modeling, data scalability, and the representational power of transformers despite most self-attention layers being replaced by the ConvAttn module.
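For scale, the PSNR gains quoted in this abstract can be translated into mean-squared-error terms. The sketch below assumes the standard definition PSNR = 10 log10(MAX^2 / MSE); the helper names are illustrative and are not taken from the paper.

```python
import math

def psnr(mse: float, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_val ** 2 / mse)

def mse_ratio_for_gain(gain_db: float) -> float:
    """Factor by which MSE shrinks when PSNR rises by gain_db."""
    return 10.0 ** (-gain_db / 10.0)

# A 0.27 dB gain (the Urban100 x4 figure quoted above) corresponds to
# roughly a 6% reduction in mean squared error.
ratio = mse_ratio_for_gain(0.27)
```

Because PSNR is logarithmic, gains of a few tenths of a dB correspond to single-digit percentage reductions in MSE.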

SoRA: Singular Value Decomposed Low-Rank Adaptation for Domain Generalizable Representation Learning

Dec 05, 2024

Abstract:Domain generalization (DG) aims to adapt a model using one or multiple source domains to ensure robust performance in unseen target domains. Recently, Parameter-Efficient Fine-Tuning (PEFT) of foundation models has shown promising results in the context of the DG problem. Nevertheless, existing PEFT methods still struggle to strike a balance between preserving generalizable components of the pre-trained model and learning task-specific features. To gain insights into the distribution of generalizable components, we begin by analyzing the pre-trained weights through the lens of singular value decomposition. Building on these insights, we introduce Singular Value Decomposed Low-Rank Adaptation (SoRA), an approach that selectively tunes minor singular components while keeping the residual parts frozen. SoRA effectively retains the generalization ability of the pre-trained model while efficiently acquiring task-specific skills. Furthermore, we freeze domain-generalizable blocks and employ an annealing weight decay strategy, thereby achieving an optimal balance in the delicate trade-off between generalizability and discriminability. SoRA attains state-of-the-art results on multiple benchmarks that span from domain generalized semantic segmentation to domain generalized object detection. In addition, our methods introduce no additional inference overhead or regularization loss, maintain compatibility with any backbone or head, and are designed to be versatile, allowing easy integration into a wide range of tasks.
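The idea of tuning only minor singular components can be illustrated with a small NumPy sketch. It assumes a plain weight matrix and a hypothetical rank `r` for the trainable part, and simply splits the SVD into a frozen principal part and low-rank factors for the minor part. It is not the authors' implementation, which also involves block freezing and annealed weight decay.

```python
import numpy as np

def split_by_singular_values(weight, r):
    """Split `weight` into a frozen principal part and the factors of
    its r smallest singular components (the candidates for tuning)."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    k = len(s) - r
    frozen = (u[:, :k] * s[:k]) @ vt[:k]      # principal components, kept fixed
    # Low-rank factors of the minor components; only these would be trained.
    b = u[:, k:] * np.sqrt(s[k:])             # shape (out, r)
    a = np.sqrt(s[k:])[:, None] * vt[k:]      # shape (r, in)
    return frozen, b, a

rng = np.random.default_rng(0)
w = rng.standard_normal((8, 6))
frozen, b, a = split_by_singular_values(w, r=2)
# Before any update, frozen + b @ a reproduces the pre-trained weight.
assert np.allclose(frozen + b @ a, w)
```

Since the tuned factors can be multiplied out and added back to the frozen part, a scheme of this shape is consistent with the abstract's claim of no additional inference overhead.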

Implicit Grid Convolution for Multi-Scale Image Super-Resolution

Aug 19, 2024

Abstract:Recently, Super-Resolution (SR) achieved significant performance improvement by employing neural networks. Most SR methods conventionally train a single model for each targeted scale, which increases redundancy in training and deployment in proportion to the number of scales targeted. This paper challenges this conventional fixed-scale approach. Our preliminary analysis reveals that, surprisingly, encoders trained at different scales extract similar features from images. Furthermore, the commonly used scale-specific upsampler, Sub-Pixel Convolution (SPConv), exhibits significant inter-scale correlations. Based on these observations, we propose a framework for training multiple integer scales simultaneously with a single model. We use a single encoder to extract features and introduce a novel upsampler, Implicit Grid Convolution (IGConv), which integrates SPConv at all scales within a single module to predict multiple scales. Our extensive experiments demonstrate that training multiple scales with a single model reduces the training budget and stored parameters by one-third while achieving equivalent inference latency and comparable performance. Furthermore, we propose IGConv$^{+}$, which addresses spectral bias and input-independent upsampling and uses ensemble prediction to improve performance. As a result, SRFormer-IGConv$^{+}$ achieves a remarkable 0.25 dB improvement in PSNR on Urban100$\times$4 while reducing the training budget, stored parameters, and inference cost compared to the existing SRFormer.
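Sub-pixel convolution upsamples by predicting r*r channels per output channel and rearranging them into space. The rearrangement step alone can be sketched in NumPy as follows, assuming a channel-first layout and the channel ordering used by common pixel-shuffle implementations. The convolution that precedes it, and IGConv itself, are not shown.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) features into a (C, H*r, W*r) image."""
    c_rr, h, w = x.shape
    c = c_rr // (r * r)
    x = x.reshape(c, r, r, h, w)        # split channels into (C, r, r)
    x = x.transpose(0, 3, 1, 4, 2)      # -> (C, H, r, W, r)
    return x.reshape(c, h * r, w * r)

features = np.arange(4 * 2 * 3).reshape(4, 2, 3)   # C=1, r=2, H=2, W=3
upsampled = pixel_shuffle(features, r=2)           # shape (1, 4, 6)
```

The number of channels the preceding convolution must produce grows with r*r, which is why this upsampler is normally tied to one scale per model.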

MetaMixer Is All You Need

Jun 04, 2024

Abstract:Transformer, composed of self-attention and Feed-Forward Network (FFN), has revolutionized the landscape of network design across various vision tasks. FFN is a versatile operator seamlessly integrated into nearly all AI models to effectively harness rich representations. Recent works also show that FFN functions like key-value memories. Thus, akin to the query-key-value mechanism within self-attention, FFN can be viewed as a memory network, where the input serves as the query and the two projection weights operate as keys and values, respectively. We hypothesize that the importance lies in the query-key-value framework itself rather than in self-attention. To verify this, we propose converting self-attention into a more FFN-like efficient token mixer with only convolutions while retaining the query-key-value framework, namely FFNification. Specifically, FFNification replaces query-key and attention coefficient-value interactions with large kernel convolutions and adopts the GELU activation function instead of softmax. The derived token mixer, FFNified attention, serves as key-value memories for detecting locally distributed spatial patterns, and operates in the opposite dimension to the ConvNeXt block within each corresponding sub-operation of the query-key-value framework. Building upon the above two modules, we present a family of Fast-Forward Networks (FFNet). Our FFNet achieves remarkable performance improvements over previous state-of-the-art methods across a wide range of tasks. The strong and general performance of our proposed method validates our hypothesis and leads us to introduce MetaMixer, a general mixer architecture that does not specify sub-operations within the query-key-value framework. We show that using only simple operations like convolution and GELU in the MetaMixer can achieve superior performance.
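The key-value-memory reading of an FFN can be made concrete in a few lines. In this sketch, which uses hypothetical shapes and random weights, the input acts as the query, the rows of the first projection act as keys, and the rows of the second projection act as values. GELU (tanh approximation) takes the place of softmax as the scoring nonlinearity.

```python
import numpy as np

def gelu(x):
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def ffn_as_memory(query, keys, values):
    """FFN viewed as memory lookup: score the query against every key,
    then return the score-weighted sum of the values."""
    scores = gelu(query @ keys.T)     # (tokens, memory_slots)
    return scores @ values            # (tokens, dim)

rng = np.random.default_rng(0)
dim, slots = 4, 16
x = rng.standard_normal((3, dim))            # 3 tokens acting as queries
keys = rng.standard_normal((slots, dim))     # first projection weight
values = rng.standard_normal((slots, dim))   # second projection weight
out = ffn_as_memory(x, keys, values)         # shape (3, 4)
```

Unlike self-attention, the keys and values here are learned parameters rather than projections of the input tokens, so this operator mixes channels, not tokens. The abstract's FFNified attention keeps the same query-key-value pattern but realizes the interactions with large kernel convolutions.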

Partial Large Kernel CNNs for Efficient Super-Resolution

Apr 18, 2024

Abstract:Recently, in the super-resolution (SR) domain, transformers have outperformed CNNs with fewer FLOPs and fewer parameters since they can deal with long-range dependency and adaptively adjust weights based on the instance. In this paper, we demonstrate that CNNs, although less focused on in the current SR domain, surpass Transformers in direct efficiency measures. By incorporating the advantages of Transformers into CNNs, we aim to achieve both computational efficiency and enhanced performance. However, using a large kernel in the SR domain, which mainly processes large images, incurs a large computational overhead. To overcome this, we propose novel approaches to employing the large kernel, which can reduce latency by 86% compared to the naive large kernel, and leverage an Element-wise Attention module to imitate instance-dependent weights. As a result, we introduce Partial Large Kernel CNNs for Efficient Super-Resolution (PLKSR), which achieves state-of-the-art performance on four datasets at a scale of $\times$4, with reductions of 68.1% in latency and 80.2% in maximum GPU memory occupancy compared to SRFormer-light.

Arbitrary-Scale Downscaling of Tidal Current Data Using Implicit Continuous Representation

Jan 31, 2024

Abstract:Numerical models have long been used to understand geoscientific phenomena, including tidal currents, crucial for renewable energy production and coastal engineering. However, their computational cost hinders generating data of varying resolutions. As an alternative, deep learning-based downscaling methods have gained traction due to their faster inference speeds. Yet most of them are limited to inference at a fixed scale and overlook important characteristics of the target geoscientific data. In this paper, we propose a novel downscaling framework for tidal current data, addressing its unique characteristics, which are dissimilar to images: heterogeneity and local dependency. Moreover, our framework can generate arbitrary-scale output by utilizing a continuous representation model. Our proposed framework improves flow velocity predictions by 93.21% (MSE) and 63.85% (MAE) compared to the Baseline model while achieving a 33.2% reduction in FLOPs.

SHViT: Single-Head Vision Transformer with Memory Efficient Macro Design

Jan 29, 2024

Abstract:Recently, efficient Vision Transformers have shown great performance with low latency on resource-constrained devices. Conventionally, they use 4x4 patch embeddings and a 4-stage structure at the macro level, while utilizing sophisticated attention with multi-head configuration at the micro level. This paper aims to address computational redundancy at all design levels in a memory-efficient manner. We discover that using larger-stride patchify stem not only reduces memory access costs but also achieves competitive performance by leveraging token representations with reduced spatial redundancy from the early stages. Furthermore, our preliminary analyses suggest that attention layers in the early stages can be substituted with convolutions, and several attention heads in the latter stages are computationally redundant. To handle this, we introduce a single-head attention module that inherently prevents head redundancy and simultaneously boosts accuracy by parallelly combining global and local information. Building upon our solutions, we introduce SHViT, a Single-Head Vision Transformer that obtains the state-of-the-art speed-accuracy tradeoff. For example, on ImageNet-1k, our SHViT-S4 is 3.3x, 8.1x, and 2.4x faster than MobileViTv2 x1.0 on GPU, CPU, and iPhone12 mobile device, respectively, while being 1.3% more accurate. For object detection and instance segmentation on MS COCO using Mask-RCNN head, our model achieves performance comparable to FastViT-SA12 while exhibiting 3.8x and 2.0x lower backbone latency on GPU and mobile device, respectively.
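The memory argument for a larger-stride patchify stem follows from token counts. The arithmetic below assumes a 224x224 input and compares the conventional stride of 4 with a stride of 16. The value 16 is chosen for illustration and is not stated in the abstract.

```python
def num_tokens(image_size: int, stride: int) -> int:
    """Tokens produced by a non-overlapping patchify stem."""
    return (image_size // stride) ** 2

tokens_stride4 = num_tokens(224, 4)     # 56 * 56 = 3136
tokens_stride16 = num_tokens(224, 16)   # 14 * 14 = 196
reduction = tokens_stride4 // tokens_stride16   # 16x fewer tokens in the first stage
```

Fewer tokens in the early stages means smaller activation maps to read and write, which is where memory access cost tends to dominate on constrained devices.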

Dynamic Mobile-Former: Strengthening Dynamic Convolution with Attention and Residual Connection in Kernel Space

Apr 13, 2023

Abstract:We introduce Dynamic Mobile-Former (DMF), which maximizes the capabilities of dynamic convolution by harmonizing it with efficient operators. Our Dynamic Mobile-Former effectively utilizes the advantages of Dynamic MobileNet (MobileNet equipped with dynamic convolution) using global information from lightweight attention. A Transformer in Dynamic Mobile-Former only requires a few randomly initialized tokens to calculate global features, making it computationally efficient. A bridge between Dynamic MobileNet and Transformer allows for bidirectional integration of local and global features. We also simplify the optimization process of vanilla dynamic convolution by splitting the convolution kernel into an input-agnostic kernel and an input-dependent kernel. This allows for optimization in a wider kernel space, resulting in enhanced capacity. By integrating lightweight attention and enhanced dynamic convolution, our Dynamic Mobile-Former achieves not only high efficiency, but also strong performance. We benchmark the Dynamic Mobile-Former on a series of vision tasks, and showcase that it achieves impressive performance on image classification, COCO detection, and instance segmentation. For example, our DMF hits the top-1 accuracy of 79.4% on ImageNet-1K, much higher than PVT-Tiny by 4.3% with only 1/4 FLOPs. Additionally, our proposed DMF-S model performed well on challenging vision datasets such as COCO, achieving a 39.0% mAP, which is 1% higher than that of the Mobile-Former 508M model, despite using 3 GFLOPs less computation. Code and models are available at https://github.com/ysj9909/DMF
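The split into an input-agnostic and an input-dependent kernel can be sketched as a static kernel plus an attention-weighted mixture of kernel residuals. Everything below (the shapes, the pooled-feature gating, the softmax over K candidate kernels) is an assumption for illustration, not the DMF implementation.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def dynamic_kernel(x, static_kernel, residual_kernels, gate_weight):
    """Return static_kernel + sum_k pi_k(x) * residual_kernels[k].

    x: (C, H, W) input feature map
    static_kernel: (C_out, C, kh, kw), shared by every input
    residual_kernels: (K, C_out, C, kh, kw), mixed per input
    gate_weight: (K, C), maps pooled features to K mixing logits
    """
    pooled = x.mean(axis=(1, 2))                   # global average pool -> (C,)
    pi = softmax(gate_weight @ pooled)             # input-dependent weights -> (K,)
    dynamic = np.tensordot(pi, residual_kernels, axes=1)
    return static_kernel + dynamic

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 5))
w0 = rng.standard_normal((4, 8, 3, 3))
dw = rng.standard_normal((2, 4, 8, 3, 3))
gate = rng.standard_normal((2, 8))
kernel = dynamic_kernel(x, w0, dw, gate)   # same shape as w0
```

In a formulation like this, the static term receives gradients from every sample regardless of the gating, which is one plausible reading of why the split eases optimization.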

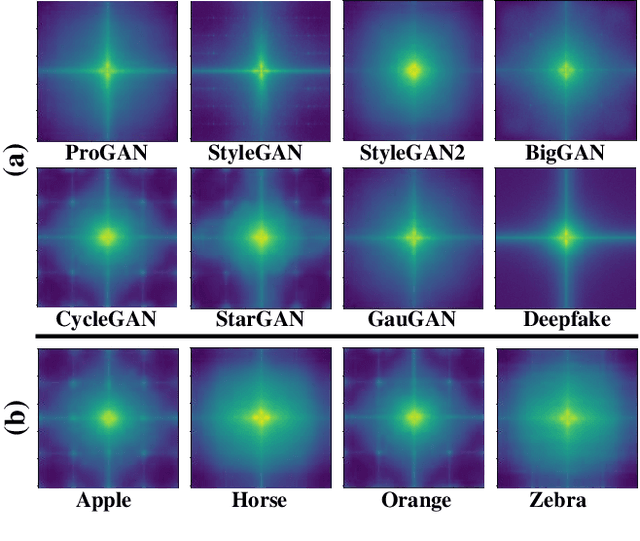

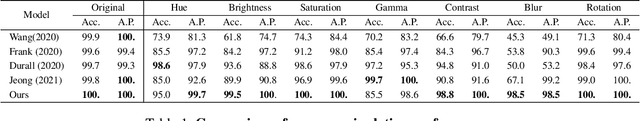

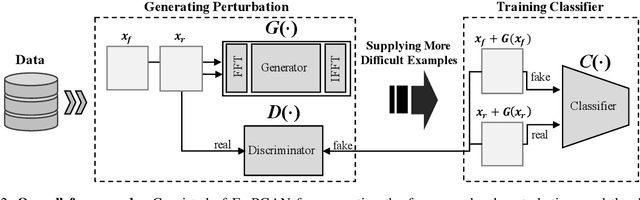

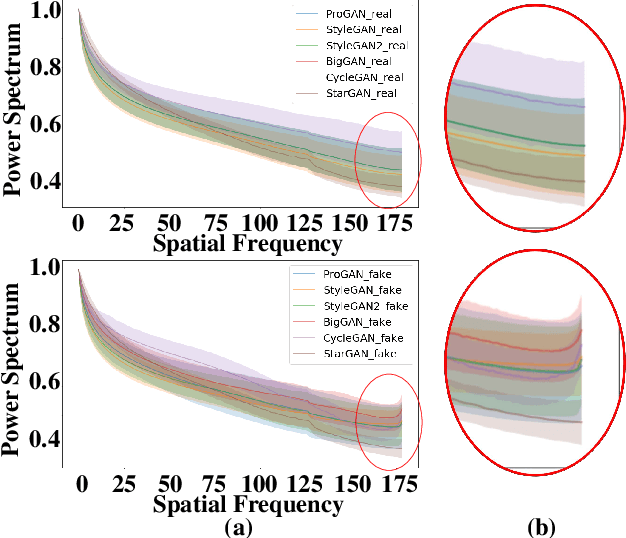

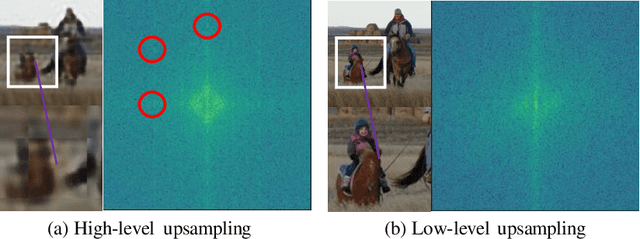

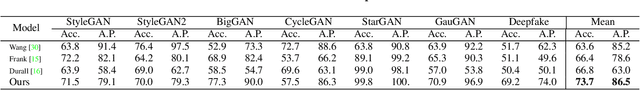

FrePGAN: Robust Deepfake Detection Using Frequency-level Perturbations

Feb 07, 2022

Abstract:Various deepfake detectors have been proposed, but detecting images of unknown categories or from GAN models outside of the training settings remains challenging. These difficulties stem from overfitting, which our own analysis and previous studies trace to the frequency-level artifacts in generated images. We find that ignoring the frequency-level artifacts can improve the detector's generalization across various GAN models, but it can reduce the model's performance for the trained GAN models. Thus, we design a framework to generalize the deepfake detector for both the known and unseen GAN models. Our framework generates the frequency-level perturbation maps to make the generated images indistinguishable from the real images. By updating the deepfake detector along with the training of the perturbation generator, our model is trained to detect the frequency-level artifacts at the initial iterations and consider the image-level irregularities at the last iterations. For experiments, we design new test scenarios that vary from the training settings in GAN models, color manipulations, and object categories. Numerous experiments validate the state-of-the-art performance of our deepfake detector.

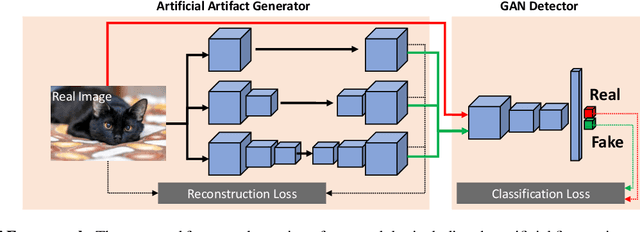

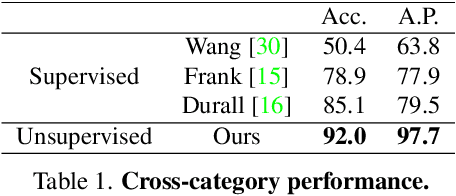

Self-supervised GAN Detector

Nov 12, 2021

Abstract:Although the recent advancement in generative models brings diverse advantages to society, it can also be abused for malicious purposes, such as fraud, defamation, and fake news. To prevent such cases, vigorous research is being conducted to distinguish the generated images from the real images, but challenges still remain in distinguishing unseen generated images outside of the training settings. Such limitations occur due to data dependency arising from the model overfitting to the training data generated by specific GANs. To overcome this issue, we adopt a self-supervised scheme to propose a novel framework. Our proposed method is composed of the artificial fingerprint generator reconstructing the high-quality artificial fingerprints of GAN images for detailed analysis, and the GAN detector distinguishing GAN images by learning the reconstructed artificial fingerprints. To improve the generalization of the artificial fingerprint generator, we build multiple autoencoders with different numbers of upconvolution layers. With numerous ablation studies, the robust generalization of our method is validated by outperforming the generalization of the previous state-of-the-art algorithms, even without utilizing the GAN images of the training dataset.
