Young-Hee Lee

CoVOR-SLAM: Cooperative SLAM using Visual Odometry and Ranges for Multi-Robot Systems

Nov 21, 2023

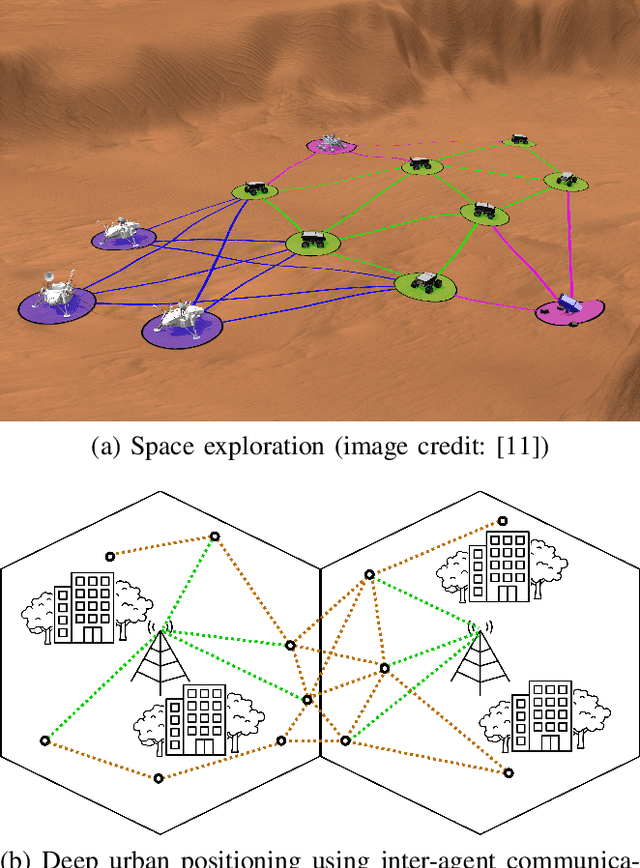

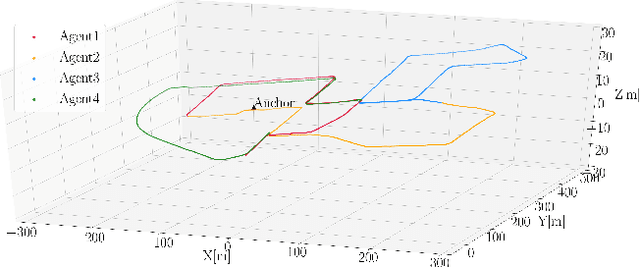

Abstract: A swarm of robots has advantages over a single robot, since it can explore larger areas much faster and is more robust to single-point failures. Accurate relative positioning is necessary to carry out a collaborative mission without collisions. When Visual Simultaneous Localization and Mapping (VSLAM) is used to estimate the pose of each robot, inter-agent loop closing is widely applied to reduce the relative positioning errors. This technique mitigates errors using feature points observed in common by different robots. However, it requires significant computing and communication capabilities to detect inter-agent loops and to process the data transmitted by multiple agents. In this paper, we propose Collaborative SLAM using Visual Odometry and Range measurements (CoVOR-SLAM) to overcome this challenge. In the framework of CoVOR-SLAM, robots only need to exchange pose estimates, the covariances (uncertainty) of those estimates, and range measurements between robots. Since CoVOR-SLAM does not require associating visual features and map points observed by different agents, the computational and communication loads are significantly reduced. The required range measurements can be obtained using pilot signals of the communication system, without requiring complex additional infrastructure. We tested CoVOR-SLAM using real images as well as real ultra-wideband-based ranges obtained with two rovers. In addition, CoVOR-SLAM is evaluated with a larger-scale multi-agent setup exploiting public image datasets and ranges generated using a realistic simulation. The results show that CoVOR-SLAM can accurately estimate the robots' poses while requiring much less computational power and communication capability than the inter-agent loop closing technique.
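To make the exchanged quantities concrete, the following is a minimal sketch of a range residual between two robots, not the paper's implementation. The `PoseMessage` type, its fields, the `range_residual` helper, and the assumption that both positions are expressed in one shared frame are all hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PoseMessage:
    """Hypothetical message a robot shares: position estimate plus covariance."""
    robot_id: int
    position: tuple    # (x, y, z) in an assumed shared frame, metres
    covariance: tuple  # 3x3 position covariance as nested tuples


def range_residual(a: PoseMessage, b: PoseMessage, measured_range: float) -> float:
    """Measured inter-robot range minus the range predicted from the two estimates."""
    predicted = math.dist(a.position, b.position)
    return measured_range - predicted


# Illustrative values only (not taken from the paper).
identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
robot_a = PoseMessage(0, (0.0, 0.0, 0.0), identity)
robot_b = PoseMessage(1, (3.0, 4.0, 0.0), identity)
residual = range_residual(robot_a, robot_b, measured_range=5.2)
```

In an optimization back-end, a residual of this kind would typically be weighted by the range noise and the shared covariances; that weighting is omitted here.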

Vertiport Navigation Requirements and Multisensor Architecture Considerations for Urban Air Mobility

Nov 13, 2023

Abstract: Communication, Navigation and Surveillance (CNS) technologies are key enablers for the future safe operation of drones in urban environments. However, the design of navigation technologies for these new applications is more challenging than in, for example, civil aviation. On the one hand, the use cases and operations in urban environments are expected to have stringent requirements in terms of accuracy, integrity, continuity and availability. On the other hand, airborne sensors may not be based on high-quality equipment as in civil aviation, and solutions need to rely on tighter multisensor integration, whose safety is difficult to assess. In this work, we first provide some initial navigation requirements related to precision approach operations based on recently proposed vertiport designs. Then, we provide an overview of a possible multisensor navigation architecture able to support these types of operations and comment on the challenges of each of the subsystems. Finally, an initial proof of concept for some navigation sensor subsystems is presented, based on flight trials performed during the German Aerospace Center (DLR) project HorizonUAM.

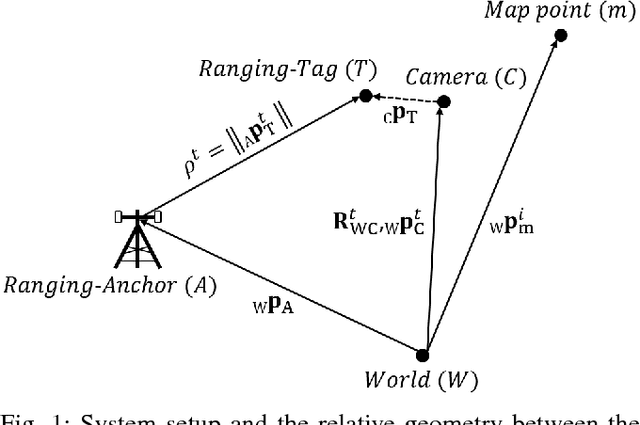

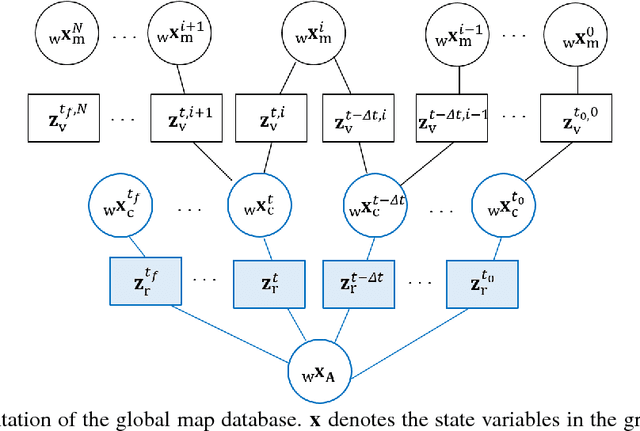

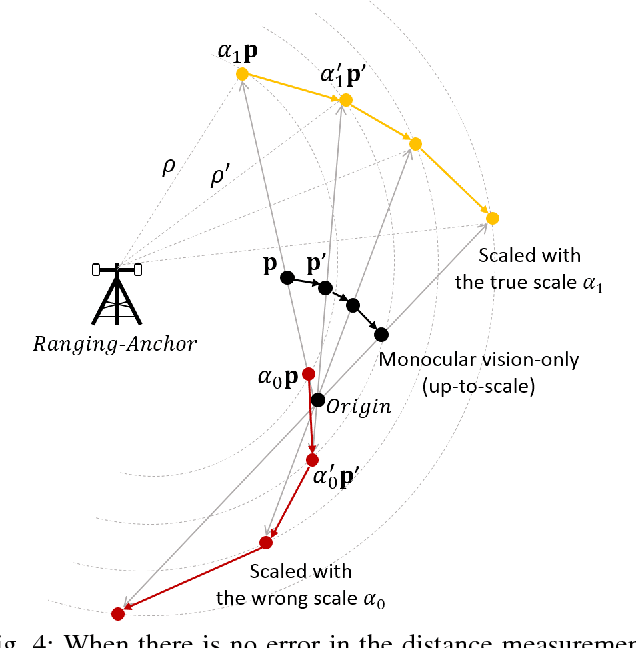

Fusion of Monocular Vision and Radio-based Ranging for Global Scale Estimation and Drift Mitigation

Oct 02, 2018

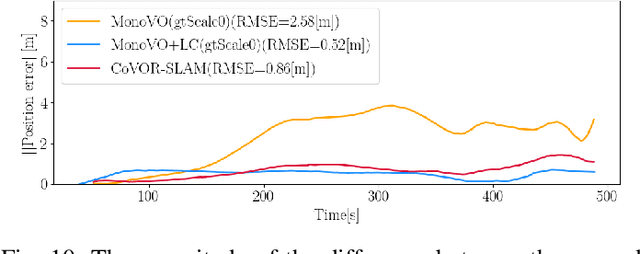

Abstract: Monocular vision-based Simultaneous Localization and Mapping (SLAM) is used for various purposes due to its low cost, simple setup, and availability in environments where satellite navigation is not effective. However, with monocular vision, camera motion and map points can be estimated only up to a global scale factor. Moreover, estimation error accumulates over time without bound if the camera cannot detect previously observed map points to close a loop. We propose an innovative approach to estimate a global scale factor and reduce drift in monocular vision-based localization with a single additional ranging link. Our method can be easily integrated with the back-end of monocular visual SLAM methods. We demonstrate our algorithm with real datasets collected on a rover and show the evaluation results.
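The idea that a single ranging link can fix the global scale can be illustrated with a deliberately simplified sketch; it is not the method of the paper. It assumes, purely for illustration, that the ranging anchor sits at the origin of the visual frame, so that each measured range equals the unknown scale times the unscaled distance of the camera from the origin. The function name `estimate_scale` and the sample data are hypothetical.

```python
import math


def estimate_scale(unscaled_positions, ranges):
    """Least-squares scale s minimizing sum((r_i - s * ||p_i||)^2).

    Assumes the ranging anchor is at the origin of the visual frame
    (a simplification made for this sketch only).
    """
    distances = [math.hypot(*p) for p in unscaled_positions]
    denominator = sum(d * d for d in distances)
    if denominator == 0.0:
        raise ValueError("all positions coincide with the anchor; scale is unobservable")
    numerator = sum(r * d for r, d in zip(ranges, distances))
    return numerator / denominator


# Synthetic, noise-free example: up-to-scale camera positions and metric ranges.
positions = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 2.0, 1.0)]
true_scale = 2.5
ranges = [true_scale * math.hypot(*p) for p in positions]
scale = estimate_scale(positions, ranges)
```

With noisy ranges or an anchor at an unknown location, the problem becomes nonlinear and would normally be solved jointly with the poses in the SLAM back-end.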
