Gabriele Giorgi

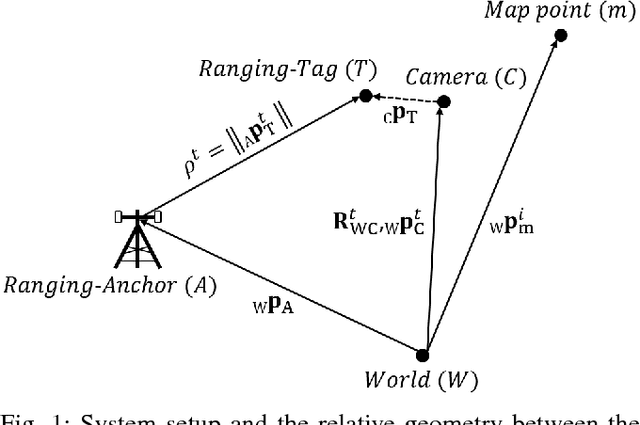

Fusion of Monocular Vision and Radio-based Ranging for Global Scale Estimation and Drift Mitigation

Oct 02, 2018

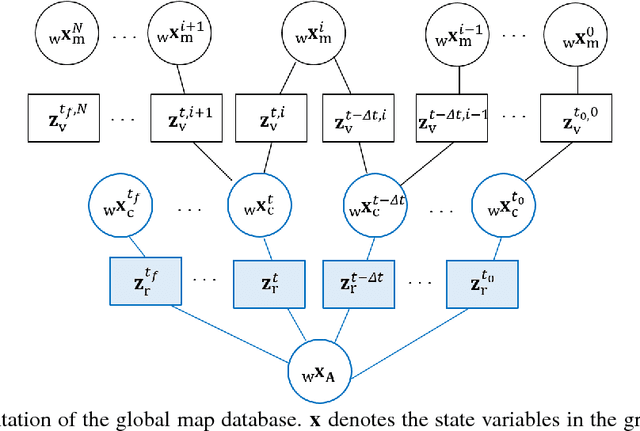

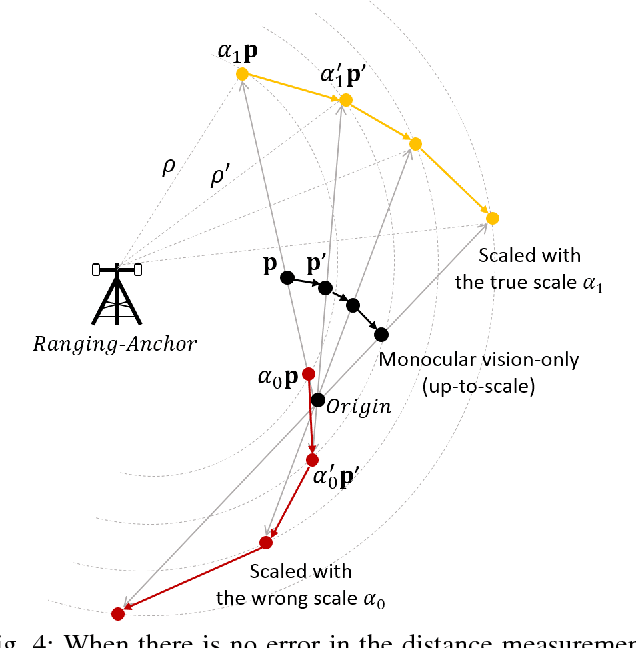

Abstract: Monocular vision-based Simultaneous Localization and Mapping (SLAM) is used in many applications because it is inexpensive, simple to set up, and available in environments where satellite navigation is not effective. However, monocular vision can estimate camera motion and map points only up to a global scale factor. Moreover, the estimation error accumulates without bound over time if the camera cannot re-detect previously observed map points to close a loop. We propose an approach that estimates the global scale factor and reduces drift in monocular vision-based localization using a single additional ranging link. The method can be easily integrated with the back-end of monocular visual SLAM methods. We demonstrate the algorithm on real datasets collected with a rover and present the evaluation results.
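
To illustrate why a single ranging link can resolve the scale ambiguity, here is a minimal sketch under strong simplifying assumptions; it is not the method proposed in the paper. It assumes the ranging anchor sits at the origin of the SLAM frame (for example, the rover's starting point), so that each measured range equals the unknown scale times the norm of the up-to-scale camera position. The function name `estimate_scale` and the toy data are hypothetical.

```python
import math


def estimate_scale(unscaled_positions, ranges):
    """Least-squares scale s minimizing sum_i (r_i - s * ||p_i||)^2.

    Assumption (not from the paper): the ranging anchor coincides with the
    origin of the SLAM frame, so the metric range is r_i = s * ||p_i||.
    """
    # Norm of each up-to-scale camera position reported by monocular SLAM.
    dists = [math.sqrt(sum(c * c for c in p)) for p in unscaled_positions]
    denom = sum(d * d for d in dists)
    if denom == 0.0:
        # All positions are at the anchor: the ranges carry no scale information.
        raise ValueError("scale is unobservable: camera never left the anchor")
    # Closed-form solution of the one-dimensional linear least-squares problem.
    return sum(r * d for r, d in zip(ranges, dists)) / denom


# Toy data: up-to-scale positions with norms 1, 2, 3 and a true scale of 2.5.
positions = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 2.0, 1.0)]
measured_ranges = [2.5, 5.0, 7.5]
scale = estimate_scale(positions, measured_ranges)
```

In a realistic setting the anchor position is generally unknown and the ranges are noisy, so the scale would be estimated jointly with other parameters in the SLAM back-end rather than in closed form.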
