You-Yi Jau

ELLIPSDF: Joint Object Pose and Shape Optimization with a Bi-level Ellipsoid and Signed Distance Function Description

Aug 01, 2021

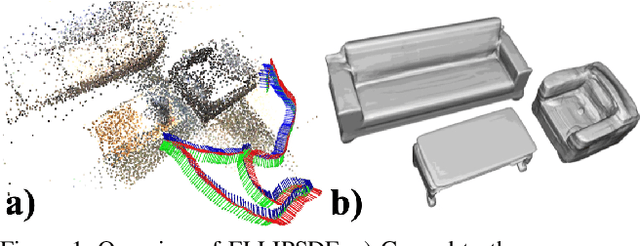

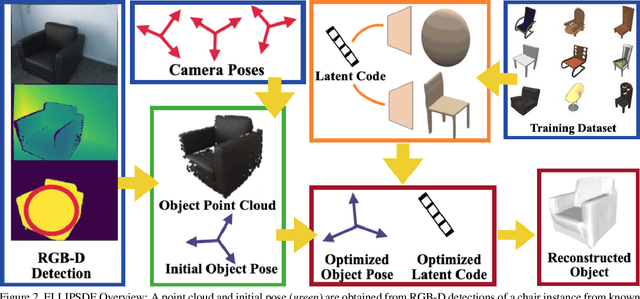

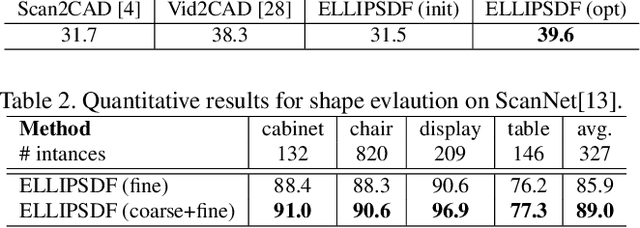

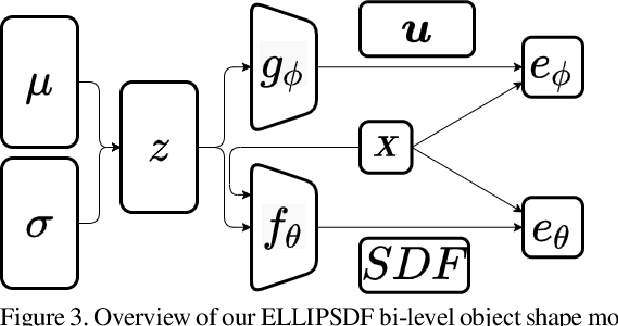

Abstract:Autonomous systems need to understand the semantics and geometry of their surroundings in order to comprehend and safely execute object-level task specifications. This paper proposes an expressive yet compact model for joint object pose and shape optimization, and an associated optimization algorithm to infer an object-level map from multi-view RGB-D camera observations. The model is expressive because it captures the identities, positions, orientations, and shapes of objects in the environment. It is compact because it relies on a low-dimensional latent representation of implicit object shape, allowing onboard storage of large multi-category object maps. Different from other works that rely on a single object representation format, our approach has a bi-level object model that captures both the coarse level scale as well as the fine level shape details. Our approach is evaluated on the large-scale real-world ScanNet dataset and compared against state-of-the-art methods.

Deep Keypoint-Based Camera Pose Estimation with Geometric Constraints

Jul 29, 2020

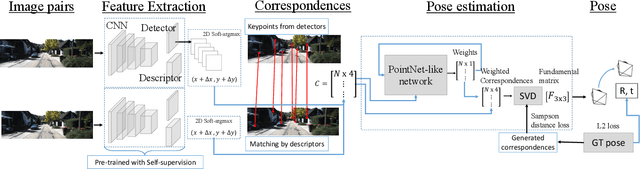

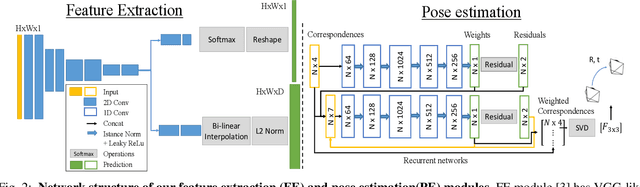

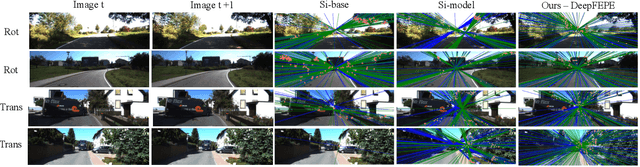

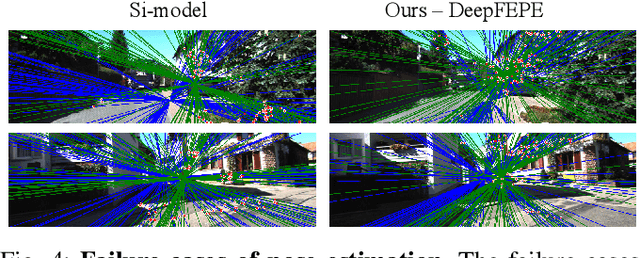

Abstract:Estimating relative camera poses from consecutive frames is a fundamental problem in visual odometry (VO) and simultaneous localization and mapping (SLAM), where classic methods consisting of hand-crafted features and sampling-based outlier rejection have been a dominant choice for over a decade. Although multiple works propose to replace these modules with learning-based counterparts, most have not yet been as accurate, robust and generalizable as conventional methods. In this paper, we design an end-to-end trainable framework consisting of learnable modules for detection, feature extraction, matching and outlier rejection, while directly optimizing for the geometric pose objective. We show both quantitatively and qualitatively that pose estimation performance may be achieved on par with the classic pipeline. Moreover, we are able to show by end-to-end training, the key components of the pipeline could be significantly improved, which leads to better generalizability to unseen datasets compared to existing learning-based methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge