Yoshiyuki Ohmura

Why Consciousness Should Explain Physical Phenomena: Toward a Testable Theory

Nov 19, 2025

Abstract:The reductionist approach commonly employed in scientific methods presupposes that both macro and micro phenomena can be explained by micro-level laws alone. This assumption implies intra-level causal closure, rendering all macro phenomena epiphenomenal. However, the integrative nature of consciousness suggests that it is a macro phenomenon. To ensure scientific testability and reject epiphenomenalism, the reductionist assumption of intra-level causal closure must be rejected. This implies that even neural-level behavior cannot be explained by observable neural-level laws alone. Therefore, a new methodology is necessary to acknowledge the causal efficacy of macro-level phenomena. We model the brain as operating under dual laws at different levels. This model includes hypothetical macro-level psychological laws that are not determined solely by micro-level neural laws, as well as the causal effects from macro to micro levels. In this study, we propose a constructive approach that explains both mental and physical phenomena through the interaction between these two sets of laws.

Feature-Based Lie Group Transformer for Real-World Applications

Jun 06, 2025

Abstract:The main goal of representation learning is to acquire meaningful representations from real-world sensory inputs without supervision. Representation learning explains some aspects of human development. Various neural network (NN) models have been proposed that acquire empirically good representations. However, the formulation of a good representation has not been established. We recently proposed a method for categorizing changes between a pair of sensory inputs. A unique feature of this approach is that transformations between two sensory inputs are learned to satisfy algebraic structural constraints. Conventional representation learning often assumes that disentangled independent feature axes are a good representation; however, we found that such a representation cannot account for conditional independence. To overcome this problem, we proposed a new method using group decomposition in Galois algebra theory. Although this method is promising for defining a more general representation, it assumes pixel-to-pixel translation without feature extraction, and can only process low-resolution images with no background, which prevents real-world application. In this study, we provide a simple method to apply our group decomposition theory to a more realistic scenario by combining feature extraction and object segmentation. We replace pixel translation with feature translation and formulate object segmentation as grouping features under the same transformation. We validated the proposed method on a practical dataset containing both real-world objects and backgrounds. We believe that our model will lead to a better understanding of human development of object recognition in the real world.

Learning Conditionally Independent Transformations using Normal Subgroups in Group Theory

Apr 06, 2025

Abstract:Humans develop certain cognitive abilities to recognize objects and their transformations without explicit supervision, highlighting the importance of unsupervised representation learning. A fundamental challenge in unsupervised representation learning is to separate different transformations in learned feature representations. Although algebraic approaches have been explored, a comprehensive theoretical framework remains underdeveloped. Existing methods decompose transformations based on algebraic independence, but these methods primarily focus on commutative transformations and do not extend to cases where transformations are conditionally independent but noncommutative. To extend current representation learning frameworks, we draw inspiration from Galois theory, where the decomposition of groups through normal subgroups provides an approach for the analysis of structured transformations. Normal subgroups naturally extend commutativity under certain conditions and offer a foundation for the categorization of transformations, even when they do not commute. In this paper, we propose a novel approach that leverages normal subgroups to enable the separation of conditionally independent transformations, even in the absence of commutativity. Through experiments on geometric transformations in images, we show that our method successfully categorizes conditionally independent transformations, such as rotation and translation, in an unsupervised manner, suggesting a close link between group decomposition via normal subgroups and transformation categorization in representation learning.
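As a small illustration of the algebraic fact this abstract relies on (not the paper's learning method), the sketch below checks numerically that planar rotations and translations do not commute, yet translations form a normal subgroup of the rigid motions of the plane: conjugating a translation by a rotation gives another translation. The 3x3 homogeneous-matrix encoding and all function names are assumptions chosen for this example.

```python
import math

def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation(theta):
    """Homogeneous matrix of a planar rotation by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def translation(tx, ty):
    """Homogeneous matrix of a planar translation by (tx, ty)."""
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]

def close(a, b, tol=1e-9):
    """Entrywise comparison of two 3x3 matrices."""
    return all(abs(a[i][j] - b[i][j]) <= tol
               for i in range(3) for j in range(3))

def is_pure_translation(m, tol=1e-9):
    """True if the upper-left 2x2 block is the identity (no rotation part)."""
    eye = [[1.0, 0.0], [0.0, 1.0]]
    return all(abs(m[i][j] - eye[i][j]) <= tol
               for i in range(2) for j in range(2))

theta = math.pi / 3
R = rotation(theta)
T = translation(2.0, -1.0)

# Rotation and translation do not commute in general.
commutes = close(matmul(R, T), matmul(T, R))

# Conjugation R T R^{-1}; the inverse of a rotation is the rotation by -theta.
conjugate = matmul(matmul(R, T), rotation(-theta))
conjugate_is_translation = is_pure_translation(conjugate)

print("R and T commute:", commutes)
print("R T R^-1 is a translation:", conjugate_is_translation)
```

The conjugated matrix is the translation by the rotated vector, which is the sense in which a normal subgroup relaxes commutativity: the two transformations need not commute, but one family is preserved under conjugation by the other.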

Enhancing Reusability of Learned Skills for Robot Manipulation via Gaze and Bottleneck

Feb 26, 2025

Abstract:Autonomous agents capable of diverse object manipulations should be able to acquire a wide range of manipulation skills with high reusability. Although advances in deep learning have made it increasingly feasible to replicate the dexterity of human teleoperation in robots, generalizing these acquired skills to previously unseen scenarios remains a significant challenge. In this study, we propose a novel algorithm, Gaze-based Bottleneck-aware Robot Manipulation (GazeBot), which enables high reusability of the learned motions even when the object positions and end-effector poses differ from those in the provided demonstrations. By leveraging gaze information and motion bottlenecks, both crucial features for object manipulation, GazeBot achieves high generalization performance compared with state-of-the-art imitation learning methods, without sacrificing its dexterity and reactivity. Furthermore, the training process of GazeBot is entirely data-driven once a demonstration dataset with gaze data is provided. Videos and code are available at https://crumbyrobotics.github.io/gazebot.

Unsupervised categorization of similarity measures

Feb 12, 2025

Abstract:In general, objects can be distinguished on the basis of their features, such as color or shape. In particular, it is assumed that similarity judgments about such features can be processed independently in different metric spaces. However, the unsupervised categorization mechanism of metric spaces corresponding to object features remains unknown. Here, we show that an artificial neural network system can autonomously categorize metric spaces through representation learning to satisfy the algebraic independence between neural networks, and project sensory information onto multiple high-dimensional metric spaces to independently evaluate the differences and similarities between features. Conventional methods often constrain the axes of the latent space to be mutually independent or orthogonal. However, the independent axes are not suitable for categorizing metric spaces. High-dimensional metric spaces that are independent of each other are not uniquely determined by the mutually independent axes, because any combination of independent axes can form mutually independent spaces. In other words, the mutually independent axes cannot be used to naturally categorize different feature spaces, such as color space and shape space. Therefore, constraining the axes to be mutually independent makes it difficult to categorize high-dimensional metric spaces. To overcome this problem, we developed a method to constrain only the spaces to be mutually independent and not the composed axes to be independent. Our theory provides general conditions for the unsupervised categorization of independent metric spaces, thus advancing the mathematical theory of functional differentiation of neural networks.

Understanding via Gaze: Gaze-based Task Decomposition for Imitation Learning of Robot Manipulation

Jan 25, 2025

Abstract:In imitation learning for robotic manipulation, decomposing object manipulation tasks into multiple semantic actions is essential. This decomposition enables the reuse of learned skills in varying contexts and the combination of acquired skills to perform novel tasks, rather than merely replicating demonstrated motions. Gaze, an evolutionary tool for understanding ongoing events, plays a critical role in human object manipulation, where it strongly correlates with motion planning. In this study, we propose a simple yet robust task decomposition method based on gaze transitions. We hypothesize that an imitation agent's gaze control, fixating on specific landmarks and transitioning between them, naturally segments demonstrated manipulations into sub-tasks. Notably, our method achieves consistent task decomposition across all demonstrations, which is desirable in contexts such as machine learning. Using teleoperation, a common modality in imitation learning for robotic manipulation, we collected demonstration data for various tasks, applied our segmentation method, and evaluated the characteristics and consistency of the resulting sub-tasks. Furthermore, through extensive testing across a wide range of hyperparameter variations, we demonstrated that the proposed method possesses the robustness necessary for application to different robotic systems.
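The idea that gaze transitions segment a demonstration can be illustrated with a toy sketch. It assumes the gaze has already been reduced to one fixated landmark label per timestep, which is a simplification made for this example; the function name `segment_by_gaze` and the labels are hypothetical, and the paper's actual method is not reproduced here.

```python
from itertools import groupby

def segment_by_gaze(landmarks):
    """Split a per-timestep sequence of fixated landmark labels into sub-tasks.

    Returns (label, start, end) tuples with end exclusive; a new segment
    begins whenever the fixated landmark changes.
    """
    segments = []
    start = 0
    for label, run in groupby(landmarks):
        length = len(list(run))
        segments.append((label, start, start + length))
        start += length
    return segments

# Hypothetical demonstration: the gaze moves cup -> plate -> cup.
gaze = ["cup", "cup", "cup", "plate", "plate", "cup"]
segments = segment_by_gaze(gaze)
for label, start, end in segments:
    print(f"sub-task on {label}: timesteps [{start}, {end})")
```

Because the boundaries depend only on where the fixation changes, two demonstrations with the same landmark order yield the same sequence of sub-tasks even if their timing differs, which is one way to read the consistency claim in the abstract.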

Multi-task robot data for dual-arm fine manipulation

Jan 26, 2024

Abstract:In the field of robotic manipulation, deep imitation learning is recognized as a promising approach for acquiring manipulation skills. Additionally, learning from diverse robot datasets is considered a viable method to achieve versatility and adaptability. In such research, by learning various tasks, robots achieved generality across multiple objects. However, such multi-task robot datasets have mainly focused on single-arm tasks that are relatively imprecise, not addressing the fine-grained object manipulation that robots are expected to perform in the real world. This paper introduces a dataset of diverse object manipulations that includes dual-arm tasks and/or tasks requiring fine manipulation. To this end, we have generated a dataset with 224k episodes (150 hours, 1,104 language instructions) which includes dual-arm fine tasks such as bowl-moving, pencil-case opening or banana-peeling, and this data is publicly available. Additionally, this dataset includes visual attention signals as well as dual-action labels, a signal that separates actions into a robust reaching trajectory and precise interaction with objects, and language instructions to achieve robust and precise object manipulation. We applied the dataset to our Dual-Action and Attention (DAA), a model designed for fine-grained dual-arm manipulation tasks and robust against covariate shifts. The model was tested with over 7k total trials in real robot manipulation tasks, demonstrating its capability in fine manipulation. The dataset is available at https://sites.google.com/view/multi-task-fine.

Ablation Study to Clarify the Mechanism of Object Segmentation in Multi-Object Representation Learning

Oct 05, 2023

Abstract:Multi-object representation learning aims to represent complex real-world visual input using the composition of multiple objects. Representation learning methods have often used unsupervised learning to segment an input image into individual objects and encode these objects into each latent vector. However, it is not clear how previous methods have achieved the appropriate segmentation of individual objects. Additionally, most of the previous methods regularize the latent vectors using a Variational Autoencoder (VAE). Therefore, it is not clear whether VAE regularization contributes to appropriate object segmentation. To elucidate the mechanism of object segmentation in multi-object representation learning, we conducted an ablation study on MONet, which is a typical method. MONet represents multiple objects using pairs that consist of an attention mask and the latent vector corresponding to the attention mask. Each latent vector is encoded from the input image and attention mask. Then, the component image and attention mask are decoded from each latent vector. The loss function of MONet consists of 1) the sum of reconstruction losses between the input image and decoded component image, 2) the VAE regularization loss of the latent vector, and 3) the reconstruction loss of the attention mask to explicitly encode shape information. We conducted an ablation study on these three loss functions to investigate the effect on segmentation performance. Our results showed that the VAE regularization loss did not affect segmentation performance and the other losses did affect it. Based on this result, we hypothesize that it is important to maximize the attention mask of the image region best represented by a single latent vector corresponding to the attention mask. We confirmed this hypothesis by evaluating a new loss function with the same mechanism as the hypothesis.
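The three-term loss described in this abstract can be written schematically as follows. The symbols are assumptions introduced for this sketch: $K$ is the number of object slots, and $\beta$ and $\gamma$ are weights on the second and third terms. The abstract does not state the exact form of each term.

```latex
\mathcal{L}
  = \underbrace{\sum_{k=1}^{K} \mathcal{L}^{(k)}_{\mathrm{recon}}}_{\text{1) component image reconstruction}}
  + \beta \, \underbrace{\mathcal{L}_{\mathrm{VAE}}}_{\text{2) latent regularization}}
  + \gamma \, \underbrace{\mathcal{L}_{\mathrm{mask}}}_{\text{3) attention mask reconstruction}}
```

Under this reading, ablating a term corresponds to dropping it from the sum (for example, setting $\beta = 0$ removes the VAE regularization). That reading is an interpretation for illustration, not a description of the study's exact protocol.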

An algebraic theory to discriminate qualia in the brain

May 31, 2023

Abstract:The mind-brain problem is to bridge the relations between higher mental events and lower neural events. To address this, some mathematical models have been proposed to explain how the brain can represent the discriminative structure of qualia, but they remain unresolved due to a lack of validation methods. To understand the qualia discrimination mechanism, we need to ask how the brain autonomously develops such a mathematical structure using the constructive approach. Here we show that a brain model that learns to satisfy an algebraic independence between neural networks separates metric spaces corresponding to qualia types. We formulate the algebraic independence to link it to the other-qualia-type invariant transformation, a familiar formulation of the permanence of perception. The learning of algebraic independence proposed here explains downward causation, i.e., the macro-level relationship has causal power over its components, because algebra is the macro-level relationship that is irreducible to a law of neurons, and a self-evaluation of algebra is used to control neurons. The downward causation is required to explain a causal role of mental events on neural events, suggesting that learning algebraic structure between neural networks can contribute to the further development of a mathematical theory of consciousness.

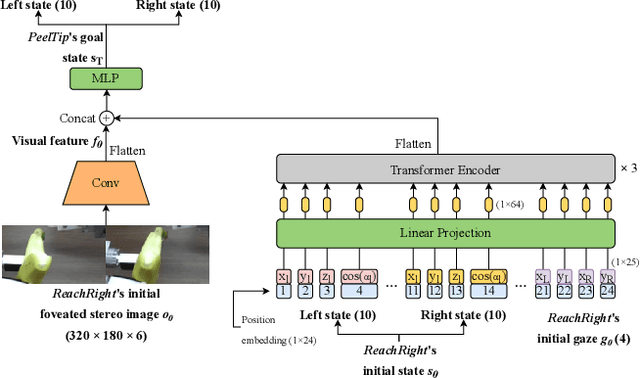

Robot peels banana with goal-conditioned dual-action deep imitation learning

Mar 18, 2022

Abstract:A long-horizon dexterous robot manipulation task of deformable objects, such as banana peeling, is problematic because of difficulties in object modeling and a lack of knowledge about stable and dexterous manipulation skills. This paper presents a goal-conditioned dual-action deep imitation learning (DIL) method that can learn dexterous manipulation skills using human demonstration data. Previous DIL methods map the current sensory input to a reactive action, which easily fails because of compounding errors in imitation learning caused by recurrent computation of actions. The proposed method predicts reactive action when the precise manipulation of the target object is required (local action) and generates the entire trajectory when the precise manipulation is not required (global action). This dual-action formulation effectively prevents compounding error with the trajectory-based global action while responding to unexpected changes in the target object with the reactive local action. Furthermore, in this formulation, both global and local actions are conditioned on a goal state, defined as the last step of each subtask, for robust policy prediction. The proposed method was tested on a real dual-arm robot and successfully accomplished the banana peeling task.
