Robot peels banana with goal-conditioned dual-action deep imitation learning


Mar 18, 2022

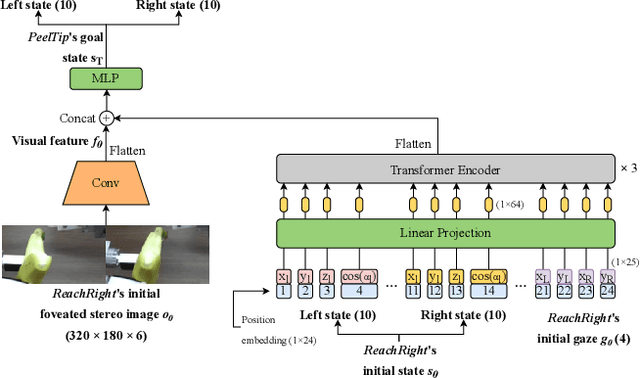

Long-horizon dexterous robot manipulation of deformable objects, such as banana peeling, is difficult because such objects are hard to model and because knowledge about stable and dexterous manipulation skills is lacking. This paper presents goal-conditioned dual-action deep imitation learning (DIL), which can learn dexterous manipulation skills from human demonstration data. Previous DIL methods map the current sensory input to a reactive action, an approach that fails easily because of compounding errors in imitation learning caused by the recurrent computation of actions. The proposed method predicts a reactive action when precise manipulation of the target object is required (local action) and generates the entire trajectory when precise manipulation is not required (global action). This dual-action formulation effectively prevents compounding errors through the trajectory-based global action while responding to unexpected changes in the target object through the reactive local action. Furthermore, in this formulation, both global and local actions are conditioned on a goal state, defined as the last step of each subtask, for robust policy prediction. The proposed method was tested on a real dual-arm robot and successfully accomplished the banana peeling task.
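The dual-action idea described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the learned networks are replaced by simple hand-written stand-ins (linear interpolation for the global trajectory, a proportional step for the local reactive action), and every name here (`global_action`, `local_action`, `run_subtask`, `needs_precision`) is hypothetical. How the real system decides when precision is required is also an assumption, reduced here to a boolean flag.

```python
from typing import List, Tuple

State = List[float]


def distance(a: State, b: State) -> float:
    """Euclidean distance between two states."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def global_action(state: State, goal: State, steps: int = 5) -> List[State]:
    """Stand-in for a learned trajectory predictor (assumption).

    Produces the whole trajectory to the goal in one shot, so no
    prediction is fed back into the next prediction.
    """
    return [
        [s + (g - s) * k / steps for s, g in zip(state, goal)]
        for k in range(1, steps + 1)
    ]


def local_action(state: State, goal: State, gain: float = 0.5) -> State:
    """Stand-in for a learned reactive policy (assumption).

    Computes a single step from the current state, so it can react
    if the state changes unexpectedly between steps.
    """
    return [s + gain * (g - s) for s, g in zip(state, goal)]


def run_subtask(
    state: State,
    goal: State,
    needs_precision: bool,
    tol: float = 1e-3,
    max_steps: int = 100,
) -> Tuple[State, List[str]]:
    """Run one subtask toward its goal state (the subtask's last step).

    Both action types are conditioned on the same goal state.
    Returns the final state and a log of which action type was used.
    """
    log: List[str] = []
    if not needs_precision:
        # Global action: follow a trajectory generated once up front.
        for waypoint in global_action(state, goal):
            state = waypoint
            log.append("global")
        return state, log
    # Local action: re-evaluate the policy at every control step.
    for _ in range(max_steps):
        if distance(state, goal) < tol:
            break
        state = local_action(state, goal)
        log.append("local")
    return state, log


if __name__ == "__main__":
    reach_state, reach_log = run_subtask([0.0, 0.0], [1.0, 1.0], needs_precision=False)
    peel_state, peel_log = run_subtask(reach_state, [1.2, 0.8], needs_precision=True)
    print(reach_log, peel_log)
```

In this sketch the coarse reaching subtask consumes a single precomputed trajectory, while the precise subtask queries the policy at each step, which mirrors the trade-off the abstract describes between avoiding compounding errors and staying responsive.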
