Yoshiki Sato

Characterization of Locality in Spin States and Forced Moves for Optimizations

Dec 05, 2023

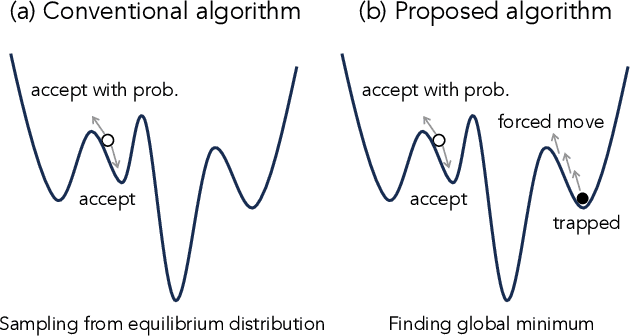

Abstract: Ising formulations are widely used to solve combinatorial optimization problems, and a variety of quantum and semiconductor-based hardware has recently become available. In combinatorial optimization, local minima in the energy landscape make it difficult to reach the global minimum. We note that the aim of optimization is not to draw exact samples from the Boltzmann distribution, so there is no need to satisfy the detailed balance condition. In light of this, we develop an algorithm that escapes local minima efficiently, at the cost of no longer yielding exact samples. For this purpose, we use a feature that characterizes the locality of the current state, which is easy to obtain on a certain type of specialized hardware. Furthermore, because the proposed algorithm is based on a rejection-free algorithm, its computational cost is low. After presenting the details of the proposed algorithm, we report the results of numerical experiments that demonstrate the effectiveness of the proposed feature and algorithm.
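
A minimal Python sketch of the generic rejection-free idea the algorithm builds on, assuming an Ising energy E(s) = -(1/2) Σ_ij J_ij s_i s_j - Σ_i h_i s_i with symmetric J and zero diagonal. It is not the proposed forced-move algorithm, and it does not implement the paper's locality feature, which the abstract does not specify. It only shows the baseline: compute the Metropolis acceptance weight of every single-spin flip at once, pick one flip with probability proportional to its weight, and always apply it, so no step is wasted on a rejection. The helper `is_local_minimum` checks whether any single flip can lower the energy. All function names are hypothetical.

```python
import numpy as np


def energy(J, h, s):
    """Ising energy E(s) = -1/2 * s^T J s - h^T s (J symmetric, zero diagonal)."""
    return -0.5 * s @ J @ s - h @ s


def flip_deltas(J, h, s):
    """Energy change for flipping each spin i: dE_i = 2 * s_i * (sum_j J_ij s_j + h_i)."""
    return 2.0 * s * (J @ s + h)


def is_local_minimum(J, h, s):
    """True if no single-spin flip lowers the energy."""
    return bool(np.all(flip_deltas(J, h, s) >= 0))


def rejection_free_step(J, h, s, beta, rng):
    """Flip exactly one spin, chosen with probability proportional to its
    Metropolis acceptance weight min(1, exp(-beta * dE_i)).

    Hypothetical helper: no waiting-time correction is applied, so the
    chain does not sample the Boltzmann distribution exactly.
    """
    dE = flip_deltas(J, h, s)
    # exp(-beta * max(dE, 0)) equals min(1, exp(-beta * dE)) but cannot overflow
    w = np.exp(-beta * np.maximum(dE, 0.0))
    total = w.sum()
    if total > 0:
        i = int(rng.choice(len(s), p=w / total))
    else:
        # All weights underflowed (very large beta): take the least uphill flip
        i = int(np.argmin(dE))
    s_new = s.copy()
    s_new[i] = -s_new[i]
    return s_new, i, dE[i]


if __name__ == "__main__":
    # Three-spin ferromagnetic chain 0-1-2 with no external field
    J = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
    h = np.zeros(3)
    s = np.array([1.0, -1.0, 1.0])
    rng = np.random.default_rng(0)
    for _ in range(5):
        s, i, d = rejection_free_step(J, h, s, beta=2.0, rng=rng)
        print(f"flipped spin {i}, dE = {d:+.1f}, E = {energy(J, h, s):+.1f}")
```

Because the flip is chosen without weighting each state by its expected residence time, the chain's stationary distribution differs from the Boltzmann distribution, which is acceptable here since the goal is optimization rather than exact sampling.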

An Accurate Graph Generative Model with Tunable Features

Sep 03, 2023

Abstract: A graph is a common and powerful data structure for modeling communication and social networks. Models that generate graphs with arbitrary features are important basic technologies for repeated network simulations and for predicting topology changes. Although existing generative models can produce graphs similar to real-world graphs, generative models with tunable features have been less explored. We previously proposed GraphTune, a generative model that continuously tunes specific features of generated graphs while preserving most of the features of a given graph dataset. However, the tuning accuracy of GraphTune has not been sufficient for practical applications. In this paper, we propose a method to improve the accuracy of GraphTune by adding a new mechanism that feeds back the errors in the features of generated graphs, and by training the components alternately and independently. Experiments on a real-world graph dataset showed that the features of the generated graphs are tuned more accurately than with conventional models.

GraphTune: A Learning-based Graph Generative Model with Tunable Structural Features

Jan 27, 2022

Abstract: Generative models for graphs have been actively studied for decades and have a wide range of applications. Recently, learning-based graph generation that reproduces real-world graphs has attracted growing attention. Several generative models using modern machine learning techniques have been proposed, but conditional generation of general graphs remains less explored. In this paper, we propose a generative model that can tune the value of a global-level structural feature given as a condition. Our model, GraphTune, makes it possible to tune the value of any structural feature of generated graphs using Long Short-Term Memory (LSTM) and a Conditional Variational AutoEncoder (CVAE). We compared GraphTune with conventional models on a real graph dataset. The evaluations show that GraphTune tunes the value of a global-level structural feature more clearly than the conventional models.
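
For reference, the standard conditional VAE objective is shown below, with G a graph (encoded, for example, as a sequence for the LSTM), c the conditioning feature value, and z the latent variable. This is the generic textbook form with a standard normal prior, given as an assumption; the abstract does not state GraphTune's exact loss.

```latex
\mathcal{L}(\theta, \phi; G, c)
  = \mathbb{E}_{q_\phi(z \mid G, c)}\!\left[\log p_\theta(G \mid z, c)\right]
  - D_{\mathrm{KL}}\!\left(q_\phi(z \mid G, c) \,\middle\|\, p(z)\right),
\qquad p(z) = \mathcal{N}(0, I)
```

In this generic form, generation samples z from p(z) and decodes with c set to the desired feature value, which is what makes the feature tunable.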

A Tunable Model for Graph Generation Using LSTM and Conditional VAE

Apr 15, 2021

Abstract: With the development of graph applications, generative models for graphs have become increasingly important. Classically, stochastic models that generate graphs with predefined probabilities of edges and nodes have been studied. Recently, models that learn to reproduce the structural features of graphs from real graph data using machine learning have also been studied. However, although these machine-learning-based models can learn structural features from data, they cannot tune features to generate graphs with specific features. In this paper, we propose a generative model that can tune specific features while learning the structural features of graphs from data. Using a dataset of graphs with various features generated by a stochastic model, we confirm that our model can generate graphs with specific features.
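
The abstract does not name the stochastic model or the feature. As an illustration only, the sketch below assumes an Erdős–Rényi G(n, p) model, in which each possible edge is present independently with probability p, and labels each graph with its average clustering coefficient as the global feature. Sweeping p yields a dataset whose feature values span a range, the kind of data on which a tunable model could be trained and checked. Function names are hypothetical.

```python
import itertools
import random


def gnp_graph(n, p, rng):
    """Erdős–Rényi G(n, p): each of the n*(n-1)/2 possible edges appears with probability p."""
    adj = {v: set() for v in range(n)}
    for u, v in itertools.combinations(range(n), 2):
        if rng.random() < p:
            adj[u].add(v)
            adj[v].add(u)
    return adj


def average_clustering(adj):
    """Mean local clustering coefficient; nodes with degree < 2 contribute 0."""
    if not adj:
        return 0.0
    total = 0.0
    for v, nbrs in adj.items():
        k = len(nbrs)
        if k < 2:
            continue
        # Count edges among the neighbors of v (closed triangles through v)
        links = sum(1 for a, b in itertools.combinations(nbrs, 2) if b in adj[a])
        total += 2.0 * links / (k * (k - 1))
    return total / len(adj)


def make_dataset(n, edge_probs, graphs_per_prob, seed=0):
    """Return (graph, feature_value) pairs for a range of edge probabilities."""
    rng = random.Random(seed)
    dataset = []
    for p in edge_probs:
        for _ in range(graphs_per_prob):
            g = gnp_graph(n, p, rng)
            dataset.append((g, average_clustering(g)))
    return dataset


if __name__ == "__main__":
    data = make_dataset(n=30, edge_probs=[0.1, 0.3, 0.5], graphs_per_prob=3)
    for g, c in data:
        m = sum(len(nbrs) for nbrs in g.values()) // 2
        print(f"edges = {m:3d}, average clustering = {c:.3f}")
```

In G(n, p), the expected local clustering coefficient of a node with degree at least 2 is p, so the feature values in such a dataset roughly follow the chosen edge probabilities.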
