Yongshuo Zhu

Semantic-CD: Remote Sensing Image Semantic Change Detection towards Open-vocabulary Setting

Jan 12, 2025

Abstract:Remote sensing image semantic change detection is a method used to analyze remote sensing images, aiming to identify areas of change as well as categorize these changes within images of the same location taken at different times. Traditional change detection methods often face challenges in generalizing across semantic categories in practical scenarios. To address this issue, we introduce a novel approach called Semantic-CD, specifically designed for semantic change detection in remote sensing images. This method incorporates the open vocabulary semantics from the vision-language foundation model, CLIP. By utilizing CLIP's extensive vocabulary knowledge, our model enhances its ability to generalize across categories and improves segmentation through fully decoupled multi-task learning, which includes both binary change detection and semantic change detection tasks. Semantic-CD consists of four main components: a bi-temporal CLIP visual encoder for extracting features from bi-temporal images, an open semantic prompter for creating semantic cost volume maps with open vocabulary, a binary change detection decoder for generating binary change detection masks, and a semantic change detection decoder for producing semantic labels. Experimental results on the SECOND dataset demonstrate that Semantic-CD achieves more accurate masks and reduces semantic classification errors, illustrating its effectiveness in applying semantic priors from vision-language foundation models to SCD tasks.
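The abstract does not say how the open semantic prompter builds its cost volume maps. As a rough illustration only, one common way to form such a volume is to take the cosine similarity between each pixel's visual embedding and the text embedding of each class name. The sketch below assumes that formulation; the function names, the toy two-dimensional embeddings, and the class names in the comments are hypothetical and are not taken from the paper.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def cost_volume(pixel_feats, text_feats):
    """Build an H x W x K volume of pixel-to-class similarities.

    pixel_feats: H x W x D nested lists (per-pixel visual embeddings).
    text_feats:  K x D nested lists (one embedding per class name).
    Assumption: cosine similarity stands in for the paper's prompter.
    """
    return [[[cosine(p, t) for t in text_feats] for p in row]
            for row in pixel_feats]

def argmax_labels(volume):
    """Pick the best-matching class index at every pixel."""
    return [[max(range(len(scores)), key=scores.__getitem__)
             for scores in row]
            for row in volume]

# Toy data: hypothetical embeddings for two classes, e.g. "building" and "water".
text_feats = [[1.0, 0.0], [0.0, 1.0]]
# A 1 x 2 "image" whose pixels lean towards class 0 and class 1 respectively.
pixel_feats = [[[0.9, 0.1], [0.2, 0.8]]]

volume = cost_volume(pixel_feats, text_feats)
labels = argmax_labels(volume)
```

Because the class set enters only through `text_feats`, adding a category in this sketch means adding one more text embedding rather than retraining a fixed classifier head, which is the general appeal of an open-vocabulary setup.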

Semantic-CC: Boosting Remote Sensing Image Change Captioning via Foundational Knowledge and Semantic Guidance

Jul 19, 2024

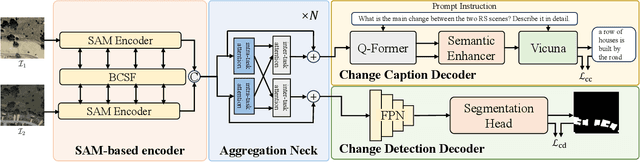

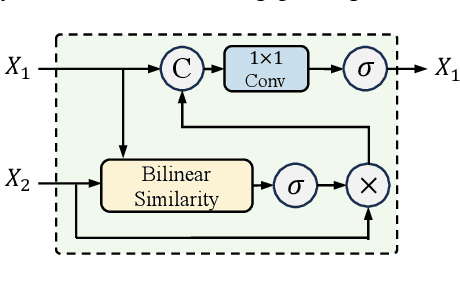

Abstract:Remote sensing image change captioning (RSICC) aims to articulate the changes in objects of interest within bi-temporal remote sensing images using natural language. Given the limitations of current RSICC methods in expressing general features across multi-temporal and spatial scenarios, and their deficiency in providing granular, robust, and precise change descriptions, we introduce a novel change captioning (CC) method based on the foundational knowledge and semantic guidance, which we term Semantic-CC. Semantic-CC alleviates the dependency of high-generalization algorithms on extensive annotations by harnessing the latent knowledge of foundation models, and it generates more comprehensive and accurate change descriptions guided by pixel-level semantics from change detection (CD). Specifically, we propose a bi-temporal SAM-based encoder for dual-image feature extraction; a multi-task semantic aggregation neck for facilitating information interaction between heterogeneous tasks; a straightforward multi-scale change detection decoder to provide pixel-level semantic guidance; and a change caption decoder based on the large language model (LLM) to generate change description sentences. Moreover, to ensure the stability of the joint training of CD and CC, we propose a three-stage training strategy that supervises different tasks at various stages. We validate the proposed method on the LEVIR-CC and LEVIR-CD datasets. The experimental results corroborate the complementarity of CD and CC, demonstrating that Semantic-CC can generate more accurate change descriptions and achieve optimal performance across both tasks.
