Yonatan Vaizman

Optimizing Wi-Fi Channel Selection in a Dense Neighborhood

Nov 07, 2022

Abstract: In dense neighborhoods, there are often dozens of homes in close proximity. This can be either a tight city block with many single-family homes (SFHs) or a multiple-dwelling-unit (MDU) complex (such as a large apartment building or condominium). Each home in such a neighborhood (either an SFH or a single unit in an MDU complex) has its own Wi-Fi access point (AP). Because there are few (typically 2 or 3) non-overlapping radio channels for Wi-Fi, neighboring homes may end up sharing a channel and competing for airtime, which can lead to a poor experience of slow internet (long latency, buffering while streaming movies, etc.). Optimizing Wi-Fi across all the APs in a dense neighborhood is therefore highly desirable for providing the best user experience. We present a method for selecting Wi-Fi channels centrally for all the APs in a dense neighborhood. We describe how to use recent observations to estimate the potential-pain matrix: for each pair of APs, how much Wi-Fi pain they would cause each other if they were on the same channel. We formulate an optimization problem: finding a channel allocation (which channel each home should use) that minimizes the total Wi-Fi pain in the neighborhood. We design an optimization algorithm that uses gradient descent over a neural network to solve this problem. We describe initial results from offline experiments comparing our optimization solver to an off-the-shelf mixed-integer-programming solver. Our experiments show that the off-the-shelf solver finds a better (lower total pain) solution on the training data (from recent days), but our neural-network solver generalizes better: it finds a solution that achieves lower total pain on the test data (the following day).

* We discussed this work at the 2022 Fall Technical Forum as part of SCTE Cable-Tec Expo. This paper was published in SCTE Technical Journal. When citing this work, please cite the original publication.
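
The abstract defines the objective as the total pain summed over every pair of APs that share a channel. A minimal Python sketch of that objective follows, together with a simple greedy local-search baseline; this baseline is not the paper's neural-network solver or the mixed-integer-programming solver it is compared against. It assumes a symmetric pain matrix with a zero diagonal and channels indexed 0..K-1; the names `total_pain` and `local_search` are hypothetical.

```python
from itertools import combinations


def total_pain(pain, channels):
    """Objective: sum pain[i][j] over every AP pair i < j on the same channel."""
    return sum(
        pain[i][j]
        for i, j in combinations(range(len(channels)), 2)
        if channels[i] == channels[j]
    )


def local_search(pain, num_channels, channels, max_rounds=100):
    """Hypothetical greedy baseline (not the paper's solver): repeatedly move
    one AP to the channel where it shares the least pain with other APs,
    until no single move lowers the total. Assumes a symmetric pain matrix."""
    channels = list(channels)
    n = len(channels)
    for _ in range(max_rounds):
        improved = False
        for i in range(n):
            # cost[c] = pain AP i would share with the APs currently on channel c.
            cost = [0.0] * num_channels
            for j in range(n):
                if j != i:
                    cost[channels[j]] += pain[i][j]
            best = min(range(num_channels), key=lambda c: cost[c])
            if cost[best] < cost[channels[i]]:
                channels[i] = best
                improved = True
        if not improved:
            break
    return channels


# Toy example: 3 APs, 2 channels, all starting on channel 0.
pain = [[0, 5, 1], [5, 0, 2], [1, 2, 0]]
best = local_search(pain, num_channels=2, channels=[0, 0, 0])
print(best, total_pain(pain, best))  # prints [1, 0, 1] 1
```

With a symmetric matrix, moving AP i changes the total pain by exactly `cost[new] - cost[old]`, so every accepted move strictly lowers the objective and the search terminates, though possibly at a local minimum.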

MapiFi: Using Wi-Fi Signals to Map Home Devices

Feb 09, 2022

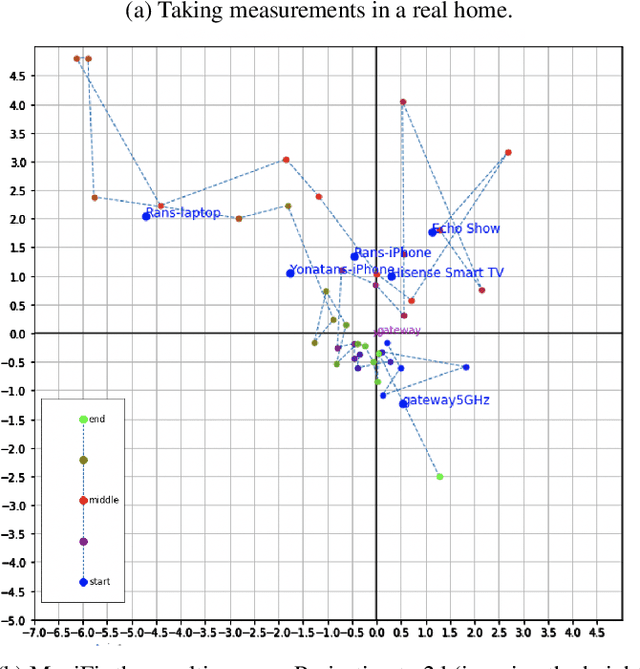

Abstract: Imagine a map of your home with all of your connected devices (computers, TVs, voice-control devices, printers, security cameras, etc.) in their locations. You could then easily group devices into user profiles, monitor Wi-Fi quality and activity in different areas of your home, and even locate a lost tablet in your home. MapiFi is a method to generate that map of the devices in a home. The first part of MapiFi involves the user (either a technician or the resident) walking around the home with a mobile device that listens to Wi-Fi radio channels. The mobile device detects Wi-Fi packets coming from all of the home's devices connected to the gateway and measures their signal strengths (ignoring the content of the packets). The second part is an algorithm that uses all the signal-strength measurements to estimate the locations of all the devices in the home. MapiFi then visualizes the home's space as a coordinate system, with devices marked as points in this space. A patent has been filed based on this technology. This paper was published in SCTE Technical Journal (see published paper at https://wagtail-prod-storage.s3.amazonaws.com/documents/SCTE_Technical_Journal_V1N3.pdf).

* 6 pages, 4 figures, published in SCTE Technical Journal, patent pending at the US Patent and Trademark Office
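
The abstract does not specify the localization algorithm. As an illustration only, the sketch below locates a single device by grid search under an assumed log-distance path-loss model, `rssi = p0 - 10 * n * log10(d)`, where the walker's measurement positions are known and the device's unknown power term `p0` is fitted per candidate position by least squares. The model, the exponent `n`, and the function names are assumptions, not MapiFi's method.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def path_loss_offset(walker: Point, candidate: Point, n: float) -> float:
    """10 * n * log10(d) under an assumed log-distance path-loss model."""
    d = max(math.dist(walker, candidate), 0.1)  # clamp to avoid log10(0)
    return 10 * n * math.log10(d)


def locate_device(measurements: Sequence[Tuple[Point, float]],
                  grid: Sequence[Point], n: float = 2.0) -> Point:
    """Hypothetical localizer: return the grid point whose predicted RSSI
    pattern best fits the (walker_position, rssi_dbm) measurements,
    assuming rssi = p0 - 10 * n * log10(distance) with unknown p0."""
    best, best_err = grid[0], float("inf")
    for cand in grid:
        offsets = [path_loss_offset(pos, cand, n) for pos, _ in measurements]
        # Least-squares p0 for this candidate is the mean of (rssi + offset).
        p0 = sum(r + o for (_, r), o in zip(measurements, offsets)) / len(offsets)
        # Squared error between measured and predicted RSSI.
        err = sum((r - (p0 - o)) ** 2 for (_, r), o in zip(measurements, offsets))
        if err < best_err:
            best, best_err = cand, err
    return best
```

Fitting `p0` per candidate means only the relative signal strengths across walker positions matter, which sidesteps not knowing each device's transmit power; a real system would also need to handle noise, walls, and multiple devices.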

Recognizing Detailed Human Context In-the-Wild from Smartphones and Smartwatches

Sep 30, 2017

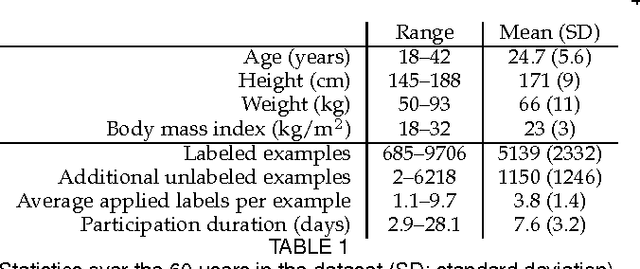

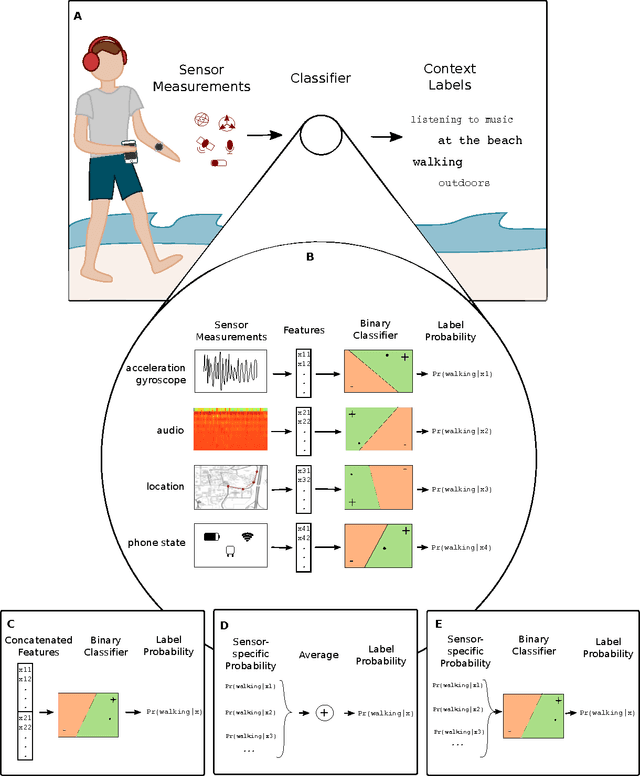

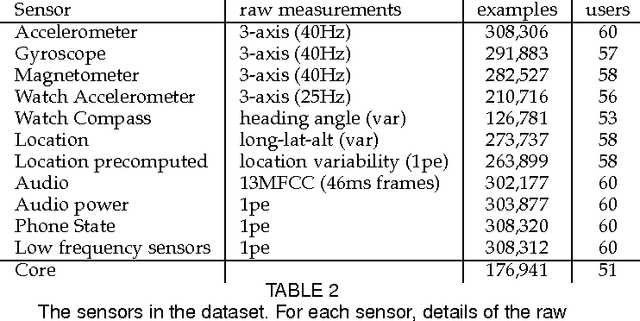

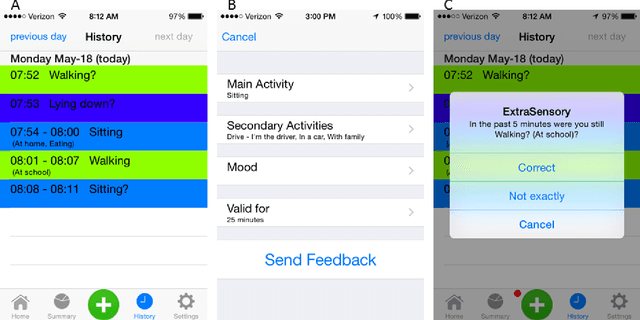

Abstract: The ability to automatically recognize a person's behavioral context can contribute to health monitoring, aging care and many other domains. Validating context recognition in-the-wild is crucial to promote practical applications that work in real-life settings. We collected over 300k minutes of sensor data with context labels from 60 subjects. Unlike previous studies, our subjects used their own personal phones, in any way that was convenient to them, and went about their routines in their natural environments. Unscripted behavior and unconstrained phone usage resulted in situations that are harder to recognize. We demonstrate the importance of fusing multimodal sensors for resolving such cases. We present a baseline system, and encourage researchers to use our public dataset to compare methods and improve context recognition in-the-wild.

* This paper was accepted and is to appear in IEEE Pervasive Computing, vol. 16, no. 4, October-December 2017, pp. 62-74
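
As a hypothetical illustration of multimodal fusion (not the paper's baseline system), the sketch below performs late fusion for one context label: each sensor's classifier is assumed to output a probability, and the fused score is their weighted average over the sensors that actually reported, since with unconstrained phone usage some sensors may be missing. The sensor names, weights, and the function name `late_fusion` are assumptions.

```python
from typing import Dict, Optional


def late_fusion(per_sensor_prob: Dict[str, Optional[float]],
                weights: Optional[Dict[str, float]] = None) -> Optional[float]:
    """Weighted average of per-sensor probabilities for one context label.
    Sensors reporting None (e.g., a watch that is not worn) are skipped;
    returns None if no sensor is available. Unlisted sensors get weight 1."""
    total, norm = 0.0, 0.0
    for sensor, p in per_sensor_prob.items():
        if p is None:
            continue
        w = 1.0 if weights is None else weights.get(sensor, 1.0)
        total += w * p
        norm += w
    return total / norm if norm > 0 else None


# Hypothetical example: the watch is missing, so only two sensors vote.
score = late_fusion({"accelerometer": 0.9, "audio": 0.5, "watch": None})
```

Skipping unavailable sensors rather than imputing them is one simple way to keep a prediction when only some modalities are present.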

Codebook based Audio Feature Representation for Music Information Retrieval

Dec 19, 2013

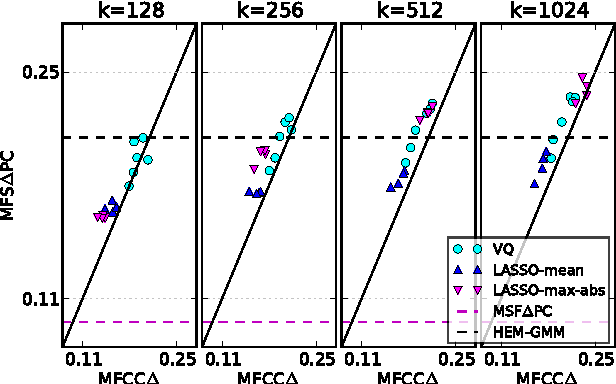

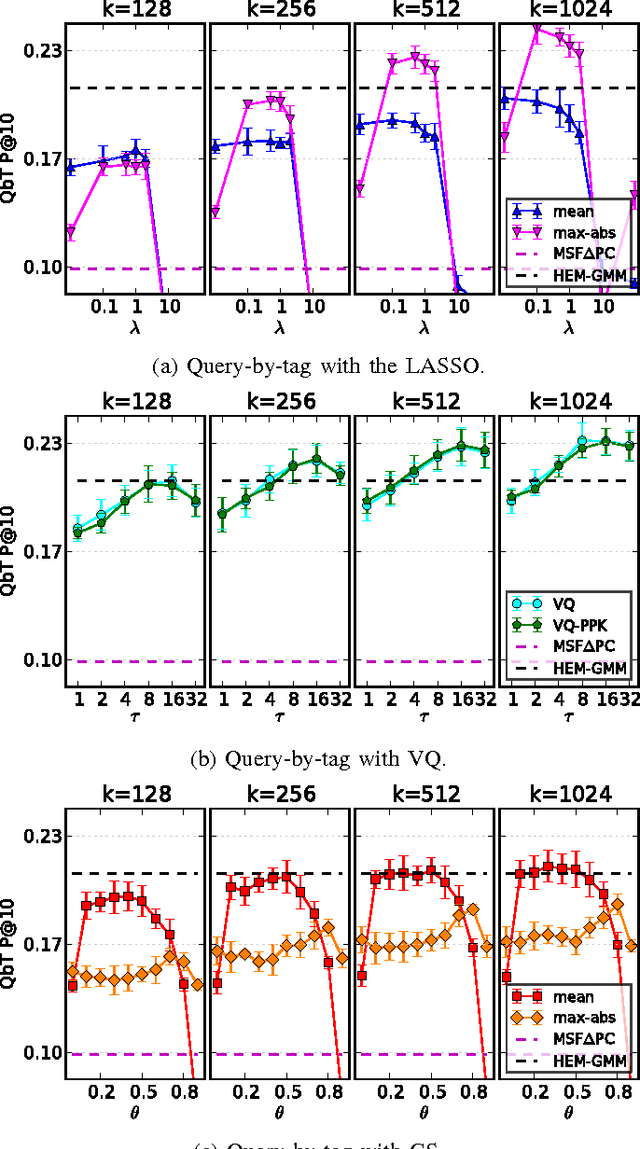

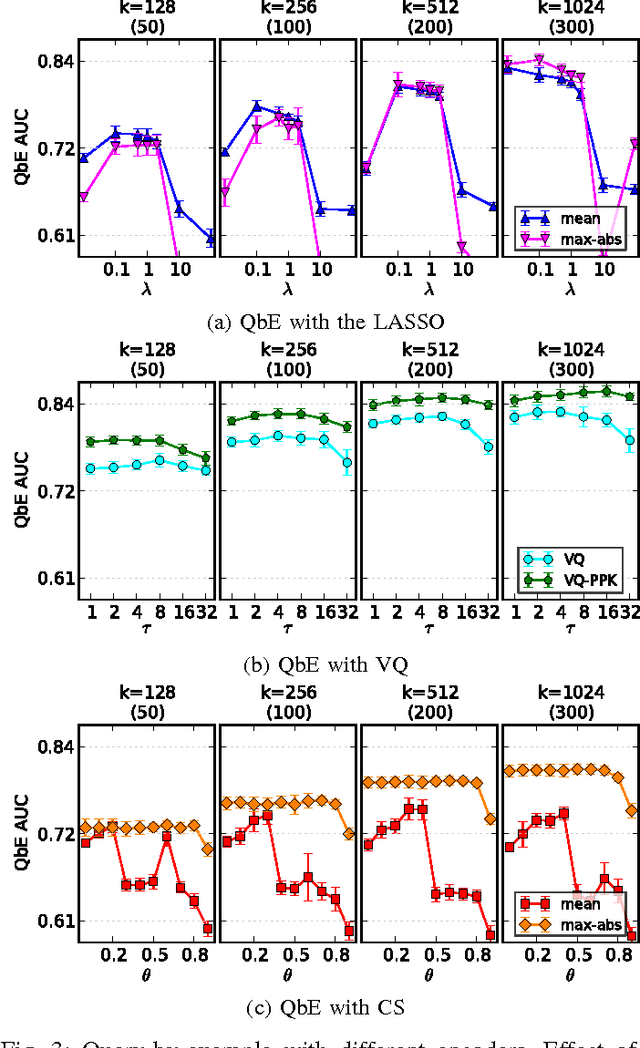

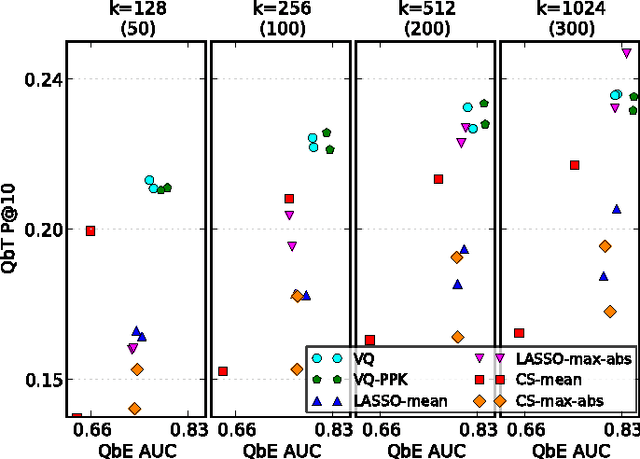

Abstract: Digital music has become prolific on the web in recent decades. Automated recommendation systems are essential for users to discover music they love and for artists to reach an appropriate audience. When manual annotations and user-preference data are lacking (e.g., for new artists), these systems must rely on *content-based* methods. Besides powerful machine learning tools for classification and retrieval, a key component of successful recommendation is the *audio content representation*. Good representations should capture informative musical patterns in the audio signal of songs. These representations should be concise, to enable efficient management (low storage, easy indexing, fast search) of huge music repositories, and should also be easy and fast to compute, to enable real-time interaction with a user supplying new songs to the system. Before designing new audio features, we explore the usage of traditional local features, adding a stage of encoding with a pre-computed *codebook* and a stage of pooling to get compact vector representations. We experiment with different encoding methods, namely *the LASSO*, *vector quantization (VQ)* and *cosine similarity (CS)*. We evaluate the representations' quality in two music information retrieval applications: query-by-tag and query-by-example. Our results show that concise representations can be used for successful performance in both applications. We recommend using top-$\tau$ VQ encoding, which consistently performs well in both applications and requires much less computation time than the LASSO.
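
The encode-then-pool pipeline can be sketched for the recommended top-$\tau$ VQ encoding. In this minimal NumPy sketch, each local feature frame is assigned to its $\tau$ nearest codewords (Euclidean distance), and the frame codes are mean-pooled into one song-level vector. Giving each selected codeword weight 1/$\tau$, using mean pooling, and the function name `top_tau_vq` are assumptions for illustration; the paper's exact weighting and pooling may differ.

```python
import numpy as np


def top_tau_vq(frames: np.ndarray, codebook: np.ndarray, tau: int) -> np.ndarray:
    """Hypothetical top-tau VQ encoder with mean pooling.
    frames: T x d local features; codebook: k x d codewords.
    Each frame gets weight 1/tau on its tau nearest codewords; the T frame
    codes are then averaged into a single k-dimensional song vector."""
    frames = np.asarray(frames, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    # Squared Euclidean distance from every frame to every codeword: T x k.
    dists = ((frames[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    # Indices of the tau nearest codewords for each frame: T x tau.
    nearest = np.argsort(dists, axis=1)[:, :tau]
    codes = np.zeros(dists.shape)
    np.put_along_axis(codes, nearest, 1.0 / tau, axis=1)
    # Mean pooling over time yields a histogram-like vector summing to 1.
    return codes.mean(axis=0)
```

Because each frame code is sparse and computing it needs only distances to the codebook, this kind of encoding is much cheaper than solving a LASSO problem per frame, which is consistent with the abstract's recommendation.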