Katherine Ellis

Recognizing Detailed Human Context In-the-Wild from Smartphones and Smartwatches

Sep 30, 2017

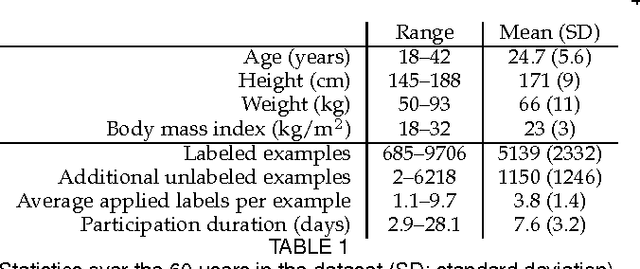

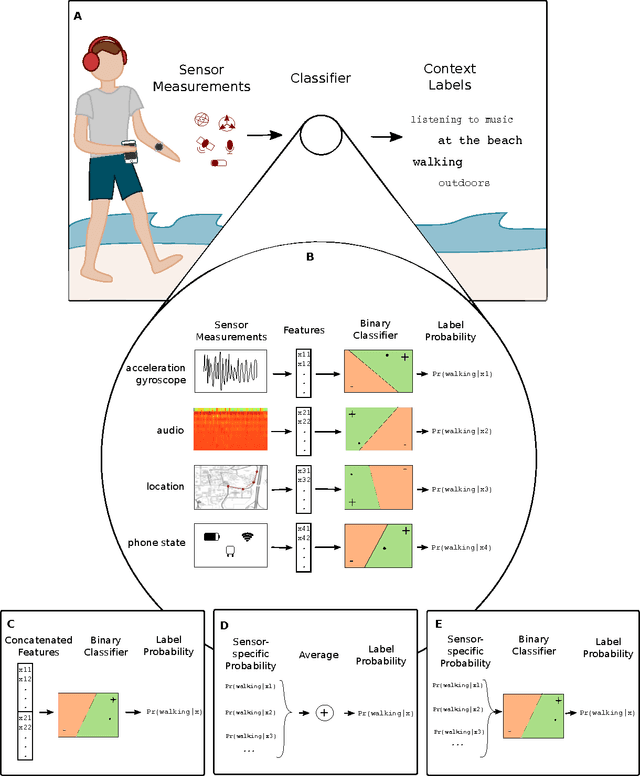

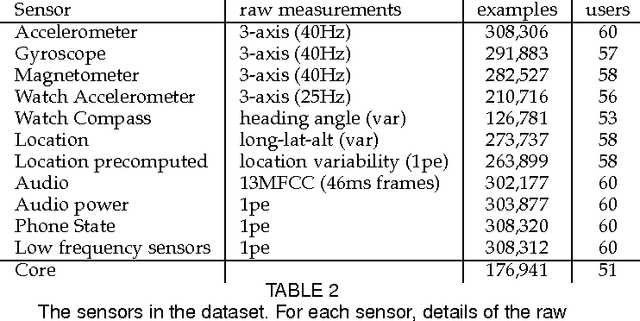

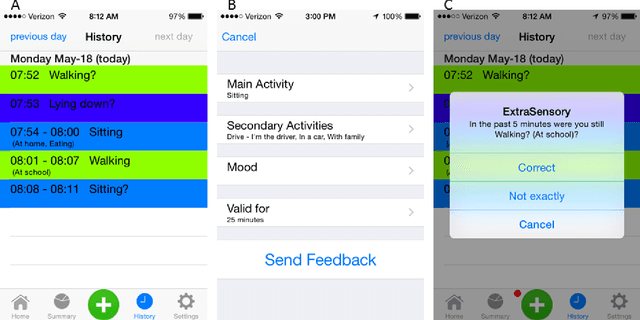

Abstract: The ability to automatically recognize a person's behavioral context can contribute to health monitoring, aging care and many other domains. Validating context recognition in-the-wild is crucial to promote practical applications that work in real-life settings. We collected over 300k minutes of sensor data with context labels from 60 subjects. Unlike previous studies, our subjects used their own personal phones, in any way that was convenient to them, and went about their routines in their natural environments. Unscripted behavior and unconstrained phone usage resulted in situations that are harder to recognize. We demonstrate that fusing multi-modal sensors is important for resolving such cases. We present a baseline system, and encourage researchers to use our public dataset to compare methods and improve context recognition in-the-wild.
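The abstract does not say how the sensors are fused. As a minimal sketch, assuming two generic strategies (not necessarily the authors' exact method), the code below contrasts early fusion, which concatenates per-sensor feature vectors before a single classifier, with late fusion, which averages the probabilities that separate per-sensor classifiers assign to one context label. It also assumes that a sensor may be unavailable for a given minute, represented here as `None`. The function names, the sensor names, and the zero-filling of missing features are hypothetical choices made for illustration.

```python
from typing import Dict, List, Optional, Sequence


def early_fusion_features(
    sensor_features: Dict[str, Optional[Sequence[float]]],
    dims: Dict[str, int],
) -> List[float]:
    """Concatenate per-sensor feature vectors into one vector (early fusion).

    Sensors are concatenated in sorted-name order so every example has the
    same layout. A missing sensor (None or absent) is zero-filled; this
    imputation is an assumption for illustration only.
    """
    fused: List[float] = []
    for name in sorted(dims):
        feats = sensor_features.get(name)
        if feats is None:
            fused.extend([0.0] * dims[name])  # hypothetical zero imputation
        else:
            if len(feats) != dims[name]:
                raise ValueError(f"{name}: expected {dims[name]} features, got {len(feats)}")
            fused.extend(float(x) for x in feats)
    return fused


def late_fusion_average(sensor_probs: Dict[str, Optional[float]]) -> Optional[float]:
    """Average per-sensor probabilities for one context label (late fusion).

    Sensors with no prediction (None) are skipped; if no sensor is
    available, the function abstains by returning None.
    """
    available = [p for p in sensor_probs.values() if p is not None]
    if not available:
        return None
    return sum(available) / len(available)


if __name__ == "__main__":
    # Hypothetical minute where the audio sensor produced no data.
    print(early_fusion_features({"acc": [1.0, 2.0], "audio": None}, {"acc": 2, "audio": 3}))
    print(late_fusion_average({"acc": 0.75, "gyro": 0.5, "audio": None}))
```

In this sketch, early fusion lets one classifier learn interactions between modalities but needs a fixed-length input, hence the imputation step. Late fusion tolerates missing sensors more naturally, since each classifier only votes when its sensor is present.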

* This paper was accepted and is to appear in IEEE Pervasive Computing, vol. 16, no. 4, October-December 2017, pp. 62-74
