Yohan Chatelain

Department of Computer Science and Software Engineering, Concordia University, Montréal, QC, Canada

Conservative & Aggressive NaNs Accelerate U-Nets for Neuroimaging

Jan 23, 2026

Abstract: Deep learning models for neuroimaging increasingly rely on large architectures, making efficiency a persistent concern despite advances in hardware. Through an analysis of numerical uncertainty of convolutional neural networks (CNNs), we observe that many operations are applied to values dominated by numerical noise and have negligible influence on model outputs. In some models, up to two-thirds of convolution operations appear redundant. We introduce Conservative & Aggressive NaNs, two novel variants of max pooling and unpooling that identify numerically unstable voxels and replace them with NaNs, allowing subsequent layers to skip computations on irrelevant data. Both methods are implemented within PyTorch and require no architectural changes. We evaluate these approaches on four CNN models spanning neuroimaging and image classification tasks. For inputs containing at least 50% NaNs, we observe consistent runtime improvements; for data with more than two-thirds NaNs (common in several neuroimaging settings) we achieve an average inference speedup of 1.67x. Conservative NaNs reduces convolution operations by an average of 30% across models and datasets, with no measurable performance degradation, and can skip up to 64.64% of convolutions in specific layers. Aggressive NaNs can skip up to 69.30% of convolutions but may occasionally affect performance. Overall, these methods demonstrate that numerical uncertainty can be exploited to reduce redundant computation and improve inference efficiency in CNNs.
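The idea of skipping work on NaN-marked values can be illustrated with a toy 1D convolution. This is only a sketch and not the authors' PyTorch implementation: the function name `nan_skipping_conv1d`, the `max_nan_fraction` threshold, and the choice to ignore NaNs inside windows that are still computed are all assumptions made for illustration.

```python
import math

def nan_skipping_conv1d(signal, kernel, max_nan_fraction=0.5):
    """Toy 1D 'valid' convolution that skips windows dominated by NaNs.

    Hypothetical illustration: a window whose NaN fraction exceeds
    max_nan_fraction is not computed; its output is set to NaN.
    """
    k = len(kernel)
    out, skipped = [], 0
    for i in range(len(signal) - k + 1):
        window = signal[i:i + k]
        nan_count = sum(math.isnan(v) for v in window)
        if nan_count / k > max_nan_fraction:
            out.append(math.nan)  # skip the multiply-accumulate entirely
            skipped += 1
            continue
        # Assumption: remaining NaNs inside a computed window are ignored.
        out.append(sum(w * v for w, v in zip(kernel, window) if not math.isnan(v)))
    return out, skipped

nan = math.nan
result, skipped = nan_skipping_conv1d([1.0, 2.0, nan, nan, nan, 3.0], [1.0, 1.0, 1.0])
print(result, skipped)
```

In this example three of the four windows contain more than 50% NaNs, so only one multiply-accumulate is actually performed.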

Uncertain but Useful: Leveraging CNN Variability into Data Augmentation

Sep 05, 2025

Abstract: Deep learning (DL) is rapidly advancing neuroimaging by achieving state-of-the-art performance with reduced computation times. Yet the numerical stability of DL models, particularly during training, remains underexplored. While inference with DL is relatively stable, training introduces additional variability primarily through iterative stochastic optimization. We investigate this training-time variability using FastSurfer, a CNN-based whole-brain segmentation pipeline. Controlled perturbations are introduced via floating point perturbations and random seeds. We find that: (i) FastSurfer exhibits higher variability compared to that of a traditional neuroimaging pipeline, suggesting that DL inherits and is particularly susceptible to sources of instability present in its predecessors; (ii) ensembles generated with perturbations achieve performance similar to an unperturbed baseline; and (iii) variability effectively produces ensembles of numerical model families that can be repurposed for downstream applications. As a proof of concept, we demonstrate that numerical ensembles can be used as a data augmentation strategy for brain age regression. These findings position training-time variability not only as a reproducibility concern but also as a resource that can be harnessed to improve robustness and enable new applications in neuroimaging.

Numerical Uncertainty in Linear Registration: An Experimental Study

Aug 01, 2025

Abstract: While linear registration is a critical step in MRI preprocessing pipelines, its numerical uncertainty is understudied. Using Monte-Carlo Arithmetic (MCA) simulations, we assessed the most commonly used linear registration tools within major software packages (SPM, FSL, and ANTs) across multiple image similarity measures, two brain templates, and both healthy control (HC, n=50) and Parkinson's Disease (PD, n=50) cohorts. Our findings highlight the influence of linear registration tools and similarity measures on numerical stability. Among the evaluated tools and with default similarity measures, SPM exhibited the highest stability. FSL and ANTs showed greater and similar ranges of variability, with ANTs demonstrating particular sensitivity to numerical perturbations that occasionally led to registration failure. Furthermore, no significant differences were observed between healthy and PD cohorts, suggesting that numerical stability analyses obtained with healthy subjects may generalise to clinical populations. Finally, we also demonstrated how numerical uncertainty measures may support automated quality control (QC) of linear registration results. Overall, our experimental results characterize the numerical stability of linear registration experimentally and can serve as a basis for future uncertainty analyses.

Numerical Uncertainty of Convolutional Neural Networks Inference for Structural Brain MRI Analysis

Aug 03, 2023

Abstract: This paper investigates the numerical uncertainty of Convolutional Neural Networks (CNNs) inference for structural brain MRI analysis. It applies Random Rounding, a stochastic arithmetic technique, to CNN models employed in non-linear registration (SynthMorph) and whole-brain segmentation (FastSurfer), and compares the resulting numerical uncertainty to the one measured in a reference image-processing pipeline (FreeSurfer recon-all). Results obtained on 32 representative subjects show that CNN predictions are substantially more accurate numerically than traditional image-processing results (non-linear registration: 19 vs 13 significant bits on average; whole-brain segmentation: 0.99 vs 0.92 Sørensen-Dice score on average), which suggests a better reproducibility of CNN results across execution environments.
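A count of significant bits such as the one reported above can be estimated from repeated stochastic runs. The sketch below uses a common estimator, `-log2(sigma / |mu|)`, computed over the samples of one output value; whether the paper uses exactly this estimator is an assumption, and the function name and the `max_bits` cap are hypothetical.

```python
import math
import statistics

def significant_bits(samples, max_bits=24):
    """Estimate significant bits from repeated perturbed results.

    Assumed estimator: -log2(sigma / |mu|), clamped to [0, max_bits].
    max_bits=24 corresponds to the single-precision significand.
    """
    mu = statistics.mean(samples)
    sigma = statistics.stdev(samples)
    if sigma == 0.0:
        return float(max_bits)  # all runs agree exactly
    if mu == 0.0:
        return 0.0              # relative error is undefined; report no bits
    bits = -math.log2(sigma / abs(mu))
    return max(0.0, min(float(max_bits), bits))

# Three runs that differ at the level of 2**-10 relative to a mean of 1.0.
runs = [1.0, 1.0 + 2**-10, 1.0 - 2**-10]
print(significant_bits(runs))  # 10.0
```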

Numerical Stability of DeepGOPlus Inference

Dec 13, 2022

Abstract: Convolutional neural networks (CNNs) are currently among the most widely-used neural networks available and achieve state-of-the-art performance for many problems. While originally applied to computer vision tasks, CNNs work well with any data with a spatial relationship, besides images, and have been applied to different fields. However, recent works have highlighted how CNNs, like other deep learning models, are sensitive to noise injection, which can jeopardise their performance. This paper quantifies the numerical uncertainty of the floating point arithmetic inaccuracies of the inference stage of DeepGOPlus, a CNN that predicts protein function, in order to determine its numerical stability. In addition, this paper investigates the possibility of using reduced-precision floating point formats for DeepGOPlus inference to reduce memory consumption and latency. This is achieved with Monte Carlo Arithmetic, a technique that experimentally quantifies floating point operation errors, and VPREC, a tool that emulates results with customizable floating point precision formats. Focus is placed on the inference stage as it is the main deliverable of the DeepGOPlus model that will be used across environments and is therefore most likely to be subjected to the greatest amount of noise. Furthermore, studies have shown that the inference stage is the part of the model that is most amenable to reduced precision. All in all, it has been found that the numerical uncertainty of the DeepGOPlus CNN is very low at its current numerical precision format, but the model cannot currently be reduced to a lower precision that might render it more lightweight.

Data Augmentation Through Monte Carlo Arithmetic Leads to More Generalizable Classification in Connectomics

Sep 20, 2021

Abstract: Machine learning models are commonly applied to human brain imaging datasets in an effort to associate function or structure with behaviour, health, or other individual phenotypes. Such models often rely on low-dimensional maps generated by complex processing pipelines. However, the numerical instabilities inherent to pipelines limit the fidelity of these maps and introduce computational bias. Monte Carlo Arithmetic, a technique for introducing controlled amounts of numerical noise, was used to perturb a structural connectome estimation pipeline, ultimately producing a range of plausible networks for each sample. The variability in the perturbed networks was captured in an augmented dataset, which was then used for an age classification task. We found that resampling brain networks across a series of such numerically perturbed outcomes led to improved performance in all tested classifiers, preprocessing strategies, and dimensionality reduction techniques. Importantly, we find that this benefit does not hinge on a large number of perturbations, suggesting that even minimally perturbing a dataset adds meaningful variance which can be captured in the subsequently designed models.
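The kind of controlled noise that Monte Carlo Arithmetic introduces can be sketched in a few lines. The example assumes the usual MCA perturbation model, `x + 2**(e - t) * xi` with `xi` uniform in (-0.5, 0.5), `e` the binary exponent of `x`, and `t` a virtual precision in bits. The helper names `mca_perturb` and `noisy_sum` are hypothetical, and the studies listed here instrument whole pipelines rather than a hand-written loop like this one.

```python
import math
import random

def mca_perturb(x, t=24, rng=random):
    """Add uniform noise at the t-th significant bit of x (assumed MCA model)."""
    if x == 0.0 or not math.isfinite(x):
        return x
    _, e = math.frexp(x)            # x = m * 2**e with 0.5 <= |m| < 1
    xi = rng.uniform(-0.5, 0.5)
    return x + math.ldexp(xi, e - t)  # xi * 2**(e - t)

def noisy_sum(values, t=24, rng=random):
    """Sum values, perturbing the result of every addition."""
    total = 0.0
    for v in values:
        total = mca_perturb(total + v, t, rng)
    return total

# Repeating the same computation yields a small cloud of plausible results.
rng = random.Random(0)
results = [noisy_sum([0.1] * 100, t=24, rng=rng) for _ in range(20)]
print(min(results), max(results))
```

Each repetition plays the role of one perturbed sample; collecting them gives the range of plausible outcomes that an augmented dataset would draw from.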

Accurate simulation of operating system updates in neuroimaging using Monte-Carlo arithmetic

Aug 06, 2021

Abstract: Operating system (OS) updates introduce numerical perturbations that impact the reproducibility of computational pipelines. In neuroimaging, this has important practical implications on the validity of computational results, particularly when obtained in systems such as high-performance computing clusters where the experimenter does not control software updates. We present a framework to reproduce the variability induced by OS updates in controlled conditions. We hypothesize that OS updates impact computational pipelines mainly through numerical perturbations originating in mathematical libraries, which we simulate using Monte-Carlo arithmetic in a framework called "fuzzy libmath" (FL). We applied this methodology to pre-processing pipelines of the Human Connectome Project, a flagship open-data project in neuroimaging. We found that FL-perturbed pipelines accurately reproduce the variability induced by OS updates and that this similarity is only mildly dependent on simulation parameters. Importantly, we also found between-subject differences were preserved in both cases, though the between-run variability was of comparable magnitude for both FL and OS perturbations. We found the numerical precision in the HCP pre-processed images to be relatively low, with fewer than 8 significant bits among the 24 available, which motivates further investigation of the numerical stability of components in the tested pipeline. Overall, our results establish that FL accurately simulates result variability due to OS updates, and is a practical framework to quantify numerical uncertainty in neuroimaging.

Reducing numerical precision preserves classification accuracy in Mondrian Forests

Jun 28, 2021

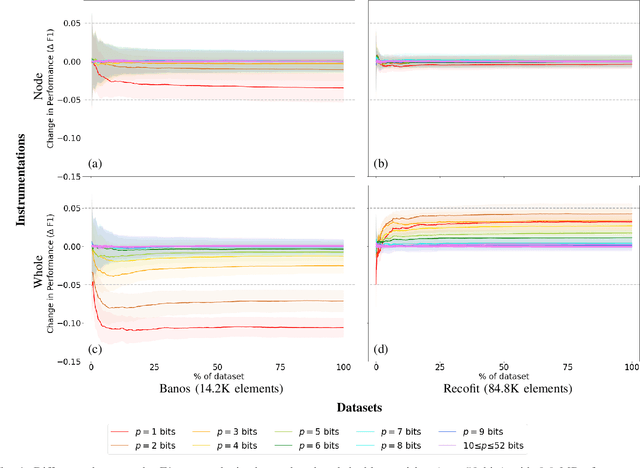

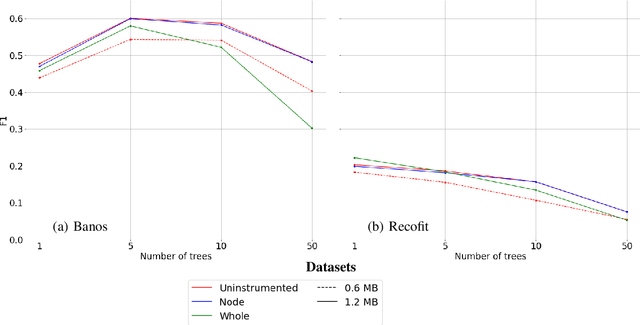

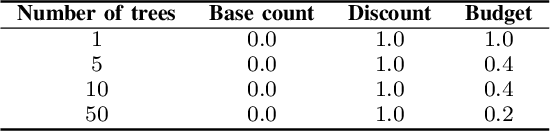

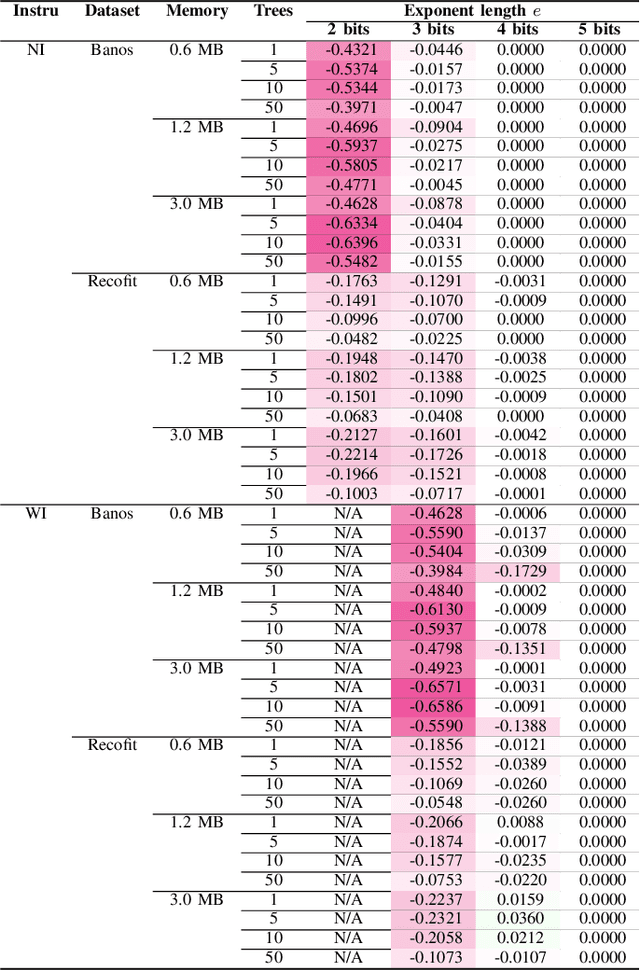

Abstract: Mondrian Forests are a powerful data stream classification method, but their large memory footprint makes them ill-suited for low-resource platforms such as connected objects. We explored using reduced-precision floating-point representations to lower memory consumption and evaluated its effect on classification performance. We applied the Mondrian Forest implementation provided by OrpailleCC, a C++ collection of data stream algorithms, to two canonical datasets in human activity recognition: Recofit and Banos et al. Results show that the precision of floating-point values used by tree nodes can be reduced from 64 bits to 8 bits with no significant difference in F1 score. In some cases, reduced precision was shown to improve classification performance, presumably due to its regularization effect. We conclude that numerical precision is a relevant hyperparameter in the Mondrian Forest, and that commonly-used double precision values may not be necessary for optimal performance. Future work will evaluate the generalizability of these findings to other data stream classifiers.
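The effect of storing values at lower precision can be emulated by rounding a double to a smaller number of significant bits. The sketch below is illustrative only: `round_to_precision` is a hypothetical helper, it limits the significand but not the exponent range, and it is not the OrpailleCC implementation or the tooling used in the paper.

```python
import math

def round_to_precision(x, significant_bits):
    """Round x to the nearest value with the given number of significant bits.

    Only the significand is reduced; the exponent range of a real
    reduced-precision format is not modelled here (an assumption).
    """
    if x == 0.0 or not math.isfinite(x):
        return x
    m, e = math.frexp(x)            # x = m * 2**e with 0.5 <= |m| < 1
    scale = 1 << significant_bits
    return math.ldexp(round(m * scale) / scale, e)

print(round_to_precision(0.1, 4))   # 0.1015625
print(round_to_precision(0.1, 53))  # 0.1 (unchanged at full double precision)
```

Applying such a function to the values stored in tree nodes, then re-running classification, is one way to explore precision as a hyperparameter.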
