Yizhi Mao

A Batched Multi-Armed Bandit Approach to News Headline Testing

Aug 25, 2019

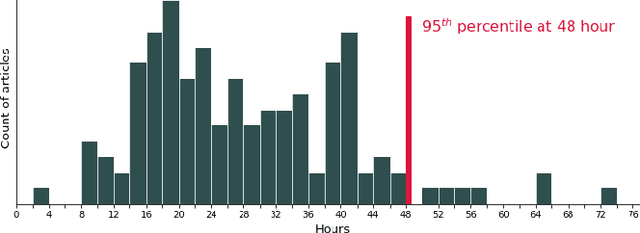

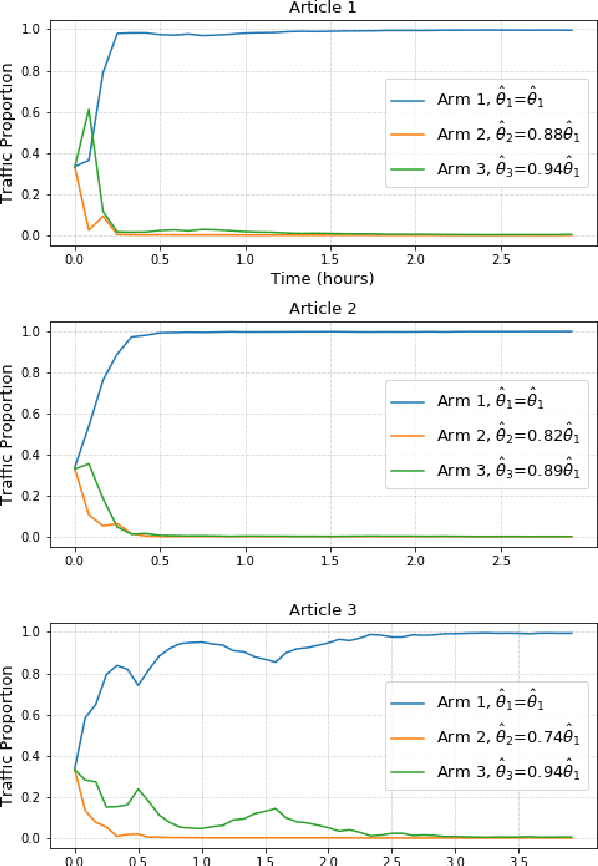

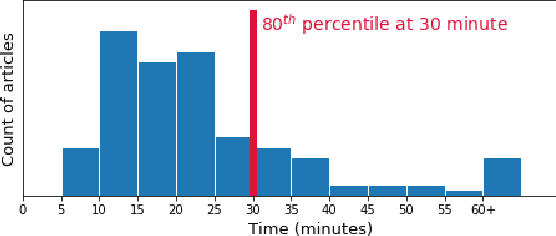

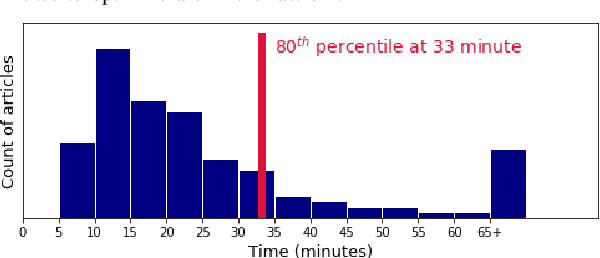

Abstract: Optimizing news headlines is important for publishers and media sites. A compelling headline increases readership, user engagement, and social shares. At Yahoo Front Page, headline testing is carried out using a test-rollout strategy: we first allocate an equal proportion of traffic to each headline variation for a defined testing period, and then shift all future traffic to the best-performing variation. In this paper, we introduce a multi-armed bandit (MAB) approach with batched Thompson Sampling (bTS) to dynamically test headlines for news articles. This method gradually shifts traffic towards the optimal headline while testing is still in progress. We evaluate the bTS method on empirical impression and click data and on simulated user responses. The results show that the bTS method is robust, converges quickly and accurately to the optimal headline, and outperforms the test-rollout strategy by 3.69% in terms of clicks.
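
The abstract does not spell out how traffic is split within a batch, so the following is only a minimal sketch of one common way to batch Thompson Sampling, not the paper's exact procedure. It assumes a Beta(1, 1) prior on each headline's click-through rate (CTR), estimates by Monte Carlo the probability that each headline is the best, and splits the next batch of impressions in proportion to those probabilities. The function names, the prior, `n_samples`, and the true CTRs in `simulate` are hypothetical choices made for illustration.

```python
import random


def batched_thompson_allocation(clicks, impressions, batch_size, n_samples=1000, rng=None):
    """Split the next batch of impressions across headline variations.

    Assumes a Beta(1, 1) prior on each headline's CTR, so the posterior is
    Beta(1 + clicks, 1 + impressions - clicks). Each headline's share is its
    Monte Carlo estimate of P(this headline has the highest CTR).
    """
    if rng is None:
        rng = random.Random()
    k = len(clicks)
    wins = [0] * k
    for _ in range(n_samples):
        # One Thompson draw per headline; the largest draw "wins" this round.
        draws = [rng.betavariate(1 + c, 1 + n - c) for c, n in zip(clicks, impressions)]
        wins[max(range(k), key=draws.__getitem__)] += 1

    # Integer largest-remainder rounding so the batch is split exactly.
    alloc = [w * batch_size // n_samples for w in wins]
    leftover = batch_size - sum(alloc)
    order = sorted(range(k), key=lambda i: wins[i] * batch_size % n_samples, reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc


def simulate(true_ctrs, n_batches=20, batch_size=5000, seed=0):
    """Run bTS against hypothetical true CTRs and return cumulative counts."""
    rng = random.Random(seed)
    k = len(true_ctrs)
    clicks, impressions = [0] * k, [0] * k
    for _ in range(n_batches):
        # Posteriors are only updated between batches, never within one.
        alloc = batched_thompson_allocation(clicks, impressions, batch_size, rng=rng)
        for i, n in enumerate(alloc):
            impressions[i] += n
            # Simulated user response: each impression is clicked with prob true_ctrs[i].
            clicks[i] += sum(rng.random() < true_ctrs[i] for _ in range(n))
    return clicks, impressions


if __name__ == "__main__":
    clicks, impressions = simulate([0.040, 0.045, 0.050])
    print("impressions per headline:", impressions)
    print("clicks per headline:", clicks)
```

With no data the first batch is split roughly evenly; as clicks accumulate, the posteriors separate and later batches go mostly to the headline with the highest CTR. This differs from test-rollout, which keeps an equal split for the whole testing period.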

Predicting Different Types of Conversions with Multi-Task Learning in Online Advertising

Jul 24, 2019

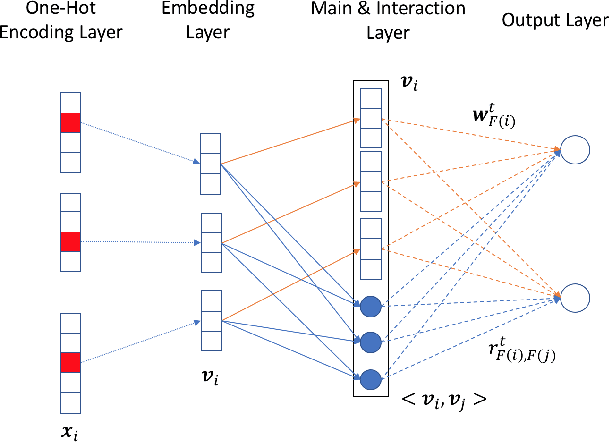

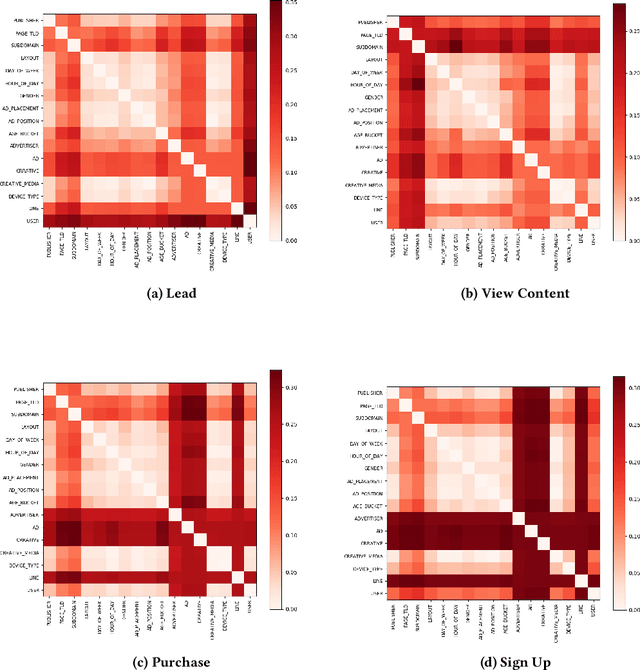

Abstract: Conversion prediction plays an important role in online advertising, since Cost-Per-Action (CPA) has become one of the primary campaign performance objectives in the industry. Unlike clicks, conversions come in different types, and each type may be driven by different decisive factors. In this paper, we formulate conversion prediction as a multi-task learning problem so that the prediction models for different conversion types can be learned jointly. These models share feature representations but keep their own task-specific parameters, which allows information to be shared across all tasks. We then propose the Multi-Task Field-weighted Factorization Machine (MT-FwFM) to solve these tasks jointly. Our experimental results show that, compared with two state-of-the-art models, MT-FwFM improves AUC by 0.74% and 0.84% on two conversion types, and improves the weighted AUC across all conversion types by 0.50%.

* SIGKDD
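
The abstract only states that the tasks share feature representations while keeping task-specific parameters. The sketch below illustrates that split on a field-weighted FM, whose pairwise term scales each embedding dot product ⟨v_i, v_j⟩ by a weight r for the pair of fields the two features belong to. Which parameters are shared is an assumption here: the embeddings are shared across conversion types, and the bias, linear weights, and field-pair weights are per task. The class name, initialization, and the one-feature-per-field input format are hypothetical, and only the forward pass is shown (no training).

```python
import math
import random


class MTFwFM:
    """Forward pass of a hypothetical multi-task FwFM (inference only).

    Assumptions: one active categorical feature per field, so an example is a
    dict {field_index: feature_id}; embeddings are shared by all tasks, while
    the bias, linear weights and field-pair weights are task-specific.
    """

    def __init__(self, n_features, n_fields, n_tasks, dim=4, seed=0):
        rng = random.Random(seed)
        # Shared across tasks: one embedding vector per feature.
        self.emb = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_features)]
        # Task-specific parameters, initialized to neutral values here.
        self.bias = [0.0] * n_tasks
        self.linear = [[0.0] * n_features for _ in range(n_tasks)]
        self.pair_w = [[[1.0] * n_fields for _ in range(n_fields)] for _ in range(n_tasks)]

    def logit(self, example, task):
        feats = sorted(example.items())  # (field, feature_id) pairs, ordered by field
        z = self.bias[task] + sum(self.linear[task][f] for _, f in feats)
        for a in range(len(feats)):
            for b in range(a + 1, len(feats)):
                (fi, i), (fj, j) = feats[a], feats[b]
                dot = sum(p * q for p, q in zip(self.emb[i], self.emb[j]))
                # FwFM interaction: <v_i, v_j> scaled by this task's field-pair weight.
                z += dot * self.pair_w[task][fi][fj]
        return z

    def predict(self, example, task):
        """Predicted conversion probability for one conversion type (task)."""
        return 1.0 / (1.0 + math.exp(-self.logit(example, task)))


if __name__ == "__main__":
    model = MTFwFM(n_features=10, n_fields=3, n_tasks=2)
    x = {0: 1, 1: 4, 2: 7}  # hypothetical request: one feature id per field
    print(model.predict(x, task=0), model.predict(x, task=1))
```

Because the embeddings are shared, a gradient step from any conversion type would update the same feature vectors, while changing one task's field-pair weights leaves the other tasks' predictions untouched.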
