Yizheng Zhu

Secure and Verifiable Data Collaboration with Low-Cost Zero-Knowledge Proofs

Nov 26, 2023

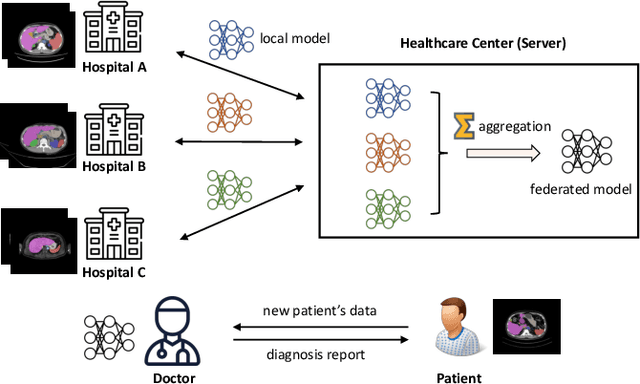

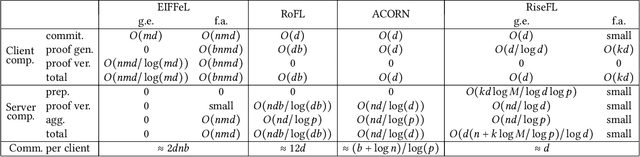

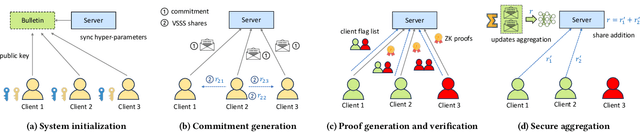

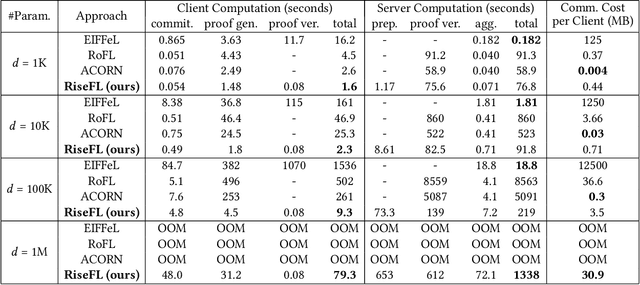

Abstract: Organizations are increasingly recognizing the value of data collaboration for data analytics purposes. Yet, stringent data protection laws prohibit the direct exchange of raw data. To facilitate data collaboration, federated learning (FL) emerges as a viable solution: it enables multiple clients to collaboratively train a machine learning (ML) model under the supervision of a central server while ensuring the confidentiality of their raw data. However, existing studies have unveiled two main risks: (i) the potential for the server to infer sensitive information from the clients' uploaded updates (i.e., model gradients), compromising client input privacy, and (ii) the risk of malicious clients uploading malformed updates to poison the FL model, compromising input integrity. Recent works utilize secure aggregation with zero-knowledge proofs (ZKP) to guarantee input privacy and integrity in FL. Nevertheless, they suffer from extremely low efficiency and are thus impractical for real deployment. In this paper, we propose RiseFL, a novel and highly efficient solution for secure and verifiable data collaboration that ensures input privacy and integrity simultaneously. Firstly, we devise a probabilistic integrity check method that significantly reduces the cost of ZKP generation and verification. Secondly, we design a hybrid commitment scheme to satisfy Byzantine robustness with improved performance. Thirdly, we theoretically prove the security guarantee of the proposed solution. Extensive experiments on synthetic and real-world datasets suggest that our solution is effective and highly efficient in both client computation and communication. For instance, RiseFL is up to 28x, 53x and 164x faster than three state-of-the-art baselines, ACORN, RoFL and EIFFeL, in client computation.
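The abstract refers to secure aggregation as the building block that hides individual client updates from the server. The following is a minimal sketch of that general idea using pairwise additive masks over a finite field; it is not the RiseFL protocol and omits zero-knowledge proofs, commitments, key agreement, and dropout handling. The names `MODULUS`, `pairwise_masks`, `mask_update`, and `aggregate` are hypothetical, and a single shared random generator stands in for the pairwise secrets that real clients would derive from key exchange.

```python
import random

# Assumed field size for illustration only; real protocols choose this carefully.
MODULUS = 2**31 - 1


def pairwise_masks(num_clients, dim, rng):
    """Build one mask vector per client such that all masks sum to zero mod MODULUS.

    For each pair (i, j) with i < j, a shared random vector is added to
    client i's mask and subtracted from client j's mask.
    """
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks


def mask_update(update, mask):
    """Client side: hide an integer-encoded update behind its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates):
    """Server side: sum masked updates; the pairwise masks cancel in the sum."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


if __name__ == "__main__":
    rng = random.Random(0)
    updates = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    masks = pairwise_masks(len(updates), 3, rng)
    masked = [mask_update(u, m) for u, m in zip(updates, masks)]
    print(aggregate(masked))
```

The server only ever sees masked vectors, yet their sum equals the sum of the true updates. Nothing in this sketch stops a client from submitting a malformed update, which is the integrity gap the abstract says zero-knowledge proofs are meant to close.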

Skellam Mixture Mechanism: a Novel Approach to Federated Learning with Differential Privacy

Dec 08, 2022

Abstract: Deep neural networks have strong capabilities of memorizing the underlying training data, which can be a serious privacy concern. An effective solution to this problem is to train models with differential privacy (DP), which provides rigorous privacy guarantees by injecting random noise into the gradients. This paper focuses on the scenario where sensitive data are distributed among multiple participants, who jointly train a model through federated learning (FL), using both secure multiparty computation (MPC) to ensure the confidentiality of each gradient update, and differential privacy to avoid data leakage in the resulting model. A major challenge in this setting is that common mechanisms for enforcing DP in deep learning, which inject real-valued noise, are fundamentally incompatible with MPC, which exchanges finite-field integers among the participants. Consequently, most existing DP mechanisms require rather high noise levels, leading to poor model utility. Motivated by this, we propose the Skellam mixture mechanism (SMM), an approach to enforce DP on models built via FL. Compared to existing methods, SMM eliminates the assumption that the input gradients must be integer-valued, and thus reduces the amount of noise injected to preserve DP. Further, SMM allows tight privacy accounting due to the nice composition and sub-sampling properties of the Skellam distribution, which are key to accurate deep learning with DP. The theoretical analysis of SMM is highly non-trivial, especially considering (i) the complicated math of differentially private deep learning in general and (ii) the fact that the mixture of two Skellam distributions is rather complex and, to our knowledge, has not been studied in the DP literature. Extensive experiments in various practical settings demonstrate that SMM consistently and significantly outperforms existing solutions in terms of the utility of the resulting model.
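To illustrate why Skellam noise fits the finite-field setting the abstract describes, here is a minimal sketch of plain integer-valued Skellam noise, sampled as the difference of two independent Poisson draws and added to an integer value modulo a field size. This is only the basic building block, not the Skellam mixture mechanism itself, and it carries no privacy accounting. All function names, the parameter `mu`, and the modulus are assumptions made for illustration; the Poisson sampler uses Knuth's multiplication method, which is only practical for small `mu`.

```python
import math
import random


def sample_poisson(mu, rng):
    """Draw from Poisson(mu) with Knuth's multiplication method (small mu only)."""
    threshold = math.exp(-mu)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def sample_skellam(mu, rng):
    """Symmetric Skellam noise: difference of two independent Poisson(mu) draws.

    The result is an integer with mean 0 and variance 2 * mu.
    """
    return sample_poisson(mu, rng) - sample_poisson(mu, rng)


def noisy_field_element(value, mu, modulus, rng):
    """Add Skellam noise to an integer and reduce it into the range [0, modulus)."""
    return (value + sample_skellam(mu, rng)) % modulus


def decode(element, modulus):
    """Map a field element back to a signed integer centered on zero."""
    return element - modulus if element > modulus // 2 else element


if __name__ == "__main__":
    rng = random.Random(42)
    modulus = 2**31 - 1  # assumed field size, for illustration only
    encoded = noisy_field_element(-3, 5.0, modulus, rng)
    print(decode(encoded, modulus))
```

Because the noise is already an integer, it can be added before values are exchanged as field elements, whereas real-valued noise such as Gaussian noise would first have to be discretized.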
