Yihua Chen

Noisy Ostracods: A Fine-Grained, Imbalanced Real-World Dataset for Benchmarking Robust Machine Learning and Label Correction Methods

Dec 03, 2024

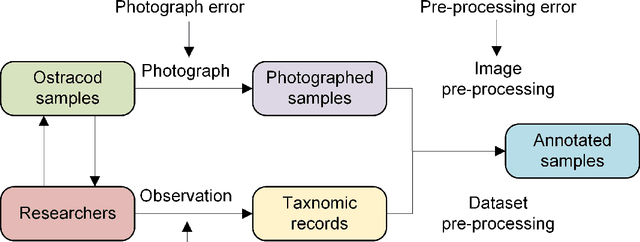

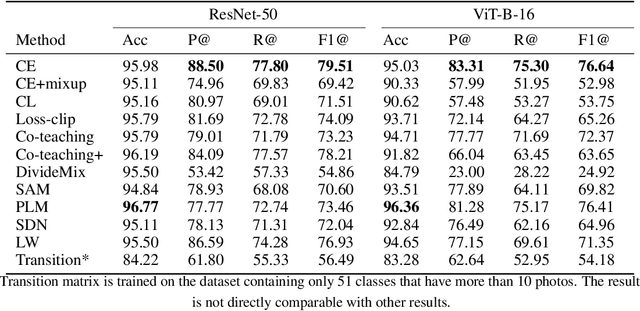

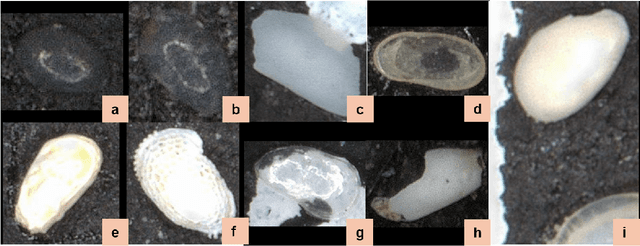

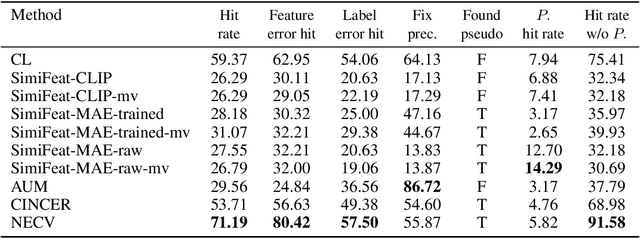

Abstract:We present the Noisy Ostracods, a noisy dataset for genus and species classification of crustacean ostracods with specialists' annotations. Over the 71466 specimens collected, 5.58% of them are estimated to be noisy (possibly problematic) at genus level. The dataset is created to addressing a real-world challenge: creating a clean fine-grained taxonomy dataset. The Noisy Ostracods dataset has diverse noises from multiple sources. Firstly, the noise is open-set, including new classes discovered during curation that were not part of the original annotation. The dataset has pseudo-classes, where annotators misclassified samples that should belong to an existing class into a new pseudo-class. The Noisy Ostracods dataset is highly imbalanced with a imbalance factor $\rho$ = 22429. This presents a unique challenge for robust machine learning methods, as existing approaches have not been extensively evaluated on fine-grained classification tasks with such diverse real-world noise. Initial experiments using current robust learning techniques have not yielded significant performance improvements on the Noisy Ostracods dataset compared to cross-entropy training on the raw, noisy data. On the other hand, noise detection methods have underperformed in error hit rate compared to naive cross-validation ensembling for identifying problematic labels. These findings suggest that the fine-grained, imbalanced nature, and complex noise characteristics of the dataset present considerable challenges for existing noise-robust algorithms. By openly releasing the Noisy Ostracods dataset, our goal is to encourage further research into the development of noise-resilient machine learning methods capable of effectively handling diverse, real-world noise in fine-grained classification tasks. The dataset, along with its evaluation protocols, can be accessed at https://github.com/H-Jamieu/Noisy_ostracods.

DSelect-k: Differentiable Selection in the Mixture of Experts with Applications to Multi-Task Learning

Jun 09, 2021

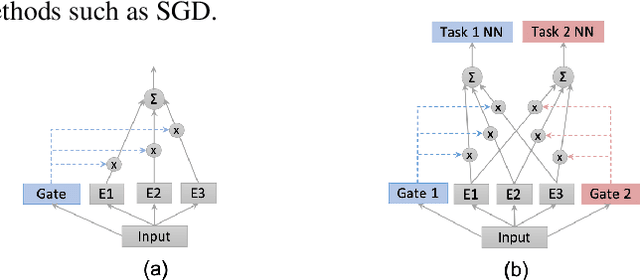

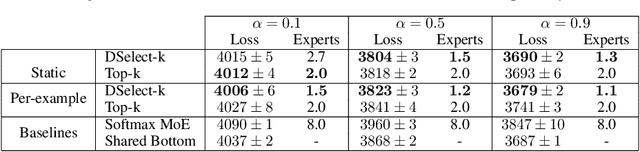

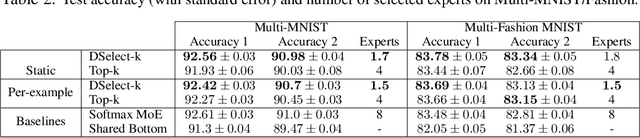

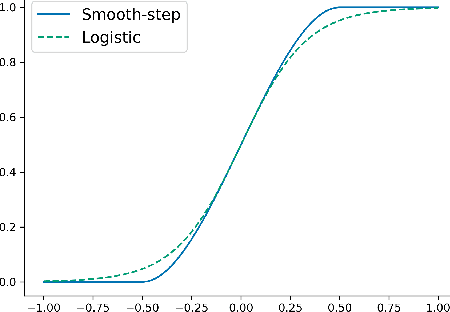

Abstract:The Mixture-of-experts (MoE) architecture is showing promising results in multi-task learning (MTL) and in scaling high-capacity neural networks. State-of-the-art MoE models use a trainable sparse gate to select a subset of the experts for each input example. While conceptually appealing, existing sparse gates, such as Top-k, are not smooth. The lack of smoothness can lead to convergence and statistical performance issues when training with gradient-based methods. In this paper, we develop DSelect-k: the first, continuously differentiable and sparse gate for MoE, based on a novel binary encoding formulation. Our gate can be trained using first-order methods, such as stochastic gradient descent, and offers explicit control over the number of experts to select. We demonstrate the effectiveness of DSelect-k in the context of MTL, on both synthetic and real datasets with up to 128 tasks. Our experiments indicate that MoE models based on DSelect-k can achieve statistically significant improvements in predictive and expert selection performance. Notably, on a real-world large-scale recommender system, DSelect-k achieves over 22% average improvement in predictive performance compared to the Top-k gate. We provide an open-source TensorFlow implementation of our gate.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge