Yichao Xu

Integrated Communication and Remote Sensing in LEO Satellite Systems: Protocol, Architecture and Prototype

Aug 14, 2025

Abstract:In this paper, we explore the integration of communication and synthetic aperture radar (SAR)-based remote sensing in low Earth orbit (LEO) satellite systems to provide real-time SAR imaging and information transmission. Considering the high-mobility characteristics of satellite channels and limited processing capabilities of satellite payloads, we propose an integrated communication and remote sensing architecture based on an orthogonal delay-Doppler division multiplexing (ODDM) signal waveform. Both communication and SAR imaging functionalities are achieved with an integrated transceiver onboard the LEO satellite, utilizing the same waveform and radio frequency (RF) front-end. Based on such an architecture, we propose a transmission protocol compatible with the 5G NR standard using downlink pilots for joint channel estimation and SAR imaging. Furthermore, we design a unified signal processing framework for the integrated satellite receiver to simultaneously achieve high-performance channel sensing, low-complexity channel equalization and interference-free SAR imaging. Finally, the performance of the proposed integrated system is demonstrated through comprehensive analysis and extensive simulations in the sub-6 GHz band. Moreover, a software-defined radio (SDR) prototype is presented to validate its effectiveness for real-time SAR imaging and information transmission in satellite direct-connect user equipment (UE) scenarios within the millimeter-wave (mmWave) band.

Advances in 4D Generation: A Survey

Mar 19, 2025

Abstract:Generative artificial intelligence (AI) has made significant progress across various domains in recent years. Building on the rapid advancements in 2D, video, and 3D content generation, 4D generation has emerged as a novel and rapidly evolving research area, attracting growing attention. 4D generation focuses on creating dynamic 3D assets with spatiotemporal consistency based on user input, offering greater creative freedom and richer immersive experiences. This paper presents a comprehensive survey of the 4D generation field, systematically summarizing its core technologies, developmental trajectory, key challenges, and practical applications, while also exploring potential future research directions. The survey begins by introducing various fundamental 4D representation models, followed by a review of 4D generation frameworks built upon these representations and the key technologies that incorporate motion and geometry priors into 4D assets. We summarize five major challenges of 4D generation: consistency, controllability, diversity, efficiency, and fidelity, accompanied by an outline of existing solutions to address these issues. We systematically analyze applications of 4D generation, spanning dynamic object generation, scene generation, digital human synthesis, 4D editing, and autonomous driving. Finally, we provide an in-depth discussion of the obstacles currently hindering the development of 4D generation. This survey offers a clear and comprehensive overview of 4D generation, aiming to stimulate further exploration and innovation in this rapidly evolving field. Our code is publicly available at: https://github.com/MiaoQiaowei/Awesome-4D.

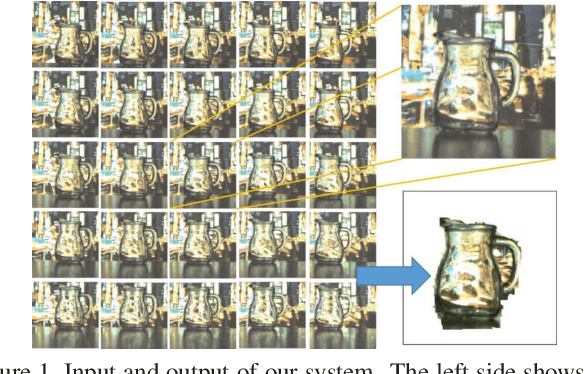

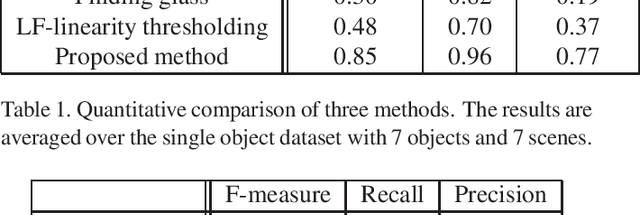

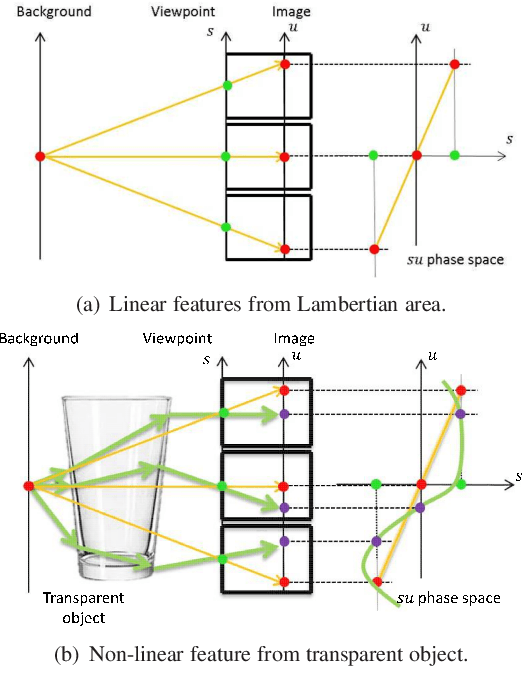

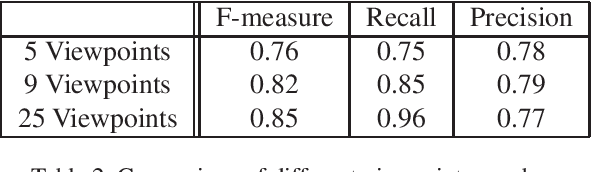

TransCut: Transparent Object Segmentation from a Light-Field Image

Nov 21, 2015

Abstract:The segmentation of transparent objects can be very useful in computer vision applications. However, because they borrow texture from their background and have a similar appearance to their surroundings, transparent objects are not handled well by regular image segmentation methods. We propose a method that overcomes these problems using the consistency and distortion properties of a light-field image. Graph-cut optimization is applied for the pixel labeling problem. The light-field linearity is used to estimate the likelihood of a pixel belonging to the transparent object or Lambertian background, and the occlusion detector is used to find the occlusion boundary. We acquire a light field dataset for the transparent object, and use this dataset to evaluate our method. The results demonstrate that the proposed method successfully segments transparent objects from the background.

Mobile Camera Array Calibration for Light Field Acquisition

Jul 16, 2014

Abstract:The light field camera is useful for computer graphics and vision applications. Calibration is an essential step for these applications. After calibration, we can rectify the captured image by using the calibrated camera parameters. However, the large camera array calibration method, which assumes that all cameras are on the same plane, ignores the orientation and intrinsic parameters. The multi-camera calibration technique usually assumes that the working volume and viewpoints are fixed. In this paper, we describe a calibration algorithm suitable for a mobile camera array based light field acquisition system. The algorithm follows Zhang's approach of moving a checkerboard, and computes the initial parameters in closed form. Global optimization is then applied to refine all the parameters simultaneously. Our implementation is flexible in that users can assign the number of viewpoints, and refinement of intrinsic parameters is optional. Experiments on both simulated data and real data acquired by a commercial product show that our method yields good results. A digital refocusing application shows that the calibrated light field focuses well on the desired target object.
