Yeongmin Ko

Light Robust Monocular Depth Estimation For Outdoor Environment Via Monochrome And Color Camera Fusion

Feb 24, 2022

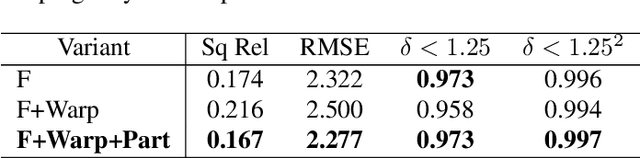

Abstract: Depth estimation plays an important role in SLAM, odometry, and autonomous driving. Monocular depth estimation in particular is an attractive technology because of its low cost, memory, and computation requirements. However, it often fails to predict an adequate depth map because the camera cannot capture a clean image under poor lighting conditions. To solve this problem, various sensor fusion methods have been proposed. Although powerful, sensor fusion requires expensive sensors, additional memory, and high computational performance. In this paper, we present pixel-level fusion of color and monochrome images and stereo matching with partially enhanced correlation coefficient maximization. Our methods not only outperform state-of-the-art works across all metrics but are also efficient in terms of cost, memory, and computation. We also validate the effectiveness of our design with an ablation study.

Task-Driven Deep Image Enhancement Network for Autonomous Driving in Bad Weather

Oct 14, 2021

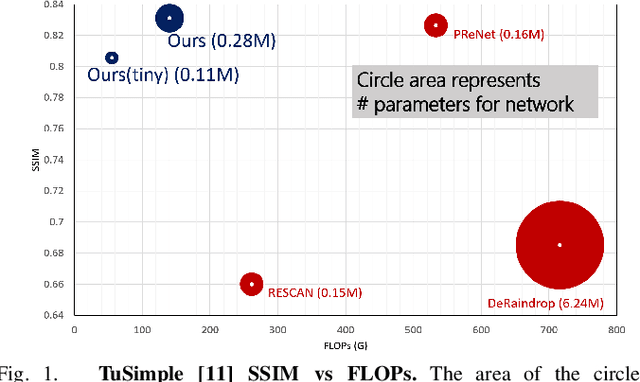

Abstract: Visual perception is crucial for an autonomous vehicle to navigate safely and sustainably in different traffic conditions. However, in bad weather such as heavy rain and haze, the performance of visual perception is greatly reduced by several degrading effects. Recently, deep learning-based perception methods have addressed multiple degrading effects to reflect real-world bad weather cases, but they have shown limited success due to 1) high computational costs for deployment on mobile devices and 2) poor relevance between image enhancement and visual perception in terms of model ability. To solve these issues, we propose a task-driven image enhancement network connected to the high-level vision task, which takes an image corrupted by bad weather as input. Specifically, we introduce a novel low-memory network that removes most of the layer connections of dense blocks to reduce memory and computational cost while maintaining high performance. We also introduce a new task-driven training strategy that robustly guides the high-level task model toward both high-quality image restoration and highly accurate perception. Experimental results demonstrate that the proposed method substantially improves the performance of lane detection, 2D object detection, and depth estimation under adverse weather, in terms of both low memory use and accuracy.

Key Points Estimation and Point Instance Segmentation Approach for Lane Detection

Feb 18, 2020

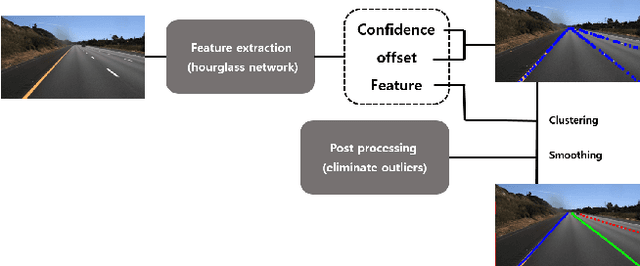

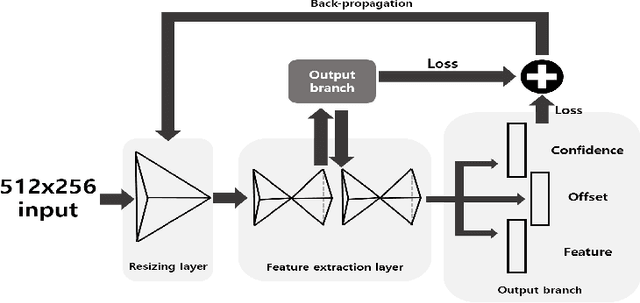

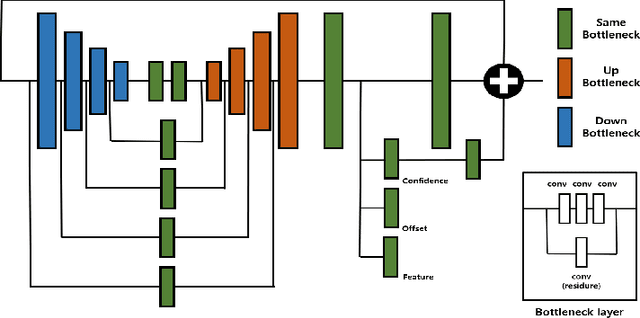

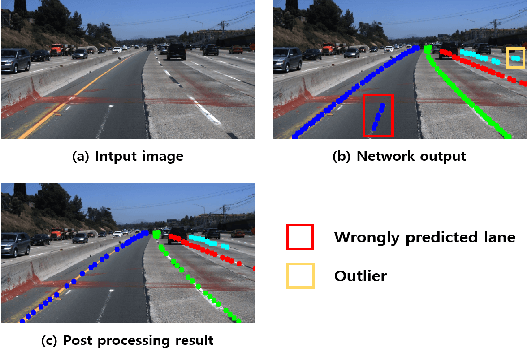

Abstract: State-of-the-art lane detection methods achieve successful performance. Despite their advantages, these methods have critical deficiencies such as a limited number of detectable lanes and a high false positive rate. In particular, a high false positive rate can cause wrong and dangerous control. In this paper, we propose a novel deep learning-based lane detection method for an arbitrary number of lanes, which produces fewer false positives than other recent lane detection methods. The architecture of the proposed method has shared feature extraction layers and several branches for detection and for embedding to cluster lanes. The proposed method can generate exact points on the lanes, and we cast the clustering problem for the generated points as a point cloud instance segmentation problem. The proposed method is more compact because it generates fewer points than the original image pixel size. Our proposed post-processing method successfully eliminates outliers and notably increases performance. The whole proposed framework achieves competitive results on the tuSimple dataset.
