Yash Mantri

Department of Bioengineering, University of California, San Diego

Initial Investigations Towards Non-invasive Monitoring of Chronic Wound Healing Using Deep Learning and Ultrasound Imaging

Jan 25, 2022

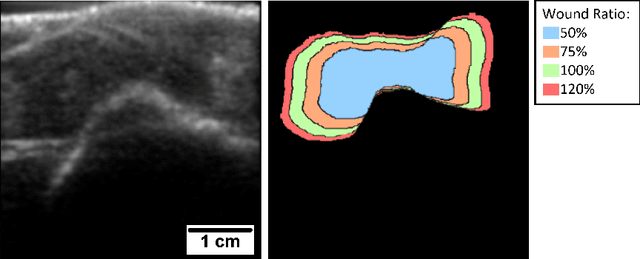

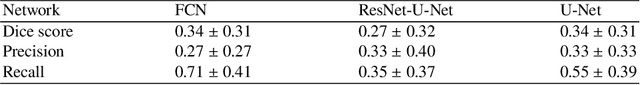

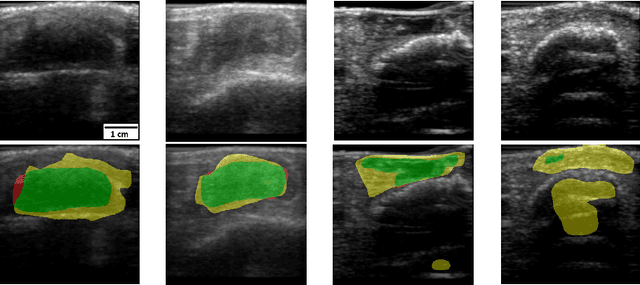

Abstract: Chronic wounds, including diabetic and arterial/venous insufficiency injuries, have become a major burden for healthcare systems worldwide. Demographic changes suggest that wound care will play an even bigger role in the coming decades. Predicting and monitoring response to therapy in wound care currently relies largely on visual inspection, which provides little information about the underlying tissue. Thus, there is an urgent unmet need for innovative approaches that facilitate personalized diagnostics and treatments at the point of care. It has recently been shown that ultrasound imaging can monitor response to therapy in wound care, but this work required onerous manual image annotation. In this study, we present initial results of deep learning-based automatic segmentation of cross-sectional wound size in ultrasound images and identify requirements and challenges for future research on this application. Evaluation of the segmentation results, with Dice scores of 0.34 (U-Net, FCN) and 0.27 (ResNet-U-Net), underscores the potential of the proposed deep learning approach to complement non-invasive imaging but also highlights the need to further improve robustness. We conclude that deep learning-supported analysis of non-invasive ultrasound images is a promising area of research for automatically extracting cross-sectional wound size and depth information, with potential value in monitoring response to therapy.
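For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of how it is commonly computed for a pair of binary segmentation masks. It is not taken from the paper: the function name `dice_score` and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
import numpy as np


def dice_score(pred, target):
    """Dice coefficient between two binary masks: 2|A ∩ B| / (|A| + |B|).

    Hypothetical helper for illustration; not the authors' evaluation code.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)

    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()

    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    predicted = np.array([[1, 1, 0],
                          [0, 0, 0]])
    annotated = np.array([[1, 0, 0],
                          [1, 0, 0]])
    # One overlapping pixel, two foreground pixels in each mask.
    print(dice_score(predicted, annotated))
```

A score of 1 means the predicted and annotated regions coincide exactly, while 0 means they do not overlap at all, so values around 0.3 indicate only partial agreement with the manual annotation.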

Deep Learning Improves Contrast in Low-Fluence Photoacoustic Imaging

Apr 19, 2020

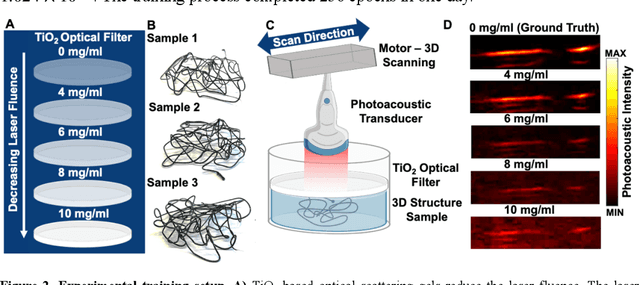

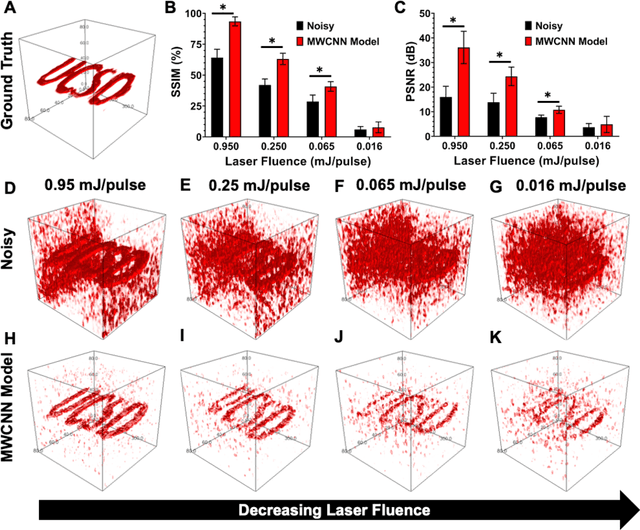

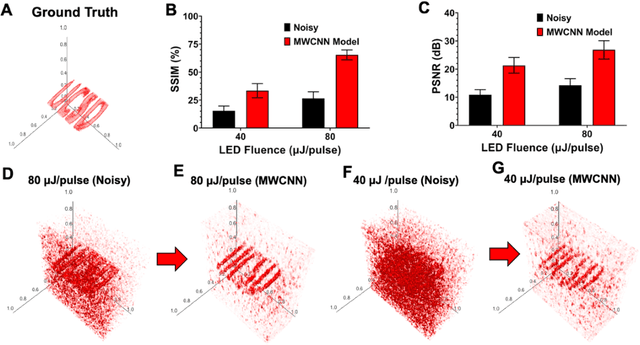

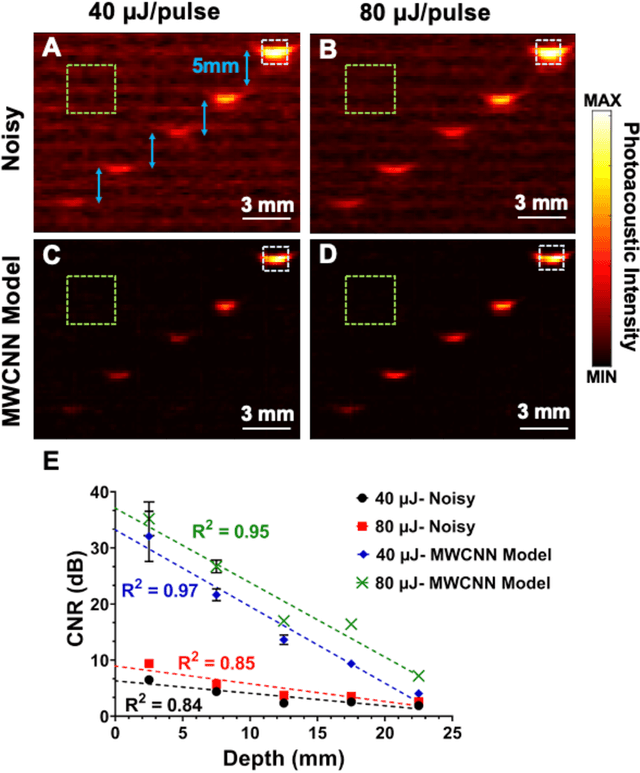

Abstract: Low-fluence illumination sources can facilitate the clinical transition of photoacoustic imaging because they are rugged, portable, affordable, and safe. However, these sources also decrease image quality due to their low fluence. Here, we propose a denoising method that uses a multi-level wavelet-convolutional neural network to map images acquired with a low-fluence illumination source to their corresponding high-fluence excitation maps. Quantitative and qualitative results show significant potential to remove background noise while preserving the structures of the target. Improvements of up to 2.20-, 2.25-, and 4.3-fold were observed for the PSNR, SSIM, and CNR metrics, respectively. We also observed enhanced contrast (up to 1.76-fold) in an in vivo application using our proposed methods. We suggest that this tool can improve the value of such sources in photoacoustic imaging.
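As a rough illustration of two of the metrics named above, the sketch below computes PSNR and CNR with NumPy. It is not the authors' evaluation code. The function names, the `data_range` parameter, and the mask arguments are hypothetical, and the CNR definition used here (absolute difference of mean signal and mean background, divided by the background standard deviation) is only one of several in use and is an assumption.

```python
import numpy as np


def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB, given the maximum possible pixel range."""
    reference = np.asarray(reference, dtype=float)
    test = np.asarray(test, dtype=float)
    mse = np.mean((reference - test) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)


def cnr(image, signal_mask, background_mask):
    """Contrast-to-noise ratio under the assumed definition
    |mean(signal) - mean(background)| / std(background)."""
    image = np.asarray(image, dtype=float)
    signal = image[np.asarray(signal_mask, dtype=bool)]
    background = image[np.asarray(background_mask, dtype=bool)]
    return abs(signal.mean() - background.mean()) / background.std()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.zeros((32, 32))
    clean[12:20, 12:20] = 1.0            # synthetic target region
    noisy = clean + rng.normal(0.0, 0.2, clean.shape)

    target = clean > 0.5
    print("PSNR (dB):", psnr(clean, noisy))
    print("CNR:", cnr(noisy, target, ~target))
```

A fold improvement such as those reported above would then be the ratio of a metric computed on the denoised image to the same metric computed on the low-fluence input.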
