Yanyu Qi

Open-Vocabulary Audio-Visual Semantic Segmentation

Jul 31, 2024

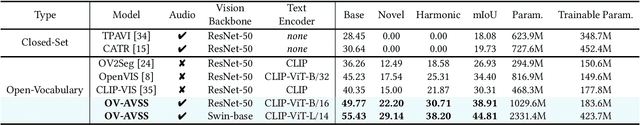

Abstract: Audio-visual semantic segmentation (AVSS) aims to segment and classify sounding objects in videos using acoustic cues. However, most approaches operate under the closed-set assumption and identify only the pre-defined categories seen in the training data, so they cannot generalize to novel categories in practical applications. In this paper, we introduce a new task: open-vocabulary audio-visual semantic segmentation, which extends the AVSS task to open-world scenarios beyond the annotated label space. This is a more challenging task that requires recognizing all categories, even those that have never been seen or heard during training. Moreover, we propose the first open-vocabulary AVSS framework, OV-AVSS, which mainly consists of two parts: 1) a universal sound source localization module that performs audio-visual fusion and locates all potential sounding objects, and 2) an open-vocabulary classification module that predicts categories with the help of prior knowledge from large-scale pre-trained vision-language models. To properly evaluate open-vocabulary AVSS, we split the AVSBench-semantic benchmark into zero-shot training and testing subsets, which we call AVSBench-OV. Extensive experiments demonstrate the strong segmentation and zero-shot generalization ability of our model on all categories. On the AVSBench-OV dataset, OV-AVSS achieves 55.43% mIoU on base categories and 29.14% mIoU on novel categories, exceeding the state-of-the-art zero-shot method by 41.88%/20.61% and the open-vocabulary method by 10.2%/11.6%. The code is available at https://github.com/ruohaoguo/ovavss.
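The open-vocabulary classification step described in the abstract can be illustrated with a minimal sketch. It assumes that each localized sounding object has already been pooled into a single embedding that lives in the same space as the text embeddings of a vision-language model, and that a label is chosen by cosine similarity. The function names, the temperature value, and the toy three-dimensional vectors are hypothetical and are not taken from the OV-AVSS code; the actual module may differ.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_mask(mask_embedding, text_embeddings, temperature=0.01):
    """Pick the category whose text embedding is closest to the mask embedding.

    mask_embedding: pooled feature of one sounding-object mask (assumed input).
    text_embeddings: dict mapping category name -> text embedding; the
        vocabulary may contain categories never seen during training.
    temperature: hypothetical scaling factor applied before the softmax.
    """
    names = list(text_embeddings)
    query = l2_normalize(mask_embedding)
    sims = []
    for name in names:
        key = l2_normalize(text_embeddings[name])
        sims.append(sum(a * b for a, b in zip(query, key)))  # cosine similarity
    probs = softmax([s / temperature for s in sims])
    best = max(range(len(names)), key=probs.__getitem__)
    return names[best], dict(zip(names, probs))


# Toy vocabulary with made-up embeddings; real ones would come from a text encoder.
text_embeddings = {
    "dog": [1.0, 0.0, 0.0],
    "guitar": [0.0, 1.0, 0.0],
    "helicopter": [0.0, 0.0, 1.0],
}
label, probs = classify_mask([0.1, 0.2, 0.9], text_embeddings)
print(label)
```

Because the vocabulary is just a dictionary of text embeddings, a novel category can be added at test time by inserting one more entry, which is the property that makes this style of classifier open-vocabulary.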

Audio-Visual Instance Segmentation

Oct 28, 2023

Abstract: In this paper, we propose a new multi-modal task, namely audio-visual instance segmentation (AVIS), in which the goal is to simultaneously identify, segment, and track individual sounding object instances in audible videos. To our knowledge, this is the first time that instance segmentation has been extended to the audio-visual domain. To facilitate this research, we construct the first audio-visual instance segmentation benchmark (AVISeg). Specifically, AVISeg consists of 1,258 videos with an average duration of 62.6 seconds, collected from YouTube and public audio-visual datasets, of which 117 videos have been annotated using an interactive semi-automatic labeling tool based on the Segment Anything Model (SAM). In addition, we present a simple baseline model for the AVIS task. Our model adds an audio branch and a cross-modal fusion module to Mask2Former to locate all sounding objects. Finally, we evaluate the proposed method using two backbones on AVISeg. We believe that AVIS will inspire the community towards a more comprehensive multi-modal understanding.
