Yanmei Yu

Adaptive Ensemble of Classifiers with Regularization for Imbalanced Data Classification

Aug 13, 2019

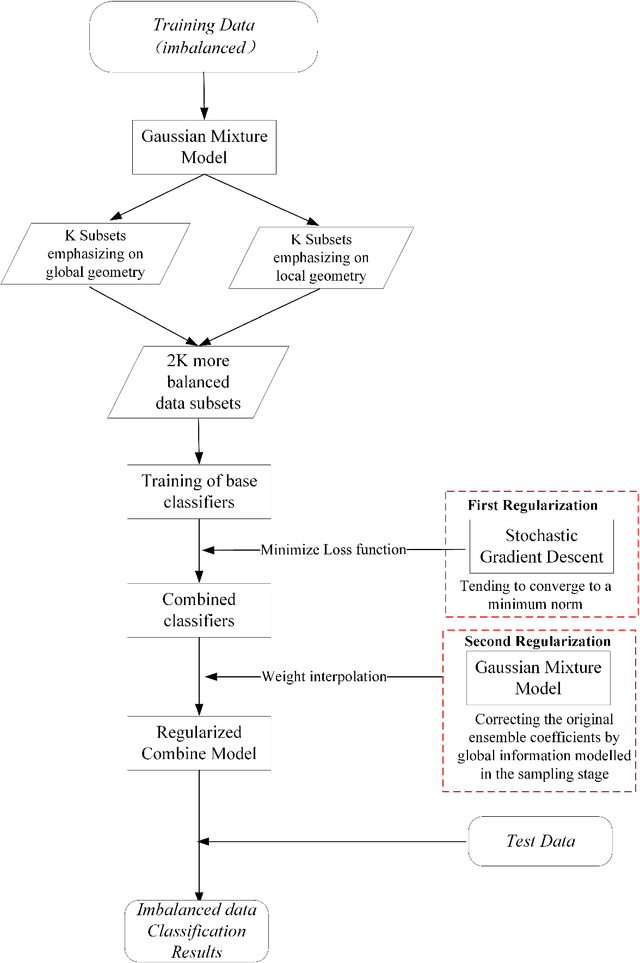

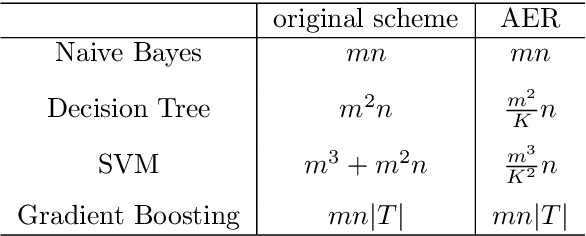

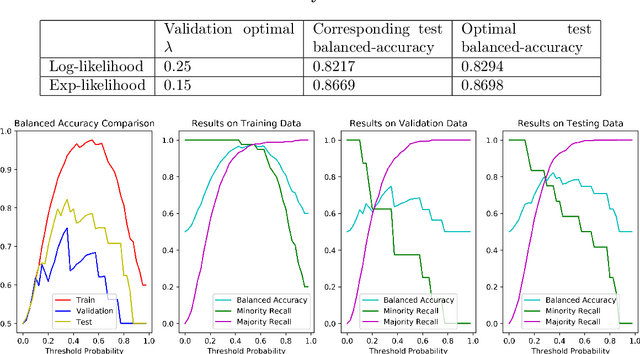

Abstract: Dynamic ensembling of classifiers is an effective approach to label-imbalanced classification. However, in dynamic ensemble methods the combination of classifiers is usually determined by local competence, and conventional regularization methods are difficult to apply, leaving the technique prone to overfitting. This paper focuses on binary label-imbalanced classification and proposes a novel method, Adaptive Ensemble of classifiers with Regularization (AER). The method addresses overfitting from the perspective of implicit regularization. Specifically, it leverages the properties of Stochastic Gradient Descent (SGD) to obtain the minimum-norm solution, which achieves regularization, and it interpolates ensemble weights via the global geometry of the data to further prevent overfitting. The method has favorable time and memory complexity: theoretical proofs show that algorithms implemented with the AER paradigm have time and memory complexities upper-bounded by those of their original implementations. Furthermore, the proposed AER method is tested with a specific implementation based on Gradient Boosting Machine (XGBoost) on three datasets: UCI Bioassay, KEEL Abalone19, and a GMM-sampled artificial dataset. Results show that the proposed AER algorithm can outperform the major existing algorithms on multiple metrics, and McNemar's tests are applied to validate the performance gains. In summary, this work adds regularization to dynamic ensemble methods and develops an algorithm that better captures both the global and local geometry of the data, alleviating overfitting in imbalanced data classification.
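The implicit-regularization property that the abstract refers to can be illustrated on a toy problem. The sketch below is not the AER algorithm; it only demonstrates the well-known fact that, for an underdetermined linear least-squares problem, SGD started from zero weights converges to the minimum-norm solution among all solutions that fit the data. The function name `sgd_min_norm`, the two-equation system, the learning rate, and the step count are all assumptions chosen for illustration.

```python
import math
import random


def sgd_min_norm(rows, targets, lr=0.1, steps=5000, seed=0):
    """Plain SGD on the squared loss 0.5 * (a . w - b)^2, starting from w = 0.

    Hypothetical helper for illustration only. Because every update is a
    multiple of a sampled row, the iterate never leaves the row space of the
    system, which is why it ends up at the minimum-norm interpolating solution.
    """
    rng = random.Random(seed)
    w = [0.0] * len(rows[0])
    for _ in range(steps):
        i = rng.randrange(len(rows))          # sample one equation
        a, b = rows[i], targets[i]
        residual = sum(aj * wj for aj, wj in zip(a, w)) - b
        w = [wj - lr * residual * aj for aj, wj in zip(a, w)]
    return w


def norm(v):
    return math.sqrt(sum(x * x for x in v))


# Two equations, three unknowns: infinitely many exact solutions.
rows = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
targets = [2.0, 3.0]

w = sgd_min_norm(rows, targets)

# Closed-form minimum-norm solution A^T (A A^T)^{-1} b for this system.
w_min_norm = [1.0 / 3.0, 4.0 / 3.0, 5.0 / 3.0]

# Another exact solution with a larger norm, for comparison.
w_other = [2.0, 3.0, 0.0]

print("SGD solution:      ", [round(x, 6) for x in w])
print("norm of SGD result:", round(norm(w), 6))
print("norm of other fit: ", round(norm(w_other), 6))
```

Both `w` and `w_other` fit the two equations exactly, but SGD from a zero start selects the one with the smaller norm without any explicit penalty term. How AER transfers this behavior to ensemble weights is described in the paper itself, not in this sketch.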
