Yangjing Zhang

Alternating minimization for square root principal component pursuit

Dec 31, 2024

Abstract: Recently, the square root principal component pursuit (SRPCP) model has garnered significant research interest. It is shown in the literature that the SRPCP model guarantees robust matrix recovery with a universal, constant penalty parameter. While its statistical advantages are well-documented, the computational aspects from an optimization perspective remain largely unexplored. In this paper, we focus on developing efficient optimization algorithms for solving the SRPCP problem. Specifically, we propose a tuning-free alternating minimization (AltMin) algorithm, where each iteration involves subproblems enjoying closed-form optimal solutions. Additionally, we introduce techniques based on the variational formulation of the nuclear norm and Burer-Monteiro decomposition to further accelerate the AltMin method. Extensive numerical experiments confirm the efficiency and robustness of our algorithms.
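For orientation, the SRPCP model and the variational formulation of the nuclear norm mentioned in the abstract are usually written as below. This is a sketch under assumptions: the symbols $D$ (observed matrix), $L$ (low-rank part), $S$ (sparse part), $\lambda$, $\mu$, $U$, $V$ are notation chosen here, not taken from the abstract, and the exact form used in the paper may differ.

$$
\min_{L,\,S}\; \|L\|_* + \lambda \|S\|_1 + \mu \|L + S - D\|_F,
\qquad
\|L\|_* = \min_{U,\,V \,:\, L = UV^\top} \tfrac{1}{2}\left(\|U\|_F^2 + \|V\|_F^2\right).
$$

The data-fitting term is the Frobenius norm itself rather than its square, which is where the "square root" in the name comes from. The second identity is the standard fact that allows $L$ to be replaced by a Burer-Monteiro factorization $UV^\top$, so that no full singular value decomposition of $L$ is needed inside the iteration.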

DNNLasso: Scalable Graph Learning for Matrix-Variate Data

Mar 05, 2024

Abstract: We consider the problem of jointly learning row-wise and column-wise dependencies of matrix-variate observations, which are modelled separately by two precision matrices. Due to the complicated structure of Kronecker-product precision matrices in the commonly used matrix-variate Gaussian graphical models, a sparser Kronecker-sum structure was proposed recently based on the Cartesian product of graphs. However, existing methods for estimating Kronecker-sum structured precision matrices do not scale well to large-scale datasets. In this paper, we introduce DNNLasso, a diagonally non-negative graphical lasso model for estimating the Kronecker-sum structured precision matrix, which outperforms the state-of-the-art methods by a large margin in both accuracy and computational time. Our code is available at https://github.com/YangjingZhang/DNNLasso.
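The claim that the Kronecker-sum structure is sparser than the Kronecker-product structure can be checked on a toy example. The sketch below is illustrative only and is not taken from the DNNLasso code: the helper names (`kron`, `kron_sum`, `count_nonzeros`), the example matrices `psi` and `theta`, and the ordering convention $\Psi \oplus \Theta = \Psi \otimes I_q + I_p \otimes \Theta$ are assumptions made here.

```python
def identity(n):
    """n-by-n identity matrix as a list of rows."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def kron(a, b):
    """Kronecker product of two dense matrices given as lists of rows."""
    rows_b, cols_b = len(b), len(b[0])
    return [
        [a[i // rows_b][j // cols_b] * b[i % rows_b][j % cols_b]
         for j in range(len(a[0]) * cols_b)]
        for i in range(len(a) * rows_b)
    ]


def kron_sum(a, b):
    """Kronecker sum a (+) b = a (x) I_q + I_p (x) b for square a (p x p), b (q x q)."""
    p, q = len(a), len(b)
    left = kron(a, identity(q))
    right = kron(identity(p), b)
    return [[l + r for l, r in zip(row_l, row_r)]
            for row_l, row_r in zip(left, right)]


def count_nonzeros(m):
    """Number of entries that are not exactly zero."""
    return sum(1 for row in m for v in row if v != 0.0)


# Hypothetical row-wise and column-wise precision matrices (both tridiagonal).
psi = [[2.0, -1.0, 0.0],
       [-1.0, 2.0, -1.0],
       [0.0, -1.0, 2.0]]
theta = [[2.0, -1.0],
         [-1.0, 2.0]]

nnz_product = count_nonzeros(kron(psi, theta))
nnz_sum = count_nonzeros(kron_sum(psi, theta))
print("Kronecker product nonzeros:", nnz_product)
print("Kronecker sum nonzeros:", nnz_sum)
```

In the product, every nonzero of one factor is paired with every nonzero of the other, whereas in the sum the off-diagonal patterns of the two factors are simply placed side by side, which is why the gap widens as the factors grow.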

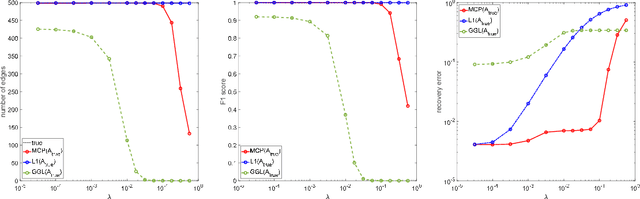

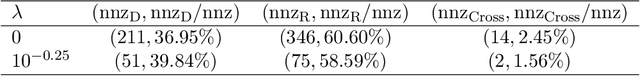

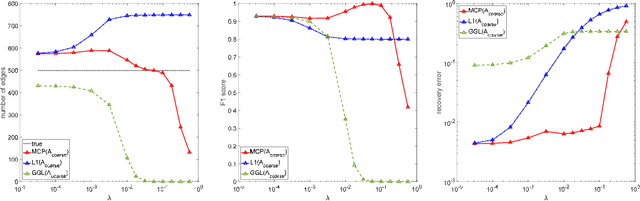

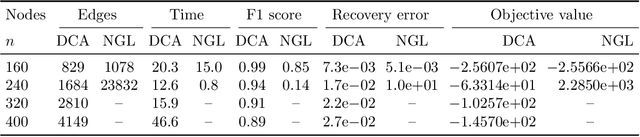

Learning Graph Laplacian with MCP

Oct 22, 2020

Abstract: Motivated by the observation that the ability of the $\ell_1$ norm in promoting sparsity in graphical models with Laplacian constraints is much weakened, this paper proposes to learn graph Laplacian with a non-convex penalty: minimax concave penalty (MCP). For solving the MCP penalized graphical model, we design an inexact proximal difference-of-convex algorithm (DCA) and prove its convergence to critical points. We note that each subproblem of the proximal DCA enjoys the nice property that the objective function in its dual problem is continuously differentiable with a semismooth gradient. Therefore, we apply an efficient semismooth Newton method to subproblems of the proximal DCA. Numerical experiments on various synthetic and real data sets demonstrate the effectiveness of the non-convex penalty MCP in promoting sparsity. Compared with the state-of-the-art method \cite[Algorithm~1]{ying2020does}, our method is demonstrated to be more efficient and reliable for learning graph Laplacian with MCP.
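To make the penalty concrete, the following minimal sketch evaluates the scalar MCP in its standard textbook form and the difference-of-convex split that a DCA-type method can exploit. The function names and the parameter names `lam` and `gamma` are chosen here for illustration and are not taken from the paper; this is not the authors' implementation.

```python
def mcp(x, lam, gamma):
    """Scalar minimax concave penalty (standard form), with lam > 0 and gamma > 0.

    Equals lam*|x| - x^2 / (2*gamma) for |x| <= gamma*lam,
    and the constant gamma*lam^2 / 2 beyond that threshold.
    """
    a = abs(x)
    if a <= gamma * lam:
        return lam * a - a * a / (2.0 * gamma)
    return 0.5 * gamma * lam * lam


def mcp_smooth_convex_part(x, lam, gamma):
    """Convex, continuously differentiable h with mcp(x) = lam*|x| - h(x)."""
    a = abs(x)
    if a <= gamma * lam:
        return a * a / (2.0 * gamma)
    return lam * a - 0.5 * gamma * lam * lam


# The penalty grows like lam*|x| near zero and flattens out for large |x|,
# so large entries are not shrunk the way they are under the l1 norm.
for value in (0.0, 0.5, 1.0, 2.0, 5.0):
    print(value, mcp(value, lam=1.0, gamma=2.0))
```

Writing the penalty as $\lambda|x| - h(x)$ with $h$ convex and smooth is what turns the penalized model into a difference of two convex functions; each DCA step linearizes $h$ and solves the remaining convex subproblem.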
